ChatGPT, a recently released text generation tool, has sparked heated discussion in the research community. It can write student essays, summarize research papers, answer questions, generate usable computer code, and is even capable of passing medical exams, MBA exams, judicial exams...

One of the key questions is: Can ChatGPT be named as an author on a research paper?

Now arXiv, the world’s largest preprint publishing platform, has given a clear official answer: “No.”

Before ChatGPT, researchers had long been using chatbots as research assistants to help organize their thinking, generate feedback on their work, assist with coding, and summarize research literature.

These supporting uses seem to be accepted, but authorship is another matter entirely. "Obviously, a computer program cannot be responsible for the content of a paper. Nor can it agree to the terms and conditions of arXiv."

Some preprints and published articles have already officially credited ChatGPT as an author. To address this issue, arXiv has adopted a new policy for authors regarding the use of generative AI language tools.

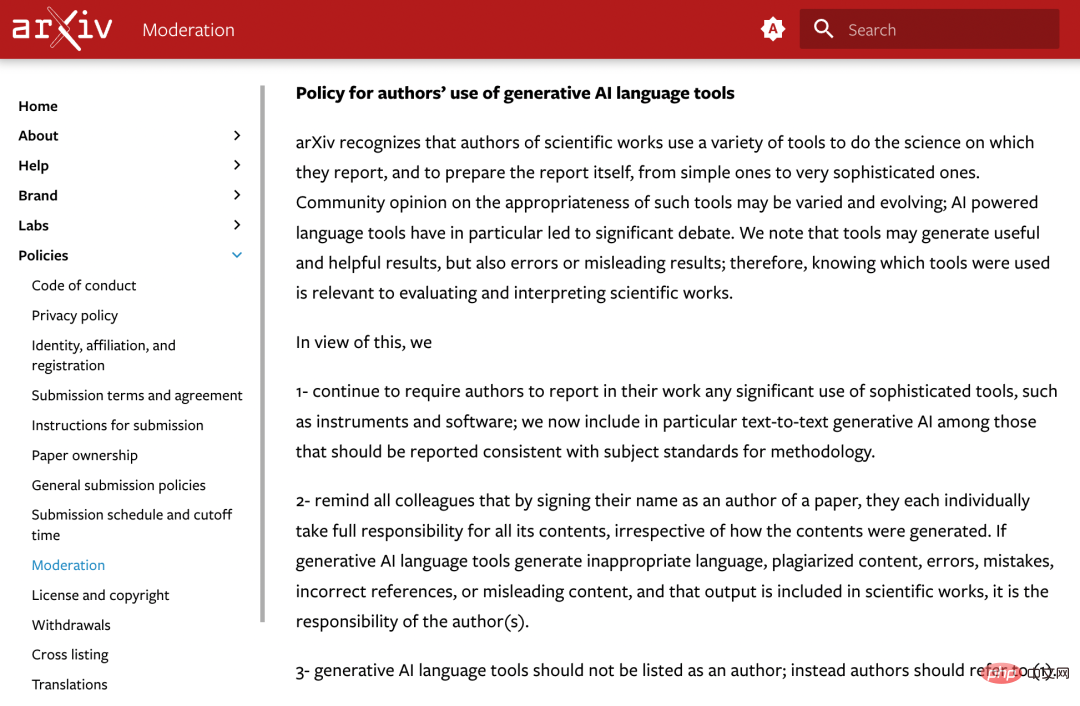

The official statement is as follows:

arXiv recognizes that scientists use a variety of tools, from simple to very complex, both to conduct the scientific work they report on and to prepare the report itself.

Community views on the appropriateness of these tools may vary and are constantly changing; AI-driven language tools have sparked key debates. We note that tools may produce useful and helpful results, but may also produce erroneous or misleading results; therefore, knowing which tools were used is relevant to evaluating and interpreting scientific works.

Based on this, arXiv decided:

1. Continue to ask authors to report the use of any complex tools, such as instrumentation and software, in their work; “text-to-text generative artificial intelligence” is now specifically included among the tools that should be reported, in line with each subject's standards for methodology.

2. All colleagues are reminded that by signing their name to a paper, they each take full responsibility for all of its contents, regardless of how those contents were produced. If a generative AI language tool produces inappropriate language, plagiarized content, erroneous content, incorrect references, or misleading content, and this output is incorporated into a scientific work, the responsibility lies with the authors.

3. Generative artificial intelligence language tools should not be listed as authors; instead, refer to point 1.

A few days ago, the journal Nature publicly stated that, together with all Springer Nature journals, it had formulated two principles, which have been added to the existing author guidelines:

First, no large language model tool will be accepted as a named author on a research paper. This is because authorship comes with responsibility for the work, and AI tools cannot take on that responsibility.

Second, researchers who use large language model tools should document this use in the Methods or Acknowledgments section. If the paper does not include these sections, the introduction or another appropriate section can be used to document the use of large language models.

These rules are very similar to the principles arXiv has just released. Organizations in academic publishing appear to have reached some consensus.

Although ChatGPT is highly capable, its misuse in areas such as school assignments and paper publishing has caused widespread concern.

The machine learning conference ICML stated: "ChatGPT is trained on public data, which is often collected without consent, which raises a series of questions about where responsibility lies."

As a result, the academic community has begun to explore methods and tools for detecting text generated by large language models (LLMs) such as ChatGPT. In the future, detecting whether content is generated by AI may become “an important part of the review process.”
