Produced by Big Data Digest

Author: Caleb

By now, everyone has probably seen how popular ChatGPT has become.

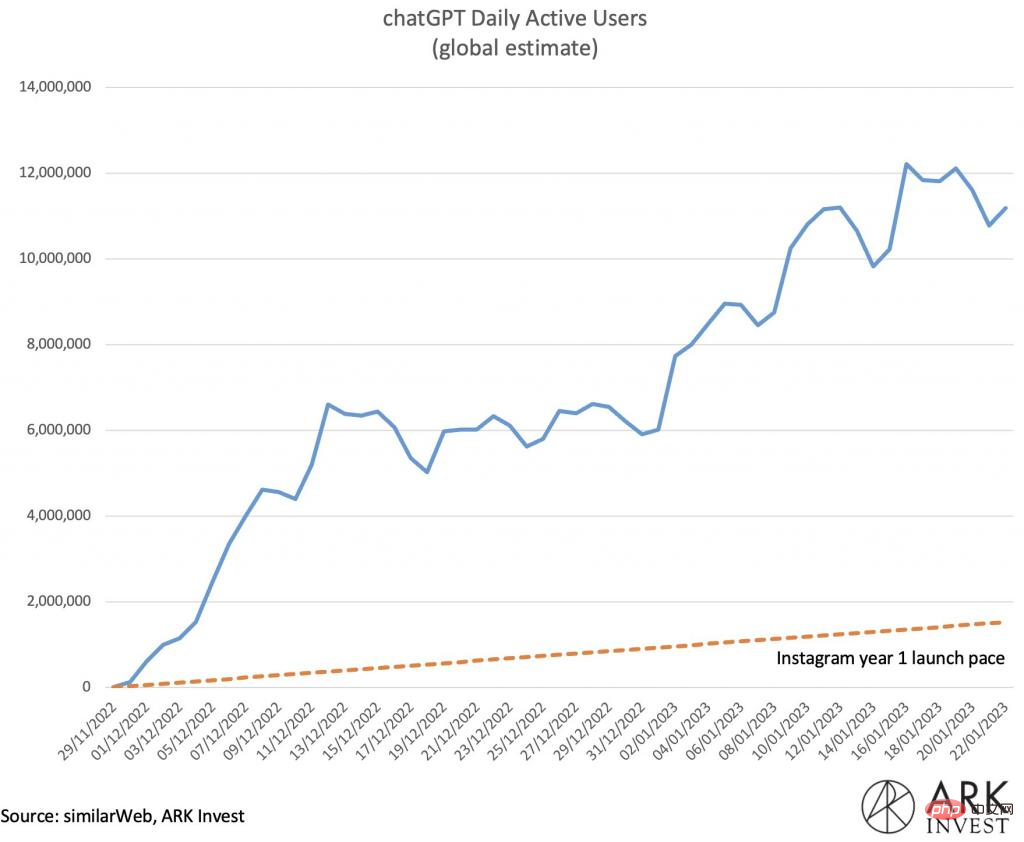

According to statistics from Brett Winton, chief futurist of ARK Venture Capital, it took ChatGPT less than two months to exceed 10 million daily active users. By comparison, Instagram, the previous breakout app, took 355 days to reach the same milestone.

In mid-January, the New York Times reported that Antony Aumann, a philosophy professor at Northern Michigan University, was surprised to come across what he considered "the best paper in the class" while grading essays for his world religions course. With concise paragraphs, well-chosen examples, and rigorous arguments, the student explored the moral implications of burqa bans. Under Aumann's questioning, however, the student admitted that the paper had been written with ChatGPT.

There are many similar examples.

It is precisely because of ChatGPT's explosive global success that OpenAI has been pushed so quickly into the eye of the storm of public opinion.

OpenAI is arguably leading the chatbot arms race. Take ChatGPT: its public release, together with OpenAI's multibillion-dollar partnership with Microsoft, spurred Google and Amazon to rush artificial intelligence into their own product lines. OpenAI has also partnered with Bain to bring machine learning into Coca-Cola's operations, and it plans to expand to other corporate partners.

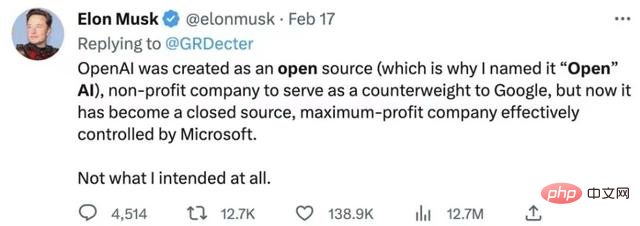

There is no doubt that OpenAI's artificial intelligence has become big business, but this is quite different from what OpenAI originally planned.

Last Friday, OpenAI CEO Sam Altman published a blog post titled "Planning for AGI and Beyond."

//m.sbmmt.com/link/3187b1703c3b9b19bb63c027d8efc2f1

In the post, he claimed that OpenAI's artificial general intelligence (AGI), which is still far from being realized and which many doubt will ever exist, will benefit all of humanity and "has the potential to bring incredible new abilities to everyone." Altman used broad, idealistic language to argue that the development of artificial intelligence should not be stopped and that "the future of humanity should be determined by humanity," implicitly referring to OpenAI itself.

Coming at the height of ChatGPT's popularity, this blog post and OpenAI's recent actions are a constant reminder of how much OpenAI's tone and mission have changed since its founding. In its early days, OpenAI was purely a non-profit organization. Although the company has always been focused on developing AGI, at its founding it promised not to pursue profits and to share the code it developed freely. Today, these promises are nowhere to be found.

The driving force of OpenAI became speed and profit

In 2015, Altman, Musk, Peter Thiel, and Reid Hoffman announced the founding of OpenAI as a non-profit research organization. In its founding statement, OpenAI declared that its research was committed to "advancing artificial intelligence in ways most likely to benefit all of humanity, without being constrained by the need to generate financial returns." The statement added that "since our research is free from financial obligations, we can better focus on a positive human impact," said that researchers would be encouraged to share their work "as papers, blog posts or code," and promised that "our patents (if any) will be shared with the world." Eight years later, we are faced with a company that is neither transparent nor driven by positive human impact. Instead, as many critics, including Musk, believe, OpenAI's motivation has become speed and profit.

The first signs of this shift appeared in 2018, when the company changed direction and began looking to outside capital. At the time, OpenAI stated: "Our primary fiduciary responsibility is to humanity. We anticipate that we will need to mobilize significant resources to fulfill our mission."

By March 2019, OpenAI had shed its non-profit status and set up a "capped-profit" entity. This allowed OpenAI to start accepting investment, with investors' returns capped at 100 times their investment.

The move was seen as a way to compete with big technology companies such as Google, and the company soon received the US$1 billion investment from Microsoft that it had been hoping for. In a blog post announcing the for-profit company, OpenAI kept using the same rhetoric, declaring that its mission was to "ensure that artificial general intelligence benefits all of humanity."

But as Motherboard writes, it’s hard to believe that venture capitalists can save humanity when their main goal is profit.

In 2019, OpenAI faced public backlash when it announced and released the GPT-2 language model.

Initially, the company said it would not release the trained model because of "concerns about malicious applications of the technology." While this partly reflected its commitment to developing beneficial artificial intelligence, it was hardly "open."

Critics wondered why OpenAI would announce a tool but not release it, and many thought it was just a publicity stunt. It wasn't until three months later that OpenAI released the model on GitHub, saying the move was "an important foundation for the responsible release of artificial intelligence, especially when it comes to powerful generative models."

According to investigative reporter Karen Hao, who spent several days inside the company in 2020, OpenAI's internal culture had stopped reflecting a cautious, research-driven approach. Instead, its AI development had become more focused on getting ahead, which led to accusations that it was feeding the "AI hype cycle." Employees were also now required to keep quiet about the work at hand in order to comply with the company's new bylaws.

"There is a misalignment between what the company publicly espouses and how it operates behind closed doors. Over time, it has allowed fierce competition and mounting financial pressure to erode its founding ideals of transparency, openness and collaboration," Hao wrote.

Overall, though, the launch of GPT-2 was undoubtedly a milestone worth celebrating for OpenAI, and it remains an important cornerstone of the company's development to this day. "It's definitely part of the success-story framing," Miles Brundage, now director of policy research, said at a conference. "That part of the story goes something like this: we did something ambitious, now some people are replicating it, and here are some reasons why it was beneficial."

Since then, OpenAI has kept the hype from the GPT-2 release formula but dropped the openness. GPT-3 launched in 2020 and was soon licensed "exclusively" to Microsoft. Its source code has still not been released, even as OpenAI intensively prepares GPT-4. The model is available to the public only through ChatGPT and an API, and OpenAI has also launched a paid tier that guarantees access to it.

OpenAI has given several reasons for this approach. The first is money: "Commercializing the technology helps pay for our ongoing AI research, safety and policy work," OpenAI said in a blog post announcing the API. The second is that releasing the models openly would mainly benefit large companies: "It's difficult for anyone but big companies to benefit from the underlying technology." Finally, the company says that releasing through an API rather than as open source is safer, because it allows the company to respond to misuse.

"You guys keep telling us AGI is just around the corner, but there's not even a consistent definition of it on your website," computer scientist Timnit Gebru said on Twitter.

Emily M. Bender, a professor of linguistics at the University of Washington, wrote on Twitter: "They don't want to solve real problems in the real world (which would require ceding power). They want to believe they are gods who can not only create a 'superintelligence' but have the grace to do so in a way that is 'aligned' with humanity."

Will this artificial intelligence be shared responsibly, developed openly, and free of any profit motive, as the company originally envisioned? Or will it be rushed out, riddled with troubling flaws, mainly to generate revenue for OpenAI? Will OpenAI keep its sci-fi future closed-source?

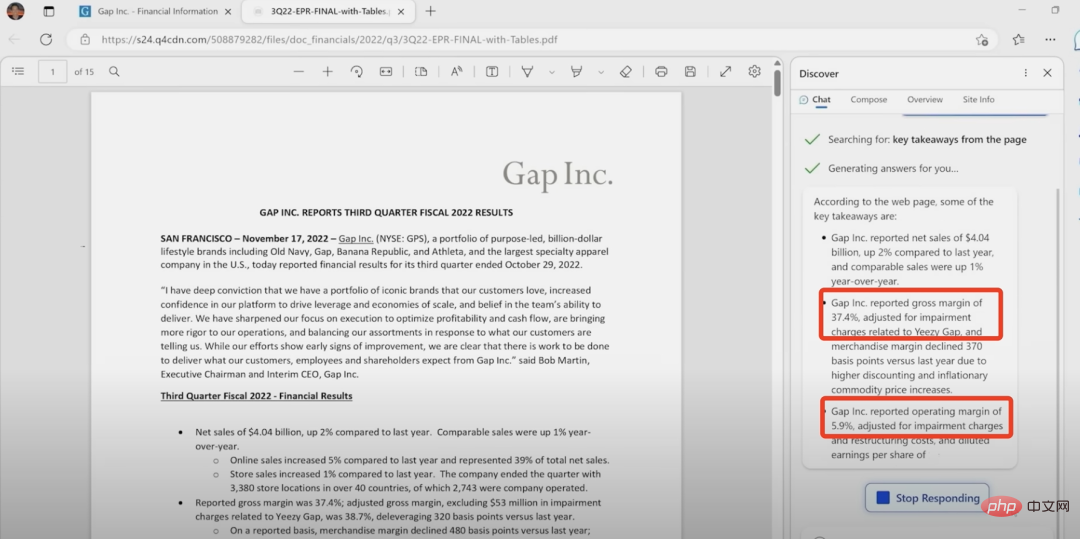

Since being integrated with ChatGPT, Microsoft's Bing chatbot has been going off the rails, repeatedly lying to and berating users and spreading misinformation. OpenAI cannot reliably detect text generated by its own chatbot, even as educators grow increasingly concerned about students using the app to cheat.

People have also easily jailbroken the language models, bypassing the guardrails OpenAI has put around them, and the bot breaks down when fed random words and phrases. No one can say exactly why, because OpenAI does not share the code of the underlying model, and to some extent OpenAI itself may not fully understand how it works.

With all of this in mind, we should all carefully consider whether OpenAI deserves the trust it is asking the public to place in it, a question the company has yet to address in any substantive way.

Related reports: //m.sbmmt.com/link/b4189d9de0fb2b9cce090bd1a15e3420

The above is the detailed content of Why is ChatGPT popular, but OpenAI has not received widespread attention?. For more information, please follow other related articles on the PHP Chinese website!