Chatbots, or customer service assistants, are AI tools that aim to deliver business value by interacting with users through text or voice over the Internet. Chatbot development has progressed rapidly in the past few years, from early bots based on simple rule logic to today's systems built on natural language understanding (NLU). For the latter, the most commonly used frameworks and platforms include Rasa, Dialogflow, and Amazon Lex from abroad, as well as offerings from major domestic companies such as Baidu and iFlytek. These frameworks combine natural language processing (NLP) and NLU to process input text, classify intent, and trigger the right actions to generate responses.

With the emergence of large language models (LLMs), we can use these models directly to build fully functional chatbots. One well-known example is OpenAI's Generative Pre-trained Transformer 3 (GPT-3); ChatGPT comes from the same model family, fine-tuned further with human feedback. GPT-3 can be fine-tuned on dialogue or session data to generate text that resembles natural conversation. This ability makes it a strong choice for building custom chatbots.

Today we will look at how to build our own simple conversational chatbot by fine-tuning the GPT-3 model.

Typically, we want to fine-tune the model on a dataset of examples of our business conversations, such as customer service conversation records, chat logs, or subtitles in movies. The fine-tuning process adjusts the model’s parameters to better fit this conversational data, making the chatbot better at understanding and responding to user input.

To fine-tune a GPT-style model, one option is Hugging Face's Transformers library, which provides pre-trained models and fine-tuning tools. Note that it offers open models such as GPT-2 in several sizes rather than GPT-3 itself, whose weights are only accessible through OpenAI's API. Larger models can generally capture more from the data and are likely to be more accurate, at a higher cost. For the sake of simplicity, we use the OpenAI interface this time, which lets us fine-tune with only a small amount of code.

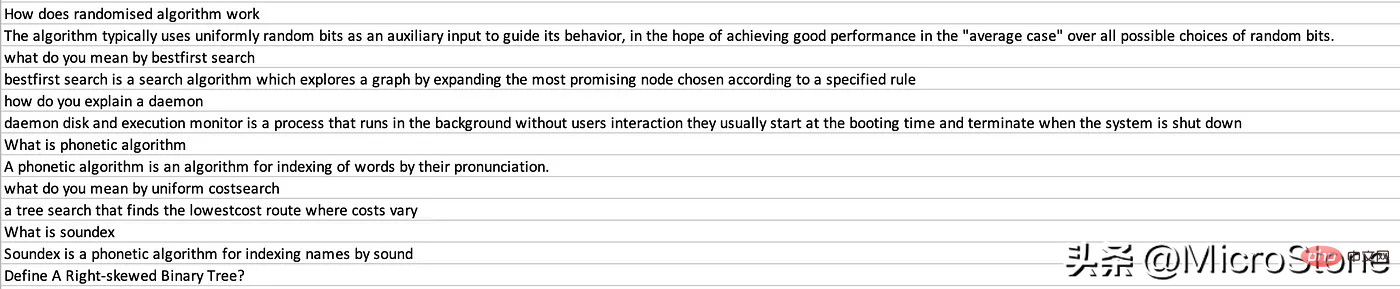

The next step is to fine-tune OpenAI's GPT-3. The dataset can be obtained from here. Apologies for using a foreign dataset again; there are very few processed datasets of this kind available in China.

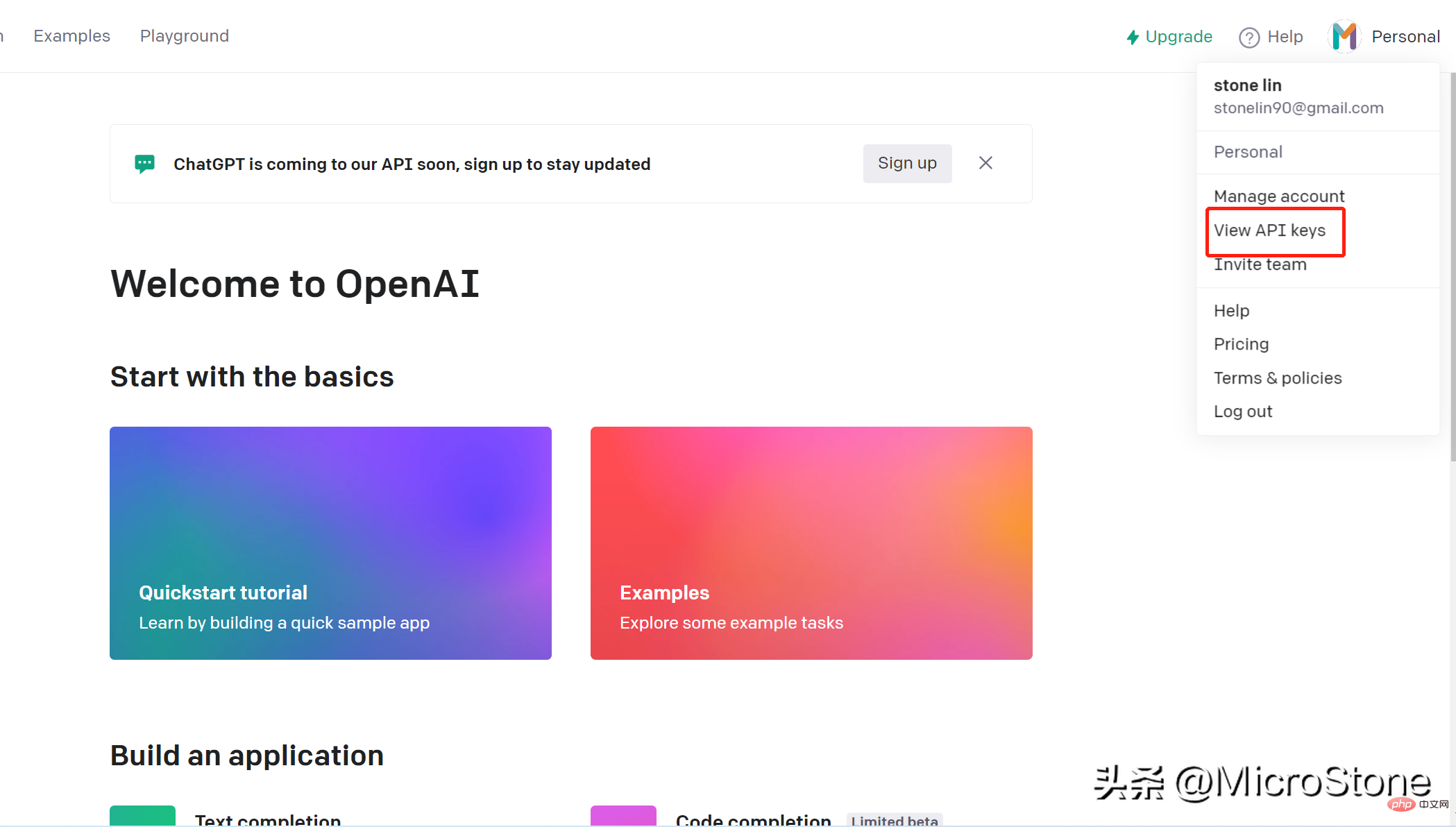

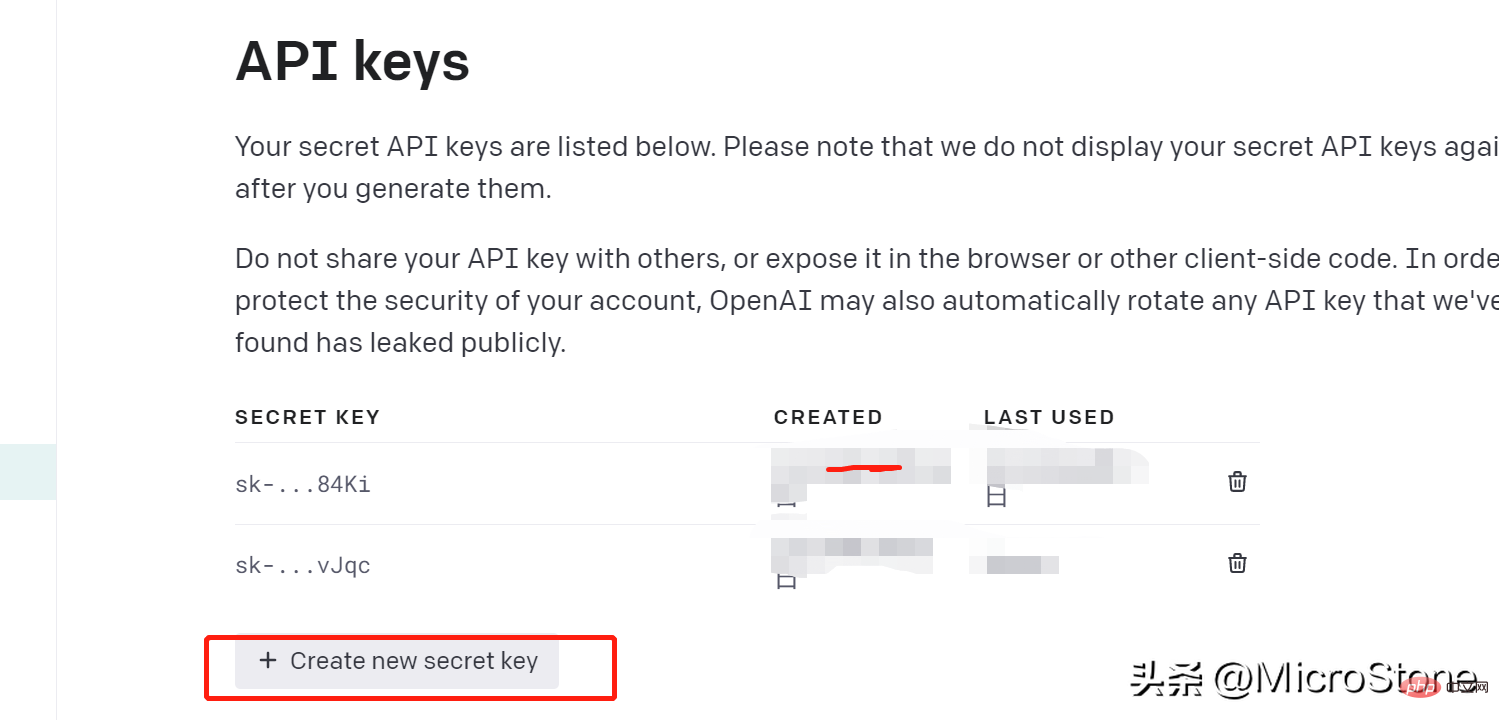

Creating an OpenAI account is simple: just open this link. We access the models on OpenAI through an API key. The steps to create an API key are as follows:

Once the API key is created, we can start preparing the data for fine-tuning. The dataset can be viewed here.

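Install the OpenAI library:

    pip install openai

The code in this article uses the legacy interface (openai.Completion and the fine_tunes CLI commands) from versions of the package before 1.0. It also imports pandas and python-dotenv.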

After installation, we can load the data:

    import os
    import json

    import openai
    import pandas as pd
    from dotenv import load_dotenv

    # Read variables from the local .env file into the environment.
    load_dotenv()

    os.environ['OPENAI_API_KEY'] = os.getenv('OPENAI_KEY')
    openai.api_key = os.getenv('OPENAI_KEY')

    data = pd.read_csv('data/data.csv')

    # Rows alternate between speakers: even rows go to Interview AI, odd rows to Human.
    new_df = pd.DataFrame({'Interview AI': data['Text'].iloc[::2].values, 'Human': data['Text'].iloc[1::2].values})
    print(new_df.head(5))

We load the questions into the Interview AI column and the corresponding answers into the Human column. We also need to create a .env file that stores the API key; the code above reads it from a variable named OPENAI_KEY and re-exports it as OPENAI_API_KEY.
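The slicing above assumes the CSV alternates strictly between the two speakers, starting with the interviewer. As a minimal sketch of that pairing logic in plain Python (the `pair_turns` helper and the sample lines are hypothetical, not part of the original script), the following also shows one way to handle a trailing line with no reply, which would otherwise leave the two columns with different lengths:

```python
def pair_turns(lines):
    """Pair even-indexed lines (interviewer) with odd-indexed lines (human).

    A trailing interviewer line with no reply is dropped so both sides
    stay the same length.
    """
    interviewer = lines[::2]
    human = lines[1::2]
    # zip stops at the shorter sequence, discarding an unpaired last line.
    return list(zip(interviewer, human))


sample = [
    "What is a stack?",
    "A last-in, first-out collection.",
    "What is a queue?",
    "A first-in, first-out collection.",
    "What is a heap?",  # no answer recorded
]
pairs = pair_turns(sample)
for question, answer in pairs:
    print(f"{question!r} -> {answer!r}")
```

Whether dropping the unpaired line is the right choice depends on the dataset; raising an error instead would make a misaligned file easier to notice.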

Next, we convert the data to the format GPT-3 fine-tuning expects. According to the docs, the data must be in JSONL format, with each line holding two keys: prompt and completion. This is important.

    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}
    {"prompt": "<prompt text>", "completion": "<ideal generated text>"}

We restructure the dataset to fit this format by looping through each row of the data frame, assigning the Human text to prompt and the Interview AI text to completion.
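Since a malformed line can make the upload fail later, it may help to check the file before sending it. The sketch below is one possible check, not part of the original workflow: the `validate_jsonl` helper is hypothetical, and it assumes every record should carry only the two keys described above, each with a non-empty string value.

```python
import json


def validate_jsonl(text):
    """Return a list of (line_number, problem) tuples for a JSONL string."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        if not raw.strip():
            problems.append((number, "blank line"))
            continue
        try:
            record = json.loads(raw)
        except json.JSONDecodeError:
            problems.append((number, "not valid JSON"))
            continue
        if not isinstance(record, dict) or set(record) != {"prompt", "completion"}:
            problems.append((number, "expected exactly the keys 'prompt' and 'completion'"))
        elif not all(isinstance(value, str) and value for value in record.values()):
            problems.append((number, "values must be non-empty strings"))
    return problems


sample = "\n".join([
    '{"prompt": "What is a stack?", "completion": "A LIFO collection."}',
    '{"prompt": "What is a queue?"}',
    'not json at all',
])
problems = validate_jsonl(sample)
print(problems)
```

To check a file on disk, the same function can be called on the result of `open('data/data.jsonl').read()`.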

    output = []
    for index, row in new_df.iterrows():
        print(row)
        # Each record pairs the Human text (prompt) with the Interview AI text (completion).
        line = {'prompt': row['Human'], 'completion': row['Interview AI']}
        output.append(line)

    print(output)

    # Write one JSON object per line (JSONL).
    with open('data/data.jsonl', 'w') as outfile:
        for i in output:
            json.dump(i, outfile)
            outfile.write('\n')

Next, run the prepare_data command. It asks a few questions as it goes, and we answer each one with Y or N.

    os.system("openai tools fine_tunes.prepare_data -f 'data/data.jsonl' ")

When it finishes, a file named data_prepared.jsonl is written to the directory.
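The questions asked by `prepare_data` typically concern a few formatting conventions: a fixed separator at the end of every prompt, a leading space at the start of every completion, and a fixed stop sequence at the end of every completion. As a rough sketch of what accepting those suggestions does to a record (the `format_record` helper and the specific separator and stop strings are assumptions chosen for illustration; the tool proposes its own):

```python
SEPARATOR = "\n\n###\n\n"  # assumed marker for "the prompt ends here"
STOP = "\n"                # assumed marker for "the completion ends here"


def format_record(record, separator=SEPARATOR, stop=STOP):
    """Return a copy of a prompt/completion record with the conventions applied."""
    prompt = record["prompt"].strip()
    completion = record["completion"].strip()
    return {
        "prompt": prompt + separator,
        # Leading space plus a fixed ending on every completion.
        "completion": " " + completion + stop,
    }


record = {"prompt": "What is a stack? ", "completion": "A LIFO collection."}
formatted = format_record(record)
print(formatted)
```

Whatever separator is used in the training data should also end the prompts sent to the fine-tuned model, and the stop string should be passed as a stop sequence at inference time.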

To fine-tune the model, we only need to run a single command:

    os.system("openai api fine_tunes.create -t 'data/data_prepared.jsonl' -m davinci ")

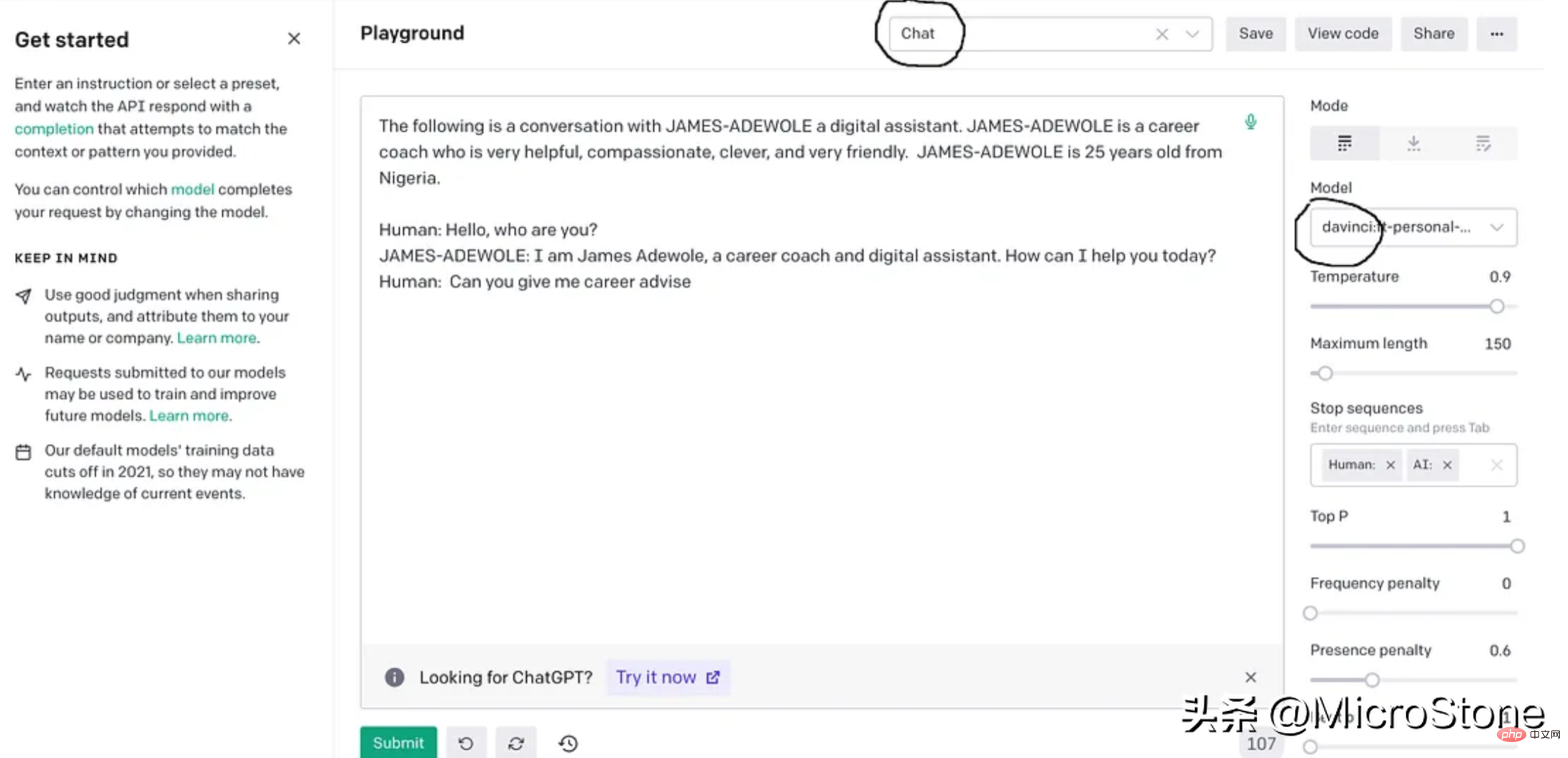

This trains OpenAI's davinci model on the prepared data. The fine-tuned model is stored under the user's profile and can be found in the right-hand panel under Model.
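Because `os.system` passes the whole string through the shell and only returns an exit status, a failed CLI call is easy to miss. A minimal alternative, assuming the same legacy `openai` CLI and file path used in this article, builds the command as an argument list and runs it with `subprocess.run`. The helper names here are hypothetical:

```python
import subprocess


def fine_tune_command(training_file, base_model="davinci"):
    """Build the argument list for the fine-tune CLI call used in this article."""
    return ["openai", "api", "fine_tunes.create", "-t", training_file, "-m", base_model]


def run_command(args):
    """Run a command without a shell; raises CalledProcessError on a non-zero exit."""
    return subprocess.run(args, check=True)


command = fine_tune_command("data/data_prepared.jsonl")
print(" ".join(command))
# To actually start the job (requires the CLI and OPENAI_API_KEY to be available):
# run_command(command)
```

Passing a list also avoids the single-quote quoting in the original string, which does not behave the same way in every shell.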

We can validate the model in several ways: directly from a Python script, in the OpenAI Playground, or by building a web service with a framework such as Flask or FastAPI.

Let's first build a simple function to interact with the model for this experiment.

    def generate_response(input_text):
        response = openai.Completion.create(
            # Name of the fine-tuned model; replace it with your own.
            engine="davinci:ft-personal-2023-01-25-19-20-17",
            prompt="The following is a conversation with DSA an AI assistant. "
                   "DSA is an interview bot who is very helpful and knowledgeable in data structure and algorithms.\n\n"
                   "Human: Hello, who are you?\n"
                   "DSA: I am DSA, an interview digital assistant. How can I help you today?\n"
                   "Human: {}\nDSA:".format(input_text),
            temperature=0.9,
            max_tokens=150,
            top_p=1,
            frequency_penalty=0.0,
            presence_penalty=0.6,
            # Stop at the end of the line or when the next speaker label appears.
            stop=["\n", " Human:", " DSA:"]
        )
        return response.choices[0].text.strip()

    input_text = "what is breadth first search algorithm"
    output = generate_response(input_text)
    print(output)

Putting it all together:

    import os
    import json

    import openai
    import pandas as pd
    from dotenv import load_dotenv

    load_dotenv()

    os.environ['OPENAI_API_KEY'] = os.getenv('OPENAI_KEY')
    openai.api_key = os.getenv('OPENAI_KEY')

    # Load the conversation data and split alternating rows into two columns.
    data = pd.read_csv('data/data.csv')
    new_df = pd.DataFrame({'Interview AI': data['Text'].iloc[::2].values, 'Human': data['Text'].iloc[1::2].values})
    print(new_df.head(5))

    # Build prompt/completion records.
    output = []
    for index, row in new_df.iterrows():
        print(row)
        line = {'prompt': row['Human'], 'completion': row['Interview AI']}
        output.append(line)

    print(output)

    # Write one JSON object per line (JSONL).
    with open('data/data.jsonl', 'w') as outfile:
        for i in output:
            json.dump(i, outfile)
            outfile.write('\n')

    # Prepare the data, then start the fine-tuning job.
    os.system("openai tools fine_tunes.prepare_data -f 'data/data.jsonl' ")
    os.system("openai api fine_tunes.create -t 'data/data_prepared.jsonl' -m davinci ")

    def generate_response(input_text):
        response = openai.Completion.create(
            # Name of the fine-tuned model; replace it with your own.
            engine="davinci:ft-personal-2023-01-25-19-20-17",
            prompt="The following is a conversation with DSA an AI assistant. "
                   "DSA is an interview bot who is very helpful and knowledgeable in data structure and algorithms.\n\n"
                   "Human: Hello, who are you?\n"
                   "DSA: I am DSA, an interview digital assistant. How can I help you today?\n"
                   "Human: {}\nDSA:".format(input_text),
            temperature=0.9,
            max_tokens=150,
            top_p=1,
            frequency_penalty=0.0,
            presence_penalty=0.6,
            stop=["\n", " Human:", " DSA:"]
        )
        return response.choices[0].text.strip()

Sample response:

    input_text = "what is breadth first search algorithm"
    output = generate_response(input_text)

The breadth-first search (BFS) is an algorithm for discovering all the reachable nodes from a starting point in a computer network graph or tree data structure
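The prompt inside `generate_response` is a fixed preamble, one seed exchange, and the new user input, ending with `DSA:` so the model continues as the assistant. As a small sketch of how that template could be factored out and extended to carry earlier turns (the `build_prompt` helper and the `history` structure are hypothetical additions, not part of the original code):

```python
PREAMBLE = (
    "The following is a conversation with DSA an AI assistant. "
    "DSA is an interview bot who is very helpful and knowledgeable "
    "in data structure and algorithms.\n\n"
)


def build_prompt(user_input, history=()):
    """Assemble the prompt from earlier (human, bot) turns plus the new input."""
    turns = "".join(f"Human: {human}\nDSA: {bot}\n" for human, bot in history)
    # End with "DSA:" so the model's completion is the assistant's reply.
    return f"{PREAMBLE}{turns}Human: {user_input}\nDSA:"


history = [
    ("Hello, who are you?",
     "I am DSA, an interview digital assistant. How can I help you today?"),
]
prompt = build_prompt("what is breadth first search algorithm", history)
print(prompt)
```

With the single seed exchange shown here, the result matches the prompt string used in `generate_response`. For a multi-turn chat, each new (input, reply) pair could be appended to `history`, keeping in mind that the prompt plus `max_tokens` has to fit within the model's context length.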

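GPT-3 is a powerful large language model, and the enormously popular ChatGPT is itself a fine-tuned descendant of GPT-3. We can likewise fine-tune GPT-3 to build a chatbot suited to our own business. Fine-tuning adjusts the model's parameters to better fit business conversation data, making the bot better at understanding and responding to business needs. The fine-tuned model can be integrated into a chatbot platform to handle user interactions, and it can also generate customer-service-style replies for the chatbot to use when talking with users. The complete implementation can be found here, and the dataset can be downloaded here.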
