While technology giants battle over ChatGPT, academia is also paying increasing attention to it.

Within a week, Nature published two articles discussing ChatGPT and generative AI.

After all, ChatGPT first made waves in academia, where scholars have used it to write abstracts and revise papers.

Nature has responded with an explicit rule: ChatGPT cannot be listed as an author of a paper. Science goes further and prohibits submissions that use ChatGPT to generate text.

But the trend is already under way.

What we should do now is perhaps clarify what ChatGPT means for the scientific community and what role it should play.

As Nature said:

Generative AI and the technology behind it are developing so fast, with innovations appearing every month. How researchers use them will determine the future of technology and academia.

In the article "ChatGPT: five priorities for research", the researchers argue:

It is impossible to keep ChatGPT out of academia, so the top priority should be to study and explore its potential impacts.

If ChatGPT is later used to design experiments, conduct peer review, assist in publishing, and help editors decide whether to accept articles, what issues should these applications raise? What boundaries do humans need to set now?

The researchers identify five aspects that need to be prioritized and explain the reasons for each.

One of the major characteristics of conversational AI such as ChatGPT is that the accuracy of its answers cannot be guaranteed. Moreover, its fabrications sound very natural and can easily mislead people.

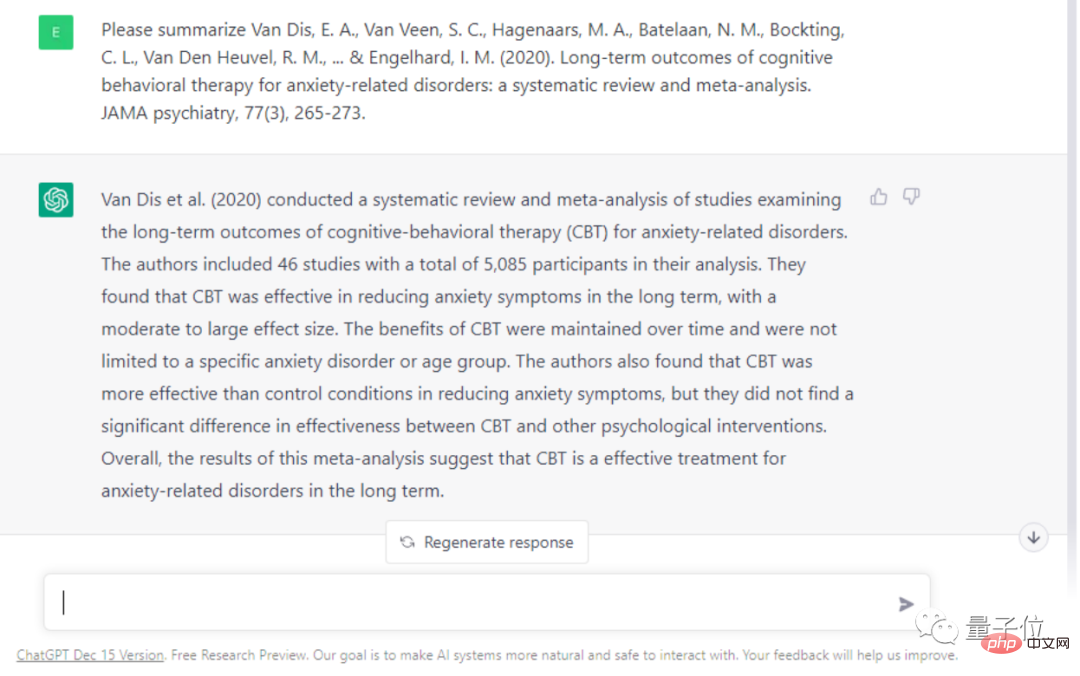

For example, the researchers asked ChatGPT to summarize a review paper on whether cognitive behavioral therapy (CBT) is effective in treating anxiety and related symptoms.

The answer ChatGPT gave contained several factual errors. For example, it said the review was based on 46 studies when it was actually based on 69, and it exaggerated the effectiveness of CBT.

The researchers believe that scholars who use ChatGPT to help with research may be misled by wrong information. It could even lead them to plagiarize the work of others without knowing it.

Therefore, the researchers believe that humans cannot rely too heavily on automated systems when reviewing papers, and that humans themselves must ultimately be accountable for scientific practice.

To counter the abuse of generative AI, many AI text detection tools have emerged. They aim to tell whether a piece of text was written by a human being.

However, researchers believe that this "arms race" is unnecessary. What really needs to be done is to allow academic circles and publishers to use AI tools more openly and transparently.

Authors should clearly state which tasks were performed by AI, and journals that use AI to review manuscripts should disclose this publicly as well.

This matters all the more now that generative AI has triggered discussions about patents. How should the copyright of AI-generated images be determined?

And for content generated by AI, should the copyright belong to the person who provided the training data, to the company behind the AI, or to the scholar who used the AI to write the article? Authorship also needs to be rigorously defined.

Currently, almost all advanced conversational AI systems are products of technology giants.

Much remains unknown about the working principles of the algorithms behind AI tools.

This has raised concerns from many quarters, because the giants' monopoly runs counter to the scientific community's principle of openness.

It will hinder academia's efforts to explore the shortcomings and underlying principles of conversational AI, and in turn slow the progress of science and technology.

To overcome this opacity, the researchers believe that the development and application of open source AI algorithms should be prioritized. For example, the open source large model BLOOM was jointly initiated by 1,000 scientists and can rival GPT-3 in performance.

Although many aspects need to be constrained, it is undeniable that AI can improve the efficiency of academia.

For example, AI can quickly handle some review work, letting scholars focus more on the experiments themselves. Results can then be published faster, which pushes the whole of academia to move faster.

Even in some creative tasks, researchers believe that AI can also be useful.

A seminal paper in 1991 proposed that an "intelligent partnership" formed between humans and AI could surpass the intelligence and capabilities of a single human.

This relationship can accelerate innovation to unimaginable levels. But the question is, how far can this automation go? And how far should it go?

Therefore, the researchers also call on scholars, including ethicists, to discuss where the boundaries of today's AI should lie in generating knowledge. Human creativity and originality may still be indispensable for innovative research.

In view of the current impact of LLMs, the researchers believe that the academic community should urgently organize a broad debate.

They call on every research group to hold a meeting immediately to discuss ChatGPT and try it for themselves. University teachers should take the initiative to discuss the use and ethics of ChatGPT with students.

At this early stage, while the rules are not yet clear, it is important for research group leaders to urge everyone to use ChatGPT more openly and transparently and to start forming some rules. All researchers should also be reminded to take responsibility for their own work, whether or not it was generated by ChatGPT.

Going further, the researchers believe that an international forum should be held immediately to discuss the research and use of LLMs.

Participants should include scientists from various fields, technology companies, research funders, scientific academies, publishers, non-governmental organizations, and legal and privacy experts.

Excitement and worry are probably what many researchers feel about ChatGPT.

By now, ChatGPT has become a digital assistant for many scholars.

Computational biologist Casey Greene and colleagues used ChatGPT to revise papers. In 5 minutes, the AI can review a manuscript and even find problems in the reference section.

Hafsteinn Einarsson, a scholar from Iceland, uses ChatGPT almost every day to help him prepare presentation slides and check student homework.

Neurobiologist Almira Osmanovic Thunström, meanwhile, believes that large language models can help scholars write grant applications, saving scientists more time.

However, Nature made an incisive summary of the output content of ChatGPT:

Smooth but inaccurate.

A major shortcoming of ChatGPT is that the content it generates is not necessarily true or accurate, which limits its use in academia.

Can this be solved?

For now, the answer is unclear.

OpenAI's competitor Anthropic claims to have solved some of ChatGPT's problems, but it declined to be interviewed by Nature.

Meta released a large language model called Galactica, trained on 48 million academic papers and other works. It was claimed to be good at generating academic content and at understanding research problems. However, its demo is no longer open (the code is still available) because users found that it produced racist output.

Even ChatGPT, which has been trained to be "well-behaved", can be deliberately steered into producing dangerous statements.

OpenAI's method of making ChatGPT behave is also simple and crude: hiring a large number of human workers to annotate the corpus. Some people think that hiring people to read toxic text is itself a form of exploitation.

But in any case, ChatGPT and generative AI have opened a new door to human imagination.

Medical scholar Eric Topol said he hopes that future artificial intelligence incorporating LLMs will be able to cross-check text and images in the academic literature, helping humans diagnose cancer and understand diseases. Of course, all this must be supervised by experts.

He said he really had not expected to see such a trend at the beginning of 2023.

And this is just the beginning.

