In 1950, Turing published the landmark paper "Computing Machinery and Intelligence", proposing a famous criterion for judging machines: the Turing Test, also known as the Turing Judgment. It holds that if a third party cannot tell the difference between the responses of a human and those of an AI machine, the machine can be considered to have artificial intelligence.

In 2008, Jarvis, the AI butler in Marvel's "Iron Man", showed audiences how an AI could accurately help a human (Tony) handle all kinds of matters thrown at him.

At the beginning of 2023, ChatGPT, a free consumer-facing (2C) chatbot that broke out of the technology industry, took the world by storm.

According to a research report by UBS, its monthly active users reached 100 million in January and are still growing, making it the fastest-growing consumer application in history. In addition, its owner OpenAI will soon launch a Plus version, reportedly priced at around $20 per month, following a Pro version priced at $42 per month. When a new product has over a hundred million monthly users, along with traffic and commercial monetization, aren't you curious about the technologies behind it? For example, how does a chatbot process and query massive amounts of data?

Anyone who has tried ChatGPT will agree: it is clearly more intelligent than Tmall Genie or Xiao Ai. It is a chatbot with "invincible" conversational skills, a natural language processing tool, a large language model, and an artificial intelligence application. It can interact with humans based on the context of the conversation, can reason and create, and can even refuse questions it considers inappropriate. It goes well beyond merely human-like conversation.

Although opinions of it are currently mixed, from the perspective of technological development it may even pass the Turing test. Consider: when we talk with it, given its extensive knowledge (at least to a novice) and its smooth, pleasant answers, it would be hard to tell whether we were talking to a human or a machine if we had not been told in advance. (Perhaps this is exactly where the danger lies: at its core, ChatGPT still belongs to the category of deep learning, which involves a great deal of black-box behavior and unexplainability!)

So how does a chatbot quickly organize a training corpus of 300 billion words and 175 billion parameters, combine it with the context, and respond freely to humans based on the knowledge it has "mastered"? In fact, chatbots also have brains. Just like us humans, they need to learn and train.

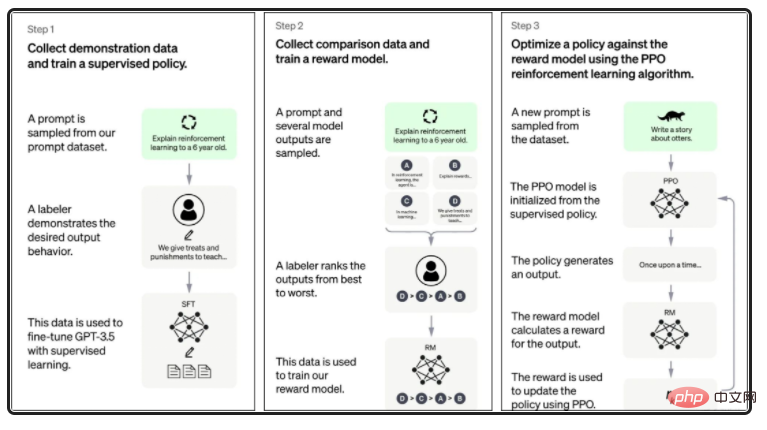

Figure 2: ChatGPT learning and training chart (source: official website)


It uses NLP (natural language processing), object recognition, multimodal recognition, and other techniques to structure massive amounts of unstructured files, such as text and images, into a knowledge graph according to their semantics. This knowledge graph is the chatbot's brain.

Figure 3: Taking medical care as an example, artificial intelligence transforms data from multiple sources into scenarios such as question and answer, search, and drug research and development. In the knowledge graph,

Figure 3: Taking medical care as an example, artificial intelligence transforms data from multiple sources into scenarios such as question and answer, search, and drug research and development. In the knowledge graph,

#What does the knowledge graph consist of?

#What does the knowledge graph consist of? It is composed of

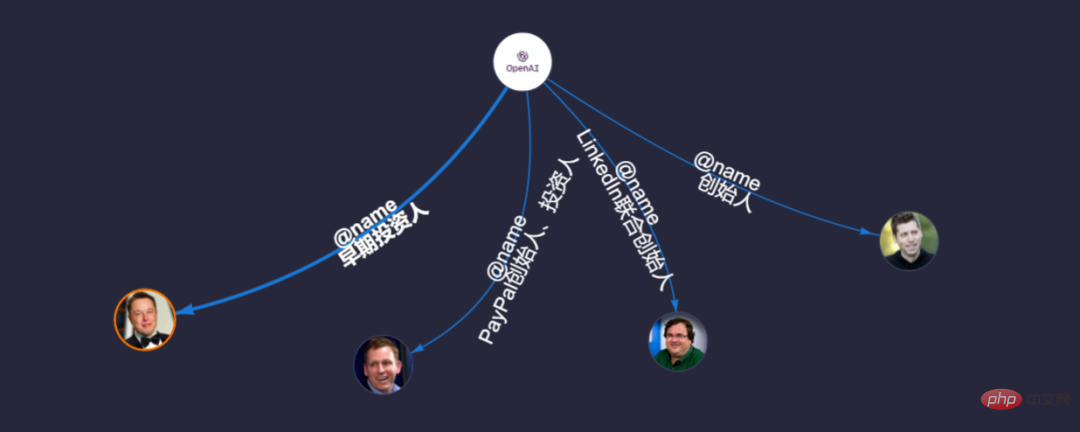

points (entities) and edges (relationships). It can integrate people, things, things and other related information to form a comprehensive graph, as shown below.

Figure 4: A graph (subgraph) composed of person nodes and attribute edges


When asked "Who is the founder of OpenAI?", the chatbot's brain quickly searches its own knowledge base. It first identifies the target point "OpenAI" in the user's question, and then, based on the question, another point appears: the founder "Sam Altman".

Figure 5: An edge connecting the point "OpenAI" to another point, "Sam Altman"

In fact, when we ask "Who is the founder of OpenAI?", the chatbot associates the whole graph surrounding that point in its knowledge base. So when we ask related questions, it has already anticipated them. For example, when we ask "Is Musk a member of OpenAI's founding team?", it has already retrieved all the founding members with a single query (drawing many inferences from one example), as shown in the figure below.

Figure 6: Linking from the point "OpenAI" to other people
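
To make the lookup above concrete, here is a minimal Python sketch of a knowledge graph stored as (subject, relation, object) triples. The sample triples (a partial list), the relation name `co_founded`, and the helper functions are hypothetical illustrations, not a description of how any particular product stores its knowledge. The point is that once the neighborhood of "OpenAI" is indexed, both "Who founded OpenAI?" and the follow-up about Musk are answered from the same one-hop lookup.

```python
from collections import defaultdict

# Hypothetical, partial sample of (subject, relation, object) triples.
TRIPLES = [
    ("Sam Altman", "co_founded", "OpenAI"),
    ("Elon Musk", "co_founded", "OpenAI"),
    ("Greg Brockman", "co_founded", "OpenAI"),
    ("Ilya Sutskever", "co_founded", "OpenAI"),
    ("OpenAI", "developed", "ChatGPT"),
]


def build_index(triples):
    """Index incoming edges by (object, relation) so 'who X-ed this entity?' is one lookup."""
    index = defaultdict(list)
    for subj, rel, obj in triples:
        index[(obj, rel)].append(subj)
    return index


INDEX = build_index(TRIPLES)


def founders_of(entity):
    """One-hop query: follow incoming 'co_founded' edges into `entity`."""
    return INDEX.get((entity, "co_founded"), [])


def is_founder(person, entity):
    """A yes/no follow-up answered from the same neighborhood."""
    return person in founders_of(entity)


print(founders_of("OpenAI"))  # ['Sam Altman', 'Elon Musk', 'Greg Brockman', 'Ilya Sutskever']
print(is_founder("Elon Musk", "OpenAI"))  # True
```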

In addition, if other "learning materials" are included in its library, its "brain" will also associate related graphs, for example to answer "What artificial intelligence robot products are there?", as shown below.

Figure 7: Common AI robot product map

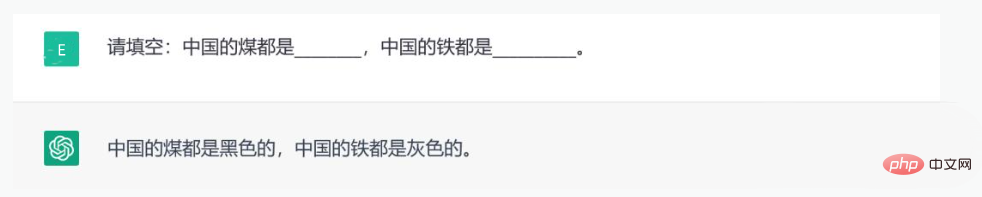

Of course, chatbots are just like people: their answers are limited by their own knowledge reserves, as shown in the figure below.

How do we judge whether a person is quick-witted and smart? From a human perspective, one of the simplest criteria is the ability to draw inferences from a single example.

Confucius said: "I do not enlighten those who are not eager to learn, nor prompt those who are not struggling to express themselves. If I show someone one corner of a subject and they cannot come back with the other three, I do not repeat the lesson."

(The Analects of Confucius, Book VII, "Shu Er")

As early as two thousand years ago, Confucius emphasized the importance of drawing inferences from one example and reasoning by analogy. For chatbots, the quality of their answers depends on the computing power underlying their knowledge graphs.

The construction of general-purpose knowledge graphs has long focused on NLP and visual presentation while neglecting issues such as computational timeliness, the flexibility of data modeling, the query (computation) process, and the interpretability of results. Now that the world is moving from the big data era to the deep data era, traditional graphs built on SQL or NoSQL can no longer efficiently process massive, complex, and dynamic data, let alone correlate, mine, and analyze it for insights. So what challenges do traditional knowledge graphs face?

First, low computing power (inefficiency). The underlying architecture of the knowledge graph built using SQL or NoSQL database systems is inefficient and cannot process high-dimensional data at high speed.

Second, poor flexibility. Knowledge graphs built on relational databases, document databases, or low-performance graph databases are usually limited by their underlying architecture and cannot faithfully represent the real relationships between entities. For example, some of them support only simple graphs, so when multigraph data (multiple edges between the same pair of entities) is loaded, either information is lost or building the graph becomes costly.
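
The information loss described above is easy to reproduce. In this minimal Python sketch (the accounts, amounts, and function names are made up for illustration), a simple-graph loader keyed by the (payer, payee) pair keeps only the last of three parallel transfers, while a multigraph loader keeps all of them.

```python
from collections import defaultdict

# Hypothetical transfers between accounts: (payer, payee, amount).
TRANSFERS = [
    ("A", "B", 100),
    ("A", "B", 250),
    ("A", "B", 75),
    ("B", "C", 40),
]


def load_simple_graph(transfers):
    """Simple graph: one edge per (payer, payee) pair, so each new transfer overwrites the last."""
    edges = {}
    for payer, payee, amount in transfers:
        edges[(payer, payee)] = amount
    return edges


def load_multigraph(transfers):
    """Multigraph: every transfer is kept as its own parallel edge."""
    edges = defaultdict(list)
    for payer, payee, amount in transfers:
        edges[(payer, payee)].append(amount)
    return edges


simple = load_simple_graph(TRANSFERS)
multi = load_multigraph(TRANSFERS)
print(simple[("A", "B")])      # 75: two of the three transfers are lost
print(multi[("A", "B")])       # [100, 250, 75]
print(sum(multi[("A", "B")]))  # 425
```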

Third, it is only skin-deep. Before 2020, few people paid real attention to underlying computing power, and almost all knowledge graph projects focused only on NLP and visualization. A knowledge graph without underlying computing power covers only the extraction and construction of ontologies and triples; it cannot deliver deep queries, speed, or interpretability.

[Note: We will not compare the performance of traditional relational databases and graph databases here. Interested readers can refer to: What are the differences between graph databases and relational databases? What problems do graph databases solve?]

This brings us from the intelligent knowledge graphs behind chatbots to another cutting-edge technology: graph databases (graph computing). What is a graph database? A graph database [see Reference 1] is an application of graph theory that stores both the attributes of entities and the relationships between them. By definition, a graph is a data structure made up of nodes (points) and edges [see Reference 2]. Graphs are the foundation of knowledge graph storage and application services. Their strong data-association and knowledge-expression capabilities have made them widely favored in both academia and industry.

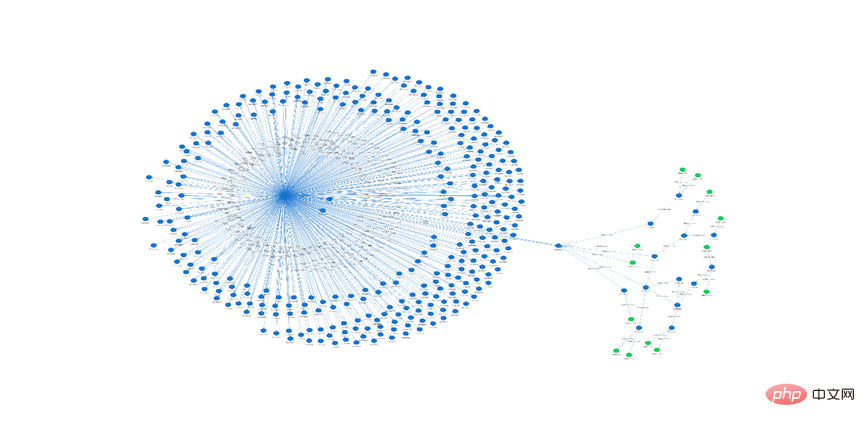

As the figure above shows, with a real-time graph database (graph computing) engine, organizations can analyze disparate data in real time, uncover deeply correlated relationships among the data, and even find optimal intelligent paths beyond the reach of the human brain. This is thanks to the high dimensionality of graph databases.

What is high dimensionality? A graph is not only a tool that matches how the human brain thinks and models the real world intuitively; it can also produce deep insights through deep graph traversal.
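
Deep graph traversal, in its most basic form, is a breadth-first search that expands outward hop by hop. The sketch below (with a hypothetical graph and function name) returns every node within `k` hops of a starting node together with its hop distance; production graph engines layer indexing, pruning, and parallelism on top of this basic idea.

```python
from collections import deque

# Hypothetical undirected graph as an adjacency list.
GRAPH = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "E"],
    "D": ["B", "F"],
    "E": ["C"],
    "F": ["D"],
}


def k_hop_neighbors(graph, start, k):
    """Breadth-first traversal: return {node: hop distance} for nodes within k hops of start."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if dist[node] == k:
            continue  # reached the depth limit; do not expand further
        for nbr in graph.get(node, []):
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    del dist[start]  # report neighbors only, not the start node itself
    return dist


print(k_hop_neighbors(GRAPH, "A", 2))  # {'B': 1, 'C': 1, 'D': 2, 'E': 2}
```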

Take the well-known "butterfly effect": the task is to capture subtle relationships between two or more seemingly unrelated entities within massive amounts of data and information. From a data-processing-architecture perspective, this is extremely difficult to achieve without graph database (graph computing) technology. [Note: We will not discuss how to distinguish graph databases from graph computing here. Interested readers can refer to: What are the challenges from “graphs”? How to distinguish graph databases from graph computing? Quick explanation in one article]

Figure 9: Over the past 40 years, data processing technology has evolved from relational to big data to graph data


Risk control is a typical scenario. The 2008 financial crisis was triggered by the collapse of Lehman Brothers, then the fourth-largest investment bank in the United States. No one expected that the fall of a 158-year-old investment bank would set off a chain of failures across the international banking industry, with an impact of unexpected breadth and scope. Real-time graph database (graph computing) technology can find all the key nodes, risk factors, and risk-propagation paths, and then provide advance warning so that financial risks can be controlled.
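
As a rough illustration of tracing risk-propagation paths, the sketch below runs a simplified default cascade over a hypothetical interbank exposure graph. When a bank fails, each creditor absorbs its full exposure as a loss, and a creditor whose cumulative losses reach its capital fails in turn. The bank names, figures, zero-recovery assumption, and function name are all illustrative assumptions, not a model of the actual 2008 crisis or of any vendor's risk engine.

```python
from collections import deque

# Hypothetical data. CAPITAL[bank] is the loss a bank can absorb before failing;
# EXPOSURES[debtor] lists (creditor, amount) that each creditor loses if the debtor fails.
CAPITAL = {"Bank0": 10, "Bank1": 30, "Bank2": 20, "Bank3": 50, "Bank4": 15}
EXPOSURES = {
    "Bank0": [("Bank1", 35), ("Bank2", 10)],
    "Bank1": [("Bank3", 20), ("Bank4", 16)],
    "Bank2": [("Bank4", 5)],
    "Bank4": [("Bank3", 40)],
}


def simulate_cascade(seed):
    """Propagate defaults from `seed` breadth-first (zero recovery assumed).

    Returns {failed_bank: propagation path}; each path ends with the failure
    that finally pushed that bank over its capital threshold.
    """
    losses = {bank: 0 for bank in CAPITAL}
    paths = {seed: [seed]}
    queue = deque([seed])
    while queue:
        debtor = queue.popleft()
        for creditor, amount in EXPOSURES.get(debtor, []):
            if creditor in paths:
                continue  # already failed; extra losses change nothing here
            losses[creditor] += amount
            if losses[creditor] >= CAPITAL[creditor]:
                paths[creditor] = paths[debtor] + [creditor]
                queue.append(creditor)
    return paths


for bank, path in simulate_cascade("Bank0").items():
    print(bank, ":", " -> ".join(path))
```

In this toy data, Bank2 survives because its loss (10) stays below its capital (20), while Bank3 fails only once the losses passed on by Bank1 (20) and Bank4 (40) add up.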

[Note: All the graphs above were built in Ultipa Manager. Readers who want to learn more can read one of the articles in this series: Entering into the high visualization of Ultipa Manager]

It should be pointed out that although many vendors can now build knowledge graphs, in reality fewer than 5 out of every 100 graph companies (under 5%) use high-performance graph databases to provide the underlying computing power.

Ultipa is the only fourth-generation real-time graph database in the world. Through patented innovations such as high-density concurrency, dynamic pruning, and multi-level storage and computing acceleration, it achieves ultra-deep real-time drill-down on data sets of any size.

First, high computing power.

Take finding a company's ultimate beneficiary (also known as its actual controller or major shareholder) as an example. The challenge with this type of problem is that in the real world, many nodes (shell companies) often sit between the ultimate beneficiary and the company under investigation, and multiple natural persons or corporate entities may control other companies through multiple investment or shareholding paths. Traditional relational or document databases, and even most graph databases, cannot solve this kind of graph penetration problem in real time.
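
One common way to frame the ultimate-beneficiary question is to multiply shareholding percentages along each ownership path and sum the results over all paths. The Python sketch below does this over a hypothetical shareholding graph. The 25% threshold is an assumed parameter (a figure often used in anti-money-laundering rules), the function names are illustrative, and real beneficial-ownership analysis also has to consider control that does not flow through shares.

```python
# Hypothetical shareholding graph: owner -> [(company, fraction of its shares held)].
HOLDINGS = {
    "Person X": [("Shell A", 1.0), ("Shell B", 0.6)],
    "Person Y": [("Shell B", 0.4), ("Target Co", 0.2)],
    "Shell A": [("Shell C", 0.5)],
    "Shell B": [("Shell C", 0.5)],
    "Shell C": [("Target Co", 0.8)],
}


def effective_stake(owner, target, visited=frozenset()):
    """Sum, over every ownership path owner -> ... -> target, the product of the stakes on that path."""
    if owner == target:
        return 1.0
    path = visited | {owner}  # entities already on the current path
    total = 0.0
    for company, fraction in HOLDINGS.get(owner, []):
        if company in path:
            continue  # skip circular cross-holdings instead of looping forever
        total += fraction * effective_stake(company, target, path)
    return total


def ultimate_beneficiaries(people, target, threshold=0.25):
    """Return {person: effective stake} for people whose stake in `target` meets the threshold."""
    result = {}
    for person in people:
        stake = effective_stake(person, target)
        if stake >= threshold:
            result[person] = round(stake, 4)
    return result


print(ultimate_beneficiaries(["Person X", "Person Y"], "Target Co"))  # {'Person X': 0.64, 'Person Y': 0.36}
```

Person X never holds Target Co directly, yet controls 64% of it through two chains of shell companies (1.0 × 0.5 × 0.8 + 0.6 × 0.5 × 0.8).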

The Ultipa (Yingtu) real-time graph database system solves many of these challenges. Its high-concurrency data structures and high-performance computing and storage engine can perform deep mining 100 times faster than other graph systems, or more, finding the ultimate beneficiary or uncovering a huge investment network in real time (within microseconds). Microsecond latency also means higher concurrency and system throughput: a 1000x improvement over systems that claim millisecond latency!

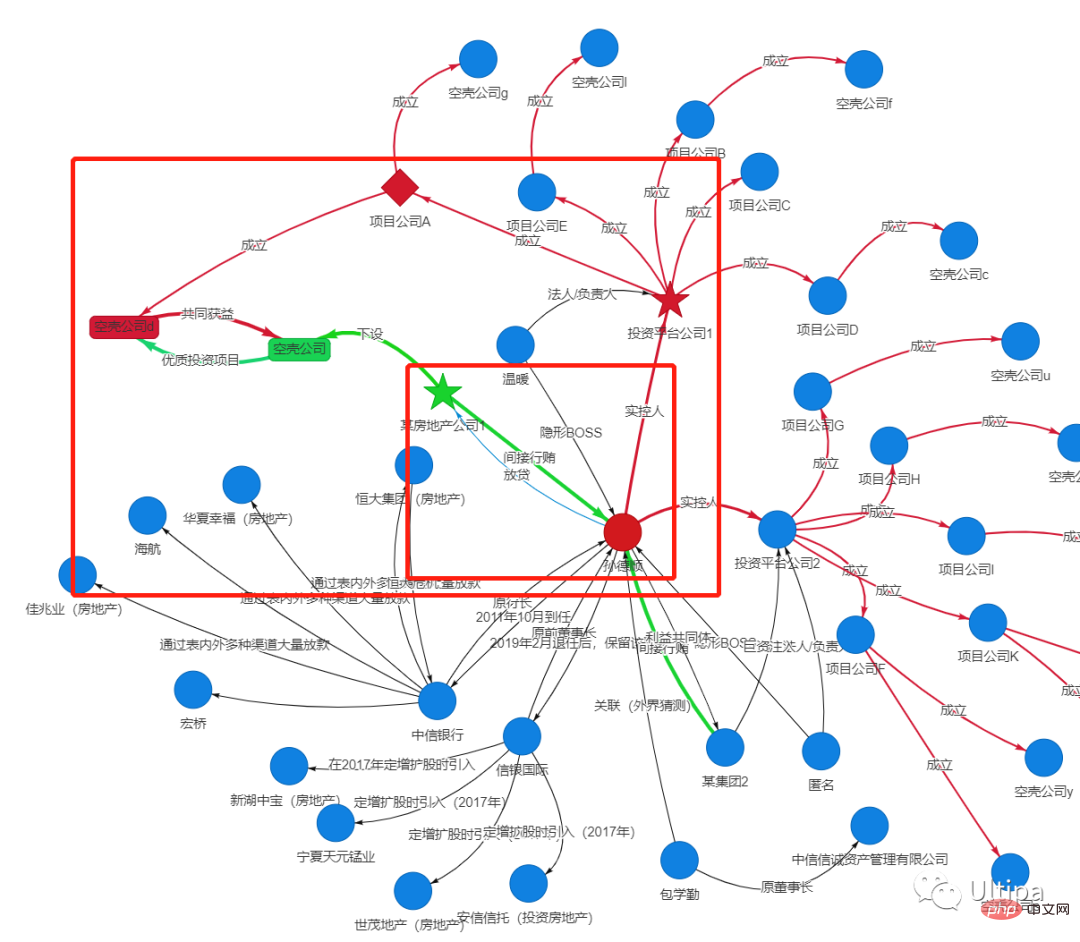

Take a real-life case: Sun Deshun, former president of China CITIC Bank, used financial means to transfer benefits by setting up multiple "shadow companies".

Figure 11: Sun Deshun designed multiple "firewalls" with extremely complex structures, nesting shadow companies layer upon layer to evade supervision and obtain benefits

Figure 12: The chain: Sun Deshun → CITIC Bank → business owner → (shell company) investment platform company → Sun Deshun

As shown in the figure above, Sun Deshun used his authority at CITIC Bank to grant loans to business owners. In return, the business owners either delivered benefits in the name of investment or passed on high-quality investment projects and opportunities, and the two sides completed direct transactions through their respective shell companies. Alternatively, a business owner injected large sums into an investment platform company actually controlled by Sun Deshun, and the platform company invested those funds in projects provided by the owner, making money from money. Everyone then shared the profits and dividends, ultimately forming a community of interests.

Through white-box penetration, the Ultipa real-time graph database system uncovers the intricate relationships between people, between people and companies, and between companies, and pinpoints the final person behind the scenes in real time.
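
The chain in Figure 12 is a closed loop: benefits leave Sun Deshun and eventually flow back to him. Finding such loops is a cycle search. The sketch below runs a bounded depth-first search over a hypothetical directed graph whose node names simply mirror the figure. The edge list, the hop limit, and the function name are illustrative assumptions, not Ultipa's implementation.

```python
# Hypothetical directed "benefit flow" graph; node names mirror Figure 12.
FLOWS = {
    "Sun Deshun": ["CITIC Bank"],               # uses his authority at the bank
    "CITIC Bank": ["Business owner"],           # loan granted
    "Business owner": ["Shell company", "Investment platform"],
    "Shell company": ["Investment platform"],
    "Investment platform": ["Sun Deshun"],      # profits flow back
}


def find_cycles_through(graph, start, max_hops=6):
    """Depth-first search for simple cycles that leave `start` and return to it within max_hops."""
    cycles = []

    def dfs(node, path):
        # path spans len(path) - 1 hops; closing the loop from here adds one more.
        if len(path) > max_hops:
            return
        for nxt in graph.get(node, []):
            if nxt == start:
                cycles.append(path + [start])
            elif nxt not in path:  # keep the cycle simple (no repeated nodes)
                dfs(nxt, path + [nxt])

    dfs(start, [start])
    return cycles


for cycle in find_cycles_through(FLOWS, "Sun Deshun"):
    print(" -> ".join(cycle))
```

The hop limit matters in practice. With `max_hops=4`, only the direct loop through the investment platform is found, because the route through the extra shell company needs five hops.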

The second is flexibility.

The flexibility of a graph system is a very broad topic, generally covering data modeling, query and calculation logic, result presentation, interface support, scalability, and more.

Data modeling is the foundation of every relationship graph and is closely tied to the underlying capabilities of the graph system (graph database). For example, a graph database system built on a columnar database such as ClickHouse cannot handle financial transaction graphs at all: the most typical feature of a transaction network is multiple transfers between the same two accounts, but ClickHouse tends to merge multiple transfers into one, an unreasonable approach that distorts the data. Some graph database systems built on the simple-graph concept (at most one edge between two vertices) instead use vertices to represent transactions. As a result, the data volume is amplified (wasting storage), and the complexity of graph queries increases exponentially (degrading timeliness).
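
The cost of modeling transactions as vertices can be seen with simple counting. In this hypothetical Python sketch with three accounts and four transfers, storing each transfer as its own vertex (payer → transfer → payee) adds one vertex per transfer and doubles the edge count, and any path that crosses k transfers becomes 2k hops long. The data and function names are illustrative only.

```python
# Hypothetical transfers between three accounts: (payer, payee).
TRANSFERS = [("A", "B"), ("A", "B"), ("B", "C"), ("C", "A")]


def edge_model_size(transfers):
    """Transfers stored as (parallel) edges directly between account vertices."""
    accounts = {acct for pair in transfers for acct in pair}
    return {"vertices": len(accounts), "edges": len(transfers)}


def vertex_model_size(transfers):
    """Each transfer becomes its own vertex, linked payer -> transfer -> payee."""
    accounts = {acct for pair in transfers for acct in pair}
    return {"vertices": len(accounts) + len(transfers), "edges": 2 * len(transfers)}


def hops_for(transfer_count, transfers_as_vertices):
    """Path length when following `transfer_count` consecutive transfers."""
    return 2 * transfer_count if transfers_as_vertices else transfer_count


print(edge_model_size(TRANSFERS))    # {'vertices': 3, 'edges': 4}
print(vertex_model_size(TRANSFERS))  # {'vertices': 7, 'edges': 8}
print(hops_for(3, transfers_as_vertices=True))  # 6
```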

Interface support shapes the user experience. As a simple example, if a graph system in production supports only the CSV format, all data must be converted to CSV before it can be loaded into the graph, which is clearly inefficient. Yet this is the case in many graph systems.

What about the flexibility of query and calculation logic? Take the "butterfly effect" again: is there some causal (strongly correlated) effect between any two people, events, or objects in the graph? If it is only a simple one-hop correlation, any traditional search engine, big data NoSQL framework, or even relational database can handle it. But for a deep correlation, such as one between Newton and Genghis Khan, how do we compute it?
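
Computing a "deep correlation" between two entities usually starts with a shortest-path search. The sketch below uses breadth-first search with parent pointers. The graph is hypothetical, and its intermediate nodes (`P1` to `P5`) are placeholders, not actual historical links between Newton and Genghis Khan.

```python
from collections import deque

# Hypothetical undirected graph; intermediate nodes are placeholders.
GRAPH = {
    "Newton": ["P1", "P2"],
    "P1": ["Newton", "P3"],
    "P2": ["Newton", "P4"],
    "P3": ["P1", "Genghis Khan"],
    "P4": ["P2", "P5"],
    "P5": ["P4", "Genghis Khan"],
    "Genghis Khan": ["P3", "P5"],
}


def shortest_path(graph, source, target):
    """Breadth-first search with parent pointers; returns a shortest path as a node list, or None."""
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk the parent pointers back to the source, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nbr in graph.get(node, []):
            if nbr not in parent:
                parent[nbr] = node
                queue.append(nbr)
    return None  # target unreachable from source


print(shortest_path(GRAPH, "Newton", "Genghis Khan"))  # ['Newton', 'P1', 'P3', 'Genghis Khan']
```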

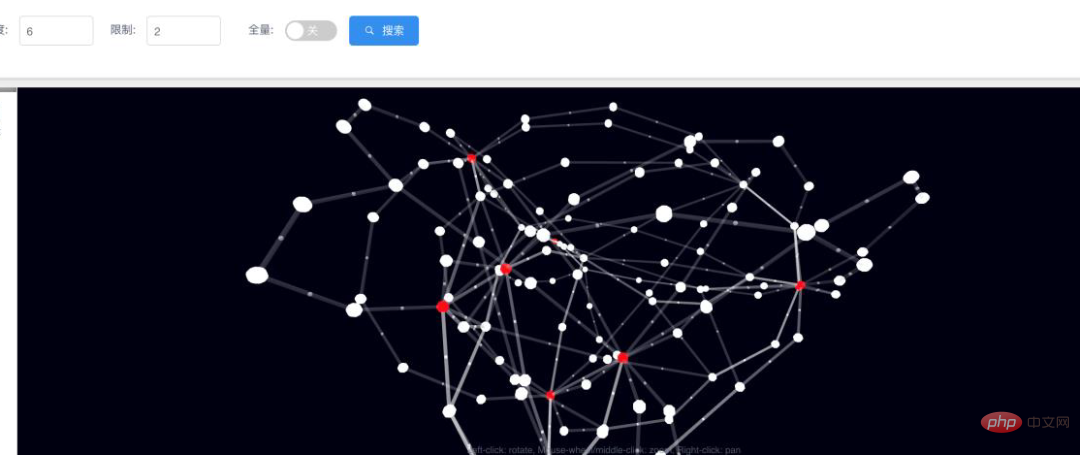

The Ultipa (Yingtu) real-time graph database system offers more than one way to solve this problem: for example, point-to-point deep path search, multi-point network search, template matching search based on fuzzy search conditions, and graph-oriented fuzzy text path search similar to that of web search engines.
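
Template matching means finding every subgraph that fits a given shape. The minimal sketch below matches the hypothetical pattern (Person)-[INVESTS_IN]->(Company)-[BORROWS_FROM]->(Bank) and uses a case-insensitive substring filter on the company name as a stand-in for a fuzzy condition. The labels, edge types, data, and function name are assumptions for illustration, not Ultipa GQL syntax.

```python
# Hypothetical labeled property graph.
NODES = {
    "p1": {"label": "Person", "name": "Zhang San"},
    "p2": {"label": "Person", "name": "Li Si"},
    "c1": {"label": "Company", "name": "Alpha Holdings"},
    "c2": {"label": "Company", "name": "Beta Trading"},
    "b1": {"label": "Bank", "name": "Bank One"},
}
EDGES = [
    ("p1", "INVESTS_IN", "c1"),
    ("p2", "INVESTS_IN", "c2"),
    ("c1", "BORROWS_FROM", "b1"),
    ("c2", "BORROWS_FROM", "b1"),
]


def match_pattern(name_contains):
    """Match (Person)-[INVESTS_IN]->(Company)-[BORROWS_FROM]->(Bank),
    keeping only companies whose name contains the keyword (case-insensitive)."""
    keyword = name_contains.lower()
    out_edges = {}
    for src, rel, dst in EDGES:
        out_edges.setdefault((src, rel), []).append(dst)
    matches = []
    for pid, person in NODES.items():
        if person["label"] != "Person":
            continue
        for cid in out_edges.get((pid, "INVESTS_IN"), []):
            company = NODES[cid]
            if company["label"] != "Company" or keyword not in company["name"].lower():
                continue  # fails the node label or the fuzzy name condition
            for bid in out_edges.get((cid, "BORROWS_FROM"), []):
                if NODES[bid]["label"] == "Bank":
                    matches.append((person["name"], company["name"], NODES[bid]["name"]))
    return matches


print(match_pattern("holding"))  # [('Zhang San', 'Alpha Holdings', 'Bank One')]
```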

Figure 13: Visualized results of real-time networking in a large graph (forming a subgraph), with a search depth of ≥ 6 hops

Many other tasks on a graph also depend on high flexibility and computing power: finding nodes, edges, and paths under flexible filtering conditions; pattern recognition, community detection, and customer-group discovery; finding all or specific neighbors of a node (or recursively discovering deeper neighbors); finding entities or relationships with similar attributes in the graph; and so on. In short, a knowledge graph without graph computing power is like a body without a soul: superficial, and unable to deliver challenging, in-depth search.
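
For one task in that list, finding entities with similar attributes, a simple and widely used measure is Jaccard similarity: the size of the intersection of two attribute sets divided by the size of their union. The customer tags and function names below are hypothetical.

```python
# Hypothetical customer attribute sets (e.g. tags derived from profiles).
CUSTOMERS = {
    "c1": {"age_30s", "city_shanghai", "buys_funds", "mobile_app"},
    "c2": {"age_30s", "city_shanghai", "buys_funds"},
    "c3": {"age_50s", "city_beijing", "buys_bonds"},
}


def jaccard(a, b):
    """|A ∩ B| / |A ∪ B|, defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar(target, customers, top_k=1):
    """Rank the other customers by Jaccard similarity of their attribute sets to `target`."""
    scores = [
        (other, jaccard(customers[target], attrs))
        for other, attrs in customers.items()
        if other != target
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_k]


print(most_similar("c1", CUSTOMERS))  # [('c2', 0.75)]
```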

The third is low code: what you see is what you get.

In addition to the high computing power and flexibility described above, a graph system also needs to be white-box (interpretable) and form-based (low-code or no-code), so that it can empower the business in a WYSIWYG manner.

Figure 14: One-click, zero-code search: just fill in the search range, then switch flexibly among 2D, 3D, list, and table views, and even multiple visualization modes for heterogeneous data fusion

In the Ultipa real-time graph database system, developers can complete an operation by typing a single Ultipa GQL statement, and business staff can use preset form-based plug-ins to run business queries with zero code. This approach greatly improves employee productivity, helps organizations reduce operating costs, and breaks down communication barriers between departments.

To sum up, combining knowledge graphs with graph databases will help every industry accelerate the construction of its data middle platform. But in industries such as finance, which demand professionalism, security, reliability, stability, real-time performance, and accuracy, relying on relational databases to support upper-layer applications cannot deliver good data-processing performance and may not complete the data-processing tasks at all. Therefore, only graph database (graph computing) technology that offers real-time, comprehensive, deep penetration, transaction-by-transaction traceability, and precise monitoring and early warning can empower organizations to plan their strategies well and gain a decisive edge!

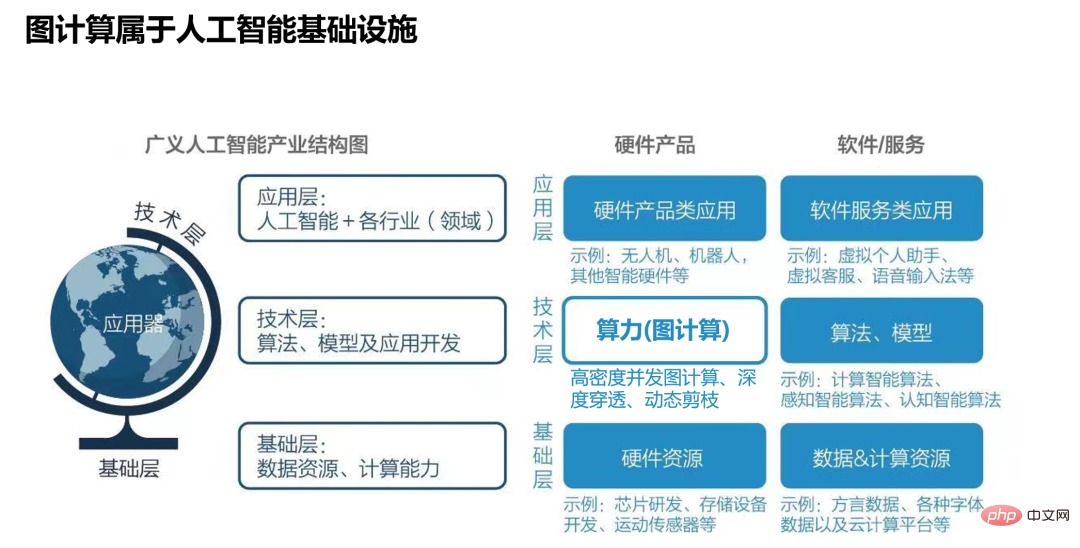

This reminds me of the hit novel "The Three-Body Problem" and one of its most interesting ideas: the sophon lock. Roughly, to prevent Earth's technology from surpassing its own, the Trisolaran civilization obstructs humanity by blocking its basic science. Because leaps in human civilization depend on the development and major breakthroughs of basic science, blocking humanity's basic science is equivalent to blocking Earth's path to a higher level of civilization... Of course, what the author wants to tell you is that graph technology is one of the infrastructures of artificial intelligence; to be precise, graph technology = augmented intelligence + explainable AI. It is an inevitable product of the integration of AI and big data.

Figure 15: Graph database (graph computing) technology as part of the artificial intelligence infrastructure
