Author | Xu Jiecheng

Reviewer | Yun Zhao

How big a number is 100 trillion? If you had the superpower of winning a 5 million lottery prize every day and saved every cent without spending anything on food or drink, you would still need to live for roughly 55,000 years (100 trillion divided by 5 million is 20 million days) to accumulate 100 trillion. However, the 100 trillion I want to talk about today is not followed by a coveted unit such as "RMB" or "dollars". It refers to the number of parameters in GPT-4, the fourth-generation Generative Pre-trained Transformer that is about to be released by OpenAI, an artificial intelligence research company co-founded by several Silicon Valley technology tycoons.

To make this figure easier to grasp, we can compare GPT-4 with the human brain. A typical human brain has about 80 to 100 billion neurons and about 100 trillion synapses. These neurons and synapses directly control almost all of a person's thoughts, judgments, and behaviors over a lifetime of up to a hundred years, and GPT-4 has as many parameters as the human brain has synapses. So what is the potential of such a large dense neural network? What surprises will GPT-4 bring us? Do we really have the ability to create a human brain?

Before exploring these exciting questions, we might as well first understand the development history of several "predecessors" of GPT-4.

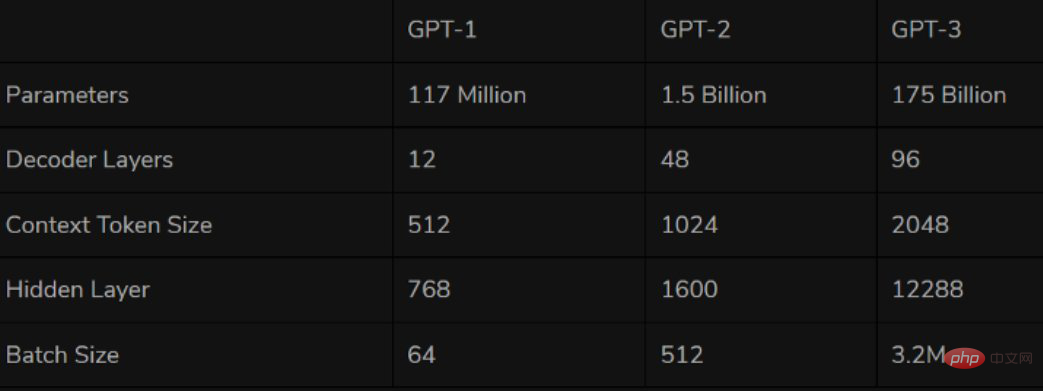

GPT-1, the first model in the GPT series, was born in 2018, which is often called the first year of NLP pre-trained models. As the first pre-trained model based on the Transformer, GPT-1 uses two stages, pre-training followed by fine-tuning, with the Transformer decoder as its feature extractor. It stacks 12 layers and has about 110 million parameters. The pre-training stage uses a unidirectional (left-to-right) language model as its training task.
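The "unidirectional language model" objective can be illustrated with a minimal sketch. It is not GPT-1's actual code: the function names `causal_mask` and `next_token_examples` are hypothetical, and whole words stand in for the model's real subword tokens. The sketch only shows the idea that each position may look at itself and earlier positions, and that every prefix of a sentence becomes a training example for predicting the next token.

```python
from typing import List, Tuple


def causal_mask(seq_len: int) -> List[List[bool]]:
    """Row i marks which positions token i may attend to: itself and earlier ones."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def next_token_examples(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Turn one sequence into (left context, next token) training pairs."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


if __name__ == "__main__":
    # Toy sentence; a real model would use subword tokens, not whole words.
    tokens = ["the", "cat", "sat", "down"]

    # Print the lower-triangular mask: "x" = visible, "." = hidden (future token).
    for row in causal_mask(len(tokens)):
        print("".join("x" if allowed else "." for allowed in row))

    # Each prefix is used to predict the token that follows it.
    for context, target in next_token_examples(tokens):
        print(context, "->", target)
```

Because the mask hides future tokens, the model can be trained on raw text with no labels, which is what makes the pre-training stage unsupervised.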

In terms of performance, GPT-1 has some generalization ability and can be applied to NLP tasks unrelated to the supervised tasks it was fine-tuned on.

Although GPT-1 works to some degree on tasks it was not tuned for, its performance on them is far below its performance on fine-tuned supervised tasks, so GPT-1 can only be regarded as a fairly good language understanding tool rather than a conversational AI.

One year after GPT-1, GPT-2 arrived on schedule in 2019. Compared with its big brother GPT-1, GPT-2 made few structural changes to the original network. It simply used more parameters and a larger dataset: the largest model has 48 layers and 1.5 billion parameters, and its learning objective is to use an unsupervised pre-trained model to perform supervised tasks.

Source: Twitter

In terms of performance, OpenAI's brute-force approach really did seem to work miracles. Beyond its understanding ability, GPT-2 showed a strong talent for generation for the first time: summarizing what it reads, chatting, continuing a piece of writing, making up stories, and even generating fake news, writing phishing emails, or impersonating others online all came easily to it. After "getting bigger", GPT-2 did demonstrate a range of general and powerful capabilities, and it achieved the best performance of its time on several specific language modeling tasks. It is no wonder that OpenAI said at the time that GPT-2 was "too dangerous to release".

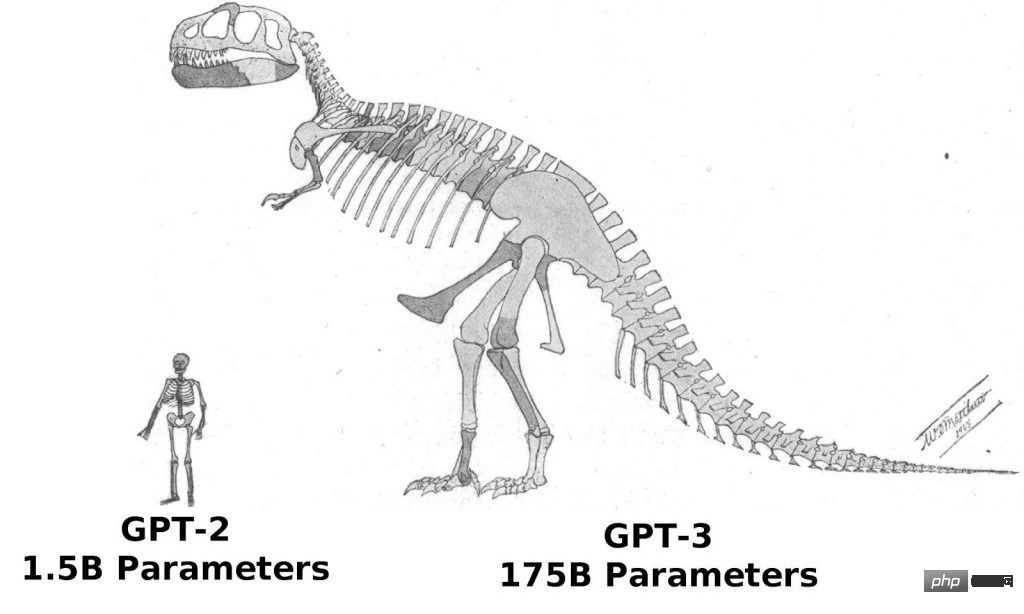

After the success of GPT-2, OpenAI's faith in brute force grew ever firmer. GPT-3, released in 2020, continued to follow the same idea of small innovations and rapid expansion. Apart from its Transformer using a sparse attention structure, GPT-3 is almost identical in structure to GPT-2. In terms of sheer size, GPT-3 has 96 layers and 175 billion parameters (more than 100 times as many as GPT-2).
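The growth between generations can be checked with a few lines of arithmetic. This is a rough sketch that uses only the parameter counts quoted in this article; the dictionary and function names are illustrative.

```python
# Parameter counts as quoted in this article (approximate public figures).
PARAMS = {
    "GPT-1": 110_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}


def growth_factors(params: dict) -> dict:
    """Return how many times larger each model is than the one before it."""
    names = list(params)
    return {
        f"{prev} -> {curr}": params[curr] / params[prev]
        for prev, curr in zip(names, names[1:])
    }


if __name__ == "__main__":
    for step, factor in growth_factors(PARAMS).items():
        print(f"{step}: about {factor:.1f}x more parameters")
```

By these figures, the jump from GPT-2 to GPT-3 (roughly 117x) is far larger than the jump from GPT-1 to GPT-2 (roughly 14x).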

GPT-3 once again vindicated OpenAI's vision. With stronger performance and far more parameters, it covers text on more topics and is clearly better than its predecessor GPT-2. As the largest dense neural network at the time of its release, GPT-3 can turn descriptions of web pages into the corresponding code, imitate human narratives, compose custom poems, generate game scripts, and even imitate deceased philosophers to expound on the true meaning of life. GPT-3 also needs no fine-tuning: given only a few examples of the desired output (few-shot learning), it can handle difficult grammar problems. It is fair to say that GPT-3 seems to have satisfied everything we imagined of a language expert.
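Few-shot learning here means placing a handful of worked examples directly in the prompt instead of updating the model's weights. The sketch below only builds such a prompt as a string. The `Input:`/`Output:` layout and the function name `build_few_shot_prompt` are assumptions made for illustration, not an official OpenAI format, and no API call is made.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Assemble an instruction, a few solved examples, and one unsolved query."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final "Output:" is left blank for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Fix the grammar.",
        [("He go home.", "He goes home."), ("They is late.", "They are late.")],
        "She have a cat.",
    )
    print(prompt)
```

The model sees the pattern in the examples and is expected to continue it for the last input, which is why no task-specific training run is needed.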

At this point, I believe everyone has the same question: now that GPT-3 is already so powerful, what else can we look forward to in GPT-4?

As we all know, the classic way to test the intelligence of an AI system is the Turing test. While we still lack scientific, quantifiable standards for defining human intelligence, the Turing test remains one of the few feasible methods for judging whether the other party has human-level intelligence. As the proverb goes: if something looks like a duck, walks like a duck, and quacks like a duck, then it is a duck. By that logic, an AI system that passes the Turing test can be said to think like a human and may replace humans in some respects. According to Korean IT media reports, word has been circulating in the industry since mid-November that GPT-4 has fully passed the Turing test. Nam Se-dong, an executive at the South Korean company Vodier AI, said in a recent interview with South Korea's "Economic News": "Although the news that GPT-4 passed the Turing test has not been officially confirmed, it should be quite credible."

Sam Altman, who has a technical background and is the current CEO of OpenAI, also seemed to confirm this on Twitter. On Nov. 10, Altman tweeted an imitation of a classic line from the "Star Wars" character Darth Vader: "Don't be too proud of this technological terror you've constructed. The ability to pass the Turing test is insignificant next to the power of the Force."

Source: Twitter

An executive at an AI startup offered this analysis: "If GPT-4 really passed the Turing test perfectly, its impact would be enough to cause a 'technological terror' in the AI world, so Altman used the character of Darth Vader to announce the news."

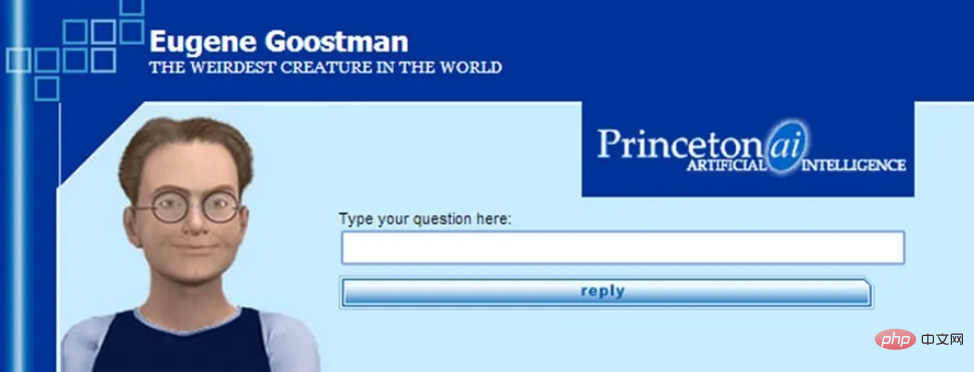

If GPT-4 passes the Turing test without any restrictions, it will indeed make history. Although some AI models have previously claimed to pass the Turing test, none has been unanimously recognized by the AI industry. This is because the standards and rules of the Turing test are not clearly defined, so many models cleverly exploit "blind spots" in the test. The chatbot "Eugene", presented at a 2014 event held by the University of Reading in the UK, is a typical example. The judges were told that the model was a 13-year-old Ukrainian boy, so whenever the algorithm failed to give a good answer, the judges assumed it was because the test subject was a foreign child.

Source: Internet

Although the Turing test is not an absolute benchmark for AI technology, it is the oldest and best-known AI test to date and still has great symbolic significance. If GPT-4 really does pass the Turing test officially and conclusively, it will most likely mark the biggest milestone in the AI industry so far.

In addition, unlike GPT-3, GPT-4 will most likely be more than just a language model. Ilya Sutskever, chief scientist of OpenAI, hinted at this in an article on multimodality: "Text itself can express a lot of information about the world, but it is incomplete after all, because we also live in a visual world." Some industry experts therefore believe that GPT-4 will be multimodal and able to accept audio, text, image, and even video inputs, and they predict that OpenAI's Whisper speech recognition model will be used to turn audio into text data for GPT-4. This would also mean that GPT-4 faces far fewer limitations in receiving and processing external information.

The industry is probably also paying attention to GPT-4 because its practical commercial threshold is expected to be lower than that of GPT-3. Enterprises that could not use the technology before because of its huge costs and infrastructure requirements are expected to be able to use GPT-4. GPT-4 is reportedly in the final stage before launch and will be released between December this year and February next year. Alberto Garcia, an analyst at Cambridge AI Research, predicted in a blog post: "GPT-4 will focus more on optimizing data processing, so the training cost of GPT-4 is expected to be lower than that of GPT-3. The cost per training run will probably fall from several million dollars for GPT-3 to about $1 million."

If all of the above is true, we can already foresee that, with the release of GPT-4, deep learning research will see a new wave next year. Large numbers of chat service robots that are more advanced, more natural, and almost impossible to distinguish from humans will likely appear across industries. On that basis, more high-quality personalized AI services will emerge from traditional businesses, and we will most likely achieve barrier-free communication with cognitive intelligence for the first time.

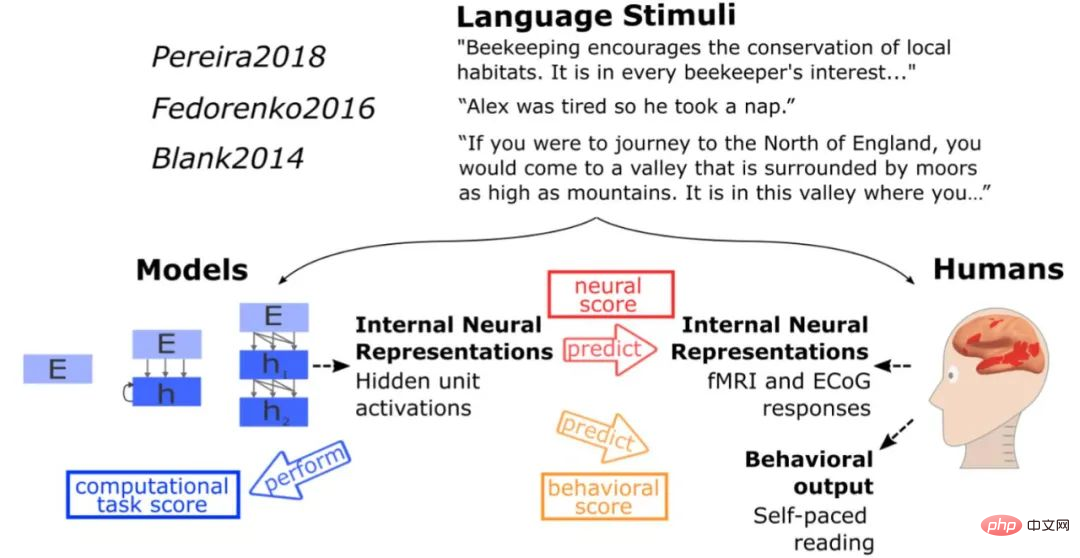

Let's return to the question raised at the beginning about building or simulating a human brain. According to a study by MIT, although the neural network in GPT-3 does not attempt to imitate the human brain directly, the way GPT-3 processes language shows certain similarities to the solutions the human brain arrived at through evolution. When the model was given the same stimuli presented to human test subjects, it produced the same type of activations as the human brain, and in more than 40 language model tests GPT-3 made almost perfect inferences. The basic function of these models does resemble that of the brain's language processing center. On this point, Daniel Yamins, assistant professor of psychology and computer science at Stanford University, said: "The artificial intelligence network does not directly imitate the brain, but it ends up looking like the brain. In a sense, this suggests that some kind of convergent evolution is taking place between artificial intelligence and nature."

Source: Internet

It can be seen that although the GPT models do not adopt the Blue Brain Project's design approach of simulating the brain's structure, their results seem to come closer to our expectations than the Blue Brain Project does. Therefore, if this research direction is truly feasible and GPT-4 achieves a leap forward over GPT-3 in some respects, we will be one step closer to the goal of simulating some functions of the human brain.

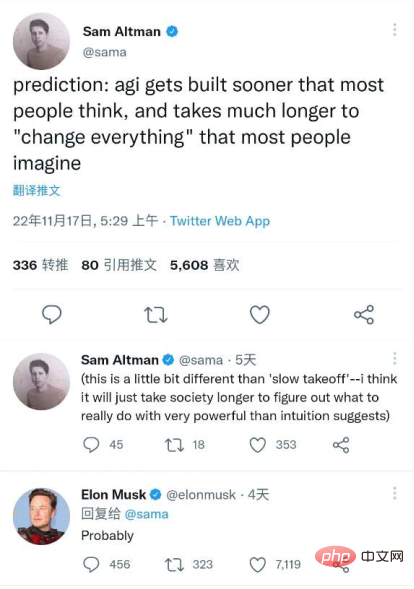

Finally, I would like to end with a recent Twitter post by OpenAI CEO Sam Altman, one that "Silicon Valley Iron Man" Elon Musk also agreed with: "Artificial general intelligence will be built faster than most people think, and it will take much longer to 'change everything' than most people imagine."

Source: Twitter

https://dzone.com/articles/what-can-you-do-with-the-openai-gpt-3-language-mod

https://analyticsindiamag.com/gpt-4-is-almost-here-and-it-looks-better-than-anything-else/

https://analyticsindiamag.com/openais-whisper-might-hold-the-key-to-gpt4/
