Generating images from a single natural image has a wide range of uses and has therefore attracted growing attention. This line of research aims to learn an unconditional generative model from a single natural image that captures the internal statistics of its patches and can then generate diverse samples with similar visual content. Once trained, such a model can not only generate high-quality, resolution-independent images, but can also be easily adapted to a variety of applications, such as image editing, image harmonization, and image-to-image translation.

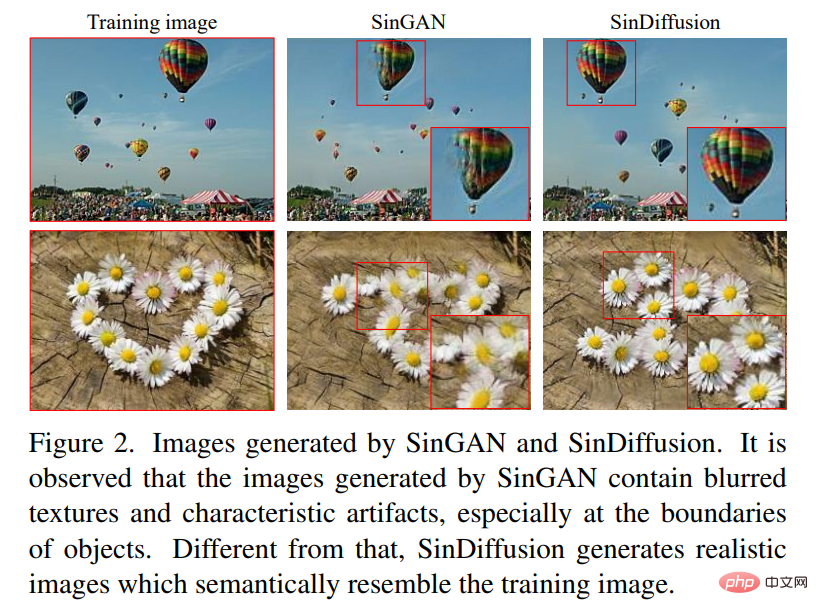

SinGAN meets these requirements. It builds a multi-scale pyramid of the natural image and trains a series of GANs to learn the internal patch statistics of that single image. The core idea of SinGAN is to train multiple models at progressively increasing scales. However, the images generated this way can be unsatisfactory: small-scale detail errors accumulate across scales and produce obvious artifacts in the generated images (see Figure 2).

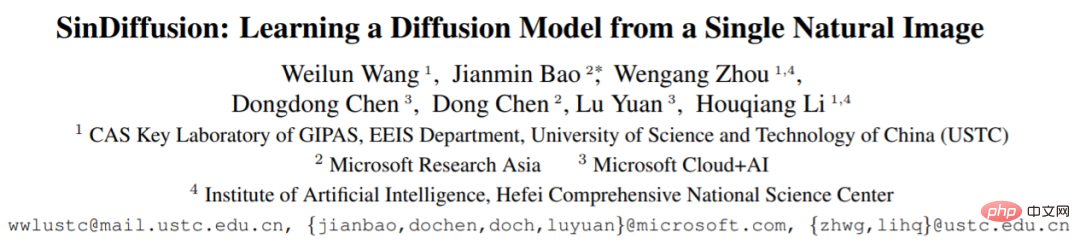

In this article, researchers from the University of Science and Technology of China, Microsoft Research Asia and other institutions propose a new framework, Single-image Diffusion (SinDiffusion), for learning from a single natural image. It is based on the Denoising Diffusion Probabilistic Model (DDPM). Although a diffusion model generates through multiple steps, it does not suffer from cumulative errors. The reason is that the diffusion model rests on a systematic mathematical formulation, so errors in intermediate steps can be treated as noise and are refined away during the diffusion process.
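To make the "systematic mathematical formulation" concrete, here is a minimal sketch of the standard DDPM forward process, in which the noisy image at any step t has a closed form, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where ᾱ_t is the cumulative product of (1 − β_t). The linear β schedule (1e-4 to 0.02 over 1000 steps) is the default of the original DDPM paper and is an assumption here, not a setting confirmed for SinDiffusion; images are flattened to plain Python lists for brevity.

```python
import math
import random

def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (original DDPM defaults, assumed here)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def q_sample(x0, t, abar, rng=random):
    """Draw x_t ~ q(x_t | x_0) in one shot for a flattened image x0."""
    a = abar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]

betas = linear_beta_schedule()
abar = alpha_bars(betas)
x0 = [0.5, -0.2, 0.9]  # toy 3-pixel "image" with values in [-1, 1]
xt = q_sample(x0, 999, abar, random.Random(0))  # nearly pure noise at the last step
```

Because every x_t is tied to x_0 by this fixed Gaussian relation, the reverse (denoising) steps are all trained against the same well-defined target rather than against the output of a separately trained coarser model.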

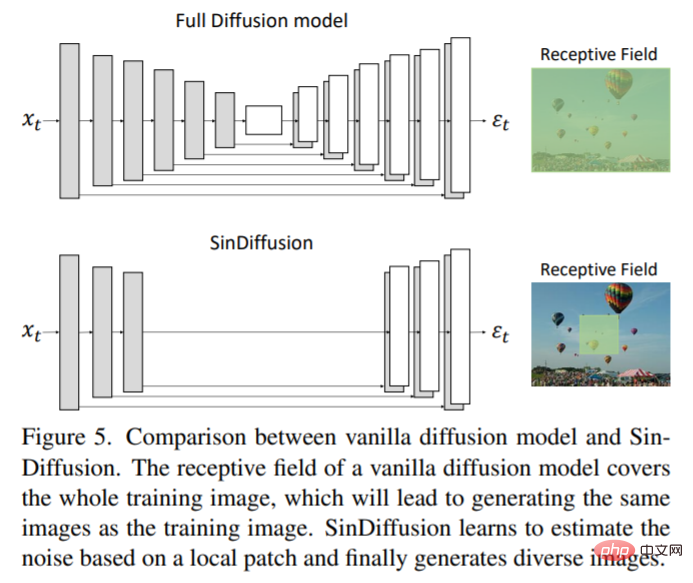

Another core design of SinDiffusion is to limit the receptive field of the diffusion model. The study reviewed the network structure commonly used in previous diffusion models [7] and found that its deep structure gives it strong performance. However, its receptive field is large enough to cover the entire image, so the model tends to memorize the training image and generate images that are identical to it. To encourage the model to learn patch statistics instead of memorizing the entire image, the study carefully redesigned the network structure and introduced a patch-wise denoising network. Compared with the previous diffusion structure, SinDiffusion reduces both the number of downsampling operations and the number of ResBlocks in the original denoising network. In this way, SinDiffusion can learn from a single natural image and generate high-quality, diverse images (see Figure 2).
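Why fewer downsamplings and fewer ResBlocks shrink the receptive field can be checked with the standard receptive-field recursion for a stack of convolutions: each layer with kernel size k adds (k − 1) × (current stride product) pixels. The sketch below assumes a simplified, hypothetical UNet encoder in which every ResBlock is two 3×3 convolutions and every downsampling is a stride-2 3×3 convolution; it ignores the decoder path, so real networks have larger receptive fields. The two configurations are illustrative, not the exact ones used in [7] or in SinDiffusion.

```python
def receptive_field(layers):
    """Receptive field of a conv stack; layers is a list of (kernel, stride)."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # growth is scaled by the accumulated stride
        jump *= stride
    return rf

def unet_encoder_layers(num_downsamples, res_blocks_per_level):
    """Hypothetical encoder: ResBlocks = two 3x3 convs, downsampling = stride-2 3x3 conv."""
    layers = []
    for level in range(num_downsamples + 1):
        for _ in range(res_blocks_per_level):
            layers += [(3, 1), (3, 1)]
        if level < num_downsamples:
            layers.append((3, 2))
    return layers

deep = receptive_field(unet_encoder_layers(num_downsamples=4, res_blocks_per_level=2))
shallow = receptive_field(unet_encoder_layers(num_downsamples=1, res_blocks_per_level=1))
print(deep, shallow)  # 279 15
```

Each extra downsampling doubles the step size of every later layer, which is why cutting downsamplings shrinks the receptive field much faster than cutting ResBlocks alone.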

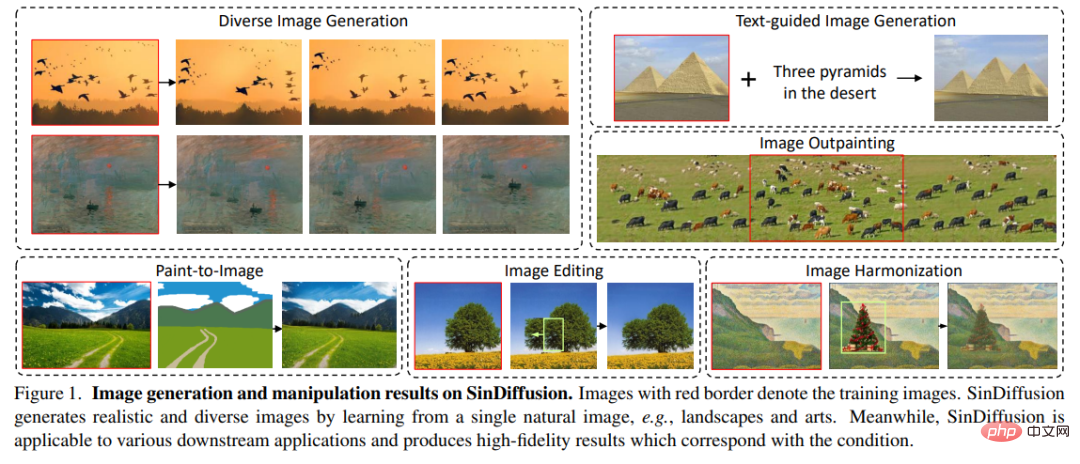

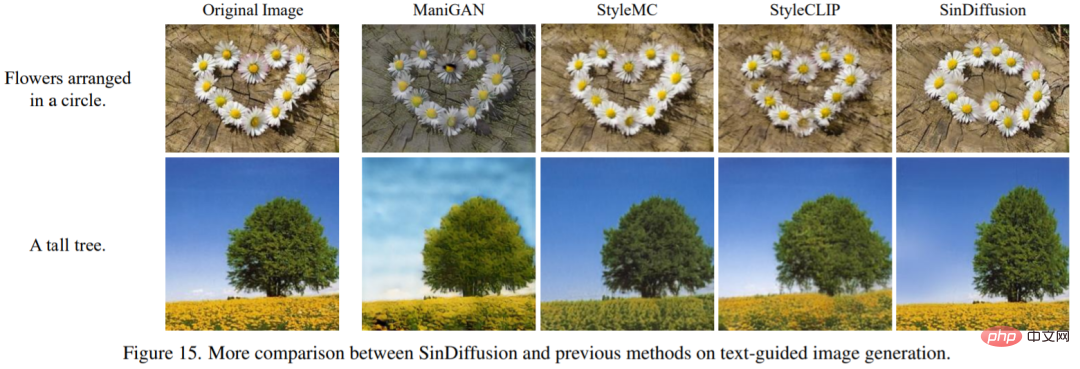

The advantage of SinDiffusion is that it can be used flexibly in various scenarios (see Figure 1) without any retraining of the model. In SinGAN, downstream applications are mainly implemented by feeding conditions into the pre-trained GANs at different scales, so SinGAN's applications are limited to those with spatially aligned conditions. In contrast, SinDiffusion can serve a wider range of applications through the design of its sampling procedure. Through unconditional training, SinDiffusion learns to predict the gradient of the data distribution. Given a scoring function that measures the correspondence between a generated image and a condition (e.g., an Lp distance or a pre-trained network such as CLIP), the study uses the gradient of this score to guide SinDiffusion's sampling process. In this way, SinDiffusion generates images that fit both the data distribution and the given condition.
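The guided sampling idea can be sketched as one DDPM reverse step whose mean is shifted against the gradient of a distance to the condition, in the style of classifier guidance: mean ← mean − s · σ_t² · ∇‖x_t − c‖². This is an illustration under stated assumptions, not the paper's exact formula: the squared L2 distance stands in for the scoring function, σ_t² = β_t is one common DDPM choice, the guidance scale `scale` and linear schedule are assumed, and `dummy_eps_model` is a hypothetical placeholder for the trained denoising network.

```python
import math
import random

def linear_betas(T, beta_start=1e-4, beta_end=0.02):
    # Assumed linear schedule; SinDiffusion's actual schedule may differ.
    return [beta_start + (beta_end - beta_start) * t / max(T - 1, 1) for t in range(T)]

def cumulative_alphas(betas):
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def l2_distance_grad(x, cond):
    """Gradient of the squared L2 distance ||x - cond||^2 with respect to x."""
    return [2.0 * (xi - ci) for xi, ci in zip(x, cond)]

def guided_reverse_step(x_t, t, betas, abar, eps_model, cond, scale, rng):
    """One DDPM reverse step whose mean is nudged toward the condition."""
    beta = betas[t]
    eps = eps_model(x_t, t)
    # Standard DDPM mean computed from the predicted noise.
    mean = [(xi - beta / math.sqrt(1.0 - abar[t]) * ei) / math.sqrt(1.0 - beta)
            for xi, ei in zip(x_t, eps)]
    var = beta  # sigma_t^2 = beta_t, one common choice
    # Guidance: step against the distance gradient, scaled by the step variance.
    grad = l2_distance_grad(x_t, cond)
    mean = [m - scale * var * g for m, g in zip(mean, grad)]
    if t == 0:
        return mean  # no noise is added on the final step
    return [m + math.sqrt(var) * rng.gauss(0.0, 1.0) for m in mean]

def dummy_eps_model(x, t):
    # Hypothetical stand-in for the trained denoising network.
    return [0.0] * len(x)

def sample(cond, T=50, scale=5.0, seed=0):
    rng = random.Random(seed)
    betas = linear_betas(T)
    abar = cumulative_alphas(betas)
    x = [rng.gauss(0.0, 1.0) for _ in cond]  # start from pure noise
    for t in reversed(range(T)):
        x = guided_reverse_step(x, t, betas, abar, dummy_eps_model, cond, scale, rng)
    return x
```

Swapping `l2_distance_grad` for the gradient of a CLIP-based similarity would give text guidance in the same loop; only the scoring function changes, not the trained model.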

The study conducted experiments on various natural images to demonstrate the advantages of the proposed framework. The test images include landscapes and famous artworks. Both quantitative and qualitative results confirm that SinDiffusion can produce high-fidelity and diverse results, while downstream applications further demonstrate its utility and flexibility.

Unlike the progressive-growth design of previous studies, SinDiffusion trains a single denoising model at a single scale, which prevents the accumulation of errors. In addition, the study found that the patch-level receptive field of the diffusion network plays an important role in capturing the internal patch distribution, and it designed a new denoising network structure accordingly. Based on these two core designs, SinDiffusion generates high-quality and diverse images from a single natural image.

The rest of this article is organized as follows: it first reviews SinGAN to motivate SinDiffusion, and then introduces the structural design of SinDiffusion.

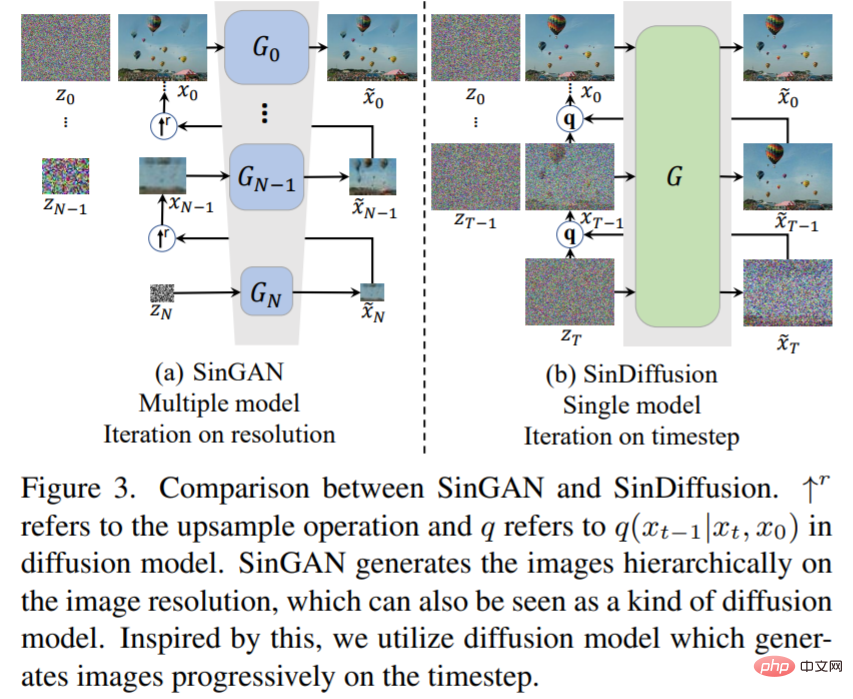

First, let’s briefly review SinGAN. Figure 3(a) shows the generation process of SinGAN. In order to generate different images from a single image, a key design of SinGAN is to build an image pyramid and gradually increase the resolution of the generated images.
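The image pyramid at the heart of SinGAN can be sketched as a list of image sizes, each a fixed factor smaller than the next, with a separate generator trained at every size. The scale factor of 0.75 and minimum side of 25 pixels below are assumed illustrative values, close to those commonly used with SinGAN; the function name `pyramid_shapes` is hypothetical.

```python
def pyramid_shapes(height, width, scale_factor=0.75, min_size=25):
    """Sizes of a SinGAN-style pyramid, coarsest first.

    Keeps shrinking by scale_factor until the short side would drop below min_size.
    """
    shapes = []
    h, w = float(height), float(width)
    while min(h, w) >= min_size:
        shapes.append((round(h), round(w)))
        h *= scale_factor
        w *= scale_factor
    return shapes[::-1]  # generation starts at the coarsest scale

print(pyramid_shapes(100, 100, scale_factor=0.5))  # [(25, 25), (50, 50), (100, 100)]
```

In SinGAN, the output at each size is upsampled and refined by the next generator, so a detail error made at one scale is passed on to every finer scale; this is the accumulation that SinDiffusion's single-scale design avoids.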

Figure 3(b) shows the new framework of SinDiffusion. Unlike SinGAN, SinDiffusion performs a multi-step generation process using a single denoising network at a single scale. Although SinDiffusion, like SinGAN, generates its results over multiple steps, the results are of high quality. This is because the diffusion model is built on a systematic mathematical derivation, and errors introduced in intermediate steps are treated as noise and repeatedly refined during the diffusion process.

SinDiffusion

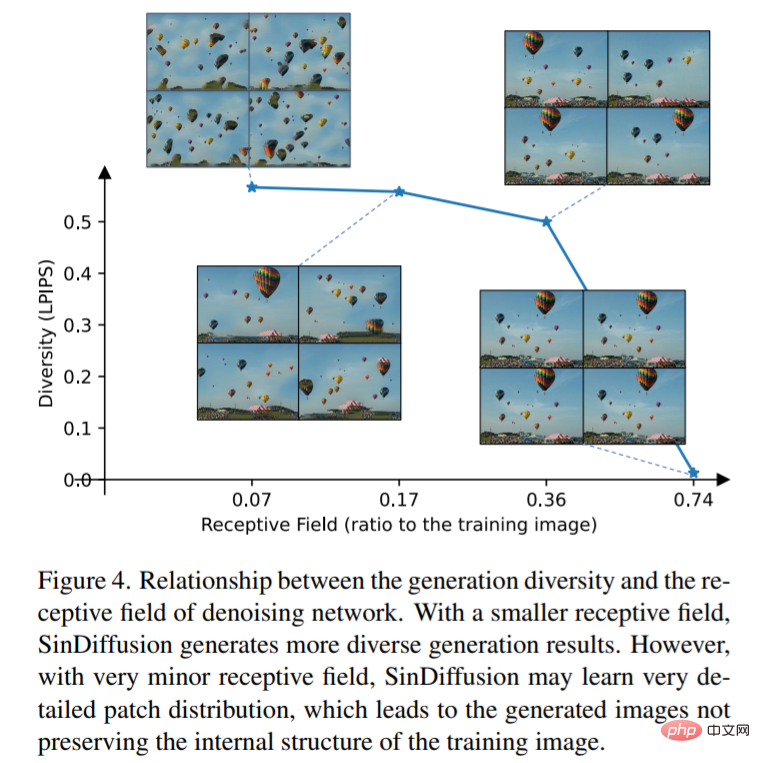

The article studies the relationship between generation diversity and the receptive field of the denoising network. Because modifying the structure of the denoising network changes its receptive field, the study designed four network structures with different receptive fields but comparable performance and trained each of them on a single natural image. Figure 4 shows the results generated by the models with different receptive fields. The smaller the receptive field, the more diverse the results produced by SinDiffusion, and vice versa. However, the study also found that models with an extremely small receptive field cannot maintain a reasonable image structure. Therefore, a suitable receptive field is necessary to obtain reasonable patch statistics.

The study redesigns the commonly used diffusion network and introduces a patch-wise denoising network for single-image generation. Figure 5 gives an overview of the patch-wise denoising network in SinDiffusion and shows the main differences from previous denoising networks. First, the depth of the denoising network is reduced by cutting down the downsampling and upsampling operations, which greatly narrows the receptive field. At the same time, the attention layers originally used in the deep levels of the denoising network are naturally removed, making SinDiffusion a fully convolutional network suitable for generation at any resolution. Second, the receptive field of SinDiffusion is further limited by reducing the number of time-embedded ResBlocks at each resolution. In this way, the study obtains a patch-wise denoising network with an appropriate receptive field, achieving realistic and diverse results.
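The claim that a fully convolutional network works at any resolution follows from the fact that a convolution's weights depend only on the kernel size, not on the input size. The toy single-layer sketch below (a zero-padded "same" 2D convolution written as cross-correlation, as deep learning libraries do) applies one fixed kernel to inputs of two different widths; it is an illustration of the principle, not SinDiffusion's network.

```python
def conv2d_same(image, kernel):
    """Zero-padded 'same' 2D cross-correlation on a list-of-lists image."""
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    H, W = len(image), len(image[0])
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    y, x = i + u - ph, j + v - pw
                    if 0 <= y < H and 0 <= x < W:  # zero padding outside the image
                        acc += image[y][x] * kernel[u][v]
            out[i][j] = acc
    return out

blur = [[1 / 9] * 3 for _ in range(3)]  # the same 3x3 weights for every input size
small = conv2d_same([[1.0] * 4 for _ in range(4)], blur)    # 4 x 4 input
wide = conv2d_same([[1.0] * 10 for _ in range(4)], blur)    # 4 x 10 input
print(len(small[0]), len(wide[0]))  # 4 10
```

Since nothing in the layer is tied to the input shape, a network built only from such layers can be trained at one resolution and sampled at another.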

The qualitative results of SinDiffusion’s randomly generated images are shown in Figure 6.

At different resolutions, SinDiffusion generates realistic images with patterns similar to those of the training image.

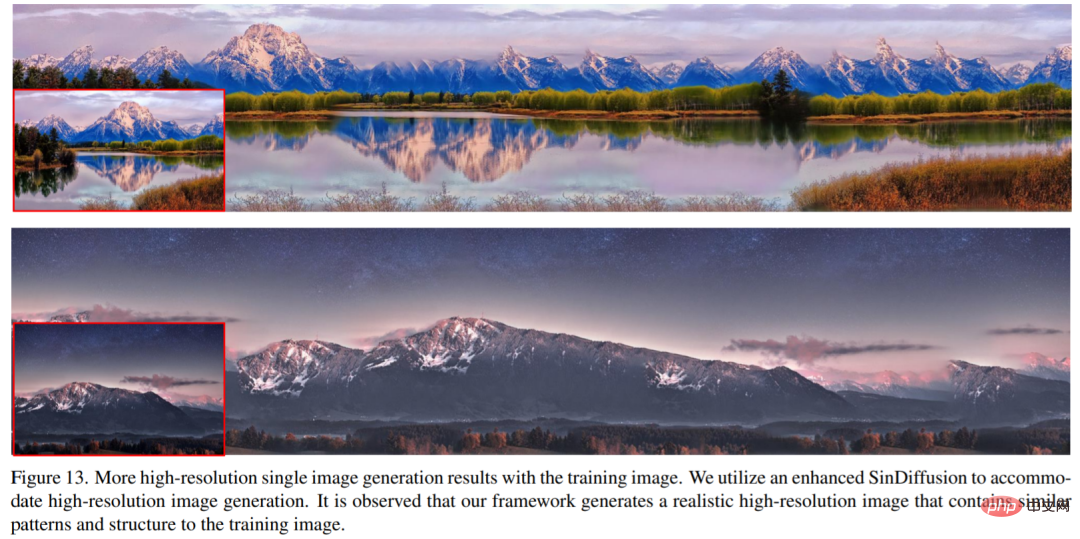

In addition, the article studies using SinDiffusion to generate high-resolution images from a single image. Figure 13 shows the training image and the generated results. The training image is a 486 × 741 landscape containing rich components such as clouds, mountains, grass, flowers, and a lake. To accommodate high-resolution generation, SinDiffusion was upgraded to an enhanced version with a larger receptive field and greater network capacity. The enhanced SinDiffusion generates a high-resolution long-scroll image at 486 × 2048. The result keeps the internal layout of the training image while generating new content, as shown in Figure 13.

Comparison with previous methods

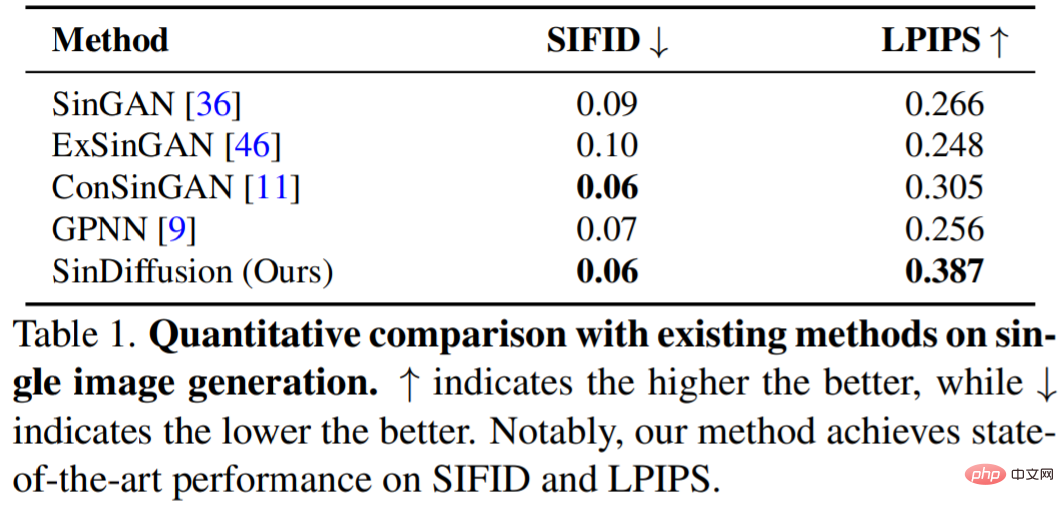

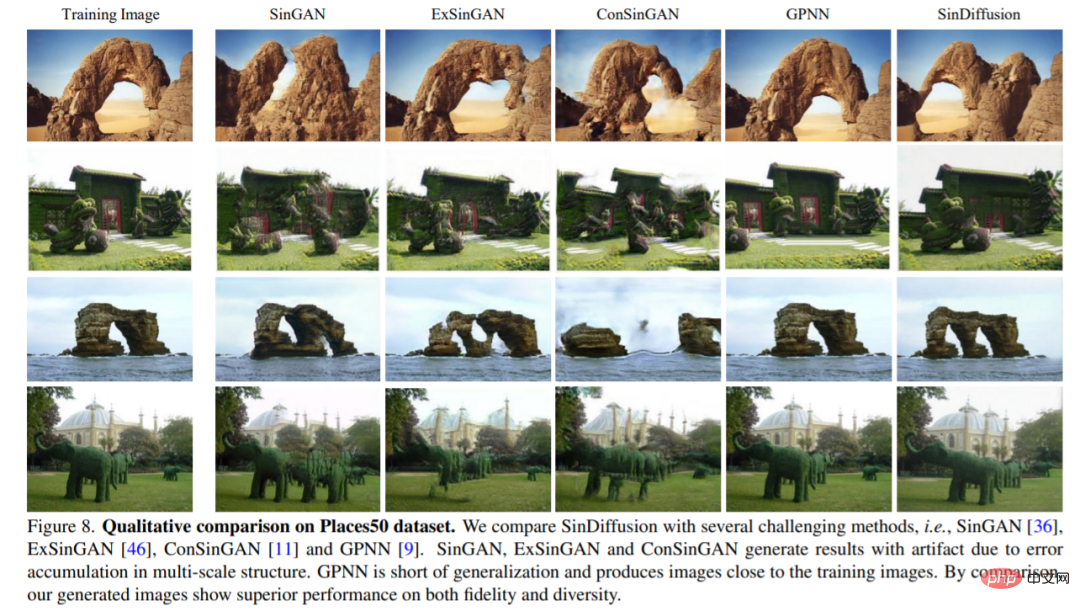

Table 1 compares the quantitative results of SinDiffusion with several challenging methods (SinGAN, ExSinGAN, ConSinGAN and GPNN). Compared with previous GAN-based methods, SinDiffusion achieves state-of-the-art performance. Notably, the method greatly improves the diversity of the generated images: averaged over 50 models trained on the Places50 dataset, it surpasses the strongest previous method by 0.082 in LPIPS.
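LPIPS diversity is commonly computed as the average LPIPS distance over all pairs of images generated from the same model; that this is exactly the protocol behind Table 1 is an assumption. Real LPIPS needs a pretrained network, so the sketch below takes the distance as a parameter and uses a hypothetical placeholder, the mean absolute pixel difference, to keep it self-contained.

```python
from itertools import combinations

def mean_pairwise_distance(samples, dist):
    """Average of dist(a, b) over all unordered pairs of generated samples."""
    pairs = list(combinations(samples, 2))
    if not pairs:
        raise ValueError("need at least two samples")
    return sum(dist(a, b) for a, b in pairs) / len(pairs)

def mean_abs_diff(a, b):
    """Placeholder for LPIPS: mean absolute difference of flattened pixels."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

samples = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]  # three toy 2-pixel images
score = mean_pairwise_distance(samples, mean_abs_diff)
```

A model that memorizes the training image produces near-identical samples and a score near zero, which is why a higher value here indicates more diverse generation. Per-model scores would then be averaged over all training images (50 in Places50).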

In addition to the quantitative results, Figure 8 also shows the qualitative results on the Places50 dataset.

Figure 15 shows the text-guided image generation results of SinDiffusion and previous methods.

Please see the original paper for more information.
