In the past decade, the landscape of machine learning software development has undergone significant changes. Many frameworks have sprung up, but most rely heavily on NVIDIA's CUDA and get the best performance on NVIDIA's GPUs. However, with the arrival of PyTorch 2.0 and OpenAI Triton, Nvidia’s dominance in this field is being broken.

Google had great early advantages in machine learning model architecture, training, and model optimization, but it now struggles to make full use of them. On the hardware side, other AI hardware companies have found it difficult to weaken Nvidia's dominance. With the arrival of PyTorch 2.0 and OpenAI Triton, however, the default software stack for machine learning models will no longer be Nvidia's closed-source CUDA.

A similar competition played out among machine learning frameworks. A few years ago, the framework ecosystem was quite fragmented, but TensorFlow was the front-runner. On the surface, Google seemed to hold a firm lead in machine learning frameworks. It paired TensorFlow with the TPU, its AI application-specific accelerator, and thus gained a first-mover advantage.

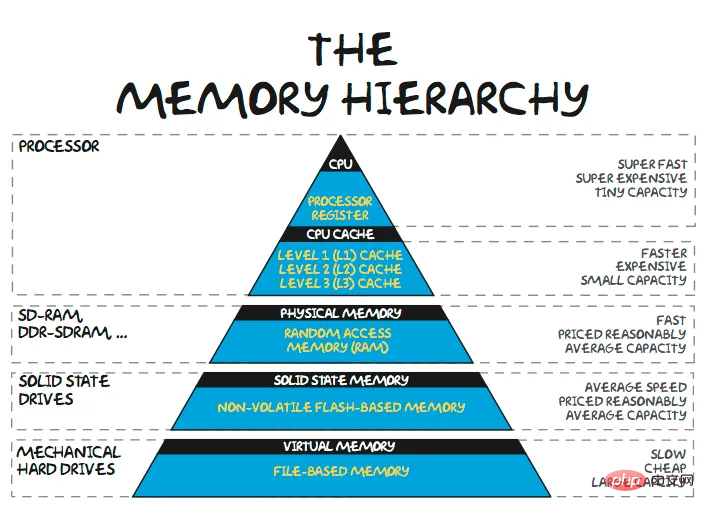

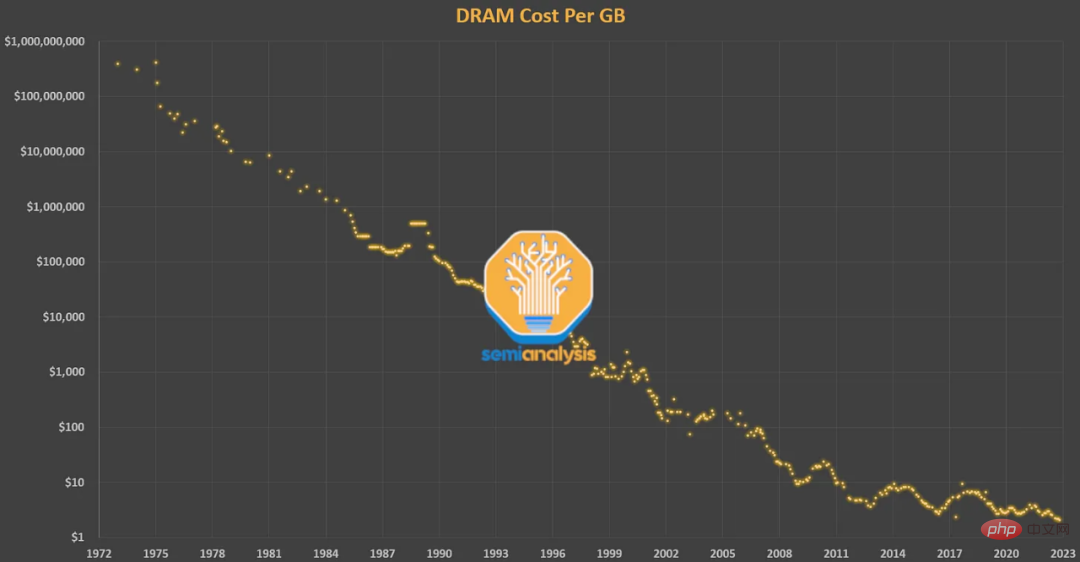

In 2018, the most advanced model was BERT, and the NVIDIA V100 was the most advanced GPU. By then, matrix multiplication was already no longer the main factor in improving model performance. Since then, models have grown by 3 to 4 orders of magnitude in parameter count, while the fastest GPUs have grown by only 1 order of magnitude in FLOPS.

Even in 2018, purely compute-bound workloads accounted for 99.8% of FLOPS but only 61% of runtime. Normalization and pointwise ops use only 1/250 and 1/700 of the FLOPS of matrix multiplication, respectively, yet they consume nearly 40% of the model's runtime.

Increasing the GPU's FLOPS will not help if all the time is spent on memory transfers (i.e., the workload is memory-bandwidth bound). On the other hand, if all your time is spent executing large matmuls, then even rewriting the model logic in C++ to reduce overhead will not help.
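The distinction between the two regimes can be made concrete with a rough back-of-the-envelope estimate. The sketch below compares the time a kernel would need for its arithmetic with the time it would need for its memory traffic. The accelerator figures (`PEAK_FLOPS`, `PEAK_BANDWIDTH`) are illustrative assumptions, not the specification of any real chip, and the model ignores caches and overlap.

```python
def arithmetic_intensity(flops: float, bytes_moved: float) -> float:
    """FLOPs performed per byte read from or written to memory."""
    return flops / bytes_moved


def bound_by(flops: float, bytes_moved: float,
             peak_flops: float, peak_bandwidth: float) -> str:
    """Classify a kernel by comparing its compute time with its memory time."""
    compute_time = flops / peak_flops
    memory_time = bytes_moved / peak_bandwidth
    return "compute" if compute_time > memory_time else "memory"


# Hypothetical accelerator (illustrative numbers, not a real product).
PEAK_FLOPS = 100e12      # 100 TFLOP/s
PEAK_BANDWIDTH = 1e12    # 1 TB/s

BYTES_PER_ELEMENT = 2    # fp16
n = 4096

# Square matmul: 2*n^3 FLOPs; reads two n*n matrices and writes one.
matmul_flops = 2 * n**3
matmul_bytes = 3 * n * n * BYTES_PER_ELEMENT

# Pointwise add of two n*n tensors: n^2 FLOPs, same memory traffic.
add_flops = n * n
add_bytes = 3 * n * n * BYTES_PER_ELEMENT

matmul_bound = bound_by(matmul_flops, matmul_bytes, PEAK_FLOPS, PEAK_BANDWIDTH)
add_bound = bound_by(add_flops, add_bytes, PEAK_FLOPS, PEAK_BANDWIDTH)
print(matmul_bound, add_bound)
```

Under these assumptions the matmul is limited by compute and the pointwise add by memory bandwidth, so extra FLOPS would speed up only the former.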

PyTorch won out over TensorFlow largely because Eager mode improved flexibility and usability, but Eager mode is not without cost. When running in Eager mode, each operation reads its inputs from memory, computes, and writes its result back to memory before the next operation is processed. Without extensive optimization, this can significantly increase memory bandwidth requirements.

So for models executed in Eager mode, one of the main optimization methods is operator fusion. Instead of writing each intermediate result to memory, fused operations compute multiple functions in a single pass to minimize memory reads and writes. Operator fusion improves operator scheduling, memory bandwidth, and memory size costs.
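To illustrate why fusion saves bandwidth, here is a toy sketch in plain Python. `CountingMemory` is a hypothetical stand-in for device memory that simply counts element reads and writes; it is not how a GPU or PyTorch works internally, only a way to compare traffic for three separate pointwise "kernels" against one fused pass.

```python
class CountingMemory:
    """Toy stand-in for device memory that counts element reads and writes."""

    def __init__(self):
        self.reads = 0
        self.writes = 0

    def load(self, buf):
        self.reads += len(buf)
        return list(buf)

    def store(self, values):
        self.writes += len(values)
        return list(values)


def unfused(x, mem):
    """Three separate 'kernels': each loads its input and stores its output."""
    a = mem.store([v * 2.0 for v in mem.load(x)])
    b = mem.store([v + 1.0 for v in mem.load(a)])
    return mem.store([max(v, 0.0) for v in mem.load(b)])


def fused(x, mem):
    """One 'kernel': intermediates stay local and never touch memory."""
    return mem.store([max(v * 2.0 + 1.0, 0.0) for v in mem.load(x)])


x = [-2.0, -0.5, 0.0, 1.5]
mem_unfused, mem_fused = CountingMemory(), CountingMemory()
y_unfused = unfused(x, mem_unfused)
y_fused = fused(x, mem_fused)

print(mem_unfused.reads, mem_unfused.writes)  # traffic for the unfused chain
print(mem_fused.reads, mem_fused.writes)      # traffic for the fused pass
```

Both versions produce the same result, but in this toy model the fused version touches memory one third as often, because the two intermediate tensors are never materialized.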

This kind of optimization usually involves writing a custom CUDA kernel, which is much harder than writing a simple Python script. Over time, more and more operators have been steadily implemented in PyTorch, many of which simply combine multiple common operations into a more complex function.

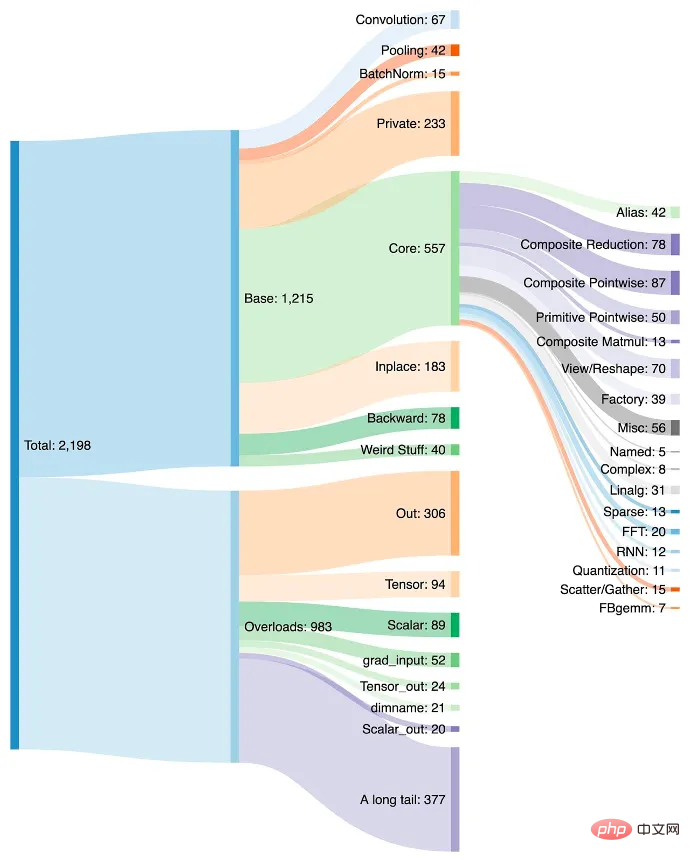

The addition of operators makes it easier to create models in PyTorch, and Eager mode performs faster due to fewer memory reads/writes. The downside is that PyTorch has exploded to over 2000 operators within a few years.

One could say that software developers are lazy, but to be honest, who is not? Once they get used to a new operator in PyTorch, they keep using it. A developer may not even realize that performance has improved, and simply continues to use the operator because it means writing less code.

In addition, not all operators can be fused. Deciding which operations to combine and which to allocate to specific computing resources at the chip and cluster levels takes a lot of time. Although the strategies for where operators are fused are generally similar, they can vary greatly due to different architectures.

The growth in operators, and PyTorch's position as the default, is an advantage for NVIDIA, because each operator is quickly optimized for NVIDIA's architecture but not for any other hardware. If an AI hardware startup wanted to fully implement PyTorch, that would mean supporting a growing list of 2,000 operators with high performance.

Because extracting maximum performance requires so much skill, training large models with high FLOPS utilization on GPUs demands an increasingly high level of talent. Eager mode execution plus operator fusion means that the software, techniques, and models being developed are constantly pushed to fit the compute-to-memory ratios of current-generation GPUs.

Everyone developing a machine learning chip is constrained by the same memory wall. ASICs are bound to supporting the most commonly used frameworks and to the default development method: GPU-optimized PyTorch code with a mix of NVIDIA and external libraries. In this case, it makes little sense to have an architecture that eschews the various non-computational baggage of the GPU in favor of more FLOPS and a stricter programming model.

Ease of use comes first, however. The only way to break the vicious cycle is for the software that runs models on Nvidia's GPUs to transfer as easily and seamlessly as possible to other hardware. As model architectures stabilize and abstractions from PyTorch 2.0, OpenAI Triton, and MLOps companies such as MosaicML become the default, the architecture and economics of the chip solution start to become the biggest drivers of purchase, rather than the ease of use provided by Nvidia's superior software.

A few months ago, the PyTorch Foundation was established and separated from Meta. Alongside the change to an open development and governance model, PyTorch 2.0 was released in early beta and became generally available in March. PyTorch 2.0 brings many changes, but the main difference is that it adds a compiled solution that supports a graph execution model. This shift will make it easier to properly utilize various hardware resources.

PyTorch 2.0 improves training performance by 86% on NVIDIA A100 and inference performance on CPU by 26%. This significantly reduces the computational time and cost required to train the model. These benefits extend to other GPUs and accelerators from AMD, Intel, Tenstorrent, Luminous Computing, Tesla, Google, Amazon, Microsoft, Marvell, Meta, Graphcore, Cerebras, SambaNova, and more.

For currently unoptimized hardware, PyTorch 2.0 has greater room for performance improvement. Meta and other companies are making such huge contributions to PyTorch because they want to achieve higher FLOPS utilization with less effort on their multi-billion dollar GPU training clusters. This way they also have an incentive to make their software stacks more portable to other hardware, introducing competition into the machine learning space.

With the help of better APIs, PyTorch 2.0 also supports data parallelism, sharding, pipeline parallelism, and tensor parallelism, bringing progress to distributed training. Additionally, it supports dynamic shapes natively across the stack, which, among many other examples, makes it easier to support different sequence lengths for LLMs. This is the first time that a major compiler has supported dynamic shapes from training to inference.

Writing a high-performance backend for PyTorch that fully supports all 2000+ operators is no easy task for any machine learning ASIC other than NVIDIA GPUs. PrimTorch reduces the number of operators to approximately 250 primitive operators while maintaining the same usability for PyTorch end users. PrimTorch makes implementing non-NVIDIA backends for PyTorch simpler and more accessible. Custom hardware and systems vendors can more easily roll out their software stacks.
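The idea of expressing a large operator surface through a small primitive set can be sketched in plain Python. The primitive names in `PRIMS` below are hypothetical and chosen only for illustration; the real PrimTorch primitive set is different and much larger. The point is that a backend which implements only the primitives can still run a composite operator such as softmax.

```python
import math

# Hypothetical primitive set for illustration; PrimTorch's real primitives differ.
PRIMS = {
    "amax": lambda xs: max(xs),
    "sub_scalar": lambda xs, s: [v - s for v in xs],
    "exp": lambda xs: [math.exp(v) for v in xs],
    "sum": lambda xs: math.fsum(xs),
    "div_scalar": lambda xs, s: [v / s for v in xs],
}


def call_prim(name, trace, *args):
    """Run one primitive and record its name, mimicking a traced graph node."""
    trace.append(name)
    return PRIMS[name](*args)


def softmax(xs, trace):
    """A composite operator expressed purely through the primitives above."""
    peak = call_prim("amax", trace, xs)
    shifted = call_prim("sub_scalar", trace, xs, peak)  # numerical stability
    exps = call_prim("exp", trace, shifted)
    total = call_prim("sum", trace, exps)
    return call_prim("div_scalar", trace, exps, total)


trace = []
probs = softmax([0.0, 0.0], trace)
print(probs)
print(trace)
```

The recorded `trace` contains only primitive names, which is the property a hardware vendor cares about: support the small set well, and the composite operators come along for free.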

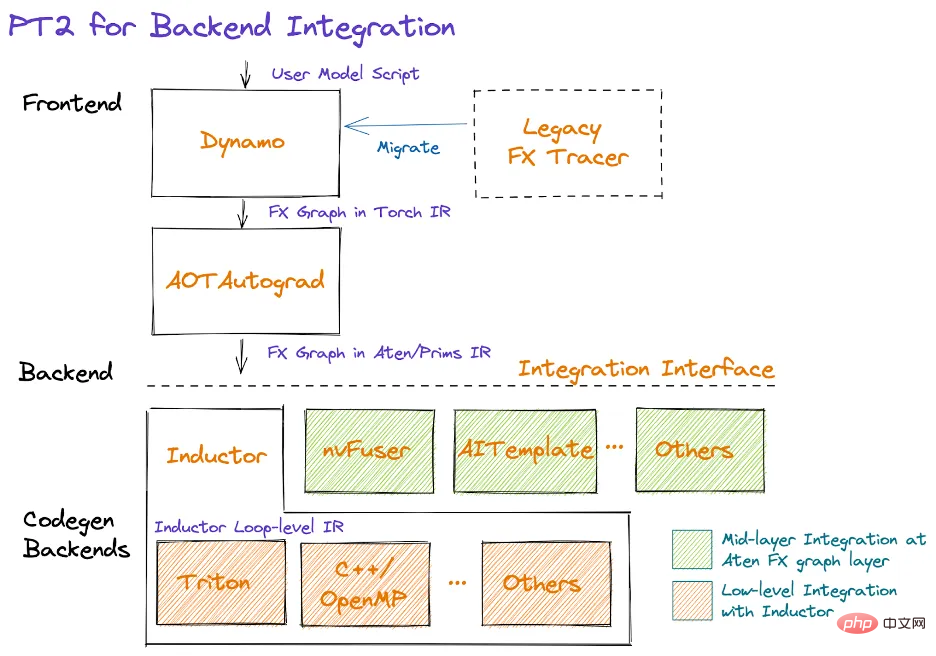

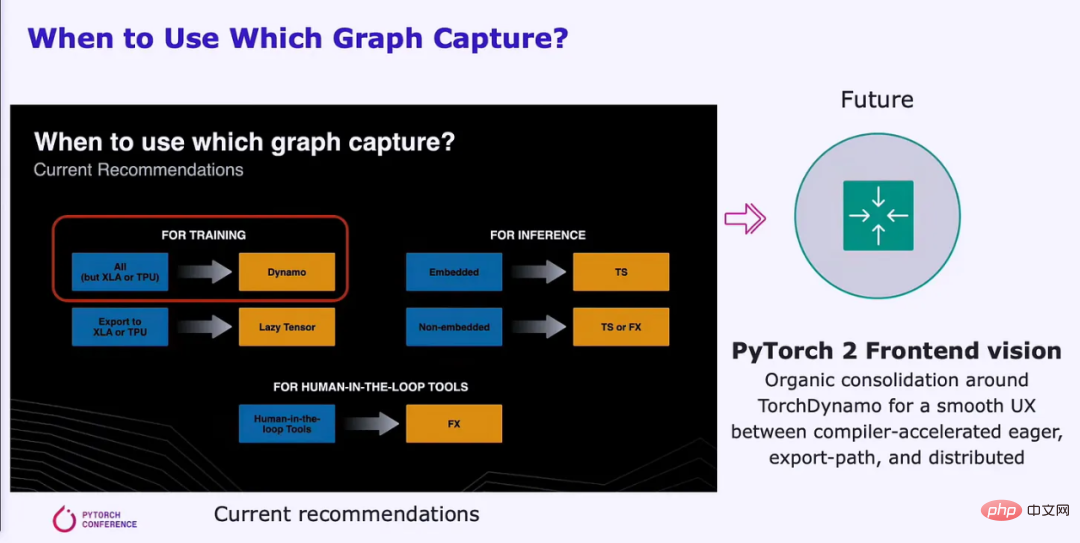

Moving to graph mode requires a reliable graph definition. Meta and PyTorch have been trying to make this shift for about 5 years, but every solution they came up with had significant shortcomings. Finally, they solved the problem with TorchDynamo. TorchDynamo ingests any PyTorch user script, including scripts that call external third-party libraries, and generates an FX graph.

Dynamo reduces all complex operators to the approximately 250 primitive operators in PrimTorch. Once the graph is formed, unused operations are discarded, and the graph determines which intermediate operations need to be stored or written to memory and which ones may be fused. This greatly reduces overhead within the model while being "seamless" to the user.

Of the 7000 PyTorch models tested, TorchDynamo worked on more than 99%, including models from OpenAI, HuggingFace, Meta, NVIDIA, Stability.AI, and others, without the need to make any changes to the original code. The 7000 models tested were randomly selected from the most popular projects using PyTorch on GitHub.

Google's TensorFlow/Jax and other graph mode execution pipelines often require users to ensure that their models fit the compiler architecture so that the graph can be captured. Dynamo changes this by enabling partial graph capture, protected (guarded) graph capture, and just-in-time recapture.

Partial graph capture allows models to contain unsupported or non-Python constructs. When a graph cannot be generated for part of a model, a graph break is inserted, and the unsupported constructs are executed in eager mode between the partial graphs.

Protected (guarded) graph capture checks whether the captured graph is still valid for execution. A "guard" is a check for a change that would require recompilation. This is important because running the same code multiple times should not trigger recompilation each time. Just-in-time recapture allows the graph to be re-captured if the captured graph is no longer valid for execution.
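The check-then-recapture behaviour described above can be sketched as a small cache. This is a toy model under stated assumptions, not TorchDynamo's implementation: `GuardedCache` and `capture_scale` are hypothetical names, and the only condition checked here is the input length, whereas a real system checks many more properties.

```python
class GuardedCache:
    """Toy model of guard-protected graph capture (names are hypothetical)."""

    def __init__(self, capture):
        self.capture = capture    # function: example input -> (guard, compiled)
        self.entries = []         # list of (guard, compiled) pairs
        self.compile_count = 0

    def __call__(self, x):
        for guard, compiled in self.entries:
            if guard(x):          # guard holds: reuse the captured graph
                return compiled(x)
        # No guard matched: recapture for this input and remember the result.
        guard, compiled = self.capture(x)
        self.compile_count += 1
        self.entries.append((guard, compiled))
        return compiled(x)


def capture_scale(x):
    """Pretend to specialize a 'graph' on the input length."""
    n = len(x)
    guard = lambda inp: len(inp) == n
    compiled = lambda inp: [2 * v for v in inp]
    return guard, compiled


fn = GuardedCache(capture_scale)
r1 = fn([1, 2, 3])   # first call: captures
r2 = fn([4, 5, 6])   # same length: guard passes, no recapture
r3 = fn([7, 8])      # different length: guard fails, recapture
print(fn.compile_count)
```

Repeated calls with inputs that satisfy an existing check reuse the earlier capture, and only an input that violates every stored check triggers a new one.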

The goal of PyTorch is to create a unified front end with a smooth UX that leverages Dynamo to generate graphs. The user experience of the solution does not change, but performance can be significantly improved. Captured graphs can be executed more efficiently in parallel on large amounts of computing resources.

Dynamo and AOT Autograd then pass the optimized FX graph to the PyTorch-native compiler layer, TorchInductor. Hardware companies can also feed this graph into their own backend compilers.

TorchInductor is a Python native deep learning compiler that can generate fast code for multiple accelerators and backends. Inductor will take FX graphs with about 250 operators and reduce them to about 50 operators. Next, the Inductor enters the scheduling phase, where operators are fused and memory planning is determined.

The Inductor then enters "Wrapper Codegen," which generates code that runs on a CPU, GPU, or other AI accelerator. The wrapper codegen replaces the interpreter part of the compiler stack and can call kernels and allocate memory. For GPUs, the backend code generation leverages OpenAI Triton and outputs PTX code. For CPUs, an Intel compiler generates C++ (this also works on non-Intel CPUs).

More hardware will be supported in the future, but the point is that Inductor greatly reduces the amount of work that compiler teams have to do when building compilers for their AI hardware accelerators. In addition, the generated code is better optimized for performance, and memory bandwidth and capacity requirements are significantly reduced.

What researchers need is not just a compiler that only supports GPUs, but also a compiler that supports various hardware backends.

OpenAI Triton is a disruptive presence for Nvidia's closed-source machine learning software. Triton takes its input either directly from Python or through the PyTorch Inductor stack, the latter being the most common usage. Triton then converts the input into an LLVM intermediate representation and generates code. For NVIDIA GPUs, it generates PTX code directly, skipping NVIDIA's closed-source CUDA libraries (such as cuBLAS) in favor of open-source libraries (such as cutlass).

CUDA is popular in the world of accelerated computing, but little known among machine learning researchers and data scientists. Using CUDA can present challenges and require a deep understanding of the hardware architecture, which can slow down the development process. As a result, machine learning experts may rely on CUDA experts to modify, optimize, and parallelize their code.

Triton makes up for this shortcoming, allowing high-level languages to achieve performance comparable to that of low-level languages. Triton kernels themselves are quite legible to the typical ML researcher, which is very important for usability. Triton automates memory coalescing, shared memory management, and scheduling within SMs. Triton is not particularly helpful for element-wise matrix multiplication, which is already done very efficiently. It is useful for expensive pointwise operations and for reducing the overhead of more complex operations.

OpenAI Triton currently only officially supports NVIDIA GPUs, but this will change in the near future to support multiple other hardware vendors. Other hardware accelerators can be integrated directly into Triton’s LLVM IR, which greatly reduces the time to build an AI compiler stack for new hardware.

Nvidia's huge software organization lacked the foresight to capitalize on its enormous advantage in ML hardware and software, and it failed to become the default compiler for machine learning. It lacked the focus on usability that allowed OpenAI and Meta to create software stacks that are portable to other hardware.

Original link: https://www.semianalysis.com/p/nvidiaopenaitritonpytorch
