Remote sensing imaging technology has made significant progress over the past few decades. With continuing improvements in spatial and spectral resolution, modern airborne sensors can now cover most of the Earth's surface. As a result, remote sensing plays a vital role in many research fields, including ecology, environmental science, soil science, water pollution, glaciology, and land surveying and analysis. Remote sensing data are often multi-modal, geolocated (tied to a position in geographic space), global in scale, and continually growing in volume, and these characteristics pose unique challenges for the automatic analysis of remote sensing imagery.

In many areas of computer vision, such as object recognition, detection, and segmentation, deep learning, and convolutional neural networks (CNNs) in particular, has become the mainstream approach. CNNs typically take RGB images as input and apply a series of convolution, local normalization, and pooling operations. They usually rely on large amounts of training data, and the resulting pre-trained model is then used as a general-purpose feature extractor for various downstream applications. The success of deep learning in computer vision has also inspired the remote sensing community, which has made significant progress on many remote sensing tasks, such as hyperspectral image classification and change detection.

One of the main building blocks of a CNN is the convolution operation, which captures local interactions between elements of the input image, such as contour and edge information. CNNs encode inductive biases such as spatial connectivity and translation equivariance, which help build versatile and efficient architectures. However, the local receptive field of a CNN limits its ability to model long-range dependencies in an image (such as relationships between distant parts). Convolution is also content-independent: the filter weights are fixed after training, so the same weights are applied to every input regardless of its content. Vision transformers (ViTs) have demonstrated impressive performance across a variety of computer vision tasks. Built on the self-attention mechanism, a ViT effectively captures global interactions by learning the relationships between the elements of a sequence. Recent studies have shown that ViTs can model content-dependent long-range interactions and flexibly adjust their receptive fields to cope with interference in the data and learn effective feature representations. As a result, ViT and its variants have been applied successfully to many computer vision tasks, including classification, detection, and segmentation.
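The contrast between a fixed, local convolution and content-dependent, global self-attention can be illustrated with a minimal sketch. It is not taken from the surveyed paper: the helper names (`matmul`, `self_attention`, `conv1d`), the random projection matrices, and the toy sequence of six "patch" tokens are all assumptions chosen for illustration, and plain Python is used only to keep the example free of dependencies.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(s - peak) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (illustrative sketch).

    tokens is an n x d list of rows (e.g. n image patches, d features each).
    Returns (outputs, weights), where weights is the n x n attention matrix.
    """
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(k[0]))
    # scores[i][j]: how strongly token i attends to token j; computed from the
    # tokens themselves, so the mixing weights depend on the input content.
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

def conv1d(tokens, kernel):
    """Fixed-weight 1-D convolution along the sequence, zero padded."""
    half = len(kernel) // 2
    n, d = len(tokens), len(tokens[0])
    out = []
    for i in range(n):
        acc = [0.0] * d
        for offset, w in enumerate(kernel):
            j = i + offset - half
            if 0 <= j < n:  # positions outside the sequence count as zeros
                acc = [a + w * t for a, t in zip(acc, tokens[j])]
        out.append(acc)
    return out

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
n, d = 6, 4                      # hypothetical sizes: 6 tokens, 4 features
tokens = rand_matrix(n, d)
w_q, w_k, w_v = rand_matrix(d, d), rand_matrix(d, d), rand_matrix(d, d)
kernel = [0.25, 0.5, 0.25]       # the same three weights at every position

# Perturb only the last token and compare the output at the first position.
perturbed = [row[:] for row in tokens]
perturbed[-1] = [t + 10.0 for t in perturbed[-1]]

attn_out, attn_w = self_attention(tokens, w_q, w_k, w_v)
attn_out_p, _ = self_attention(perturbed, w_q, w_k, w_v)
conv_out, conv_out_p = conv1d(tokens, kernel), conv1d(perturbed, kernel)

print("conv output at position 0 changed:", conv_out[0] != conv_out_p[0])
print("attention output at position 0 changed:", attn_out[0] != attn_out_p[0])
```

In this toy setting, the three-tap convolution at position 0 only sees its immediate neighbours, so a change to the distant last token cannot reach it, whereas every attention output is a weighted sum over all tokens. Real ViTs add patch embedding, multiple heads, positional encodings, and feed-forward layers, none of which are shown here.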

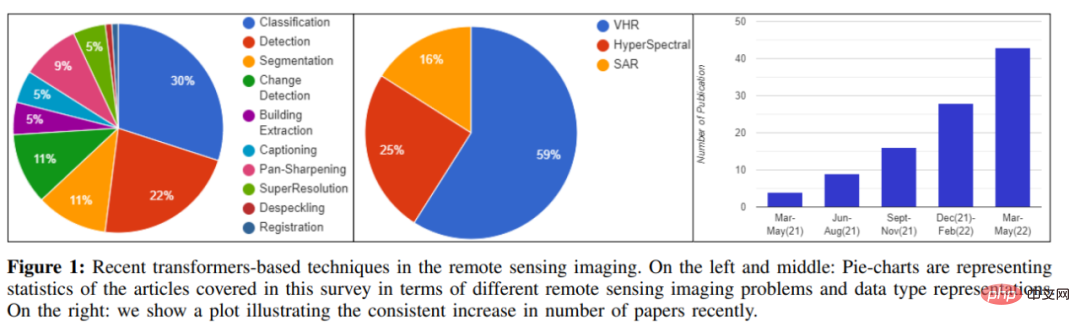

With the success of ViTs in computer vision, the number of remote sensing studies that use transformer-based frameworks has increased significantly (see Figure 1). Transformers have been applied to tasks such as very high-resolution image classification, change detection, pansharpening, building detection, and image captioning. This has opened a new era of remote sensing analysis, in which researchers take various approaches, such as leveraging ImageNet pre-training or pre-training vision transformers on remote sensing data.

Similarly, the literature contains approaches based on pure transformer designs as well as hybrid approaches that combine transformers and CNNs. Because transformer-based methods for different remote sensing problems are emerging so rapidly, it is becoming increasingly difficult to keep up with the latest advances.

In the article, the authors review the progress made in remote sensing analysis and introduce the popular transformer-based methods in the field. The main contribution of the article is as follows:

It provides a holistic overview of the application of transformer-based models in remote sensing imaging. According to the authors, it is the first survey of the use of transformers in remote sensing analysis, and it bridges the gap between recent advances in computer vision and remote sensing in this rapidly developing and popular field.

The rest of the article is organized as follows: Section 2 discusses other related surveys on remote sensing imaging; Section 3 provides an overview of the different imaging modalities in remote sensing; Section 4 gives a brief overview of CNNs and vision transformers; Section 5 reviews very high-resolution (VHR) imaging; Section 6 covers hyperspectral image analysis; Section 7 covers the progress of transformer-based methods in synthetic aperture radar (SAR); and Section 8 discusses future research directions.

Please refer to the original paper for more details.
