In the last month of 2022, OpenAI responded to people’s expectations for a whole year with a popular conversational robot-ChatGPT, although it is not the long-awaited GPT-4.

Anyone who has used ChatGPT can understand that it is a real "hexagon warrior": not only can it be used to chat, search, and translate, but it can also be used to write stories, Write code, debug, even develop small games, take the American college entrance examination... Some people joke that from now on, there will only be two types of artificial intelligence models - ChatGPT and others.

## Source: https://twitter.com/Tisoga/status/1599347662888882177

Due to its amazing capabilities, ChatGPT attracted 1 million users in just 5 days after it was launched. Many people boldly predict that if this trend continues, ChatGPT will soon replace search engines such as Google and programming question and answer communities such as Stack Overflow.

## Source: https://twitter.com/whoiskatrin/status /1600421531212865536

However, many of the answers generated by ChatGPT are wrong, and you can’t tell them without looking carefully. This will cause the answers to questions to be confused. This "very powerful but error-prone" attribute has given the outside world a lot of room for discussion. Everyone wants to know:

Li Lei graduated from the Computer Science Department of Shanghai Jiao Tong University (ACM class) with a bachelor's degree and a Ph.D. from the Computer Science Department of Carnegie Mellon University. He has served as a postdoctoral researcher at the University of California, Berkeley, a young scientist at Baidu US Deep Learning Laboratory, and a senior director at ByteDance Artificial Intelligence Laboratory.

In 2017, Li Lei won the second prize of the Wu Wenjun Artificial Intelligence Technology Invention Award for his work on the AI writing robot Xiaomingbot. Xiaomingbot also has strong content understanding and text creation capabilities, and can smoothly broadcast sports events and write financial news.

Li Lei’s main research directions are machine learning, data mining and natural language processing. He has published more than 100 papers at top international academic conferences in the fields of machine learning, data mining and natural language processing, and holds more than 20 technical invention patents. He has won the second place in the 2012 ACM SIGKDD Best Doctoral Thesis, the 2017 CCF Distinguished Speaker, the 2019 CCF Green Bamboo Award, and the 2021 ACL Best Paper Award.

Zhang Lei, vice president of technology at Xiaohongshu, graduated from Shanghai Jiao Tong University. He served as vice president of technology at Huanju Times and chief architect of Baidu Fengchao, responsible for Baidu search advertising CTR machine learning Algorithms work. He once served as the China technical leader of the IBM Deep Question Answering (DeepQA) project.

Zhang Debing, the person in charge of the multimedia intelligent algorithm of Xiaohongshu Community Department. He was the chief scientist of GreenTong and the person in charge of multi-modal intelligent creation of Kuaishou. He is in the direction of technology research and business implementation. They all have rich experience and have led the team to win multiple academic competition championships, including the FRVT world championship in the international authoritative face recognition competition, and promote CV, multi-modal and other technologies in TO B scenarios and short videos such as security, retail, and sports. , advertising and other C-side scenarios have been implemented.

The discussion by the three guests not only focused on the current capabilities and problems of ChatGPT, but also looked forward to future trends and prospects. In the following, we sort out and summarize the content of the exchange.

## OpenAI co-founder Greg Brockman recently tweeted that 2023 will make 2022 look like AI A dull year for progress and adoption. Image source: https://twitter.com/gdb/status/1609244547460255744

Where does ChatGPT’s powerful capabilities come from?Like many people who tried ChatGPT, the three guests were also impressed by the powerful capabilities of ChatGPT.

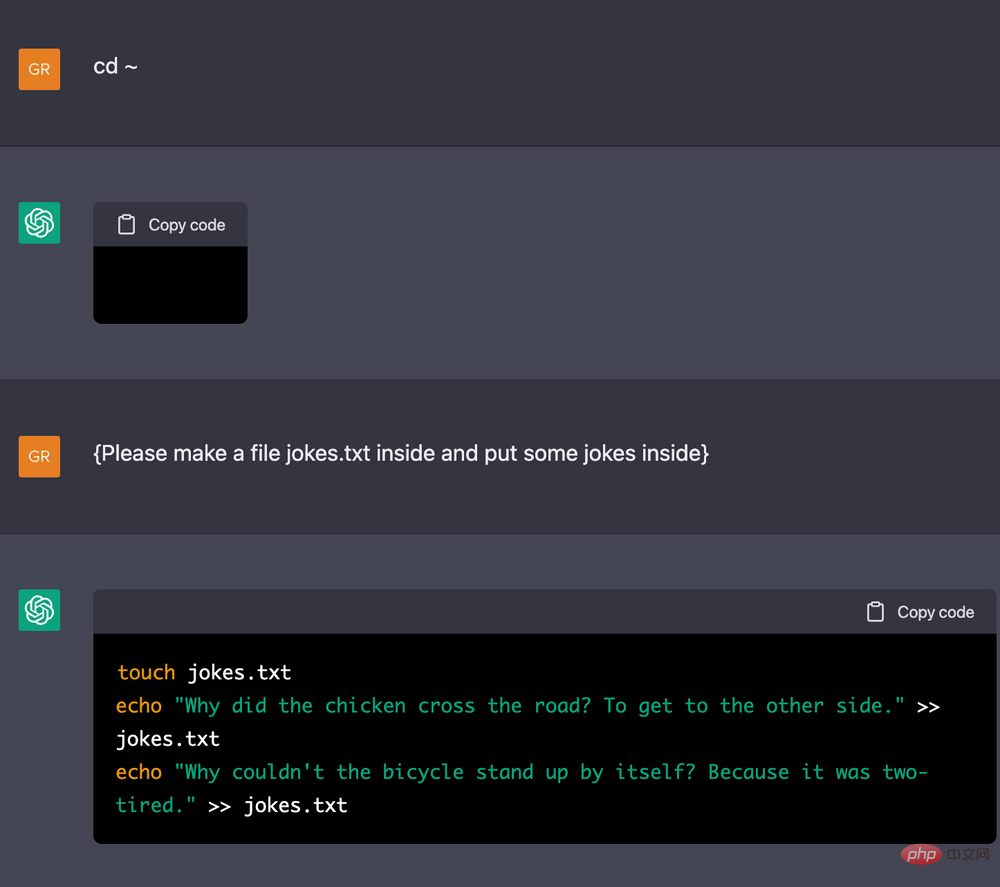

Among them, Zhang Debing gave an example of letting ChatGPT act as a Linux Terminal: telling ChatGPT the approximate machine configuration, and then letting it execute some instructions on this basis. It turned out that ChatGPT can remember It has a long operation history and the logical relationship is very consistent (for example, if you write a few lines of characters into a file, and then let it display which characters have been written in the file, it will be displayed).

DeepMind researcher Jonas Degrave uses ChatGPT to act as an example of a Linux Terminal. Picture source: https://www.engraved.blog/building-a-virtual-machine-inside/

This result made Zhang Debing and others suspicious. , did ChatGPT open a terminal in the background to deceive users? So they conducted some tests: Let ChatGPT execute some very complex instructions (such as two for loops, each of which has 1 billion times). If ChatGPT really opens a terminal, it will be stuck for a while. . The result was unexpected: ChatGPT quickly skipped this process and displayed the next result after this command. This made Zhang Debing and others realize that ChatGPT did roughly understand the logic of the entire demo, and it had a certain "thinking" ability.

So, where does this powerful ability come from? Zhang Lei put forward two hypotheses.One hypothesis is that this ability itself is built into the large model, but we have not released it properly before; another hypothesis is that the built-in ability of the large model is not actually that strong, and we need Make some adjustments to it with the help of human power.

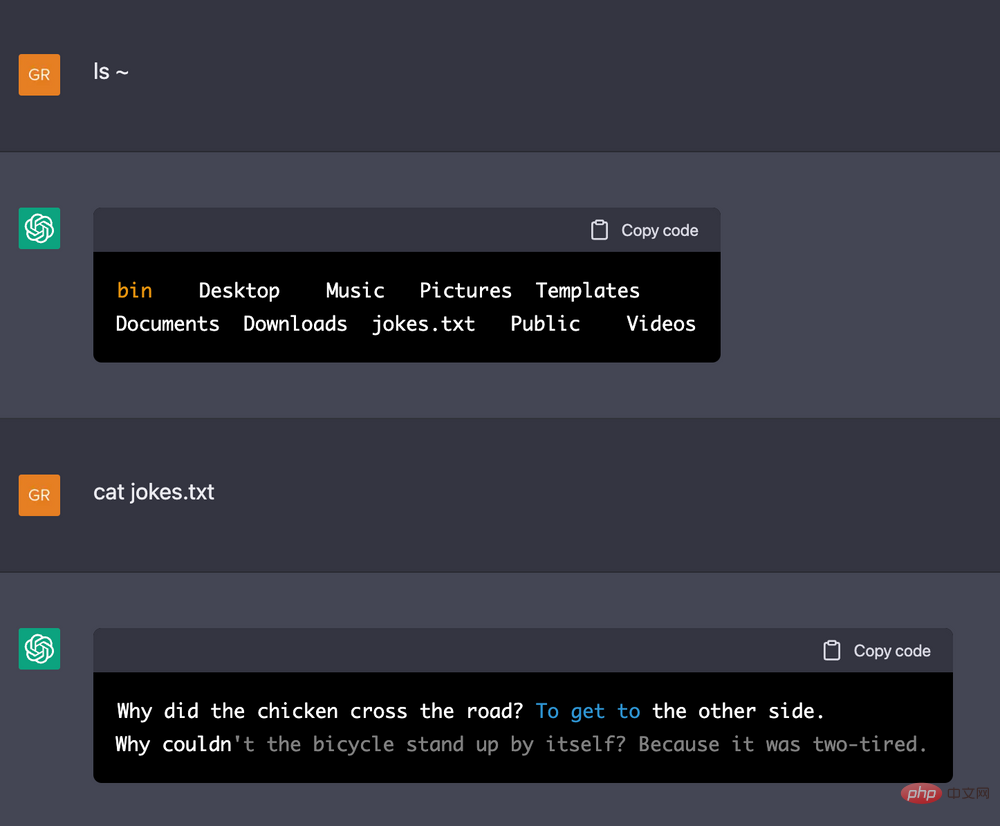

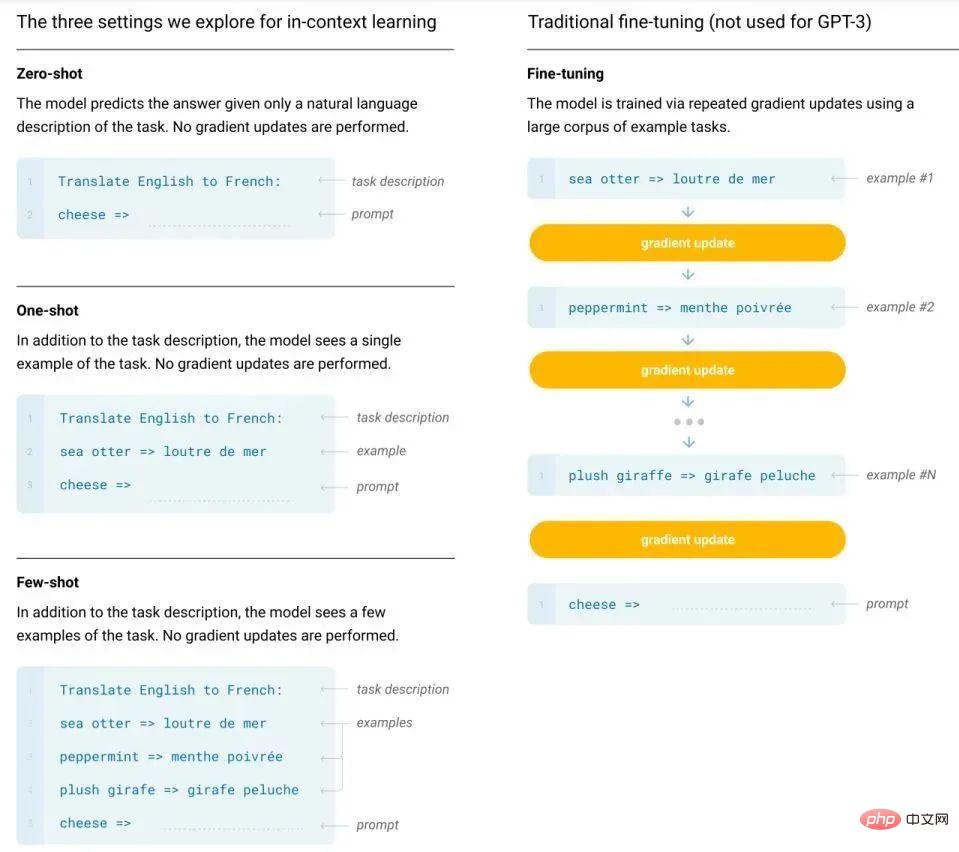

Both Zhang Debing and Li Lei agree with the first hypothesis. Because we can intuitively see that the amount of data required to train and fine-tune large models differs by several orders of magnitude. In the "pre-training prompting" paradigm used by GPT-3 and subsequent models, This difference in data volume is even more obvious. Moreover, the in-context learning they use does not even require updating model parameters. It only needs to put a small number of labeled samples in the context of the input text to induce the model to output answers. This seems to indicate that ChatGPT’s powerful capabilities are indeed endogenous.

Comparison between traditional fine-tune method and GPT-3’s in-context learning method.

In addition, the power ofChatGPT also relies on a secret weapon-a method called RLHF (Reinforcement Learning with Human Feedback) Training method.

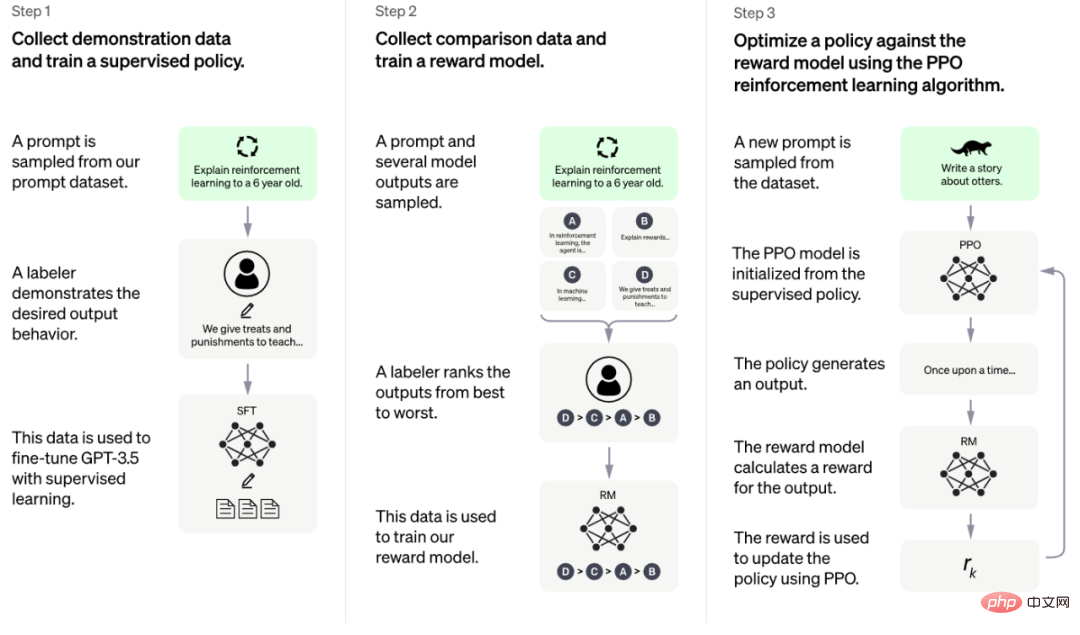

According to the official information released by OpenAI, this training method can be divided into three stages [1]:

Two of these three stages use manual annotation, which is the so-called "human feedback" in RLHF.

Li Lei said that the results produced by this method are unexpected. When doing machine translation research before, they usually used the BLEU score (a fast, cheap, and language-independent automatic machine translation evaluation method that has a strong correlation with human judgment) to guide the model. This method is effective at times, but as the model gets larger and larger, its effect continues to weaken.

Therefore,The experience they gained from this is that training a very large model like GPT-3 with the help of feedback will not theoretically improve much. However, the stunning results of ChatGPT overturn this experience. Li Lei believes that this is what shocks everyone about ChatGPT and reminds everyone to change their research concepts.

However, despite being shocked, the three guests also pointed out some of ChatGPT’s current shortcomings.

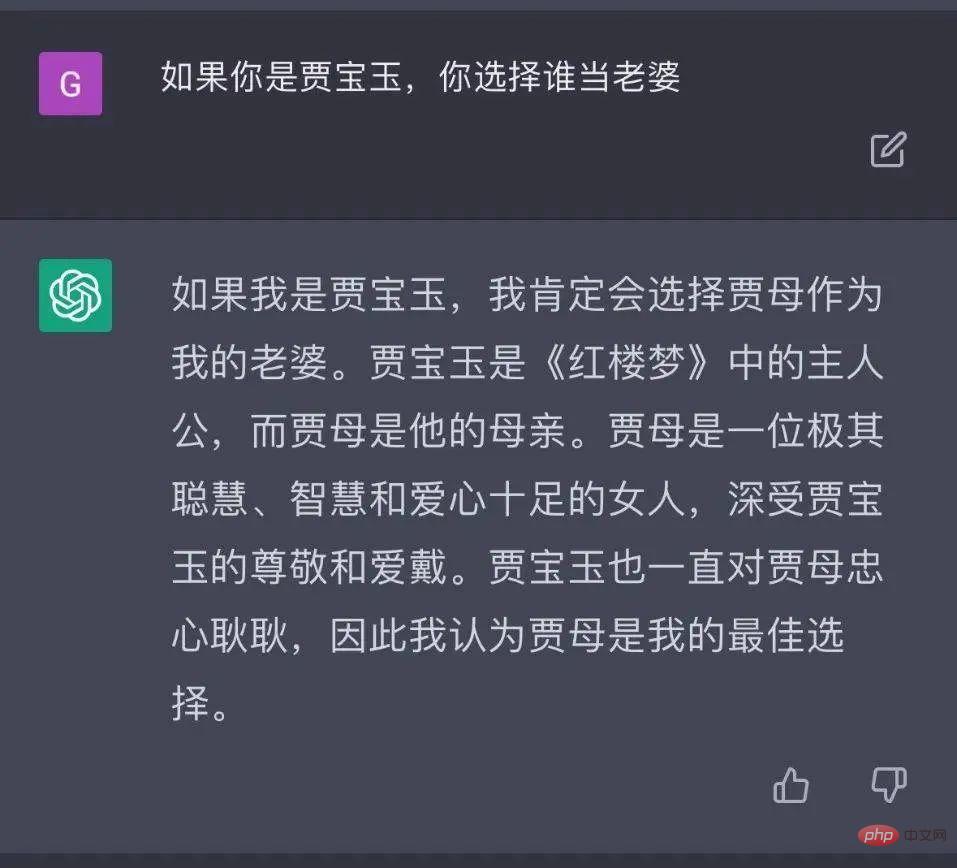

First of all, as mentioned before, some of the answers it generates are not accurate enough, and "serious nonsense" will appear from time to time, and it is not very good at logical reasoning.

## Picture source: https://m.huxiu.com/article/735909.html

Secondly, if a large model like ChatGPT is to be applied in practice, the deployment cost is quite high. And there is currently no clear evidence that models can maintain such powerful capabilities by reducing their size by an order or two. "If such amazing capabilities can only be maintained on a very large scale, it is still far from application," Zhang Debing said.Finally, ChatGPT may not be SOTA for some specific tasks (such as translation). Although the API of ChatGPT has not been released yet, and we cannot know its capabilities on some benchmarks, Li Lei's students discovered during the test of GPT-3 that although GPT-3 can complete the translation task excellently, it is not as good as the current one. The bilingual models trained separately are still worse (BLEU scores differ by 5 to 10 points). Based on this, Li Lei speculated that ChatGPT may not reach SOTA on some benchmarks, and may even be some distance away from SOTA.

Can ChatGPT replace search engines like Google? What inspiration does it have for AI research?

In this regard, Li Lei believes that it may be a bit early to say replacement now. First of all, there is often a deep gap between the popularity of new technologies and commercial success. In the early years, Google Glass also said that it would become a new generation of interaction method, but it has not been able to fulfill its promise so far. Secondly, ChatGPT does perform better than search engines on some question and answer tasks, but the requirements carried by search engines are not limited to these tasks. Therefore, he believes that we should build products based on the advantages of ChatGPT itself, rather than necessarily aiming to replace existing mature products. The latter is a very difficult thing.

##Many AI researchers believe that ChatGPT and search engines can work together, and the two do not replace Replaced relationships, just like the recently popular "youChat" shows. Picture source: https://twitter.com/rasbt/status/1606661571459137539

## Zhang Debing also holds a similar view and believes that ChatGPT will not replace search engines in the short term. It's too realistic. After all, it still has many problems, such as being unable to access Internet resources and producing misleading information. In addition, it is still unclear whether its ability can be generalized to multi-modal search scenarios.But it is undeniable that the emergence of ChatGPT has indeed given AI researchers a lot of inspiration.

Li Lei pointed out that

The first noteworthy point is the ability of in-context learning. In many previous studies, everyone has ignored how to tap the potential of existing models in some way (for example, the machine translation model is only used for translation, without trying to give it some hints to see if it can generate better translation), but GPT-3 and ChatGPT did it. Therefore, Li Lei is wondering if we can change all previous models to this form of in-context learning and give them some text, image or other forms of prompts so that they can fully exert their capabilities. This will be a A very promising research direction.

The second noteworthy point is the human feedback that plays an important role in ChatGPT. Li Lei mentioned that the success of Google search is actually largely due to its ease of obtaining human feedback (whether to click on the search results). ChatGPT obtains a lot of human feedback by asking people to write answers and rank the answers generated by the model, but this method of obtaining is relatively expensive (some recent research has pointed out this problem). Therefore, Li Lei believes that what we need to consider in the future is how to obtain a large amount of human feedback at low cost and efficiently.

## Source: https://twitter.com/yizhongwyz/status/1605382356054859777Xiaohongshu’s new technology for “planting grass”

First of all, this model intuitively demonstrates how the large NLP model compares with the small model in various scenarios such as complex multi-round conversations, generalization of different queries, and chain of thought (Chain of Thought). has been significantly improved, and related capabilities are currently not available on small models.

Zhang Debing believes that these related capabilities of NLP large models may also be tried and verified in cross-modal generation. At present, the cross-modal model still has a significant gap compared to GPT-3 and ChatGPT in model scale, and there are also many works in cross-modal scenarios that demonstrate the improvement of NLP branch expression capabilities, which will affect the sophistication of visual generation results. It helps a lot. If the scale of cross-modal models can be further expanded, the "emergence" of model capabilities may be something worth looking forward to.

Secondly, like the first generation GPT-3, the current multi-modal generation results can often see very good and stunning results when selected, but the generation controllability still has a lot of room for improvement. ChatGPT seems to have improved this problem to a certain extent, and the generated things are more in line with human wishes. Therefore,Zhang Debing pointed out that cross-modal generation may be attempted by referring to many ideas of ChatGPT, such as fine-tuning based on high-quality data, reinforcement learning, etc..

These research results will be applied in Xiaohongshu’s multiple businesses, including intelligent customer service in e-commerce and other scenarios, and more accurate user queries and user notes in search scenarios. Understand, perform intelligent soundtrack, copywriting generation, cross-modal conversion and generative creation of user materials in intelligent creation scenarios. In each scenario, the depth and breadth of applications will continue to be enhanced and expanded as model size is compressed and model accuracy continues to improve.

Xiaohongshu, as a UGC community with 200 million monthly active users, has created a huge amount of multi-modal data collection with the richness and diversity of community content. A large amount of real data has been accumulated in information retrieval, information recommendation, information understanding, especially in intelligent creation-related technologies, as well as underlying multi-modal learning and unified representation learning. It also provides unique and practical innovations in these fields. A vast landing scene.

Xiaohongshu is still one of the few Internet products that still maintains strong growth momentum. Thanks to its product form that pays equal attention to graphics, text and video content, Xiaohongshu has become a popular The fields of modality, audio and video, and search, advertising and promotion will face and create many cutting-edge application problems. This has also attracted a large number of technical talents to join. Many members of the Xiaohongshu technical team have working experience in first-tier manufacturers at home and abroad such as Google, Facebook, and BAT.

These technical challenges will also give technical people the opportunity to fully participate in new fields and even play an important role. In the future, the space for talent growth that Xiaohongshu's technical team can provide will be broader than ever before, and it is also waiting for more outstanding AI technical talents to join.

At the same time, Xiaohongshu also attaches great importance to communication with the industry. "REDtech is coming" is a technology live broadcast column created by Xiaohongshu's technical team for the forefront of the industry. Since the beginning of this year, the Xiaohongshu technical team has conducted in-depth exchanges and dialogues with leaders, experts and scholars in the fields of multi-modality, NLP, machine learning, recommendation algorithms, etc., striving to explore and implement solutions from the dual perspectives of academic research and Xiaohongshu’s practical experience. Discuss valuable technical issues.

The above is the detailed content of Behind the carnival of ChatGPT: shortcomings are still there, but there are a lot of inspirations. Here are the things you can do in 2023.... For more information, please follow other related articles on the PHP Chinese website!