Models with hundreds of billions or even trillions of parameters deserve study, but so do large models with billions or tens of billions of parameters.

Just now, Meta chief AI scientist Yann LeCun announced that Meta has "open sourced" a new series of large models, LLaMA (Large Language Model Meta AI), ranging from 7 billion to 65 billion parameters. These models perform remarkably well: the 13-billion-parameter LLaMA model outperforms GPT-3 (175 billion parameters) "on most benchmarks" and can run on a single V100 GPU, while the largest, 65-billion-parameter LLaMA model is competitive with Google's Chinchilla-70B and PaLM-540B.

As we all know, parameters are the variables that a machine learning model uses to predict or classify based on input data. The number of parameters in a language model is a key factor affecting its performance. Larger models are usually able to handle more complex tasks and produce more coherent outputs, which Richard Sutton calls a "bitter lesson." In the past few years, major technology giants have launched an arms race around large models with hundreds of billions and trillions of parameters, greatly improving the performance of AI models.

However, this kind of competition, in which research progress depends on financial muscle, is unfriendly to ordinary researchers who do not work for technology giants. It hinders their research into how large models work and into solutions for their potential problems. Moreover, in practical applications, more parameters take up more space and require more computing resources to run, which makes large models expensive to deploy. Therefore, if one model can match another model's results with fewer parameters, that represents a significant gain in efficiency: it is far friendlier to ordinary researchers, and the model is easier to deploy in real environments. That is the point of Meta's research.
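To make the cost of parameters concrete, a rough rule of thumb is that holding the weights takes (number of parameters) × (bytes per parameter). The sketch below assumes 16-bit weights (2 bytes each) and deliberately ignores activations, the attention cache, and framework overhead, so real memory requirements are higher than these figures.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: 16-bit (fp16/bf16) weights; activations, KV cache and overhead are ignored.
BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return the memory needed just to store the weights, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    models = [("LLaMA-7B", 7e9), ("LLaMA-13B", 13e9), ("LLaMA-65B", 65e9), ("GPT-3", 175e9)]
    for name, n in models:
        print(f"{name}: ~{weight_memory_gb(n):.0f} GB of fp16 weights")
```

Under these assumptions, LLaMA-13B's roughly 26 GB of fp16 weights fit on a 32 GB V100 (though not on the 16 GB variant), while LLaMA-65B's roughly 130 GB would have to be spread across several GPUs.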

"I now think that within a year or two we will be running language models with a substantial portion of ChatGPT's capabilities on our (top-of-the-line) phones and laptops," independent artificial intelligence researcher Simon Willison writes when analyzing the impact of Meta's new AI model.

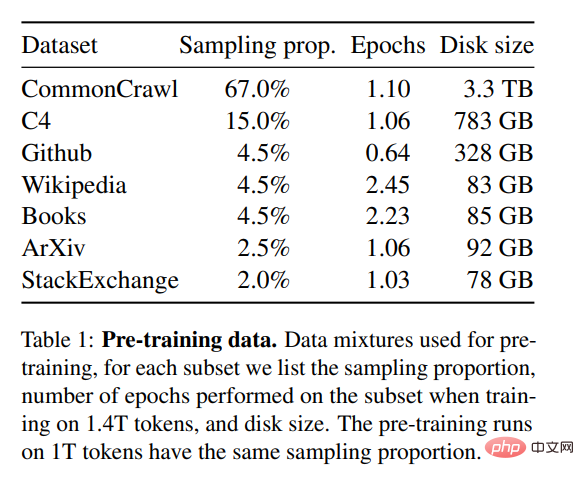

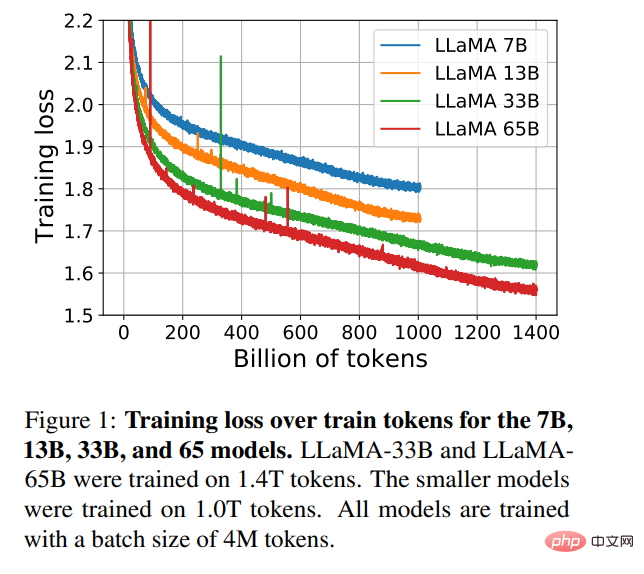

To keep the work open source and reproducible, Meta trained the models only on publicly available datasets. This sets LLaMA apart from most large models, which rely on non-public data and are usually not open source but private assets of large technology companies. To improve performance, Meta also trained on more tokens: LLaMA 65B and LLaMA 33B were trained on 1.4 trillion tokens, and even the smallest, LLaMA 7B, was trained on 1 trillion tokens.

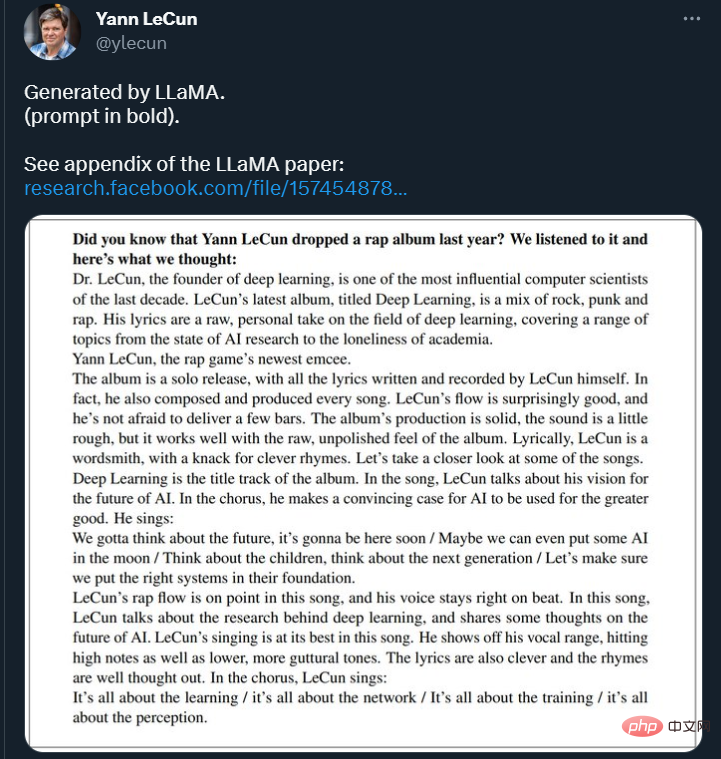

On Twitter, LeCun also showed some examples of LLaMA continuing a piece of text. The model was asked to continue: "Did you know Yann LeCun released a rap album last year? We listened to it and here's what we thought: ____ "

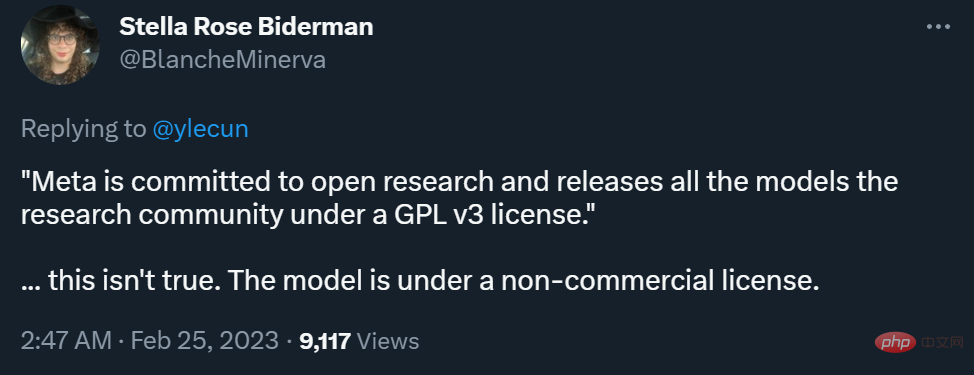

However, a discrepancy between Meta's blog post and LeCun's tweets about whether the models can be used commercially has caused some controversy.

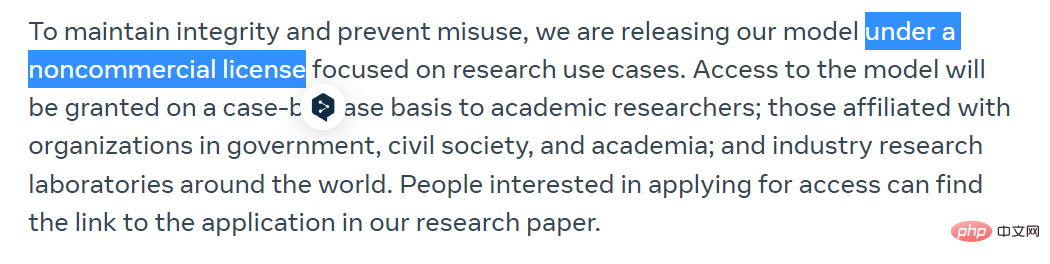

Meta stated in its blog post that, to maintain integrity and prevent misuse, it is releasing the models under a non-commercial license focused on research use cases. Access will be granted on a case-by-case basis to academic researchers; organizations affiliated with government, civil society, and academia; and industry research laboratories around the world. Interested readers can apply at the following link:

https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform

LeCun said that Meta is committed to open research and releases all models to the research community under the GPL v3 license (GPL v3 allows commercial use).

This statement is controversial because he did not make clear whether "model" here refers to the code, the weights, or both. In the view of many researchers, the model weights matter far more than the code.

In response, LeCun explained that what is released under the GPL v3 license is the model code.

Some people believe that this level of openness is not true “AI democratization.”

Meta has uploaded the paper to arXiv and released part of the code in a GitHub repository, which readers can browse.

Large language models (LLMs) trained on massive text corpora have shown the ability to perform new tasks from textual instructions or from a few examples. These few-shot abilities first emerged when models were scaled to a sufficiently large size, spawning a line of work focused on scaling them even further.
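"Performing a task from a few examples" simply means placing solved examples in the prompt ahead of the new input and letting the model continue the text. The sketch below builds such a k-shot prompt; the `Q:`/`A:` template and the function name are hypothetical choices for illustration, not the format used in the LLaMA paper.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Concatenate k solved (question, answer) pairs, then the unsolved query.

    With an empty example list this degenerates to a zero-shot prompt.
    The "Q:/A:" template is a hypothetical format chosen for illustration.
    """
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    parts.append(f"Q: {query}\nA:")  # the model is expected to continue after "A:"
    return "\n\n".join(parts)


if __name__ == "__main__":
    shots = [("What is the capital of France?", "Paris")]
    print(build_few_shot_prompt(shots, "What is the capital of Italy?"))
```

The model never updates its weights here; everything it "learns" about the task comes from the examples in its context window.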

These efforts are based on the assumption that more parameters will lead to better performance. However, recent work by Hoffmann et al. (2022) shows that for a given computational budget, the best performance is achieved not by the largest models, but by smaller models trained on more data.

The goal of scaling laws proposed by Hoffmann et al. (2022) is to determine how best to scale dataset and model sizes given a specific training compute budget. However, this goal ignores the inference budget, which becomes critical when serving language models at scale. In this case, given a target performance level, the preferred model is not the fastest to train, but the fastest to infer. While it may be cheaper to train a large model to reach a certain level of performance, a smaller model that takes longer to train will ultimately be cheaper at inference. For example, although Hoffmann et al. (2022) recommended training a 10B model on 200B tokens, the researchers found that the performance of the 7B model continued to improve even after 1T tokens.
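The trade-off can be made concrete with two approximations common in the scaling-law literature (assumptions here, not figures from the LLaMA paper): training costs about 6·N·D FLOPs for N parameters and D tokens, and generating one token costs about 2·N FLOPs. Assuming, for illustration, that both configurations reach the same target quality, the sketch compares the 10B-on-200B-tokens recommendation with a 7B model trained on 1T tokens and finds how many generated tokens it takes for the cheaper-to-serve model to repay its extra training compute.

```python
def training_flops(params: float, tokens: float) -> float:
    # Approximation: ~6 FLOPs per parameter per training token (forward + backward).
    return 6 * params * tokens


def inference_flops_per_token(params: float) -> float:
    # Approximation: ~2 FLOPs per parameter per generated token (forward pass only).
    return 2 * params


def break_even_tokens(small_params: float, small_tokens: float,
                      big_params: float, big_tokens: float) -> float:
    """Inference tokens after which the smaller, longer-trained model is cheaper overall."""
    extra_training = training_flops(small_params, small_tokens) - training_flops(big_params, big_tokens)
    saving_per_token = inference_flops_per_token(big_params) - inference_flops_per_token(small_params)
    if saving_per_token <= 0:
        raise ValueError("the 'small' model must have fewer parameters than the 'big' one")
    return extra_training / saving_per_token


if __name__ == "__main__":
    # 7B trained on 1T tokens vs. 10B trained on 200B tokens.
    n = break_even_tokens(7e9, 1e12, 10e9, 200e9)
    print(f"break-even after ~{n:.1e} generated tokens")
```

Under these approximations the 7B model costs about 3e22 extra training FLOPs but saves 6e9 FLOPs per generated token, so it pays for itself after roughly 5e12 tokens, a volume a widely used service can reach.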

This work focuses on training a family of language models to achieve optimal performance at a variety of inference budgets by training on more tokens than typically used. The resulting model, called LLaMA, has parameters ranging from 7B to 65B and performs competitively with the best existing LLMs. For example, despite being 10 times smaller than GPT-3, LLaMA-13B outperforms GPT-3 in most benchmarks.

The researchers say this model will help democratize access to and study of LLMs because it can run on a single GPU. At the high end of the scale, the 65B-parameter LLaMA model is also competitive with the best large language models, such as Chinchilla and PaLM-540B.

Unlike Chinchilla, PaLM, or GPT-3, LLaMA uses only publicly available data, which makes this work compatible with open sourcing, whereas most existing models rely on data that is either not publicly available or undocumented (e.g., Books-2TB or social media conversations). There are some exceptions, notably OPT (Zhang et al., 2022), GPT-NeoX (Black et al., 2022), BLOOM (Scao et al., 2022), and GLM (Zeng et al., 2022), but none of them is competitive with PaLM-62B or Chinchilla.

The remainder of this article outlines the researchers' modifications to the transformer architecture and their training method. It then presents the models' performance and compares it with other large language models on a set of standard benchmarks. Finally, the paper uses several recent benchmarks from the responsible AI community to examine the biases and toxicity encoded in the models.

The researchers' training approach is similar to the methods described in previous work (Brown et al., 2020; Chowdhery et al., 2022) and is inspired by the Chinchilla scaling laws (Hoffmann et al., 2022). They train large transformers on a large quantity of text data using a standard optimizer.

## Pre-training data

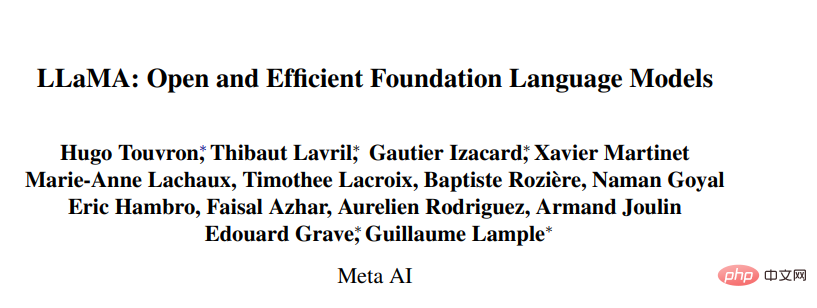

As shown in Table 1, the training dataset is a mixture of several sources covering diverse domains; the table also gives each source's share of the training set. For the most part, the researchers reuse data sources that have been used to train other large language models, with the restriction that only data that is publicly available and compatible with open sourcing may be used.

After tokenization, the entire training dataset contains roughly 1.4T tokens. For most of the training data, each token is used only once during training; the exception is the Wikipedia and Books domains, over which the researchers perform approximately two epochs.
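To illustrate how such a mixture is consumed, the sketch below splits the ~1.4T-token budget across sources using the sampling proportions reported in Table 1 of the LLaMA paper (CommonCrawl 67%, C4 15%, GitHub, Wikipedia and Books 4.5% each, ArXiv 2.5%, StackExchange 2%), and draws source labels with Python's `random.choices`. How Meta actually shuffled and batched the data is not described here, so the sampler itself is an assumption.

```python
import random
from collections import Counter

# Sampling proportions as reported in Table 1 of the LLaMA paper.
MIXTURE = {
    "CommonCrawl": 0.670,
    "C4": 0.150,
    "GitHub": 0.045,
    "Wikipedia": 0.045,
    "Books": 0.045,
    "ArXiv": 0.025,
    "StackExchange": 0.020,
}
TOTAL_TOKENS = 1.4e12  # approximate size of the tokenized training set


def tokens_per_source(mixture: dict, total: float) -> dict:
    """Split a token budget across sources in proportion to their sampling weights."""
    norm = sum(mixture.values())
    return {name: total * w / norm for name, w in mixture.items()}


def sample_sources(mixture: dict, n: int, seed: int = 0) -> Counter:
    """Draw n source labels with replacement, weighted by the mixture (illustrative sampler)."""
    rng = random.Random(seed)
    names = list(mixture)
    return Counter(rng.choices(names, weights=[mixture[k] for k in names], k=n))


if __name__ == "__main__":
    for name, t in tokens_per_source(MIXTURE, TOTAL_TOKENS).items():
        print(f"{name:>13}: {t / 1e9:,.0f}B tokens")
    print(sample_sources(MIXTURE, 10_000).most_common(3))
```

A source is revisited whenever its share of the token budget exceeds its own size, which is how the comparatively small Wikipedia and Books corpora end up being seen about twice.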

## Architecture

Following recent work on large language models, this study also uses the transformer architecture, adopting various improvements that were subsequently proposed and used in different models such as PaLM. The paper describes the main differences from the original architecture:

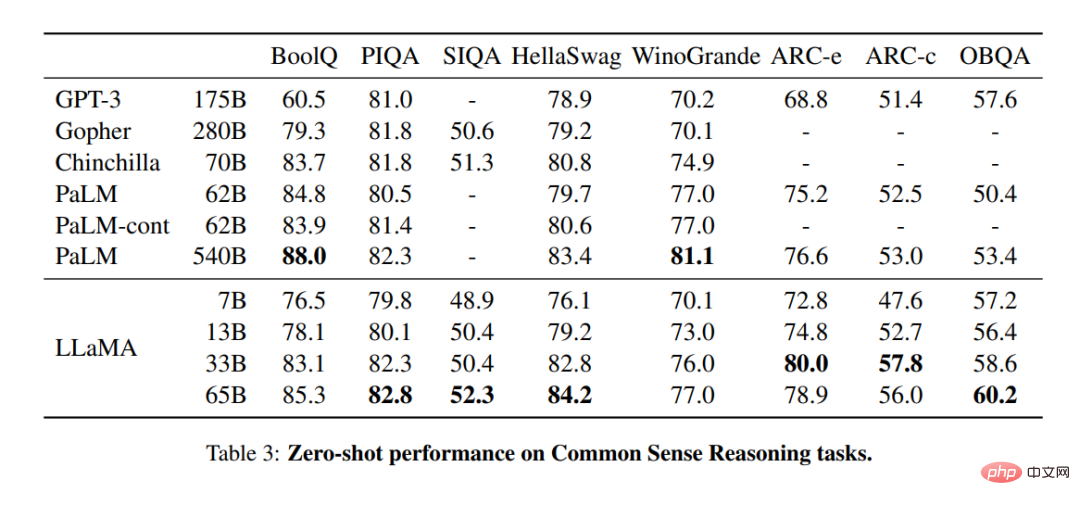
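According to the LLaMA paper, the changes are pre-normalization with RMSNorm (normalizing the input of each sub-layer rather than its output), the SwiGLU activation in the feed-forward layers, and rotary positional embeddings. Below is a minimal pure-Python sketch of the first two on plain lists; the function names and toy weights are hypothetical, and this is not Meta's implementation.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row per output unit


def rms_norm(x: Vector, gain: Vector, eps: float = 1e-6) -> Vector:
    """RMSNorm: divide by the root-mean-square of x (no mean subtraction, no bias)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for g, v in zip(gain, x)]


def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def silu(v: float) -> float:
    """SiLU / Swish (beta = 1): v * sigmoid(v), written to avoid overflow in exp."""
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    ev = math.exp(v)
    return v * ev / (1.0 + ev)


def swiglu_ffn(x: Vector, w_gate: Matrix, w_up: Matrix, w_down: Matrix) -> Vector:
    """Feed-forward block with SwiGLU: W_down (SiLU(W_gate x) * (W_up x))."""
    gate = [silu(v) for v in matvec(w_gate, x)]
    up = matvec(w_up, x)
    return matvec(w_down, [g * u for g, u in zip(gate, up)])


def pre_norm_ffn_block(x: Vector, gain: Vector,
                       w_gate: Matrix, w_up: Matrix, w_down: Matrix) -> Vector:
    """Pre-normalization: normalize the sub-layer's input, then add the residual."""
    h = swiglu_ffn(rms_norm(x, gain), w_gate, w_up, w_down)
    return [a + b for a, b in zip(x, h)]
```

Because the residual path `x` is never normalized, the block reduces to the identity when the feed-forward output is zero, which is one reason pre-normalization tends to stabilize training of deep stacks.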

## Common sense reasoning

In Table 3, the researchers compare LLaMA with existing models of various sizes, reporting the numbers from the corresponding papers. First, LLaMA-65B outperforms Chinchilla-70B on all reported benchmarks except BoolQ. It likewise surpasses PaLM-540B on every benchmark except BoolQ and WinoGrande. The LLaMA-13B model also outperforms GPT-3 on most benchmarks despite being 10 times smaller.

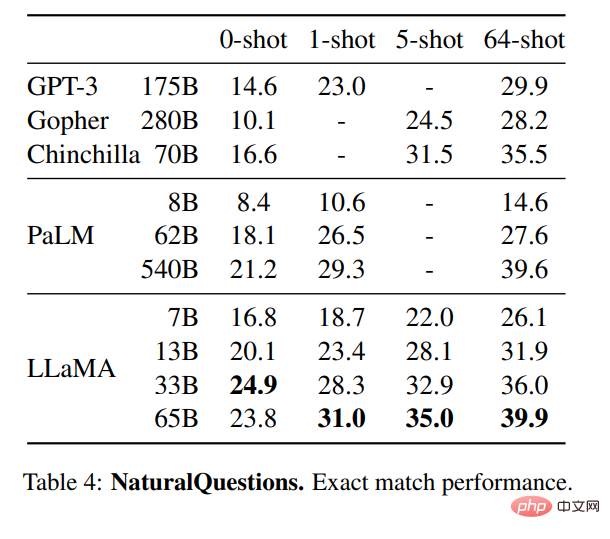

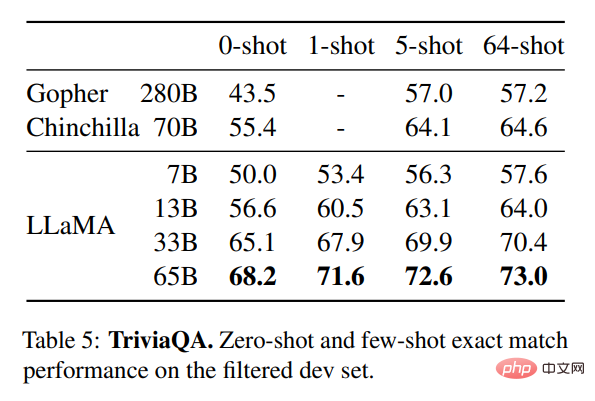

## Closed-book question answering

Table 4 shows performance on NaturalQuestions and Table 5 shows performance on TriviaQA. On both benchmarks, LLaMA-65B achieves state-of-the-art performance in both the zero-shot and few-shot settings. Moreover, LLaMA-13B is also competitive on these benchmarks despite being one-fifth to one-tenth the size of GPT-3 and Chinchilla, and it runs inference on a single V100 GPU.
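Closed-book QA answers on benchmarks like these are commonly scored by exact match after light normalization. The sketch below uses SQuAD-style normalization (lowercase, strip punctuation and the English articles a/an/the, collapse whitespace); treat it as an assumption about how scoring typically works rather than a description of Meta's evaluation code.

```python
import re
import string
from typing import Iterable


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: Iterable[str]) -> bool:
    """True if the normalized prediction equals any normalized reference answer."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(g) for g in gold_answers)


if __name__ == "__main__":
    print(exact_match("The Eiffel Tower.", ["Eiffel Tower", "La tour Eiffel"]))
```

Allowing a list of gold answers matters because these datasets often accept several aliases for the same entity.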

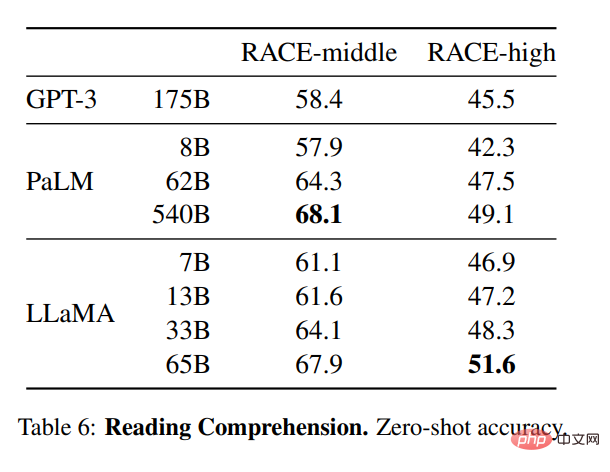

## Reading comprehension

The researchers also evaluated the model on the RACE reading comprehension benchmark (Lai et al., 2017). The evaluation setup of Brown et al. (2020) is followed here, and Table 6 shows the evaluation results. On these benchmarks, LLaMA-65B is competitive with PaLM-540B, and LLaMA-13B outperforms GPT-3 by several percentage points.

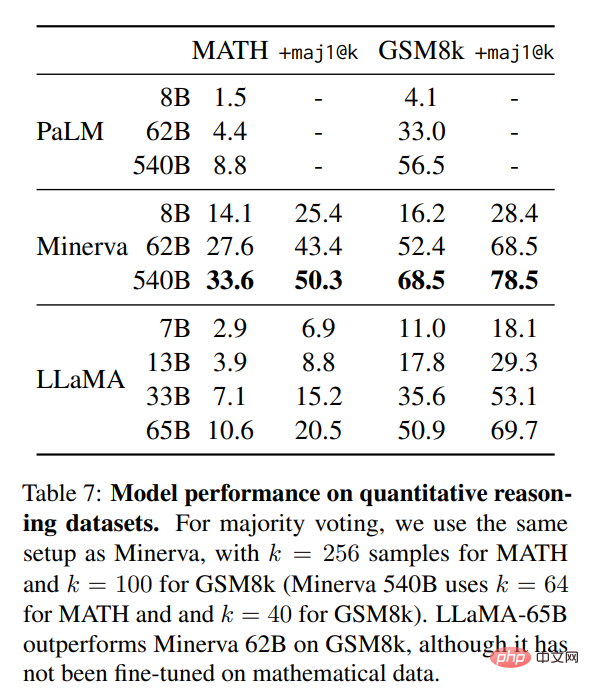

## Mathematical reasoning

In Table 7, the researchers compare LLaMA with PaLM and Minerva (Lewkowycz et al., 2022). On GSM8k, they observe that LLaMA-65B outperforms Minerva-62B, even though it has not been fine-tuned on mathematical data.

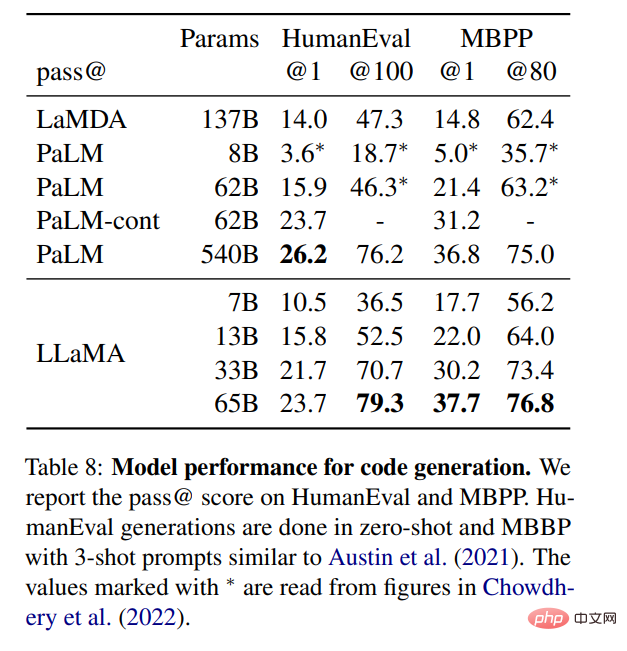

## Code generation

As Table 8 shows, for a similar number of parameters LLaMA outperforms other general-purpose models that were not trained or fine-tuned specifically on code, such as LaMDA and PaLM. With 13B parameters or more, LLaMA outperforms LaMDA 137B on both HumanEval and MBPP. LLaMA 65B also outperforms PaLM 62B, even when PaLM 62B is trained longer.
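HumanEval and MBPP results are usually reported as pass@k: the probability that at least one of k sampled programs passes the problem's unit tests. The sketch implements the standard unbiased estimator introduced with HumanEval (Chen et al., 2021), given n samples per problem of which c pass; it illustrates the metric's definition and is not code from the LLaMA release.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate: 1 - C(n - c, k) / C(n, k).

    n: samples generated for a problem, c: samples that pass the tests, k: budget.
    """
    if not 0 <= c <= n:
        raise ValueError("need 0 <= c <= n")
    if k > n:
        raise ValueError("k must not exceed the number of samples n")
    if n - c < k:
        # Every size-k subset must contain at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # e.g. 200 samples for one problem, 30 of which pass the unit tests
    for k in (1, 10, 100):
        print(k, round(pass_at_k(200, 30, k), 4))
```

For k = 1 the estimator reduces to c / n, the fraction of correct samples; the benchmark score is the average of this value over all problems.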

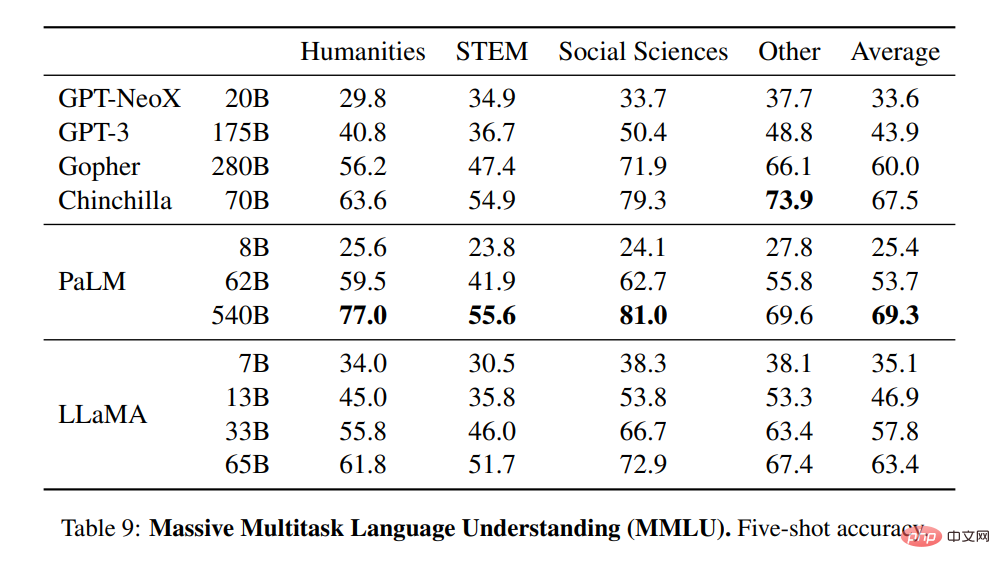

## Massive multitask language understanding

The researchers evaluate the models in the 5-shot setting using the examples provided by the benchmark, and report the results in Table 9. On this benchmark, LLaMA-65B trails Chinchilla-70B and PaLM-540B by a few percentage points on average across most domains. One potential explanation is that the researchers used only a limited amount of books and academic papers in the pre-training data (ArXiv, Gutenberg, and Books3), totaling just 177GB, whereas those models were trained on up to 2TB of books. The large quantity of books used by Gopher, Chinchilla, and PaLM may also explain why Gopher outperforms GPT-3 on this benchmark while being comparable on other benchmarks.

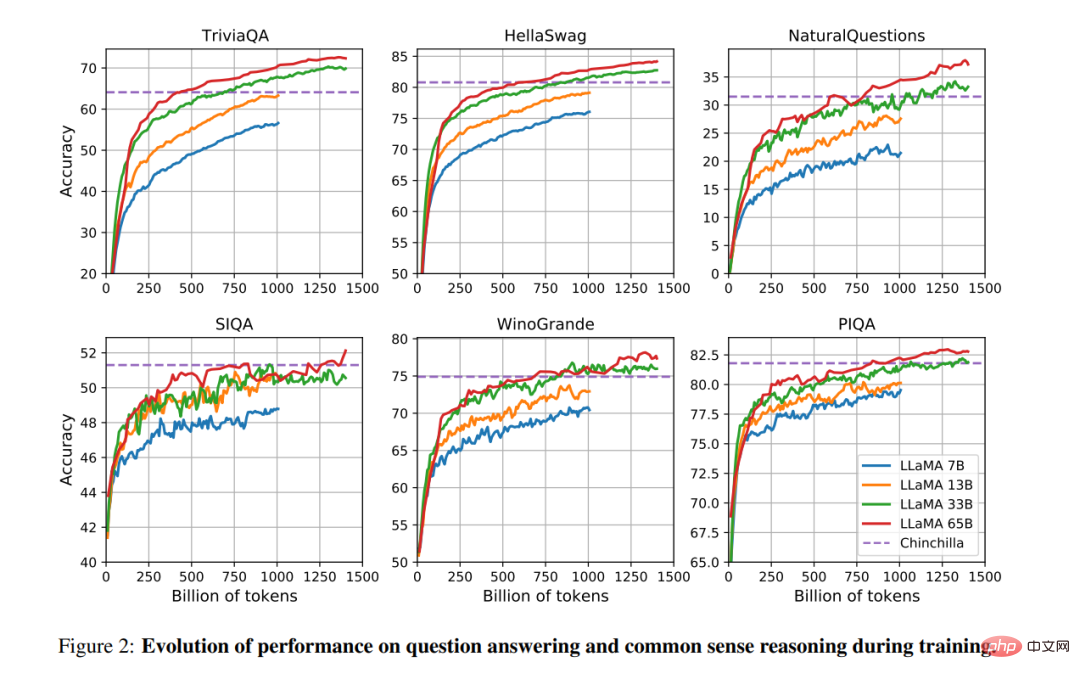

## Performance changes during training

During training, the researchers tracked the performance of the LLaMA models on several question answering and common sense benchmarks; the results are shown in Figure 2. Performance improves steadily on most benchmarks and tracks the decline in the models' training perplexity (see Figure 1).
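Training perplexity is the exponential of the average per-token negative log-likelihood, so lower values mean the model predicts the training text better. A minimal sketch computing it from per-token natural-log probabilities (the input values below are hypothetical):

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood; inputs are natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)


if __name__ == "__main__":
    # A model that assigns probability 0.25 to every token should score (about) 4.
    print(perplexity([math.log(0.25)] * 8))
```

Intuitively, a perplexity of 4 means the model is as uncertain as if it were choosing uniformly among four tokens at each step.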


