Large-scale language models, such as GPT-3 with 175 billion parameters and PaLM with 540 billion parameters, are arguably the cornerstone of modern natural language processing. These pre-trained models provide powerful few-shot learning ability for downstream tasks.

Reasoning tasks remain difficult, however, especially questions that require multi-step reasoning to reach the correct answer.

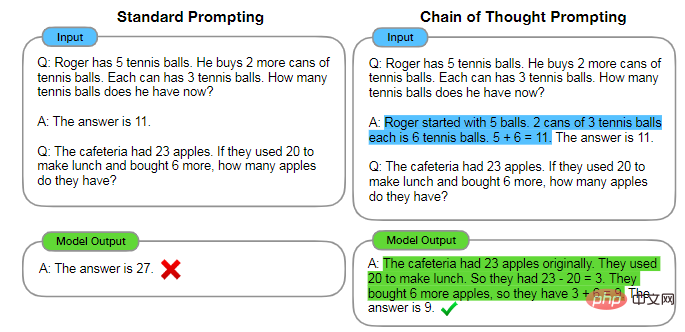

Recently, researchers have found that a properly designed prompt can guide the model to perform multi-step reasoning before producing the final answer. This method is called chain-of-thought reasoning.

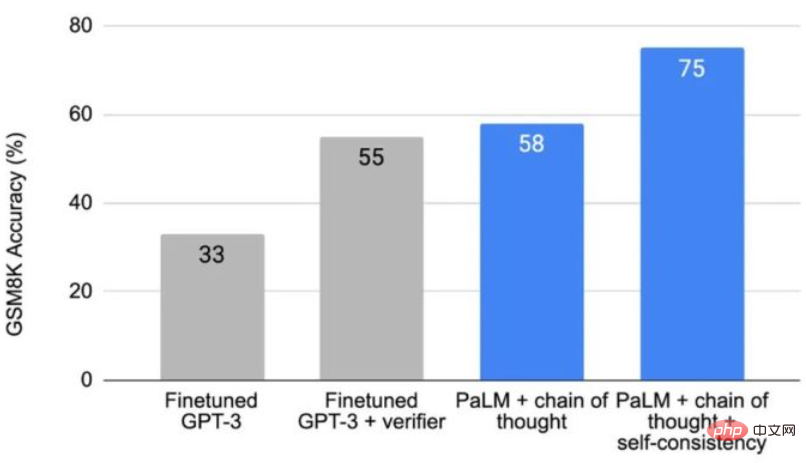

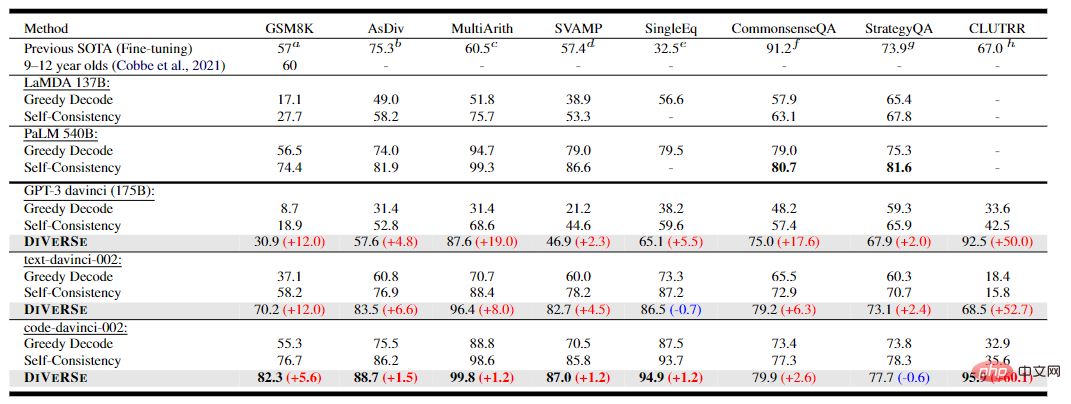

Chain-of-thought prompting raised accuracy on the arithmetic benchmark GSM8K from 17.9% to 58.1%, and the voting-based self-consistency mechanism introduced later raised it further, to 74.4%.

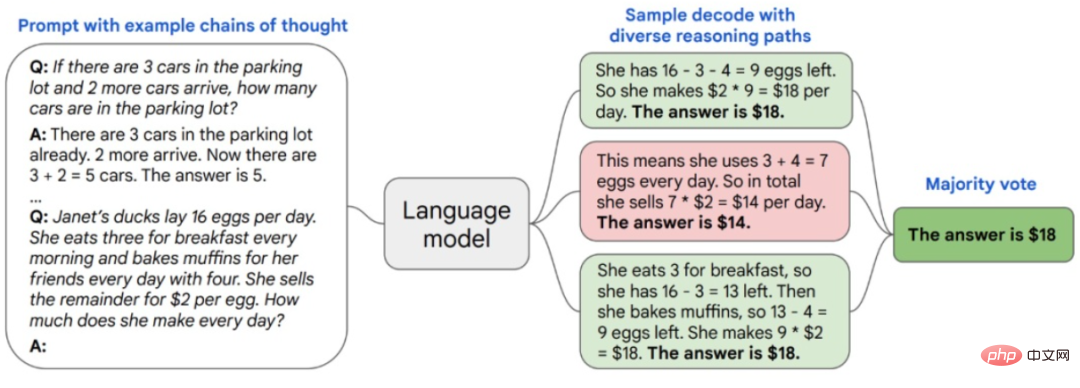

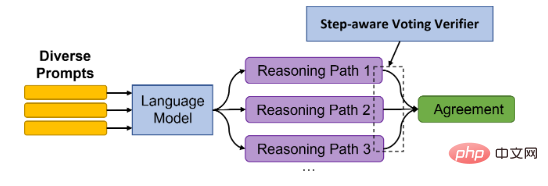

Simply put, a complex reasoning task usually has multiple reasoning paths that reach the correct answer. The self-consistency method samples a set of diverse reasoning paths from the language model via chain-of-thought prompting and then returns the most consistent answer among them.
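The voting step of self-consistency can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function name `self_consistency_vote` and the sample answers are hypothetical, and the sketch assumes the final answer has already been extracted from each sampled reasoning path.

```python
from collections import Counter


def self_consistency_vote(answers):
    """Return the most frequent final answer among sampled reasoning paths."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    # most_common(1) returns a one-element list: [(answer, count)].
    return Counter(answers).most_common(1)[0][0]


# Hypothetical final answers extracted from five sampled reasoning paths.
sampled_answers = ["18", "18", "26", "18", "26"]
print(self_consistency_vote(sampled_answers))  # 18
```

Each path counts equally here, which is exactly the limitation the verifier described later is meant to address.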

Recently, researchers from Peking University and Microsoft proposed DiVeRSe, a new method built on self-consistency. It contains three main innovations that further improve the model's reasoning capabilities.

Paper link: https://arxiv.org/abs/2206.02336

Code link: https://github.com/microsoft/DiVerSe

First, the self-consistency method rests on the idea of "different ideas, same answer", that is, sampling different reasoning paths from the language model. DiVeRSe takes diversity a step further: following the idea that "all roads lead to Rome", it uses multiple prompts to generate answers, which yields more complete and complementary answers.

The researchers first provide 5 different prompts for each question and then sample 20 reasoning paths for each prompt, producing 100 reasoning paths for each question.

A key issue is how to obtain different prompts. Assuming a library of examples is available, we can sample K examples from it to construct a prompt and repeat this 5 times.
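The prompt construction described above might look like the following sketch. The helper `build_prompts`, the exemplar format, the pool size, and the choice of K = 5 are all assumptions made for illustration; only the figures of 5 prompts and 20 paths per prompt come from the article.

```python
import random


def build_prompts(exemplar_pool, k, num_prompts=5, seed=0):
    """Build several prompts, each from K exemplars sampled from the pool."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    prompts = []
    for _ in range(num_prompts):
        # Sample K distinct exemplars for this prompt.
        exemplars = rng.sample(exemplar_pool, k)
        prompts.append("\n\n".join(exemplars))
    return prompts


# Hypothetical exemplar pool: each entry is a question with a worked answer.
pool = [f"Q: question {i}\nA: reasoning {i}. The answer is {i}." for i in range(20)]

prompts = build_prompts(pool, k=5)
paths_per_prompt = 20
total_paths = len(prompts) * paths_per_prompt
print(len(prompts), total_paths)  # 5 100
```

Sampling without replacement inside one prompt avoids repeating an exemplar, while different prompts may still share exemplars.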

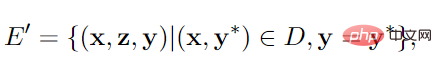

If there are not enough examples, self-teaching is used to improve prompt diversity, that is, pseudo reasoning paths are generated from a small set of examples and question-answer pairs.

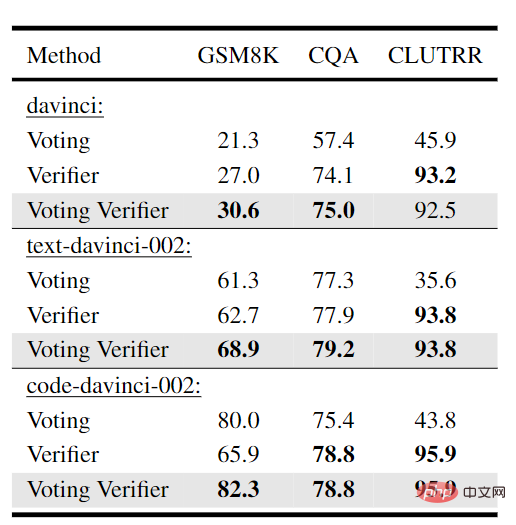

Second, while a reasoning path is being generated, the language model has no mechanism for correcting errors in earlier steps, which can corrupt the final prediction. DiVeRSe borrows the idea of a verifier to check the correctness of each reasoning path and uses the result to guide the voting mechanism. In other words, not all reasoning paths are equally important or equally good.

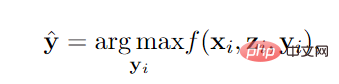

Suppose we have 100 reasoning paths for a question, 60 of which result in "the answer is 110" and 40 of which result in "the answer is 150". Without a verifier (i.e. the original self-consistency method), "the answer is 110" wins the majority vote, so 110 is taken as the final answer and the 40 reasoning paths that result in 150 are discarded.

The verifier scores each reasoning path. The scoring function f is a trained binary classifier: its input is the question x, the path z and the answer y, and its output is the probability that the path is correct.

With a verifier, suppose the average score of the 60 reasoning paths for "the answer is 110" is 0.3 and the average score of the 40 reasoning paths for "the answer is 150" is 0.8. The final answer should then be 150, because 40 × 0.8 = 32 > 60 × 0.3 = 18.
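The 110-versus-150 example can be reproduced with a short sketch that compares plain majority voting with verifier-weighted voting. As a simplifying assumption, every path is given exactly the average score quoted in the text; the function names are hypothetical, and the scores stand in for the output of a trained verifier.

```python
from collections import defaultdict


def majority_vote(paths):
    """Plain self-consistency: every reasoning path counts as one vote."""
    totals = defaultdict(float)
    for answer, _score in paths:
        totals[answer] += 1.0
    return max(totals, key=totals.get)


def verifier_weighted_vote(paths):
    """Weighted voting: every path contributes its verifier score."""
    totals = defaultdict(float)
    for answer, score in paths:
        totals[answer] += score
    return max(totals, key=totals.get)


# (answer, verifier score) pairs; each score is set to the quoted average.
paths = [(110, 0.3)] * 60 + [(150, 0.8)] * 40

print(majority_vote(paths))           # 110 (60 votes against 40)
print(verifier_weighted_vote(paths))  # 150 (about 32 against about 18)
```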

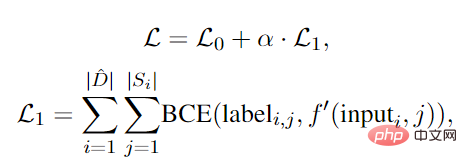

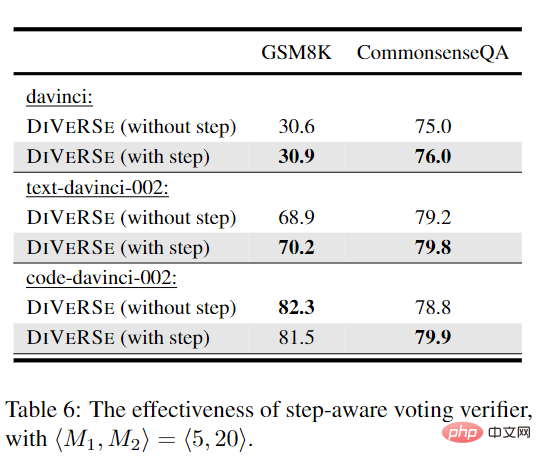

Third, because the answer is produced through multiple reasoning steps, when a path yields a correct answer, all of its steps can be considered to contribute to the final correctness. When a wrong answer is generated, however, it does not follow that every step was wrong or contributed to the error.

In other words, even when the result is wrong, some intermediate steps may still be correct, with later steps deviating and leading to the wrong final answer. DiVeRSe therefore assigns a fine-grained label to each step and proposes a step-aware verifier that judges the correctness of each reasoning step instead of looking only at the final answer.

The core is still a binary classifier, but the key question is how to obtain step-level negative labels. If the final answer is wrong, we cannot tell which step went wrong without human annotation, whereas a correct final answer suggests that the whole process was correct.

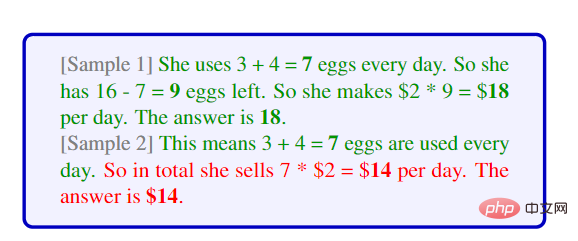

To address this, the researchers introduce the notion of support. In arithmetic tasks, for example, a step counts as supported when its intermediate result matches an intermediate result in another example.
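One way such step-level labels could be derived is sketched below. This is an illustration under stated assumptions, not the paper's implementation: each path is represented as a list of intermediate numeric results, the reference paths are taken to be ones that reach the correct answer, and every step after the first unsupported one is labelled negative. The function name `label_steps` and the sample numbers are hypothetical.

```python
def label_steps(negative_path, positive_paths):
    """Label each step of a wrong-answer path: 1 if supported, else 0.

    Assumption: a step is supported when its intermediate result also
    appears somewhere in a reference path that reached the correct answer.
    """
    supported_results = {result for path in positive_paths for result in path}
    labels = []
    broken = False
    for result in negative_path:
        if not broken and result in supported_results:
            labels.append(1)
        else:
            # Assumption: after the first unsupported step, all later
            # steps are treated as negative as well.
            broken = True
            labels.append(0)
    return labels


# Hypothetical intermediate results of one correct and one wrong path.
positive_paths = [[16, 7, 9, 18]]
negative_path = [16, 7, 23, 46]
print(label_steps(negative_path, positive_paths))  # [1, 1, 0, 0]
```

Labels of this kind would then serve as training targets for the step-aware verifier, so that correct early steps in a failed path are not penalized.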

Based on these three improvements, the researchers conducted experiments on 5 arithmetic reasoning datasets. The DiVeRSe method based on code-davinci-002 achieved new SOTA results, with an average improvement of 6.2%. On the commonsense reasoning tasks the method is less effective; the speculated reason is that commonsense reasoning is a multiple-choice task rather than an open-ended generation task, which results in more false-positive pseudo-examples.

On inductive reasoning, DiVeRSe achieved a score of 95.9% on the CLUTRR task, exceeding the previous SOTA fine-tuning result (28.9%).

In most experiments, extending the voting verifier to a step-aware version improves performance. For code-davinci-002 on GSM8K, however, the step-aware version of the verifier causes a slight decrease in performance.

A possible reason is that code-davinci-002 is more powerful and produces higher-quality reasoning paths for GSM8K, which reduces the need for step-level information. That is, text-davinci is more likely to generate short or incomplete reasoning paths, while code-davinci is better suited to generating long content.

The first author of the paper is Yifei Li. He graduated from Northeastern University with a bachelor's degree in software engineering in 2020 and is currently studying for a master's degree at Peking University. His main research direction is natural language processing, especially prompt-tuning and reasoning in large-scale language models.

The second author of the paper is Zeqi Lin, a DKI researcher at Microsoft Research Asia. He received his bachelor's degree and doctorate from Peking University in 2014 and 2019 respectively. His main research direction is machine learning and its applications in software analysis and data analysis.
