Written by Yan Zheng

It hasn't been this lively in a long time! A product that can spark intense discussion around the world is rare. After racking my brain, the only fitting description I could find is the phrase used by Nvidia CEO Jensen Huang: an "iPhone moment".

Five days after launch, it had more than one million users. Within two months, it had 100 million monthly active users.

So what is the return on investment of entering the ChatGPT race? The editor has sorted out ChatGPT's cost and revenue model, and briefly reviewed the positioning of the domestic giants, the related policies, and how developers should approach it. I hope it helps.

ChatGPT's arrival may be an "iPhone moment", but the rate at which it burns money is staggering. Below are estimates compiled from relevant institutions, covering three aspects: initial investment cost, training cost, and operating cost.

Guosheng Securities, a well-known domestic securities firm, has estimated the computing power costs OpenAI faces at the current stage. Based on an average of about 13 million unique visitors using ChatGPT every day in January this year, the corresponding chip demand is more than 30,000 NVIDIA A100 GPUs, the initial investment cost is about 800 million US dollars, and the daily electricity bill is around 50,000 US dollars.

Once the chips and the computing power are in place, the cost of training the model is the next big threshold. Guosheng Securities estimates, based on the number of parameters and tokens, that training GPT-3 once costs about US$1.4 million; applying the same formula to some larger LLMs gives training costs between US$2 million and US$12 million.
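The article does not reproduce Guosheng's formula. As a rough illustration only, one widely used rule of thumb puts training compute at about 6 floating-point operations per parameter per token. The sketch below applies it to GPT-3's published size (175 billion parameters, about 300 billion training tokens). The GPU peak throughput, utilization, and hourly price are placeholder assumptions, not figures from the report, and the function names are hypothetical.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per token."""
    return 6 * params * tokens


def training_cost_usd(params: float, tokens: float,
                      gpu_peak_flops: float, utilization: float,
                      usd_per_gpu_hour: float) -> float:
    """Convert total FLOPs into GPU-hours, then into dollars."""
    effective_flops_per_s = gpu_peak_flops * utilization
    gpu_hours = training_flops(params, tokens) / effective_flops_per_s / 3600
    return gpu_hours * usd_per_gpu_hour


# Published GPT-3 figures.
GPT3_PARAMS = 175e9
GPT3_TOKENS = 300e9

# Placeholder assumptions (NOT from the Guosheng report).
ASSUMED_PEAK_FLOPS = 312e12      # nominal A100 dense BF16 peak
ASSUMED_UTILIZATION = 0.4        # fraction of peak actually achieved
ASSUMED_USD_PER_GPU_HOUR = 2.0   # hypothetical rental price

flops = training_flops(GPT3_PARAMS, GPT3_TOKENS)
cost = training_cost_usd(GPT3_PARAMS, GPT3_TOKENS, ASSUMED_PEAK_FLOPS,
                         ASSUMED_UTILIZATION, ASSUMED_USD_PER_GPU_HOUR)

print(f"Training compute: {flops:.2e} FLOPs")
print(f"Estimated cost:   ${cost:,.0f}")
```

With these placeholder inputs the result lands near the quoted US$1.4 million, but that agreement depends entirely on the assumed utilization and price; changing either one moves the estimate proportionally.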

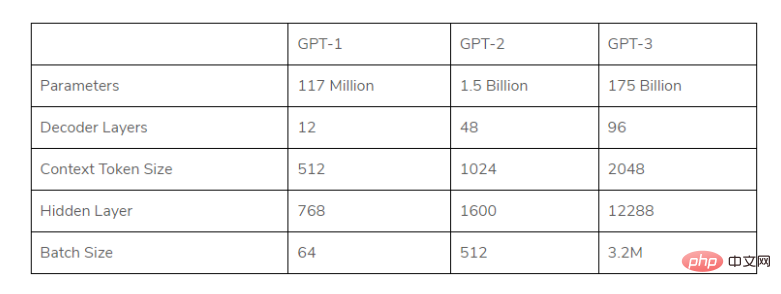

Figure: List of GPT parameters of each generation

(Under the large-model framework, the number of parameters in each generation of the GPT model used by ChatGPT is expanding rapidly, and the data volume and cost required for pre-training are growing just as fast.)

Then there is the operating cost, starting with the cost per reply. Musk once asked on Twitter what ChatGPT's average cost per chat is. OpenAI CEO Altman replied, somewhat ruefully, that the average cost per chat is "single-digit cents", and added that the company needs to work on optimizing costs.

So what exactly is this "single digit"? The author worked out an answer from the following data.

· In January 2023, an analysis report by investment bank Morgan Stanley stated that a ChatGPT reply costs approximately 6 to 28 times as much as an average Google search query.

· According to a recent Morgan Stanley analysis, Google's 3.3 trillion searches last year cost about 0.2 cents each on average.

Multiplying 0.2 cents by 6 and by 28, a ChatGPT reply costs about 1.2 to 5.6 cents.

So how many queries are there every day? According to Similarweb data, chat.openai.com (the official ChatGPT website) attracted as many as 25 million daily visitors in the week of January 27 to February 3, 2023.

Assuming that each user asks about 10 questions a day, there are about 250 million queries every day. At 1.2 to 5.6 cents per reply, the cost of answering chats alone comes to roughly 3 to 14 million US dollars a day. (Of course, the cost will slowly come down.)

A training bill of around ten million US dollars is just the admission fee; operating costs of millions or even more than ten million US dollars a day are what make it so hard to bear!
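The chain of estimates above is simple multiplication, and a short sketch makes it easy to rerun with different inputs. The constants are the figures quoted in the article; the 10 questions per visitor is the article's own assumption, and the function names are hypothetical.

```python
GOOGLE_QUERY_COST_CENTS = 0.2    # Morgan Stanley estimate quoted above
CHATGPT_MULTIPLIER = (6, 28)     # ChatGPT reply vs. Google query
DAILY_VISITORS = 25_000_000      # Similarweb figure quoted above
QUESTIONS_PER_VISITOR = 10       # the article's assumption


def reply_cost_cents(base_cents, multiplier_range):
    """Low and high cost of one reply, in cents."""
    low, high = multiplier_range
    return base_cents * low, base_cents * high


def daily_cost_usd(visitors, questions_each, cost_range_cents):
    """Low and high daily cost in US dollars for all replies."""
    replies = visitors * questions_each
    low, high = cost_range_cents
    return replies * low / 100, replies * high / 100


reply_cents = reply_cost_cents(GOOGLE_QUERY_COST_CENTS, CHATGPT_MULTIPLIER)
daily_usd = daily_cost_usd(DAILY_VISITORS, QUESTIONS_PER_VISITOR, reply_cents)

print(f"Cost per reply: {reply_cents[0]:.1f} to {reply_cents[1]:.1f} cents")
print(f"Daily cost: ${daily_usd[0]:,.0f} to ${daily_usd[1]:,.0f}")
```

Under these inputs the daily figure works out to roughly 3 to 14 million US dollars. The result scales linearly with the questions-per-visitor assumption, which is the least certain number in the chain.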

Li Di, CEO of Xiaobing (Xiaoice), did his own calculation: "If we ran at ChatGPT's cost level, I would burn 300 million yuan every day, and more than 100 billion yuan a year."

Recently, John Hennessy, chairman of Google's parent company Alphabet, also expressed concern about cost: a search based on a large language model may cost 10 times as much as a standard keyword search. The technology could incur billions of dollars in additional costs, which is expected to affect Alphabet's profitability.

The threshold for building a true "ChatGPT" is therefore very high (and the "moats" of big companies will be correspondingly deep). Even international giants like Google have to think twice.

Can such huge investment and spending bring the expected revenue? OpenAI's turnaround seems to answer this question.

Last year, OpenAI's net loss reached US$545 million. Yet after witnessing the impact of ChatGPT, people clearly do not doubt its ability to make money. Not long ago, OpenAI said it expects US$200 million in revenue this year and US$1 billion next year.

Currently, OpenAI's revenue model around ChatGPT falls roughly into two types:

1) Providing an API for natural language processing tasks, charged by the amount of text processed (counted in tokens);

2) ChatGPT Plus, which offers privileges such as priority responses when the servers are busy, for a subscription fee of US$20/month.

In addition, if we consider the new Bing together with ChatGPT, this kind of conversation-based intelligent search will also generate substantial advertising revenue.

That said, Altman, the head of OpenAI, does not seem to have a firm idea about commercialization. Asked about it previously, he said: "I don't know." But as ChatGPT becomes deeply integrated with follow-on businesses, its ability to attract money may far exceed our imagination.

Back in China, companies may be more concerned with business scenarios: whether to target enterprises (the B-end) or consumers (the C-end) first.

At a time when Internet traffic has peaked, the giants are busy looking for a breakthrough. In AIGC, the major technology companies seem to have seen a glimmer of light and have begun to invest actively, hoping to turn this emerging technology trend into a competitive advantage in the market.

AI R&D teams such as Baidu PaddlePaddle, Tencent Youtu, Alibaba DAMO Academy, ByteDance, and NetEase have begun a new round of competition over ChatGPT-like products.

In fact, the large-model track is not new. Many domestic companies have their own accumulated experience and domain strengths.

But for now, Baidu is undoubtedly the most prominent of them.

On Thursday, during the Q4 and full-year earnings conference call, Baidu founder Robin Li stressed his excitement and expectations for the upcoming "Wenxin Yiyan", and even made a bold statement: "We believe it will change the rules of the game for cloud computing."

Judging from the plans announced on the call, "Wenxin Yiyan" has taken center stage in Baidu's subsequent business development: multiple mainstream businesses, such as search, Smart Cloud, Apollo autonomous driving, and Xiaodu smart devices, will be integrated with it. Wenxin Yiyan itself will be offered externally through Baidu Smart Cloud. March is approaching, and "Wenxin Yiyan", now in its "sprint period", is about to break out of its cocoon.

More than 10 years of heavy R&D investment are waiting for a breakthrough. Search faces the C-end, and Smart Cloud faces the B-end. Baidu, an elephant that once missed the mobile Internet era, now has a new, across-the-board opportunity to "dance".

Technical concepts emerge one after another, and most "buzzwords" cannot withstand the hype.

The last time, it was the metaverse: the discussion never got beyond imagining "what is the metaverse", and then, as people turned to repeatedly weighing the "VUCA era", the noise gradually died down.

For a potential technology trend to gain a foothold in society, it must ultimately provide real value. AIGC technology, represented by ChatGPT, also needs to overcome the old pain point that "AI is hard to put into practice", so that AI genuinely improves existing user experiences, creates new ones, and creates social value. This is the core of the matter.

The good news is that we can see its value. For text-based tasks such as writing emails, brainstorming, rewriting code, and writing summaries, it can indeed save practitioners a great deal of time and cost. I believe that in the future AIGC will also perform well in diverse content scenarios such as voice, images, and video.

Of course, beyond core value, a technology also depends on soil that encourages innovation. The Ministry of Science and Technology responded to questions about ChatGPT yesterday: "For any new technology, including AI, our country has taken corresponding measures in terms of ethics, seeking benefits and avoiding harms in the development of science and technology, so that the benefits are better realized."

Returning to developers: what do we technical people need to do? Abandon the impulse to follow the trend and build a solid understanding of the problems to be solved. That is more pragmatic than just watching the excitement of stock prices rising and falling. For example, OpenAI developers are working on practical ways to reduce model training costs.

On the 25th, Wang Jian, academician of the Chinese Academy of Engineering and founder of Alibaba Cloud, said at the 2023 Global Artificial Intelligence Pioneer Conference that computing power is no longer a bottleneck or an excuse for developers. Developers need a comprehensive understanding of the entire industry and of the capabilities and boundaries of the algorithms.

Wang Jian said that the developers who truly build top-level artificial intelligence start by finding problems and then use existing technologies to solve them. Solving the problem is itself a breakthrough.

“It’s important that you do the right thing at the right time.”

