In the era of data intelligence, computing is both a necessity and a pain point. Its defining feature can be summed up in one word: big.

"Big" breaks down into three characteristics: large batches of data, serial dependencies in computation, and high computational complexity.

This covers the complexity of both data and algorithms. Data, algorithms, and computing power are the three elements of the intelligent era, and the complexity of the first two is ultimately borne by computing power.

As a result, the industry's demand for computing power has expanded rapidly in both space and time, like a tsunami.

The GPU has a way to split this tsunami into thousands of trickles in space, shorten the water's path in time, and prune the path's branches in structure, breaking large-scale tasks down layer by layer into small-scale ones. It carries the massive demand for computing power with ease and has become the computing foundation of the intelligent era. In response to the three characteristics above, the GPU applies three methods (parallelism, fusion, and simplification) to accelerate at the operator level, guided by metrics such as throughput and GPU memory. The same methodology also applies to the industrialization of large models.

As underlying infrastructure such as chips, computing power, and data has improved, the global AI industry is gradually moving from computational intelligence to perceptual and cognitive intelligence. Accordingly, an industrial system of division of labor and collaboration has formed around "chips, computing power facilities, AI frameworks & algorithm models, and application scenarios". Since 2019, AI large models have significantly improved the ability to solve problems in a generalized way, and "large models and small models" have gradually become the mainstream technology route in the industry, accelerating the development of the global AI industry as a whole.

Not long ago, DataFun held a sharing event on "AI Large Model Technology Roadmap and Industrialization Implementation Practice". Six experts from NVIDIA, Baidu, ByteDance's Volcano Translation, Tencent WeChat, and others shared AI large model technology routes and industrial implementation practices, covering model training technology and inference solutions, multilingual machine translation applications, and the development and deployment of large-scale language models. When they deployed large models in industry, they largely adopted the methods of parallelism, fusion, and simplification, and extended them from the training and inference levels to the algorithm modeling level.

The parallel method trades space for time: it breaks the tsunami into trickles. Specifically, in computations over large batches of data, each step takes a relatively long time. The GPU uses parallel computing, that is, it runs data without computational dependencies in parallel as far as possible and splits large batches into small ones, to reduce the GPU's idle waiting time at each step and improve computing throughput.

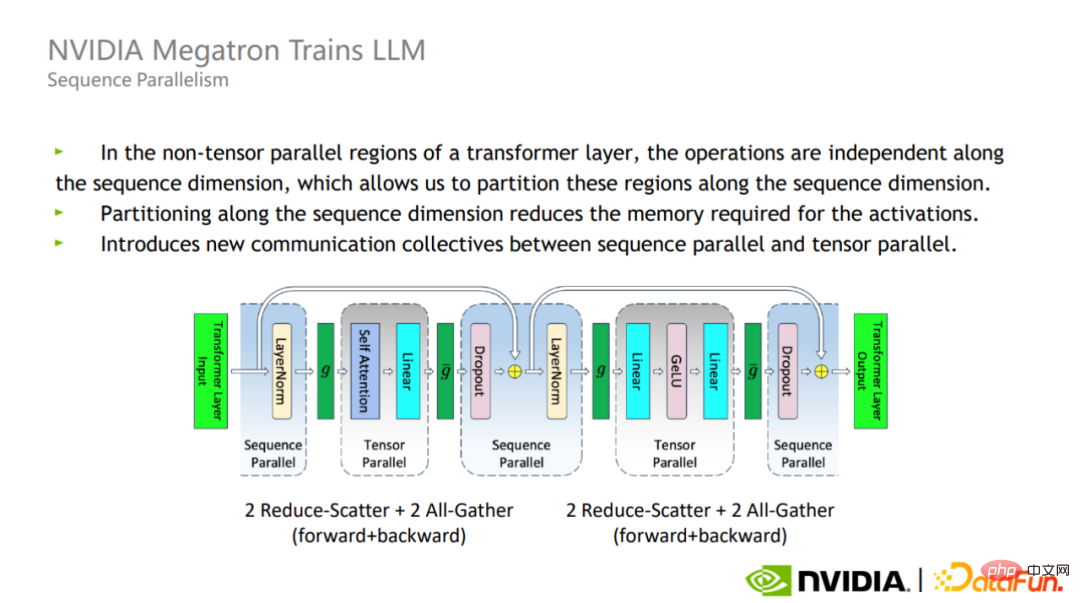

To actually complete the training of large models, an efficient software framework is needed so that training achieves high computational efficiency on a single GPU, on a single node, and even on a large-scale cluster. NVIDIA therefore developed the Megatron training framework. Megatron uses optimizations such as model parallelism and sequence parallelism to train large Transformer models efficiently, and it can train models with trillions of parameters.

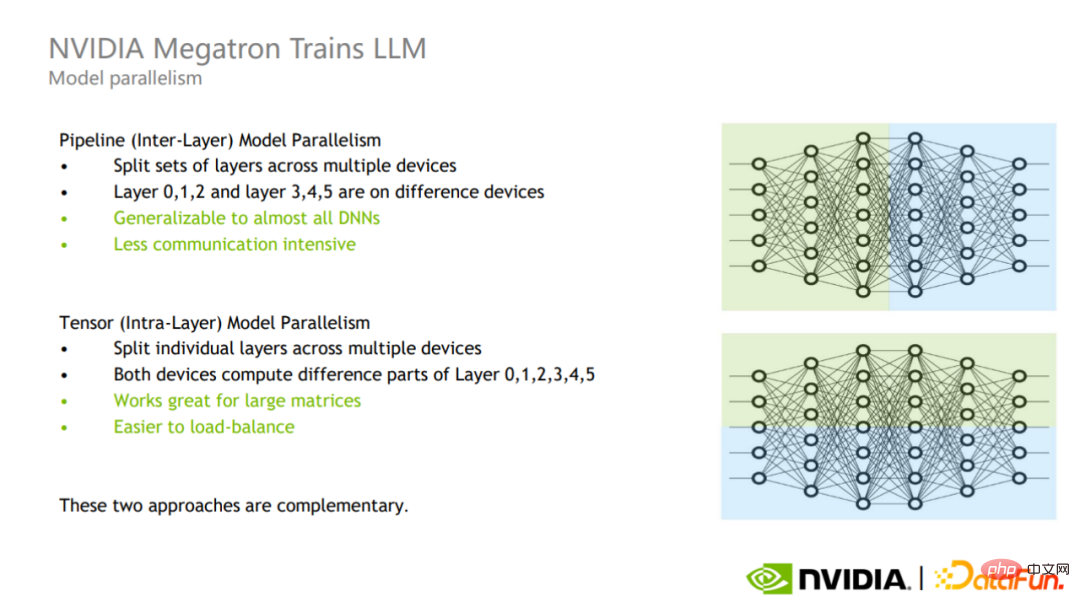

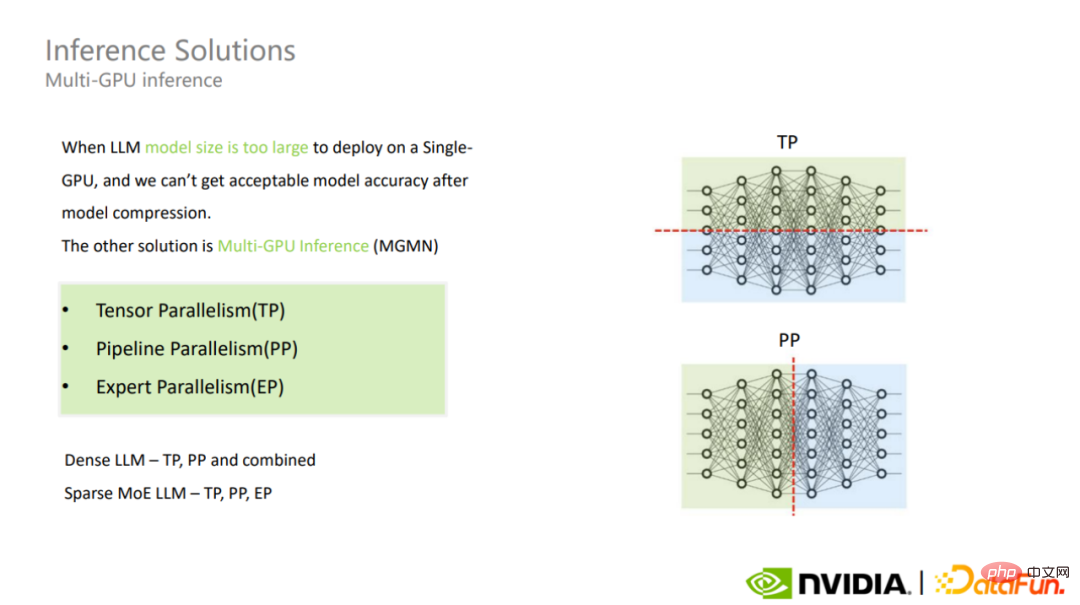

At the algorithm modeling level, Volcano Translation and Baidu mainly explored modeling methods such as MoE models.

Model parallelism can be divided into Pipeline parallelism and Tensor parallelism.

Pipeline parallelism is inter-layer parallelism (upper part of the figure): it assigns different layers to different GPUs. In this mode communication occurs only at layer boundaries, so both the number of communications and the amount of data transferred are small, but it introduces additional GPU idle time (pipeline bubbles).

Tensor parallelism is intra-layer parallelism (lower part of the figure): it splits the computation of a single layer across GPUs. This mode is easier to implement, works better on large matrices, and balances load across GPUs more evenly, but both the number of communications and the amount of data transferred are relatively large.

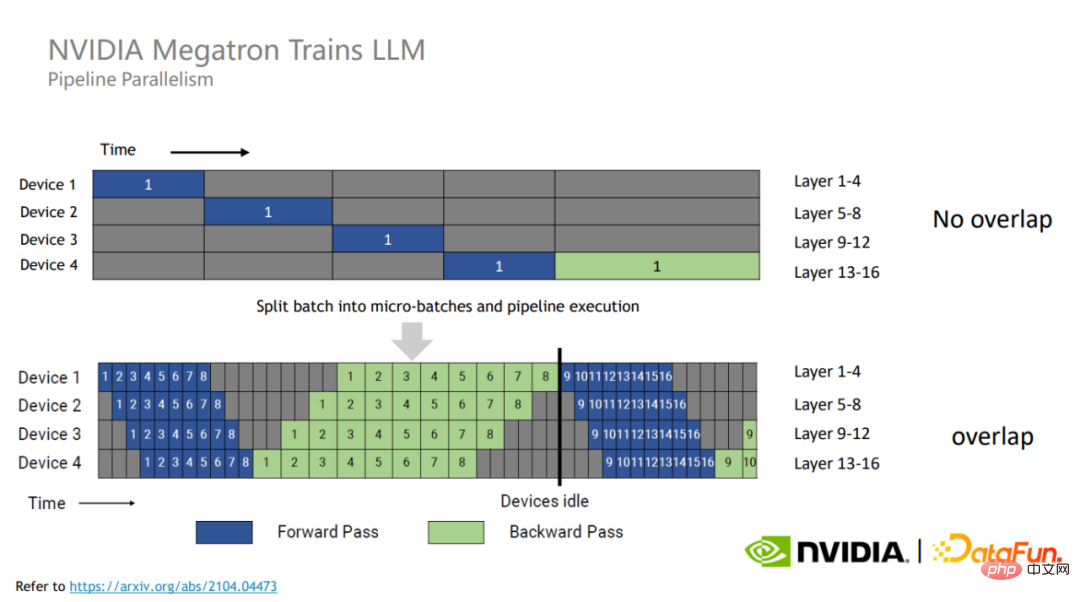

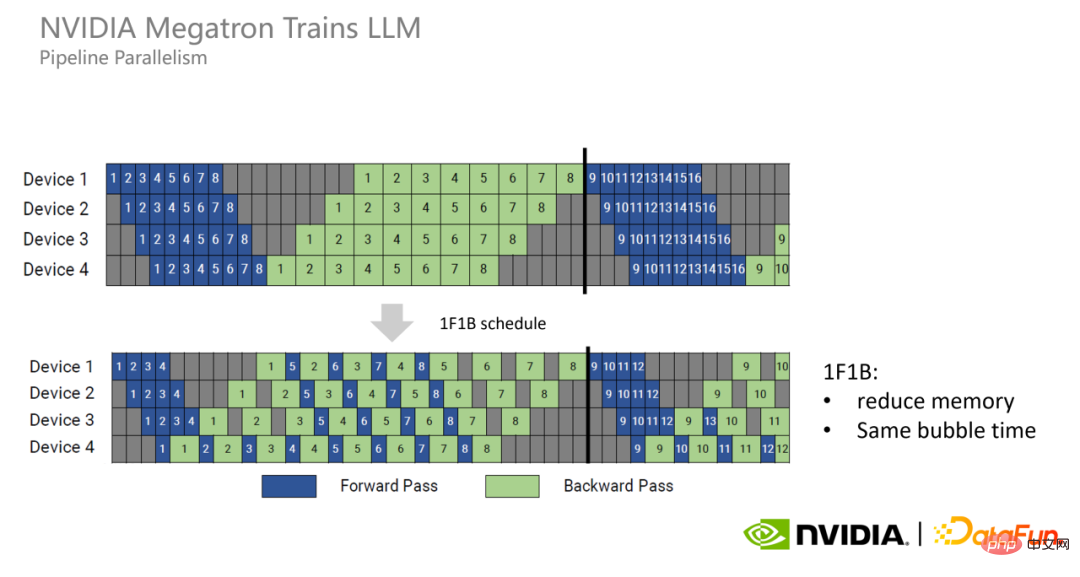

In order to make full use of GPU resources, Megatron divides each training batch into smaller micro batches.

Since different micro batches have no data dependencies between them, each can fill the others' waiting time, which improves GPU utilization and therefore overall training performance.

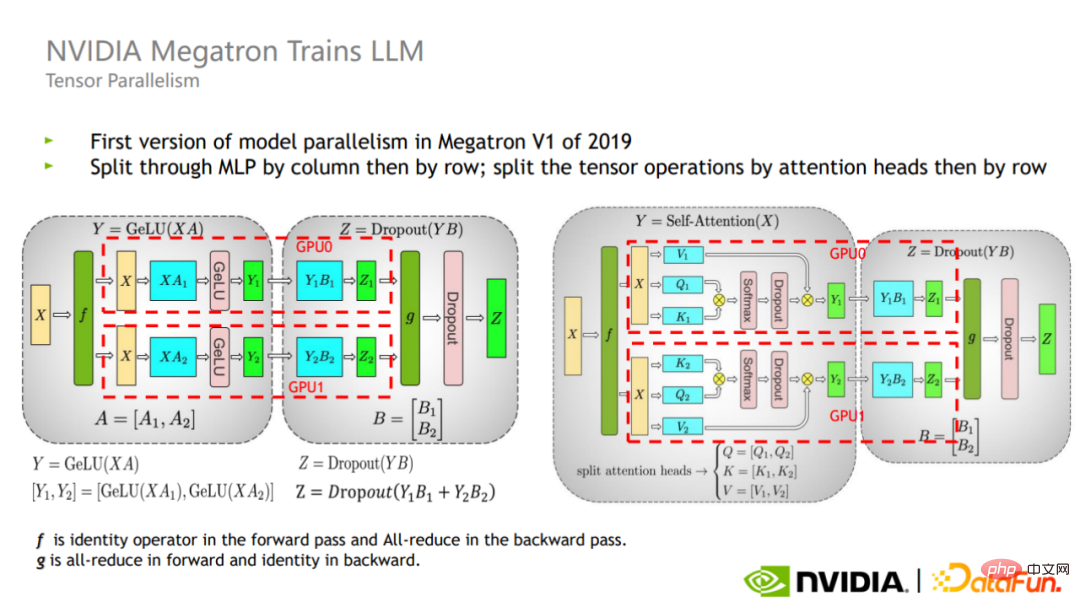

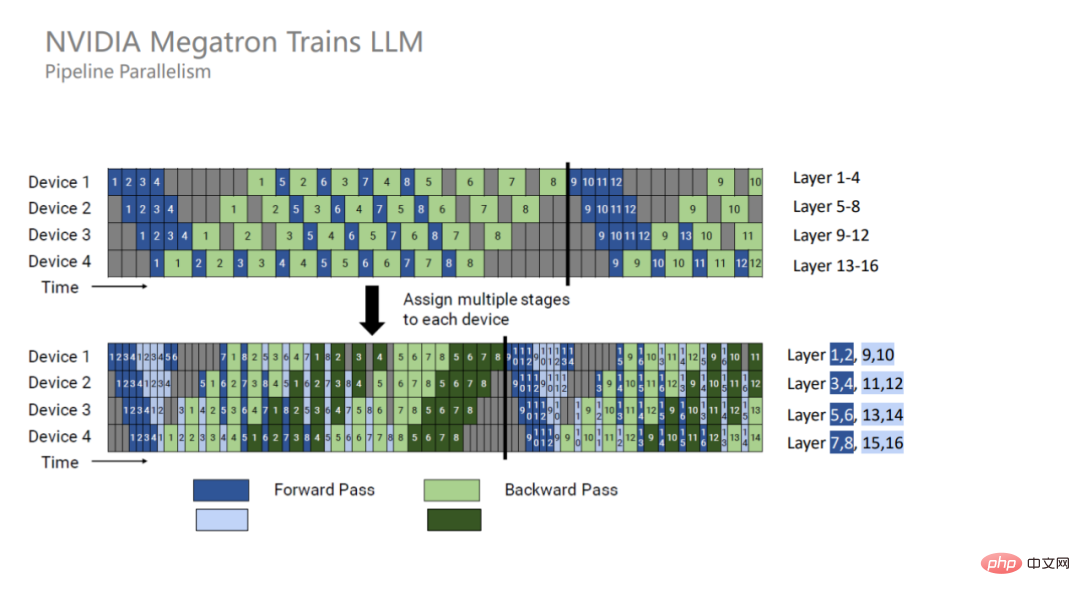

Tensor parallelism splits the computation of each operator across GPUs. For a matrix layer, there are two ways to split: by rows (horizontal) and by columns (vertical).

As shown in the figure, Megatron introduces these two segmentation methods in the attention and MLP parts of the Transformer block.

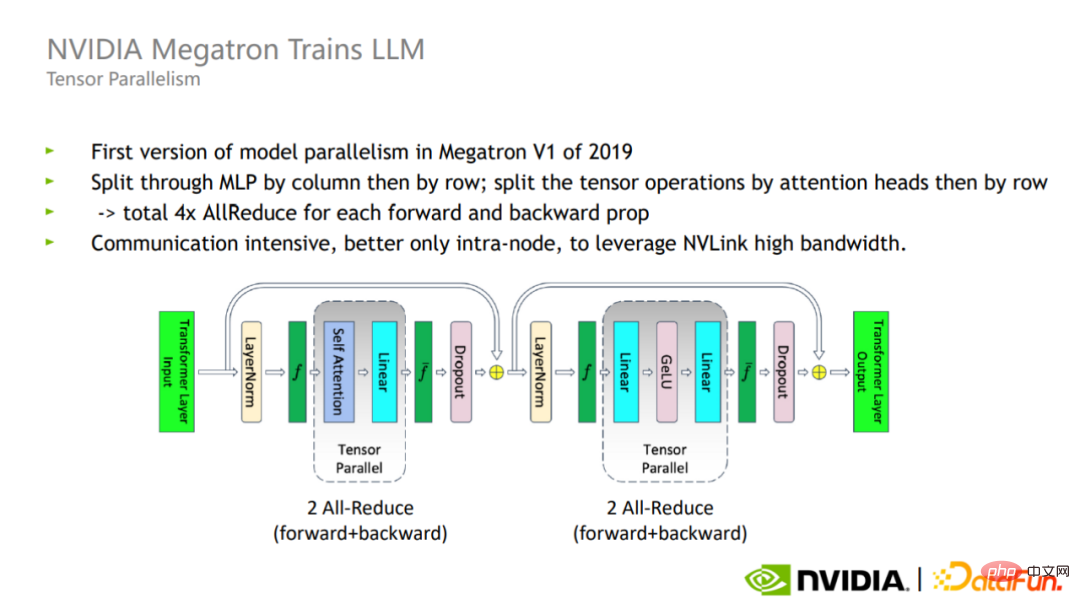
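The two splitting directions can be illustrated on an MLP block. The following is a minimal single-process sketch, not Megatron's implementation: it assumes the first weight matrix is split by columns and the second by rows, and it simulates the All-reduce with a plain sum. All function names are hypothetical.

```python
import math

def matmul(a, b):
    """Plain matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def gelu(m):
    """Element-wise exact GeLU."""
    return [[0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0))) for v in row] for row in m]

def split_columns(m, parts):
    """Vertical cut: each shard keeps a contiguous block of columns."""
    width = len(m[0]) // parts
    return [[row[i * width:(i + 1) * width] for row in m] for i in range(parts)]

def split_rows(m, parts):
    """Horizontal cut: each shard keeps a contiguous block of rows."""
    height = len(m) // parts
    return [m[i * height:(i + 1) * height] for i in range(parts)]

def all_reduce_sum(partials):
    """Stand-in for an All-reduce: element-wise sum of per-GPU partial results."""
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*partials)]

def mlp_reference(x, a, b):
    return matmul(gelu(matmul(x, a)), b)

def mlp_tensor_parallel(x, a, b, world_size):
    a_shards = split_columns(a, world_size)  # GeLU is element-wise, so it applies per shard
    b_shards = split_rows(b, world_size)
    partials = [matmul(gelu(matmul(x, a_i)), b_i)
                for a_i, b_i in zip(a_shards, b_shards)]
    return all_reduce_sum(partials)          # one communication in the forward pass

x = [[0.5, -1.0, 0.25, 2.0], [1.5, 0.0, -0.5, 1.0]]
a = [[0.1 * (i + j) for j in range(4)] for i in range(4)]
b = [[0.2 * (i - j) for j in range(2)] for i in range(4)]
reference = mlp_reference(x, a, b)
sharded = mlp_tensor_parallel(x, a, b, world_size=2)
print(reference)
print(sharded)
```

Because the column cut keeps each GPU's slice of the hidden activation self-contained, no communication is needed between the two matrix multiplications; only the final partial sums have to be combined.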

In Tensor parallel mode, the forward and backward passes of each Transformer layer require four All-reduce communications in total. Because All-reduce moves a large volume of data, Tensor parallelism is better suited to use within a single node.

Combining Pipeline parallelism and Tensor parallelism, Megatron can scale training from a 1.7-billion-parameter model on 32 GPUs to a 1-trillion-parameter model on 3072 GPUs.

Tensor parallelism does not actually split Layer-norm and Dropout, so these two operators are replicated on every GPU.

These operations do not require much computation themselves, but they occupy a large amount of activation memory.

To address this, Megatron proposed the Sequence parallel optimization. Its advantage is that it does not increase communication volume while greatly reducing memory usage.

Since Layer-norm and Dropout are independent along the sequence dimension, they can be split according to the Sequence dimension.
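A small sketch can show why this split is safe for Layer-norm. It assumes a toy Layer-norm without learned scale or bias, simulates the GPUs with list slices, and uses hypothetical function names; it is not Megatron's code.

```python
import math

def layer_norm_token(vec, eps=1e-5):
    """Normalize one token's hidden vector; no other token is involved."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]

def layer_norm(seq):
    return [layer_norm_token(tok) for tok in seq]

def sequence_parallel_layer_norm(seq, world_size):
    chunk = len(seq) // world_size
    # Each simulated GPU holds only 1/world_size of the tokens and their activations.
    shards = [seq[r * chunk:(r + 1) * chunk] for r in range(world_size)]
    outputs = [layer_norm(shard) for shard in shards]
    # Stand-in for an all-gather along the sequence dimension.
    return [tok for shard in outputs for tok in shard]

sequence = [[float(t + h * h) for h in range(4)] for t in range(8)]  # 8 tokens, hidden size 4
full = layer_norm(sequence)
sharded = sequence_parallel_layer_norm(sequence, world_size=4)
print(full == sharded)
```

Since each token is normalized using only its own hidden vector, the sharded result matches the unsharded one while each GPU stores a fraction of the activations.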

Even with Sequence parallelism, memory usage remains very high for very large models. Megatron therefore introduced activation recomputation.

Megatron's approach is to find operators that need little computation but occupy a lot of memory, such as Softmax and Dropout in Attention. Recomputing the activations of these operators significantly reduces memory usage without adding much computational overhead.

Combining Sequence parallelism with selective activation recomputation reduces memory usage to about 1/5 of the original. Compared with the baseline of recomputing all activations, memory usage is only about twice as high, while the computational overhead is significantly lower. As model scale grows, the share of computational overhead gradually shrinks: at the trillion-parameter scale, recomputation accounts for only about 2% of the total.

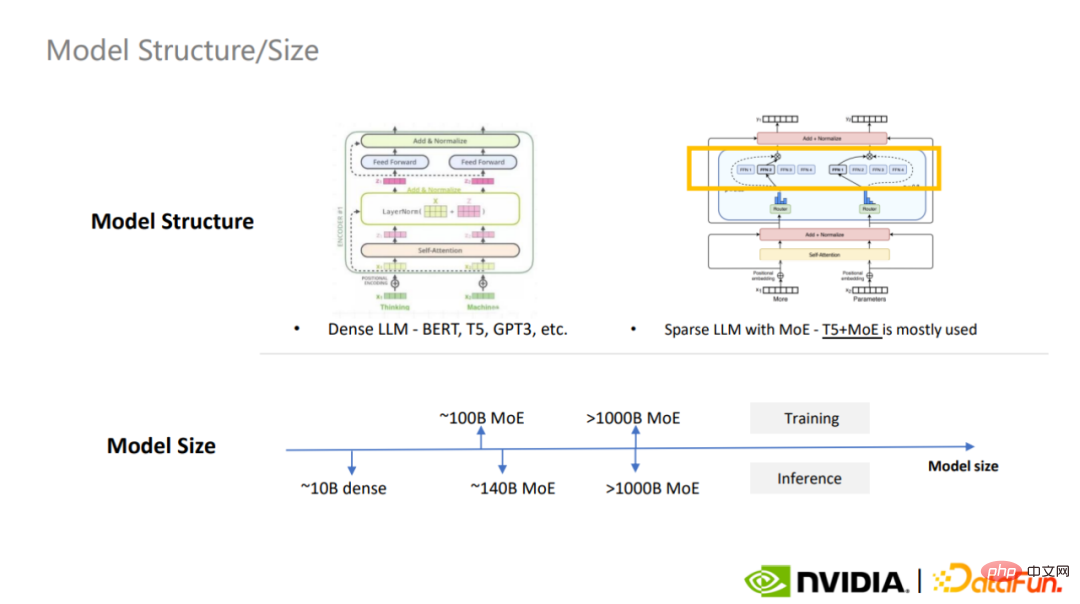

The MoE model has attracted growing attention in the industry thanks to its simple design and strong scalability.

The MoE model proposes the design idea of splitting a large model into multiple small models. Each sample only needs to activate part of the expert model for calculation, thus greatly saving computing resources.
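The idea of activating only some experts per sample can be sketched as top-k routing. This is a toy illustration under stated assumptions: the experts are simple scaling functions, the gate logits are given rather than learned, and the weights of the selected experts are renormalized to sum to one, which is one common choice rather than the only one.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(token, gate_logits, experts, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    out = [0.0] * len(token)
    for idx, weight in top_k_route(gate_logits, k):
        expert_out = experts[idx](token)
        out = [o + weight * v for o, v in zip(out, expert_out)]
    return out

# Four hypothetical experts; each just scales its input by a different factor.
experts = [lambda t, s=s: [s * v for v in t] for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.1, 2.0, -1.0, 1.5]  # assumed output of a gating network for one token
routes = top_k_route(gate_logits, k=2)
output = moe_forward([1.0, -1.0], gate_logits, experts, k=2)
print(routes)
print(output)
```

With `k=2`, only two of the four experts run for this token, so the compute per token stays roughly constant as more experts are added.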

The most commonly used dense large models are BERT, T5, and GPT-3, and the most commonly used sparse MoE model is T5 MoE. MoE is becoming a trend in large model building.

It can be said that MoE applies the idea of parallel computing at the algorithm modeling level.

The versatility of large models shows in many ways. Beyond the familiar points, such as the attention mechanism's weaker inductive bias, large model capacity, and large volumes of training data, the way tasks are modeled can also be optimized, and MoE is a typical example.

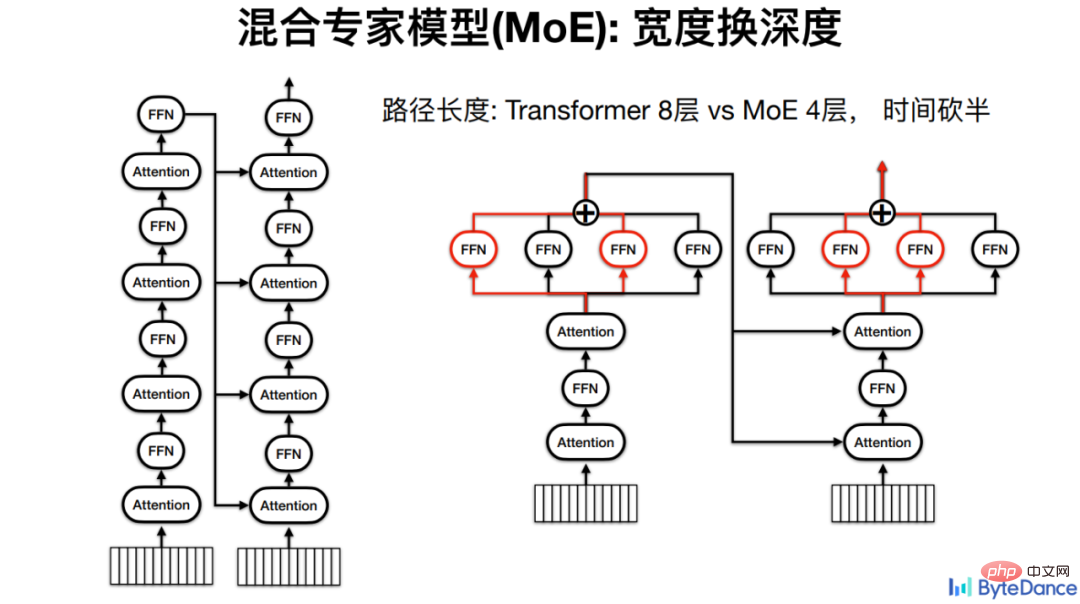

For Volcano Translation, the basic idea of MoE is to trade width for depth, because the deeper the model, the more computing layers there are and the longer inference takes.

For example, in a Transformer with a 4-layer Encoder and a 4-layer Decoder, every computation must pass through all 8 FFNs. In a mixture-of-experts model, the FFNs can be placed in parallel, which ultimately halves the computation path and therefore the inference time.

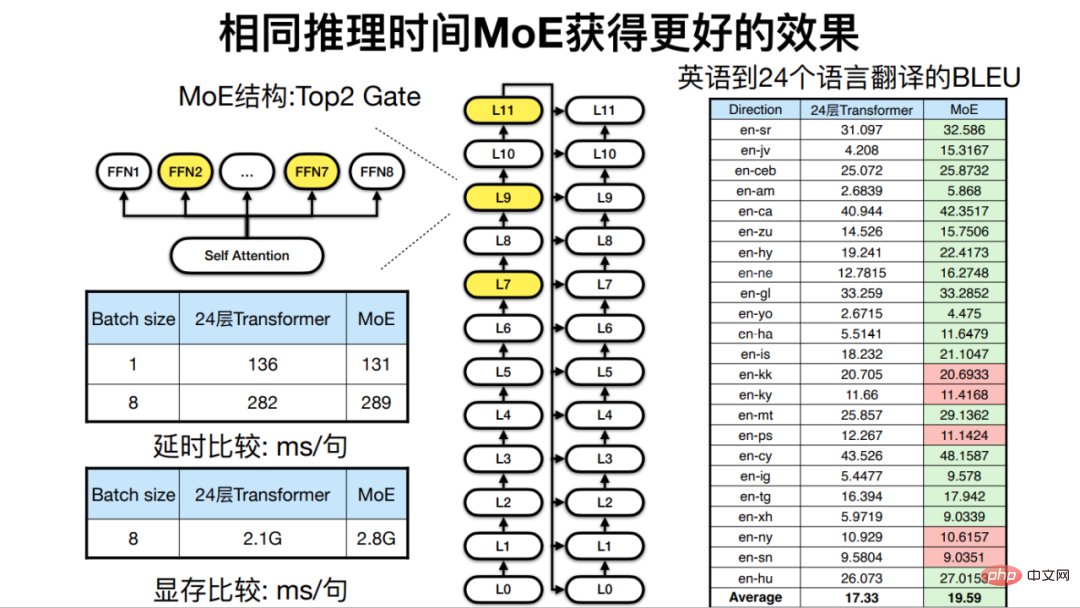

At the same inference time, that is, at similar model depth, MoE can increase the model width, so the final machine translation quality also improves.

For a multilingual translation task covering 24 African languages plus English and French, Volcano Translation developed a MoE model with a 128-layer Transformer and 24 expert layers, which achieved better translation results than traditional architectures.

However, the name "expert model" in Sparse MoE may be something of a misnomer, because within a single sentence, for example, each Token may pass through different experts.

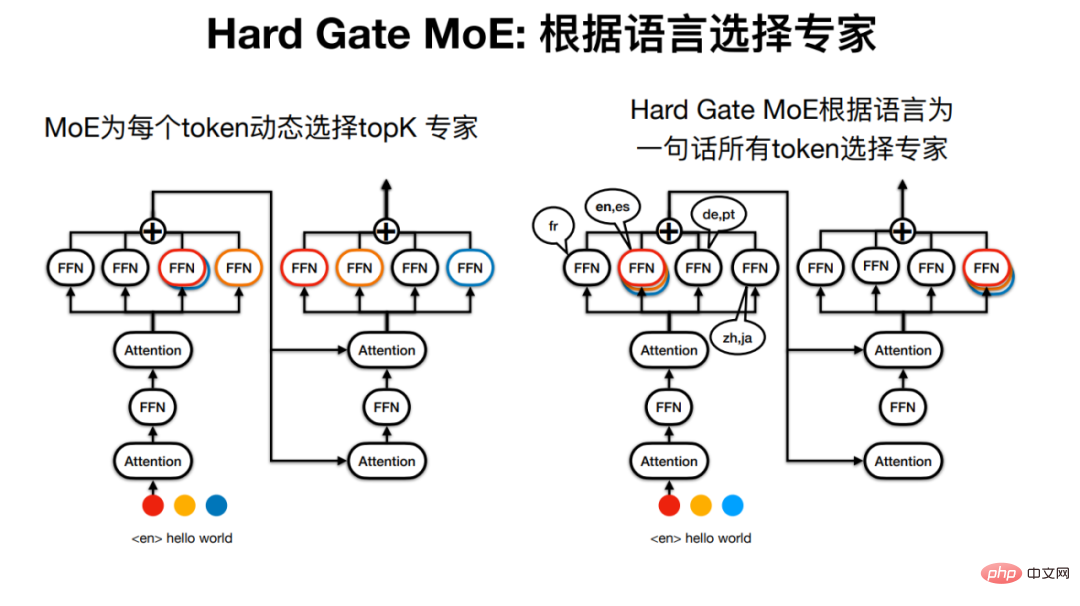

Volcano Translation therefore developed Hard Gate MoE, in which the experts a sentence passes through are determined by its language. This makes the model structure simpler, and experimental results show that its translation quality is also better.

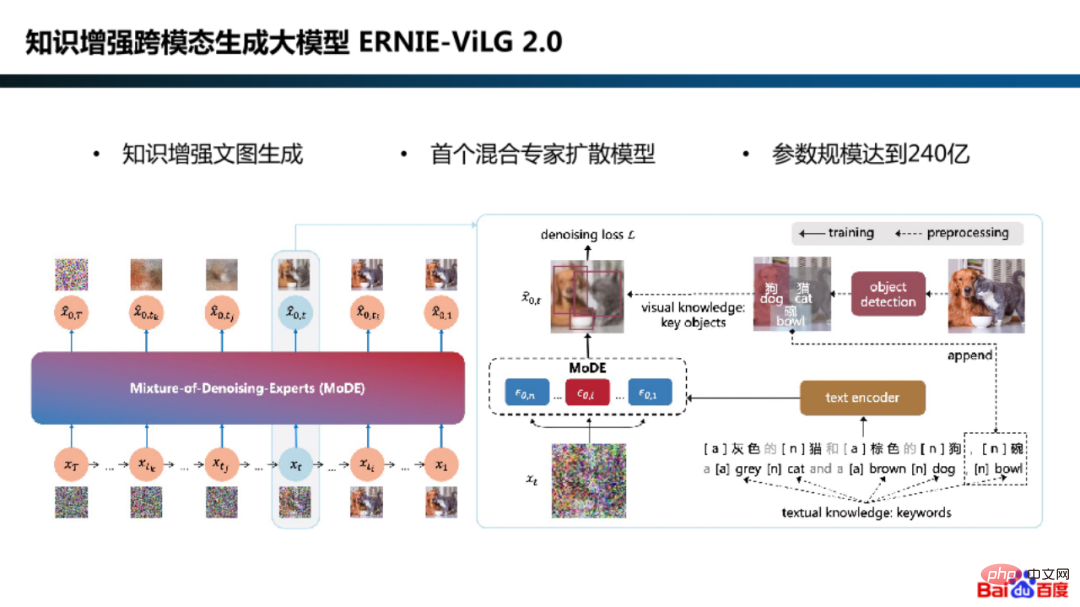
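The contrast with token-level routing can be sketched in a few lines. The language codes and the language-to-expert table below are hypothetical, chosen only for illustration; the actual mapping used by Volcano Translation is not given in this article.

```python
# Hypothetical mapping from language code to expert index.
LANGUAGE_TO_EXPERT = {"sw": 0, "ha": 1, "yo": 2}

def hard_gate_route(sentence_tokens, language):
    """Every token in the sentence goes to the expert chosen by the language."""
    expert = LANGUAGE_TO_EXPERT[language]
    return [expert] * len(sentence_tokens)

assignment = hard_gate_route(["tok1", "tok2", "tok3"], "ha")
print(assignment)
```

Because routing depends only on the language, no gating network has to be evaluated per token, and every token in a sentence shares the same expert.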

In exploring parallelism at the algorithm modeling level, Baidu also adopted a mixture-of-experts diffusion framework in ERNIE-ViLG 2.0, its knowledge-enhanced cross-modal generation large model.

Why use expert models for diffusion models?

Because the modeling requirements differ across generation stages. In the initial stage, for example, the model focuses on learning to generate semantically meaningful images from Gaussian noise, while in the final stage it focuses on recovering image details from noisy images.

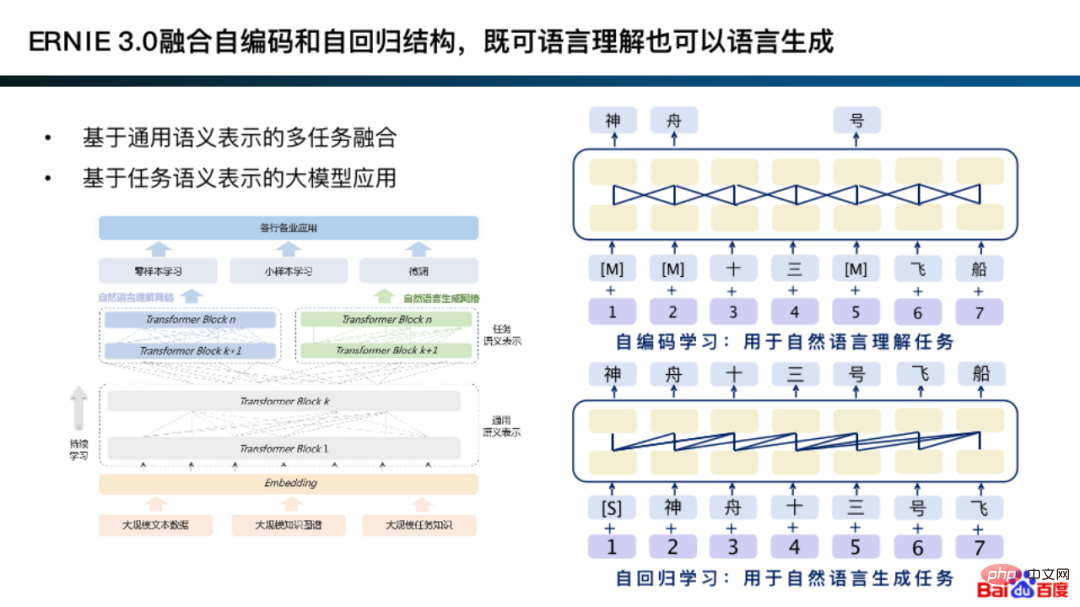

In fact, an early version of ERNIE 3.0 already integrated autoencoding and autoregression, combining the two modeling methods on top of a general semantic representation for specific generation and understanding tasks.

The basic idea of integrating autoencoding and autoregression is similar to the modeling methodology of expert models.

Specifically, on top of the universal representation, understanding tasks suit an autoencoding network structure and generation tasks suit an autoregressive one. In addition, this kind of modeling often learns better general representations.

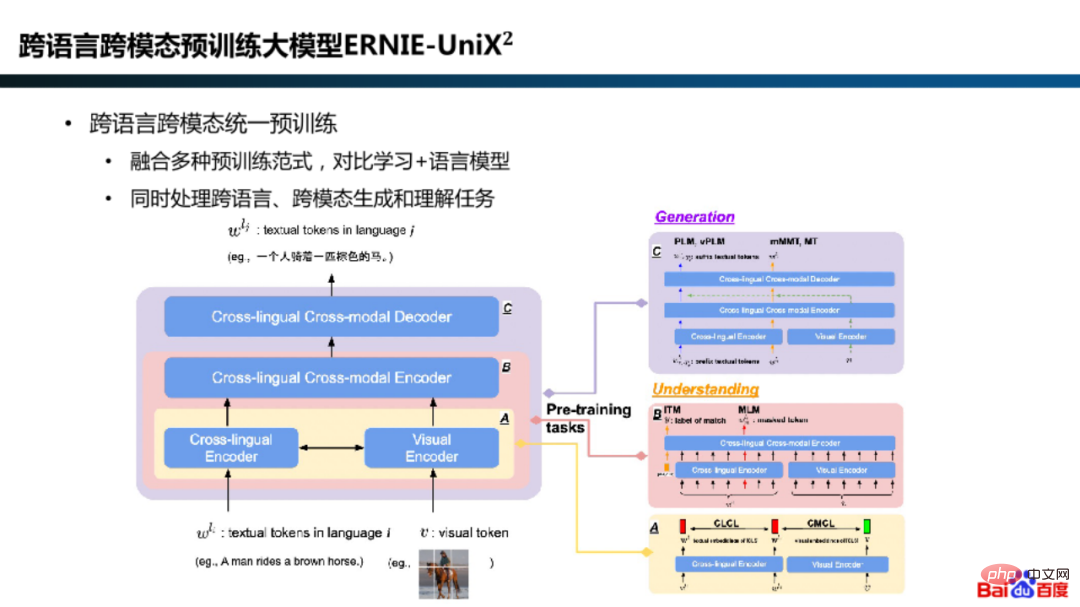

In addition, in the ERNIE-UniX2 model, Baidu unified multilingual and multimodal understanding and generation tasks by integrating pre-training paradigms such as contrastive learning and language modeling.

Once a MoE model has been trained, inference deployment is another stage where efficiency matters greatly.

When choosing a deployment solution for ultra-large-scale model inference, the first decision is whether to use single-card or multi-card inference, based on the model's parameter scale, model structure, GPU memory, and inference framework, as well as the trade-off between model accuracy and inference performance. If GPU memory is insufficient, model compression or multi-card inference will be considered.

Multi-card inference includes Tensor parallelism, Pipeline parallelism, Expert parallelism and other modes.

Applying different modes to very large MoE models raises different challenges. Tensor parallelism for MoE models is similar to that for dense models.

If you choose the Expert parallel mode, the Experts of each MoE Layer are placed on different GPUs, which may cause load-balancing problems: a large number of GPUs sit idle, and overall throughput ends up low. This is a key point to watch in multi-card MoE inference.
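The effect of skewed routing on throughput can be estimated with a short calculation. This sketch assumes that a step finishes only when the busiest GPU is done and that every token costs the same; the routing data is made up for illustration.

```python
def tokens_per_gpu(expert_assignments, experts_per_gpu, num_gpus):
    """Count how many tokens land on each GPU, given each token's expert index."""
    loads = [0] * num_gpus
    for expert in expert_assignments:
        loads[expert // experts_per_gpu] += 1
    return loads

def utilization(loads):
    """Average busy fraction when step time is set by the most loaded GPU."""
    busiest = max(loads)
    if busiest == 0:
        return 1.0
    return sum(loads) / (busiest * len(loads))

# Eight tokens, four experts, one expert per GPU; expert 0 is heavily favored.
skewed = tokens_per_gpu([0, 0, 0, 0, 0, 1, 2, 3], experts_per_gpu=1, num_gpus=4)
balanced = tokens_per_gpu([0, 0, 1, 1, 2, 2, 3, 3], experts_per_gpu=1, num_gpus=4)
print(skewed, utilization(skewed))
print(balanced, utilization(balanced))
```

In the skewed case three of the four GPUs wait on the first one for most of the step, which is the kind of imbalance that profiling is meant to surface.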

For Tensor parallelism and Pipeline parallelism, besides reducing inter-card communication through tuning, a more direct method is to increase inter-card bandwidth. When Expert parallelism causes load-balancing problems for a MoE model, they can be analyzed and optimized through Profiling.

Multi-card inference adds communication overhead, which has some impact on model inference latency.

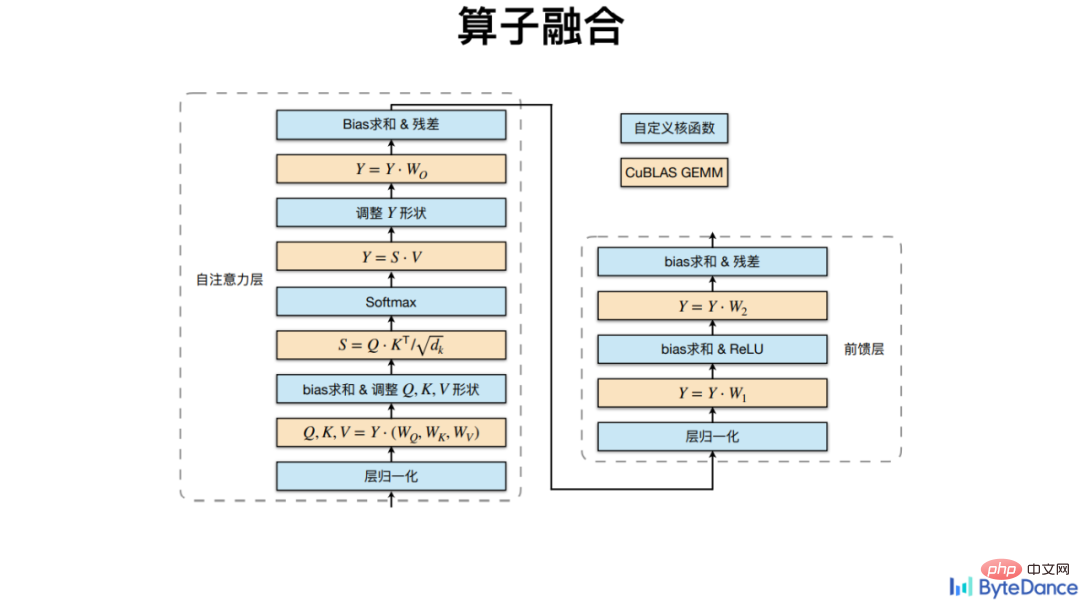

Fusion is a method for resolving the natural tension that arises in parallel computing. Parallel and serial computing are the two basic computing modes. When parallel computing is applied, the most typical difficulty is the large number of serial dependencies and the memory occupied by the resulting intermediate values. GPU memory is usually one of the hardware bottlenecks for large model training and inference.

For serial dependencies in massive computations, the most important method is to shorten the path of each trickle, that is, to reduce the intermediate stops along the way. Specifically, operator fusion merges operators that have sequential dependencies, which reduces memory usage.

Operator fusion is not only implemented at the computing level, but also at the operator design level.

In Pipeline parallelism, if the forward and backward passes are run as separate phases, memory usage becomes excessive.

Megatron therefore proposed a new Pipeline parallel mode, 1F1B. Each GPU alternates between the forward and backward passes of individual micro batches so that the memory they occupy is released as early as possible, which reduces memory usage.
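The memory benefit of alternating forward and backward passes can be seen by listing the order of operations on one pipeline stage. The sketch below models only the per-stage ordering, not timing or communication, and assumes the usual warm-up, steady-state, and cool-down structure of a non-interleaved 1F1B schedule; the function names are hypothetical.

```python
def all_forward_then_backward(num_microbatches):
    """Baseline: run every forward pass first, then every backward pass."""
    forwards = [("F", i) for i in range(num_microbatches)]
    backwards = [("B", i) for i in range(num_microbatches)]
    return forwards + backwards

def one_f_one_b(stage, num_stages, num_microbatches):
    """Operation order on one stage (0-indexed) under a 1F1B schedule."""
    warmup = min(num_stages - stage - 1, num_microbatches)
    steps = [("F", i) for i in range(warmup)]            # warm-up forwards
    for i in range(num_microbatches - warmup):           # steady state: one F, one B
        steps.append(("F", warmup + i))
        steps.append(("B", i))
    steps += [("B", i) for i in range(num_microbatches - warmup, num_microbatches)]
    return steps

def peak_in_flight(steps):
    """Largest number of micro batches whose activations are held at once."""
    live = peak = 0
    for kind, _ in steps:
        live += 1 if kind == "F" else -1
        peak = max(peak, live)
    return peak

baseline = all_forward_then_backward(8)
first_stage = one_f_one_b(stage=0, num_stages=4, num_microbatches=8)
last_stage = one_f_one_b(stage=3, num_stages=4, num_microbatches=8)
print(peak_in_flight(baseline), peak_in_flight(first_stage), peak_in_flight(last_stage))
```

In this model the baseline holds activations for all micro batches at once, while under 1F1B the number held is bounded by the number of pipeline stages rather than by the number of micro batches.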

1F1B does not reduce the bubble time. To reduce it further, Megatron proposed the interleaved 1F1B mode. Where each GPU was originally responsible for four consecutive layers, it now computes chunks of two consecutive layers, half the original size, so the bubble time is also halved.
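The halving can be checked with the bubble estimate commonly quoted for this schedule: with p pipeline stages, m micro batches, and v layer chunks per GPU, the bubble is roughly (p - 1) / (v * m) of the ideal compute time. The sketch below assumes that formula and uses made-up values for p and m.

```python
def bubble_fraction(num_stages, num_microbatches, chunks_per_gpu=1):
    """Pipeline bubble time as a fraction of the ideal (bubble-free) compute time."""
    return (num_stages - 1) / (chunks_per_gpu * num_microbatches)

plain = bubble_fraction(num_stages=4, num_microbatches=8)
interleaved = bubble_fraction(num_stages=4, num_microbatches=8, chunks_per_gpu=2)
more_microbatches = bubble_fraction(num_stages=4, num_microbatches=32)
print(plain, interleaved, more_microbatches)
```

The same estimate shows why splitting a batch into more micro batches helps: the bubble shrinks in proportion to m. Interleaving buys a further reduction at the cost of more communication between stages.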

In GPU computing, each computation step can be encapsulated in a GPU Kernel and executed on the GPU, and the kernels run sequentially. For generality, traditional operator libraries keep their operators very basic, so there are a great many of them. The drawback is high memory usage, because a large number of intermediate hidden representations must be stored. This also demands relatively high bandwidth, which may ultimately cause latency or performance losses.

Building on the cuBLAS matrix multiplication interface, Volcano Translation fuses the other, non-matrix-multiplication operators, including Softmax and LayerNorm.

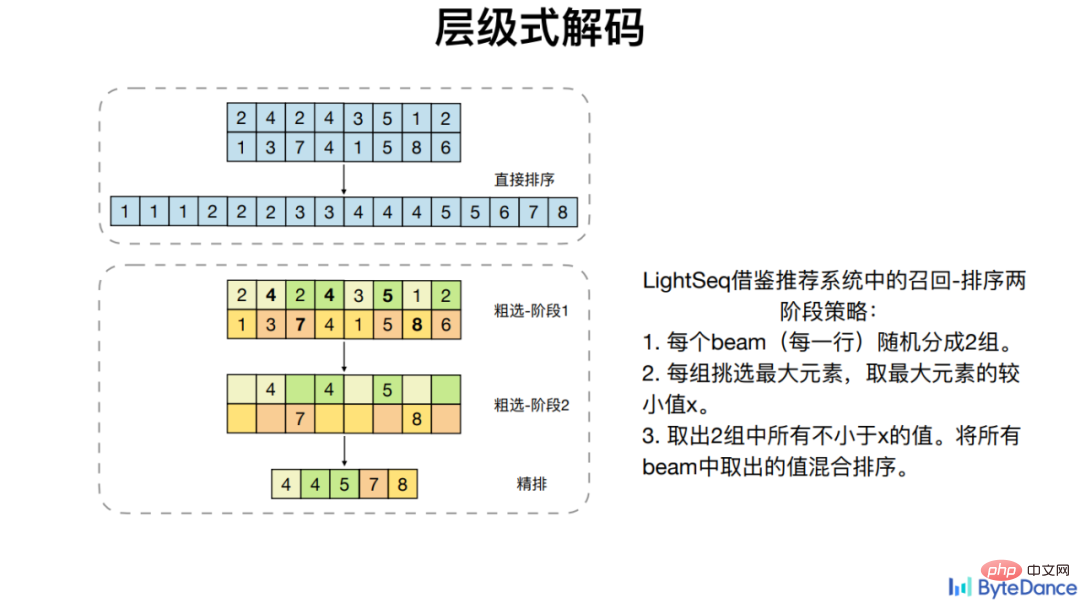
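The principle behind such fusion can be shown with a deliberately simple stand-in. The example below fuses a bias add with GeLU, which is an assumed illustration and not one of LightSeq's actual kernels; plain Python lists stand in for GPU buffers.

```python
import math

def gelu_scalar(v):
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))

def unfused_bias_gelu(x, bias):
    """Two passes, as with two separate kernels."""
    tmp = [v + b for v, b in zip(x, bias)]   # pass 1 writes a full intermediate buffer
    return [gelu_scalar(v) for v in tmp]     # pass 2 reads that buffer back

def fused_bias_gelu(x, bias):
    """One pass: the sum lives only in a local temporary, never in a buffer."""
    return [gelu_scalar(v + b) for v, b in zip(x, bias)]

x = [0.5, -1.25, 2.0, 0.0]
bias = [0.1, 0.2, -0.3, 0.4]
print(unfused_bias_gelu(x, bias) == fused_bias_gelu(x, bias))
```

The results are identical, but the fused version never materializes the intermediate buffer, which is where the savings in memory and bandwidth come from on a real GPU.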

Beyond fusing general operators, Volcano Translation also targets specific operators such as Beam Search, which cannot make good use of GPU parallelism, and optimizes their computational dependencies to achieve acceleration.

On four mainstream Transformer models, LightSeq's operator fusion achieved up to an 8x speedup over PyTorch.

Simplification is a relatively simple and intuitive way to accelerate: it prunes the branches of the pipeline at a fine-grained level. Specifically, where computational complexity is high, operator complexity is reduced while performance is preserved, which ultimately reduces the amount of computation.

Single-card inference of very large models generally involves model compression.

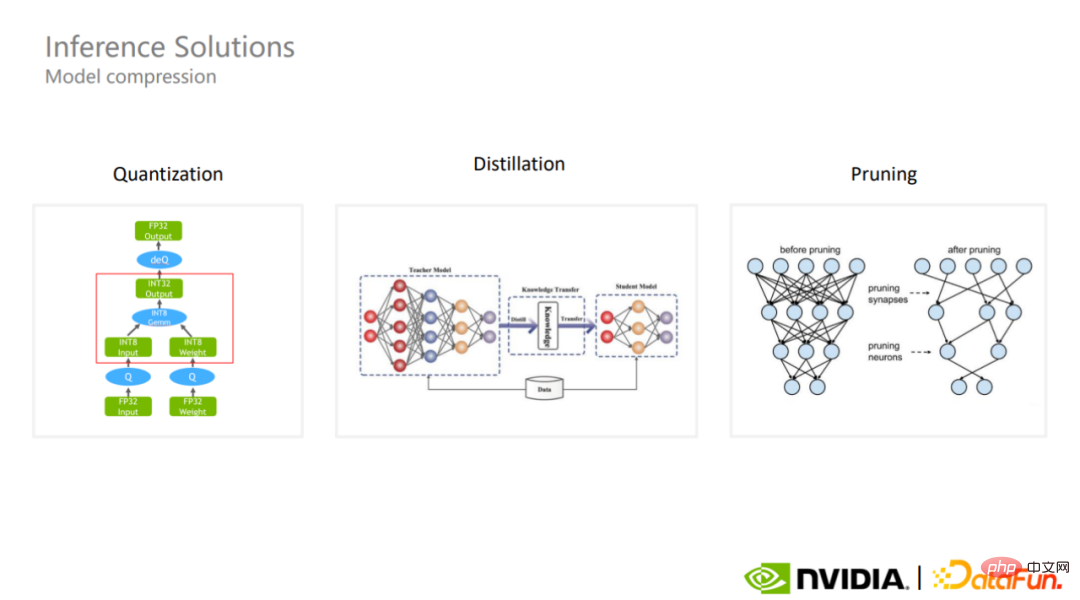

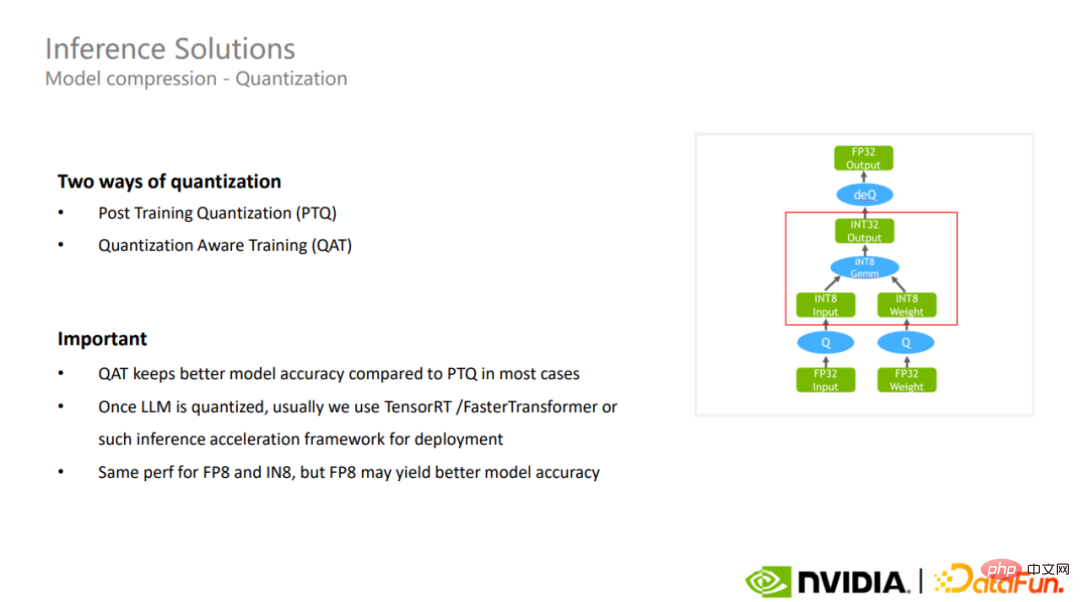

Common model compression schemes are quantization, distillation, and pruning. Quantization is one of the most widely used in the industry. Although quantized computation uses lower precision, it keeps the model's parameter count unchanged, and in some cases it may better preserve the model's overall accuracy.

There are currently two quantization methods: post-training quantization and quantization-aware training. The latter usually preserves model accuracy better than the former.

After quantization, inference acceleration frameworks such as TensorRT or FasterTransformer can be used to further speed up inference of very large models.

LightSeq uses true int8 quantization during training: the quantization operation is performed before the matrix multiplication, and the dequantization operation after it. This differs from earlier pseudo-quantization, which performs both quantization and dequantization before the matrix multiplication so that the model can adapt to the loss and fluctuation that quantization introduces. Pseudo-quantization brings no speedup in actual computation and may even increase latency or memory usage, whereas true int8 quantization delivers good acceleration in practice.

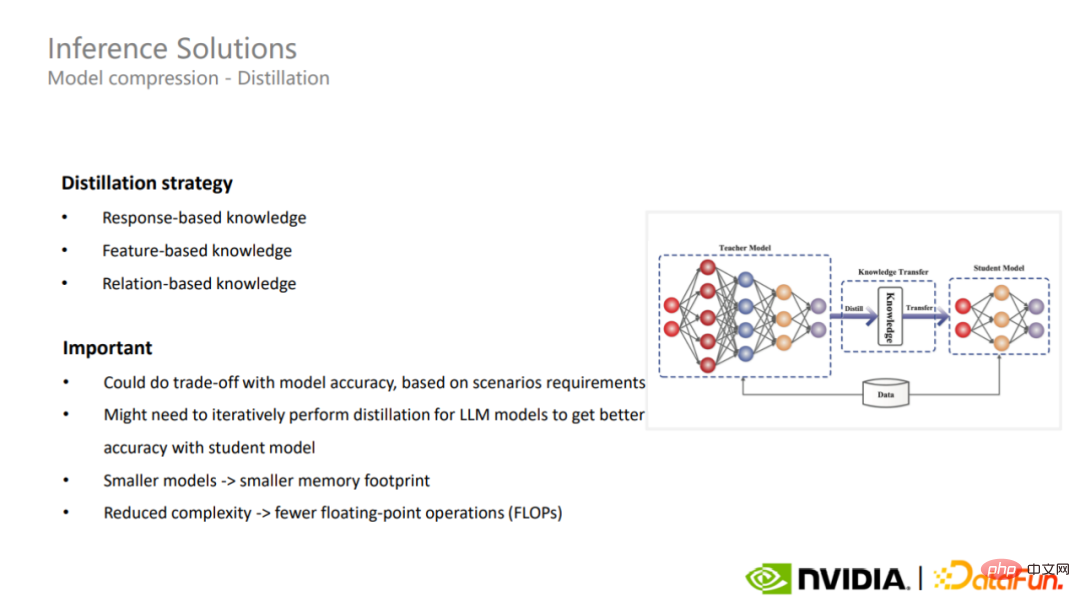
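The difference between the two orderings can be shown on a single dot product. This is a minimal sketch, not LightSeq's implementation: it assumes symmetric per-tensor scaling to the range [-127, 127], and the helper names are hypothetical.

```python
def scale_for(values):
    """Symmetric per-tensor scale so the largest magnitude maps to 127."""
    return max(abs(v) for v in values) / 127.0 or 1.0

def quantize(values, scale):
    """Round to the nearest integer step and clamp to the int8 range."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def true_int8_dot(x, w):
    sx, sw = scale_for(x), scale_for(w)
    qx, qw = quantize(x, sx), quantize(w, sw)      # quantize BEFORE the multiply
    acc = sum(a * b for a, b in zip(qx, qw))       # integer multiply-accumulate
    return acc * sx * sw                           # dequantize AFTER the multiply

def pseudo_quant_dot(x, w):
    sx, sw = scale_for(x), scale_for(w)
    fx = [q * sx for q in quantize(x, sx)]         # quantize and dequantize up front
    fw = [q * sw for q in quantize(w, sw)]
    return sum(a * b for a, b in zip(fx, fw))      # the multiply still runs in floating point

x = [0.5, -1.0, 0.25, 0.75]
w = [1.5, 0.5, -2.0, 1.0]
exact = sum(a * b for a, b in zip(x, w))
print(exact, true_int8_dot(x, w), pseudo_quant_dot(x, w))
```

Both variants see the same rounding error, which is why pseudo-quantization is useful for letting a model adapt to it. Only the true int8 path performs the multiply-accumulate on integers, which is where the hardware speedup comes from.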

The second model compression method is distillation. Distillation can use different strategies to compress very large models for different application scenarios. In some cases, distillation can give very large models better generalization capabilities.

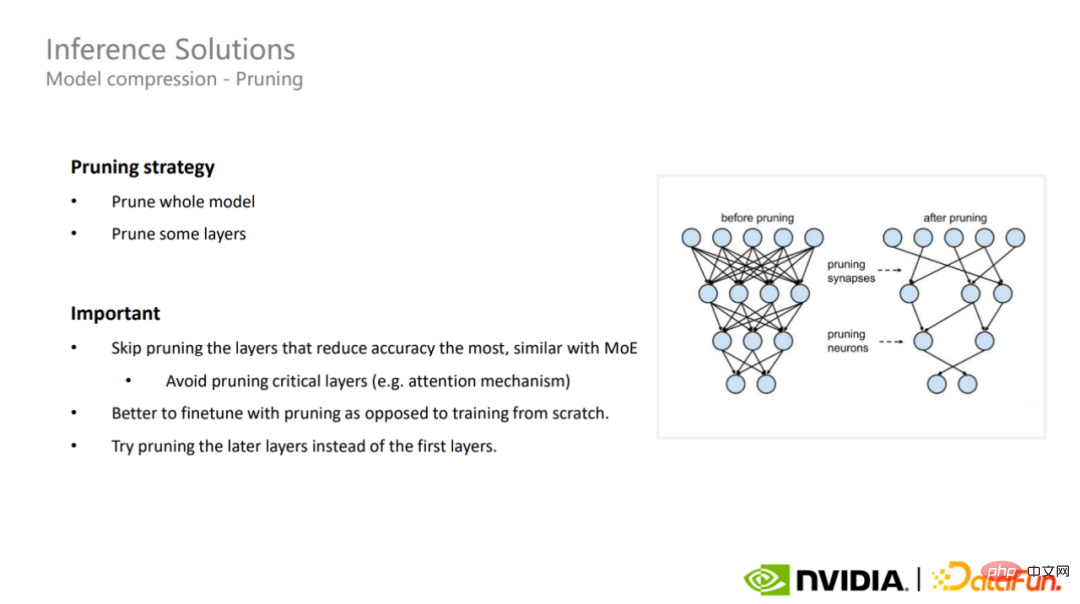

The last model compression scheme is pruning, which can be divided into full-model pruning and partial-layer pruning. For very large models it is important to identify the key layers and avoid pruning those parts that have the greatest impact on accuracy. This also applies to sparse MoE models.

The research and deployment of large models has become a trend. It is expected that 2022 will see more than 10,000 papers on large-scale language models and Transformers, a seven-fold increase over five years earlier, when the Transformer was first proposed. Large models also have a wide range of applications, such as image generation, recommendation systems, machine translation, and even life sciences and code generation.

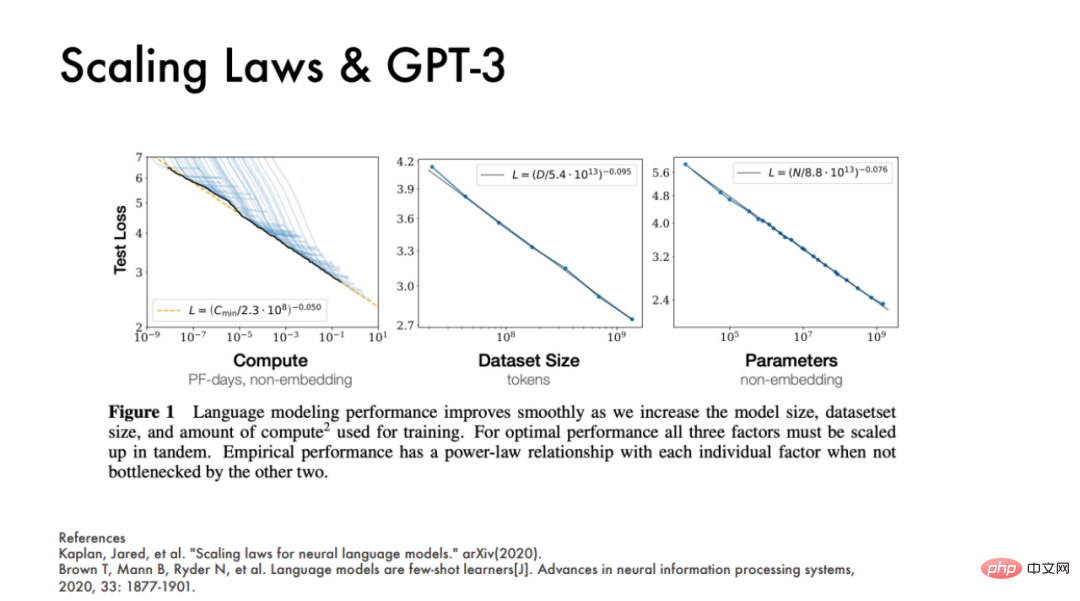

OpenAI also published two papers in 2020 showing that a model's performance is essentially tied to three main factors: computing power, dataset size, and parameter count. These three indicators predict model performance well.

But today the challenges of training large models are self-evident. Take GPT-3 as an example. With mixed-precision training, you need to store the parameters and gradients used in training as well as the FP32 master parameters. With the Adam optimizer, you also need to store the optimizer's two moment states. In total, about 2.8 TB of GPU memory is required, which far exceeds the capacity of a single card and takes more than 35 A100s to hold.
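The 2.8 TB figure can be reproduced with simple arithmetic. The byte counts per parameter below are the usual ones for mixed-precision training with Adam, and the 80 GB A100 variant is an assumption; activations are not counted, which is one reason the real requirement is "more than" 35 cards.

```python
# Bytes stored per model parameter (assumed standard mixed-precision + Adam layout).
BYTES_PER_PARAM = {
    "fp16 parameters": 2,
    "fp16 gradients": 2,
    "fp32 master parameters": 4,
    "Adam first moment (fp32)": 4,
    "Adam second moment (fp32)": 4,
}

def training_state_bytes(num_params):
    return num_params * sum(BYTES_PER_PARAM.values())

def gpus_needed(total_bytes, gpu_memory_bytes):
    """Ceiling division: the smallest number of cards that can hold the state."""
    return -(-total_bytes // gpu_memory_bytes)

GPT3_PARAMS = 175_000_000_000
A100_MEMORY = 80_000_000_000      # assumption: 80 GB A100, decimal gigabytes

total = training_state_bytes(GPT3_PARAMS)
print(total / 1e12, "TB")
print(gpus_needed(total, A100_MEMORY), "A100s for model state alone")
```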

NVIDIA's 2021 paper "Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM" derived an empirical formula indicating that a single iteration of the 175-billion-parameter GPT-3 model requires about 4.5 ExaFLOPs of computation. If the entire training run consists of 95,000 iterations, 430 ZettaFLOPs are required. In other words, a single A100 would need to train for 16,000 days, and that figure does not even account for computational efficiency.

Simply piling up these three indicators would therefore be a huge waste of resources in the era of large-model industrialization. DeepMind stated in its Chinchilla paper, published in 2022, that large models such as GPT-3, OPT, and PaLM are essentially under-trained. With the same computing budget, reducing the number of parameters and training for more steps yields a better final model. This is also the design philosophy WeChat follows in its WeLM large-scale language model.
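The GPT-3 compute estimate can be reproduced in a few lines. The formula below is the per-iteration estimate as we read it from the Megatron-LM paper; the batch size of 1536, the vocabulary size of 51,200, and the A100 peak of 312 TFLOPS are assumptions not stated in this article.

```python
def flops_per_iteration(batch, seq_len, layers, hidden, vocab):
    """Estimated FLOPs for one training iteration of a GPT-style model
    (forward, backward, and activation recomputation)."""
    return 96 * batch * seq_len * layers * hidden ** 2 * (
        1 + seq_len / (6 * hidden) + vocab / (16 * layers * hidden)
    )

# GPT-3 175B shape; batch and vocab are assumed values.
per_iteration = flops_per_iteration(
    batch=1536, seq_len=2048, layers=96, hidden=12288, vocab=51200
)
total_flops = per_iteration * 95_000            # iterations, as quoted in the text

A100_PEAK_FLOPS = 312e12                        # assumed peak throughput per second
days_on_one_a100 = total_flops / A100_PEAK_FLOPS / 86_400

print(f"{per_iteration:.2e} FLOPs per iteration")
print(f"{total_flops:.2e} FLOPs in total")
print(f"{days_on_one_a100:.0f} days on one A100 at peak throughput")
```

Because the estimate divides by peak throughput, it is a lower bound: at a realistic utilization of around half of peak, the single-card training time would roughly double.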

Companies across the industry are beginning to shift their focus from scale to efficiency when deploying large models.

For example, in terms of overall execution efficiency, almost all models optimized with Megatron see a 30% throughput improvement, and GPU utilization rises as model size increases. On the 175-billion-parameter GPT-3 model, GPU utilization reaches 52.8%, and on models with more than 530 billion parameters it reaches 57%.

In other words, according to Richard Sutton's "Winner's Law", efficiency will become the main theme of large-model industrialization.
