2016 was the year artificial intelligence rose to prominence.

Since AlphaGo defeated Go world champion Lee Sedol, the strongest Go players have no longer been human.

However, an article in today’s Financial Times has brought Go back into the public eye: humans have found a way to defeat AI!

After seven years in the wilderness, are human Go players about to make a comeback?

The FT reported that Kellin Pelrine, an amateur 4-dan Go player from the United States, decisively defeated KataGo, a top Go-playing AI.

Out of 15 games played in person, Pelrine won 14 without any help from a computer.

The plan to help human players reclaim the Go crown came from researchers at FAR AI, a California research firm. The team analyzed the weaknesses of AI Go players and targeted them to secure the final victory.

FAR AI CEO Adam Gleave said: "It is very easy for us to exploit this system."

According to Gleave, after playing more than 1 million games against KataGo, the AI developed by the team discovered a "bug" that human players could exploit.

Pelrine said the winning strategy they discovered is "not that difficult for humans" and that intermediate players can use it to defeat machines. He also used this method to defeat Leela Zero, another top Go system.

Kellin Pelrine

The FT wrote that, although it was achieved with the help of computers, this decisive victory opened the door for human Go players.

Seven years ago, artificial intelligence was miles ahead of humans in the most complex of games.

The AlphaGo system designed by DeepMind defeated Go world champion Lee Sedol 4-1 in 2016. Three years after that crushing defeat, Lee Sedol announced his retirement, calling AI "unbeatable."

Pelrine, however, is not overawed by the strength of artificial intelligence. In his view, the sheer number of combinations and variations in a Go game means that a computer cannot possibly evaluate every future move a player might make.
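To give a sense of the scale Pelrine is pointing to: each of the 361 points on a 19×19 board can be empty, black or white, so 3^361 is a simple upper bound on the number of board configurations (many of these are not legal positions, and the number of possible move sequences is far larger still). The short sketch below only computes that bound; it is an illustration added here, not a figure from the FT report.

```python
import math

BOARD_POINTS = 19 * 19  # 361 intersections on a standard Go board
STATES_PER_POINT = 3    # each point is empty, black or white

# Trivial upper bound on board configurations; it ignores Go's legality rules.
upper_bound = STATES_PER_POINT ** BOARD_POINTS
digits = len(str(upper_bound))
exponent = BOARD_POINTS * math.log10(STATES_PER_POINT)

print(f"3^361 has {digits} digits, roughly 10^{exponent:.1f}")
# 3^361 has 173 digits, roughly 10^172.2
```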

Put simply, Pelrine's strategy is to "feint to the east and strike in the west."

On the one hand, Pelrine places stones in various corners of the board to distract the AI; on the other, he singles out one of the AI's groups and gradually surrounds it.

Pelrine said that even when the encirclement was nearly complete, the AI did not notice the danger to that group. "But for a human, these weaknesses are easy to spot," he added.
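The encirclement works because of Go's capture rule: a connected group of same-coloured stones is removed once it has no liberties, that is, no empty points adjacent to it. As a minimal sketch, the code below counts a group's liberties with a flood fill on a small, hypothetical 5×5 board; it illustrates the capture rule only and makes no claim about how Pelrine's attack or KataGo work internally. The function name and board encoding are assumptions for illustration.

```python
from typing import List, Set, Tuple

Point = Tuple[int, int]
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def group_and_liberties(board: List[str], start: Point) -> Tuple[Set[Point], Set[Point]]:
    """Flood-fill the group containing `start`; return (its stones, its liberties).

    Cells: 'B' = black stone, 'W' = white stone, '.' = empty point.
    """
    rows, cols = len(board), len(board[0])
    colour = board[start[0]][start[1]]
    stones: Set[Point] = set()
    liberties: Set[Point] = set()
    stack = [start]
    while stack:
        r, c = stack.pop()
        if (r, c) in stones:
            continue
        stones.add((r, c))
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue  # off the edge of the board
            cell = board[nr][nc]
            if cell == ".":
                liberties.add((nr, nc))  # empty neighbour = liberty
            elif cell == colour:
                stack.append((nr, nc))  # same colour: part of the same group
    return stones, liberties


# Hypothetical 5x5 position: black has almost surrounded a two-stone white group.
board = [
    ".BB..",
    ".WWB.",
    ".BB..",
    ".....",
    ".....",
]
stones, libs = group_and_liberties(board, (1, 1))
print(len(stones), sorted(libs))  # 2 [(1, 0)]

# Black fills the last liberty at (1, 0): the white group has none left and is captured.
board[1] = "BWWB."
_, libs_after = group_and_liberties(board, (1, 1))
print(len(libs_after))  # 0
```

Here the white group is down to a single liberty, so one black stone on that point completes the siege.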

Stuart Russell, a professor of computer science at the University of California, Berkeley, said that the discovery of weaknesses in some of the most advanced Go-playing machines suggests that the deep learning systems underpinning today's most advanced AI are fundamentally flawed.

He said these systems can only "understand" the specific situations they have encountered, and cannot generalize strategies the way humans easily can.

Strictly speaking, though, the researchers beat AI with AI: they used one AI to help a human defeat another AI at Go.

The paper behind this work was first published in November 2022 and updated in January this year. Its authors come from MIT, UC Berkeley and other institutions.

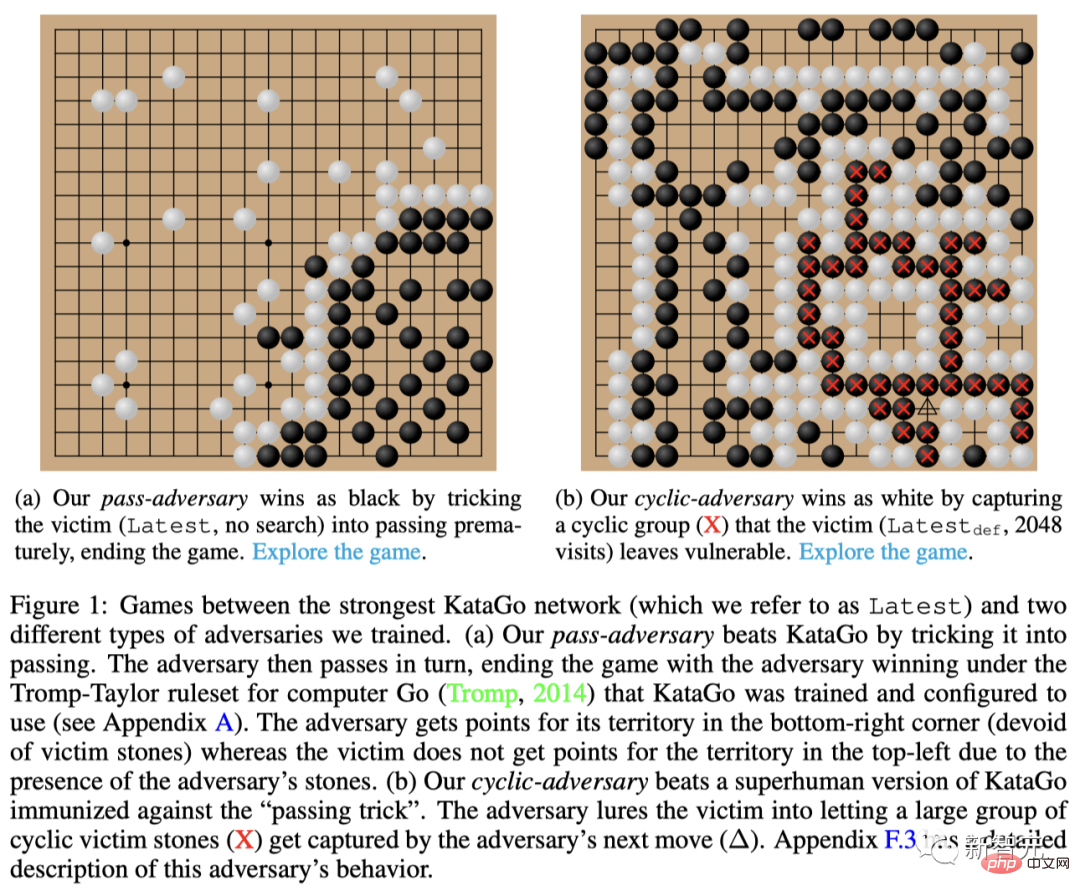

In the paper, the researchers trained an AI with an adversarial policy that defeated KataGo, the most advanced Go-playing AI system.

Project address: https://goattack.far.ai/adversarial-policy-katago#contents

Paper address: https://arxiv.org/abs/2211.00241

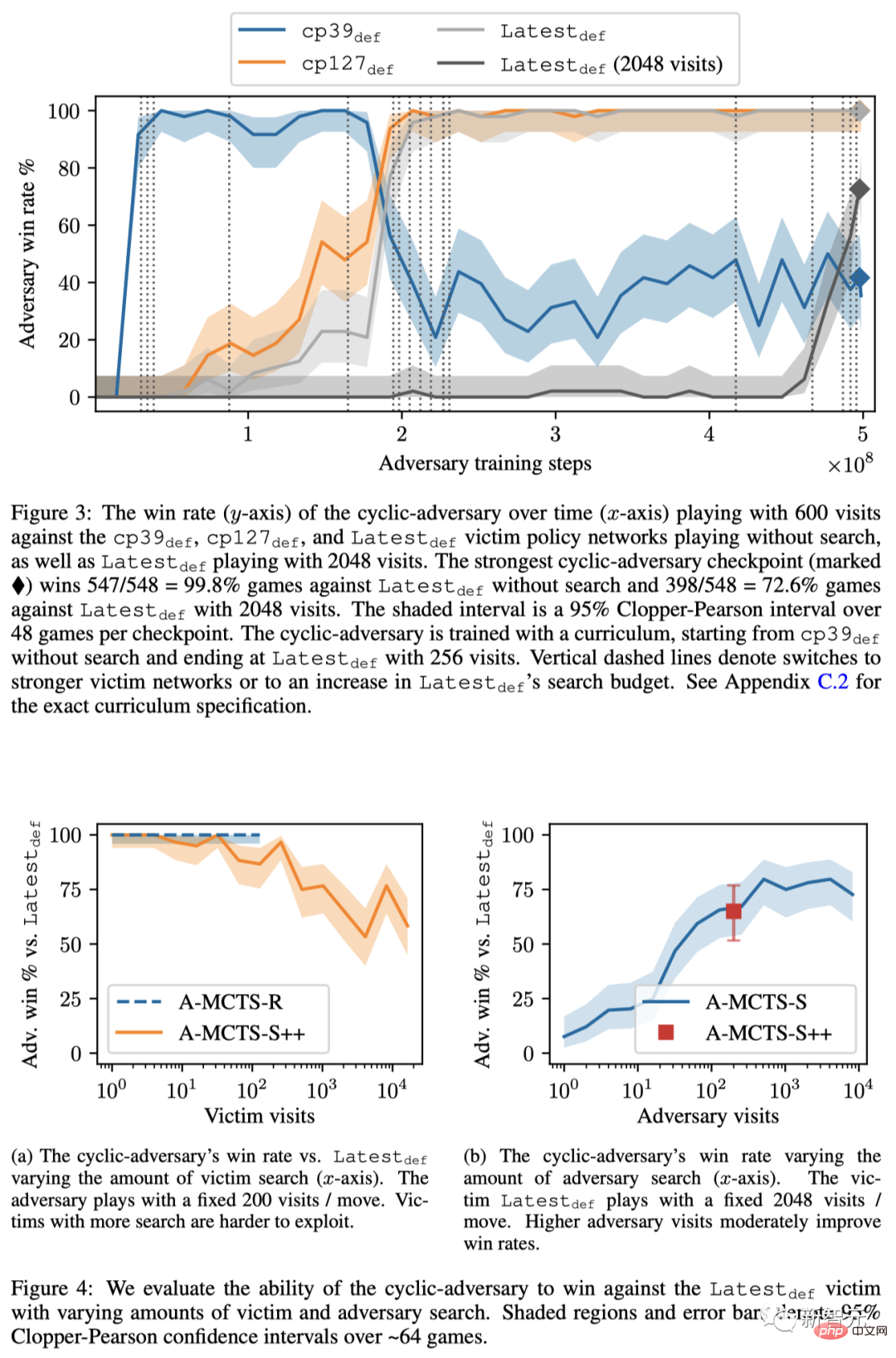

The results show that when KataGo plays without tree search, the attacker wins all 1,000 games (a 100% win rate), and when KataGo uses enough search, the attacker's win rate still exceeds 97%.

The researchers stressed that although the adversarial AI can defeat KataGo, it is in turn beaten by human amateurs, who themselves cannot beat KataGo.

In other words, this AI can win not because it plays Go better, but because it can induce KataGo to make serious mistakes.
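These three results form a cycle: the adversary beats KataGo, KataGo beats amateurs, and amateurs beat the adversary. The small sketch below treats each result as a plain "beats" relation (ignoring win rates, a simplifying assumption) and checks by brute force whether any single strength ranking is consistent with all of them; with a cycle, none is. The function name is hypothetical.

```python
from itertools import permutations
from typing import Set, Tuple

# Head-to-head outcomes described in the article, as (winner, loser) pairs.
beats = {
    ("adversary", "KataGo"),
    ("KataGo", "amateur"),
    ("amateur", "adversary"),
}
players = {p for pair in beats for p in pair}


def consistent_ranking_exists(beats: Set[Tuple[str, str]], players: Set[str]) -> bool:
    """Return True if some linear order places every winner above the player it beat."""
    for order in permutations(sorted(players)):
        rank = {p: i for i, p in enumerate(order)}  # lower index = stronger
        if all(rank[w] < rank[l] for w, l in beats):
            return True
    return False


print(consistent_ranking_exists(beats, players))  # False: the results form a cycle
```

This is why "the adversary beats KataGo" does not mean the adversary plays better Go overall.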

Previously, Go-playing AIs such as KataGo and AlphaZero were all trained through self-play.

In this study, by contrast, the authors use what they call "victim-play": the attacker (adversary) learns its own winning strategy by playing games against a fixed victim, rather than by imitating its opponent's moves.
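The idea behind victim-play can be sketched on a toy game. The code below uses rock-paper-scissors rather than Go, and a hypothetical frozen victim that over-plays rock; an epsilon-greedy adversary learns only from the rewards of its own moves against that victim. In self-play the opponent would be a copy of the learner that changes as it learns; here the victim never changes. The game, the victim's bias, the learner and all names are assumptions for illustration, not the paper's training setup.

```python
import random

MOVES = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}  # key beats value


def play(a: str, b: str) -> int:
    """Return +1 if move `a` beats move `b`, -1 if it loses, 0 for a draw."""
    if a == b:
        return 0
    return 1 if BEATS[a] == b else -1


def fixed_victim(rng: random.Random) -> str:
    """Hypothetical frozen victim: plays rock 60% of the time and never updates."""
    return rng.choices(MOVES, weights=(0.6, 0.2, 0.2))[0]


def victim_play(games: int, epsilon: float = 0.1, seed: int = 0) -> dict:
    """Train an epsilon-greedy adversary purely against the frozen victim.

    The adversary learns from the rewards of its own moves; it never copies the
    victim's moves. Returns the adversary's estimated average reward per move.
    """
    rng = random.Random(seed)
    totals = {m: 0.0 for m in MOVES}
    counts = {m: 0 for m in MOVES}
    for _ in range(games):
        if rng.random() < epsilon or min(counts.values()) == 0:
            move = rng.choice(MOVES)  # explore
        else:
            move = max(MOVES, key=lambda m: totals[m] / counts[m])  # exploit current best
        reward = play(move, fixed_victim(rng))
        totals[move] += reward
        counts[move] += 1
    return {m: totals[m] / counts[m] for m in MOVES if counts[m]}


values = victim_play(5000)
print(max(values, key=values.get), values)
```

Against this victim, paper earns 0.6 - 0.2 = +0.4 per game in expectation, so the learned values should single it out. That "strategy" is only good against this particular victim, which mirrors how the adversary exploits KataGo rather than playing better Go.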

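Because the victim is a different, fixed player, the adversary's Monte Carlo tree search (MCTS) cannot simply assume that its opponent plays the way it does. To handle this, the researchers introduced two adversarial MCTS (A-MCTS) variants, which differ in how they simulate the victim's moves inside the adversary's search tree.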

Specifically, A-MCTS-S simulates the victim's turns by sampling moves from the victim's policy network, while A-MCTS-R replaces that sampling step with a new (recursive) MCTS search run at each victim node.

Although this is still not a perfect model of the victim, it tends to be more accurate than A-MCTS-S, which incorrectly assumes that the victim does not search.
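The difference between the two variants can be illustrated on a toy game. The sketch below is not MCTS: it is a small exhaustive lookahead on a hypothetical subtraction game (players take 1 to 3 stones, whoever takes the last stone wins), and it only mirrors how the two variants simulate the victim's turns. `victim_policy` is a hypothetical uniform stand-in for the victim's policy network, and the "sample" model uses the exact expectation over that policy instead of drawing random samples; the "recursive" model asks the victim's own search (`victim_search`) which move it would play. All names are illustrative assumptions.

```python
from fractions import Fraction
from typing import Dict, List

# Hypothetical toy game: players alternately take 1-3 stones; whoever takes the last stone wins.
MAX_TAKE = 3


def legal(n: int) -> List[int]:
    return [t for t in range(1, MAX_TAKE + 1) if t <= n]


def victim_policy(n: int) -> Dict[int, Fraction]:
    """Hypothetical stand-in for the victim's policy network: uniform over legal moves."""
    moves = legal(n)
    return {t: Fraction(1, len(moves)) for t in moves}


def negamax(n: int, depth: int) -> int:
    """The victim's own search: value for the player to move (+1 win, -1 loss, 0 unknown)."""
    if n == 0:
        return -1  # the opponent just took the last stone
    if depth == 0:
        return 0
    return max(-negamax(n - t, depth - 1) for t in legal(n))


def victim_search(n: int, depth: int = 10) -> int:
    """Move the victim picks after searching (ties go to the smallest move)."""
    return max(legal(n), key=lambda t: -negamax(n - t, depth - 1))


def adversary_value(n: int, depth: int, model: str):
    """Value for the adversary when it is the adversary's turn with n stones left."""
    if n == 0:
        return -1  # the victim took the last stone
    if depth == 0:
        return 0
    return max(victim_node_value(n - t, depth - 1, model) for t in legal(n))


def victim_node_value(n: int, depth: int, model: str):
    """Value for the adversary at a victim node; `model` sets how the victim is simulated."""
    if n == 0:
        return 1  # the adversary took the last stone
    if depth == 0:
        return 0
    if model == "sample":
        # A-MCTS-S-like: the victim plays straight from its policy, with no search
        # (exact expectation over the policy rather than random samples).
        return sum(p * adversary_value(n - t, depth - 1, model)
                   for t, p in victim_policy(n).items())
    if model == "recursive":
        # A-MCTS-R-like: predict the victim's move by running the victim's own search.
        t = victim_search(n)
        return adversary_value(n - t, depth - 1, model)
    raise ValueError(f"unknown victim model: {model}")


for model in ("sample", "recursive"):
    print(model, victim_node_value(5, 10, model))
# sample 7/9
# recursive -1
```

With five stones and the victim to move, the sample-style model rates the position at 7/9 for the adversary because a policy-only victim often blunders, while the recursive model's victim finds the winning reply (take one stone, leaving four) and the position is rated a certain loss. The gap shows how assuming the victim does not search can make the adversary over-optimistic.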

For the evaluation results and further details, please refer to the original paper.
