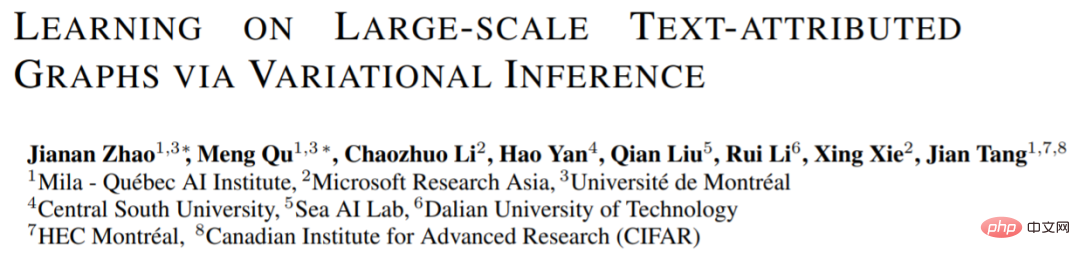

Figure 1: (a) Text graph; (b) graph neural network; (c) language model

A graph is a universal data structure that models the structural relationships between nodes. In many real-world graphs, the nodes carry rich text; such a graph is called a text-attributed graph (text graph for short) [2]. For example, a paper citation network contains the text of each paper and the citation links between papers, and a social network contains each user's textual self-description and the direct interactions between users. Representation learning models on text graphs can be applied to tasks such as node classification and link prediction, and have broad practical value.
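
As a rough illustration of this data structure, the Python sketch below stores a text graph as per-node text, an adjacency map, and a label map that covers only some nodes. The class `TextAttributedGraph`, its methods, and the example papers and labels are hypothetical; citations are stored as undirected edges, which is a common simplification for node classification rather than something the article specifies.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TextAttributedGraph:
    """Minimal text graph: each node carries raw text, edges are undirected,
    and only some nodes have a class label (semi-supervised node classification)."""

    texts: dict[str, str] = field(default_factory=dict)           # node id -> raw text
    adjacency: dict[str, set[str]] = field(default_factory=dict)  # node id -> neighbor ids
    labels: dict[str, str] = field(default_factory=dict)          # node id -> class label

    def add_node(self, node: str, text: str, label: Optional[str] = None) -> None:
        self.texts[node] = text
        self.adjacency.setdefault(node, set())
        if label is not None:
            self.labels[node] = label

    def add_edge(self, u: str, v: str) -> None:
        # Store both directions so the structure behaves as an undirected graph.
        self.adjacency.setdefault(u, set()).add(v)
        self.adjacency.setdefault(v, set()).add(u)

    def neighbors(self, node: str) -> set[str]:
        return self.adjacency.get(node, set())


# Hypothetical citation-network example: paper text plus citation links.
g = TextAttributedGraph()
g.add_node("p1", "Graph neural networks for node classification", label="cs.LG")
g.add_node("p2", "Pretrained Transformer language models", label="cs.CL")
g.add_node("p3", "Jointly learning from text and graph structure")  # unlabeled
g.add_edge("p3", "p1")  # p3 cites p1
g.add_edge("p3", "p2")  # p3 cites p2
print(sorted(g.neighbors("p3")))  # ['p1', 'p2']
```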

A text graph carries two kinds of information: the text of each node and the graph structure connecting the nodes. Traditional approaches to text graphs accordingly take one of two perspectives: text modeling or graph modeling. Text modeling methods (Figure 1.c) usually use a Transformer-based language model (LM) to obtain a representation of each node's text on its own and predict the target task from it. Graph modeling methods (Figure 1.b) usually use a graph neural network (GNN), which models the interactions between node features through a message-passing mechanism and predicts the target task.
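
The toy sketch below contrasts the two perspectives, assuming simple stand-ins for both models: a bag-of-words `encode_text` replaces the Transformer LM, and an unweighted `mean_aggregate` replaces one GNN message-passing layer. Both function names and the three-paper graph are hypothetical, and neither function is how a real LM or GNN is implemented; the point is only that the text view of `p3` sees its own words, while the graph view also mixes in words from the papers it is linked to.

```python
from __future__ import annotations

from collections import Counter


def encode_text(text: str) -> Counter:
    """Text view (stand-in for a Transformer LM): a bag-of-words vector built
    from ONE node's own text. It never looks at the graph."""
    return Counter(text.lower().split())


def mean_aggregate(
    features: dict[str, Counter], adjacency: dict[str, set[str]]
) -> dict[str, dict[str, float]]:
    """Graph view (stand-in for one GNN layer): each node averages the vectors of
    itself and its neighbors. A real GNN layer would add learned weights and a
    nonlinearity on top of this aggregation."""
    out: dict[str, dict[str, float]] = {}
    for node, nbrs in adjacency.items():
        group = [node, *sorted(nbrs)]
        summed: Counter = Counter()
        for member in group:
            summed.update(features[member])
        out[node] = {word: count / len(group) for word, count in summed.items()}
    return out


# Hypothetical three-paper citation graph: p3 is linked to both p1 and p2.
texts = {"p1": "graph neural networks", "p2": "language models", "p3": "text graphs"}
adjacency = {"p1": {"p3"}, "p2": {"p3"}, "p3": {"p1", "p2"}}

text_view = {node: encode_text(t) for node, t in texts.items()}
graph_view = mean_aggregate(text_view, adjacency)
print(sorted(text_view["p3"]))                  # ['graphs', 'text']
print(round(graph_view["p3"]["language"], 3))   # 0.333
```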

However, each model captures only one side of a text graph: a conventional language model cannot directly take the graph structure into account, and a graph neural network cannot directly model the raw text. To model text and graph structure at the same time, researchers have tried to combine language models and graph neural networks and update the parameters of both models jointly. However, existing work [2, 3] cannot model the text of a large number of neighbors at the same time, scales poorly, and cannot be applied to large text graphs.

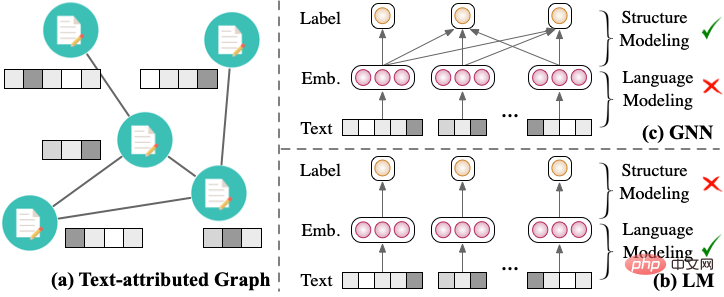

GLEM framework

To integrate graph neural networks and language models more effectively, the paper proposes the Graph and Language Learning by Expectation Maximization (GLEM) framework. GLEM is based on the variational expectation-maximization (variational EM) algorithm and trains the graph neural network and the language model alternately rather than jointly, which gives it good scalability.

Figure 2: GLEM framework

Specifically, taking node classification as an example: in the E-step, GLEM trains the language model on the ground-truth labels together with pseudo-labels predicted by the graph neural network; in the M-step, GLEM trains the graph neural network on the ground-truth labels together with pseudo-labels predicted by the language model. In this way, GLEM effectively exploits both the local textual information of each node and the global structural interactions between nodes. Both the graph neural network (GLEM-GNN) and the language model (GLEM-LM) trained with GLEM can be used to predict node labels.
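
The alternating E/M schedule can be sketched in plain Python under heavy simplifying assumptions: a nearest-centroid classifier over a node's own words stands in for the language model, the same classifier over words summed from the node and its neighbors stands in for the GNN, and pseudo-labels are simply added to the training set at full weight. All names, data, and both classifiers are hypothetical illustrations, not GLEM's actual models or its variational objective.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical toy text graph: node -> text, undirected edges, gold labels on a subset.
TEXTS = {
    "a": "graph convolution network",
    "b": "graph attention layers",
    "c": "language model pretraining",
    "d": "masked language tokens",
    "e": "language layers",      # unlabeled; its text alone looks like "nlp"
    "f": "pretraining tokens",   # unlabeled
}
EDGES = [("a", "b"), ("a", "e"), ("b", "e"), ("c", "d"), ("c", "f"), ("d", "f")]
GOLD = {"a": "graph", "b": "graph", "c": "nlp", "d": "nlp"}

ADJ: dict[str, set[str]] = {node: set() for node in TEXTS}
for u, v in EDGES:
    ADJ[u].add(v)
    ADJ[v].add(u)


def text_features(node: str) -> Counter:
    """'LM' input (stand-in): bag-of-words of the node's own text only."""
    return Counter(TEXTS[node].split())


def graph_features(node: str) -> Counter:
    """'GNN' input (stand-in): own words plus neighbors' words (one sum-aggregation step)."""
    feats = text_features(node)
    for nbr in sorted(ADJ[node]):
        feats.update(text_features(nbr))
    return feats


def fit(featurize, labels: dict[str, str]) -> dict[str, Counter]:
    """Nearest-centroid 'training': sum the feature vectors of each class."""
    centroids: dict[str, Counter] = {}
    for node, label in labels.items():
        centroids.setdefault(label, Counter()).update(featurize(node))
    return centroids


def predict(centroids: dict[str, Counter], feats: Counter) -> str:
    """Return the class whose size-normalized centroid overlaps most with feats."""
    def score(label: str) -> float:
        centroid = centroids[label]
        return sum(feats[w] * centroid[w] for w in feats) / sum(centroid.values())
    return max(sorted(centroids), key=score)  # ties go to the alphabetically first class


def glem_sketch(rounds: int) -> tuple[dict[str, str], dict[str, str]]:
    unlabeled = [node for node in TEXTS if node not in GOLD]
    gnn_pseudo: dict[str, str] = {}
    lm_pseudo: dict[str, str] = {}
    for _ in range(rounds):
        # E-step: train the "LM" on gold labels plus the GNN's pseudo-labels.
        lm = fit(text_features, {**gnn_pseudo, **GOLD})
        lm_pseudo = {n: predict(lm, text_features(n)) for n in unlabeled}
        # M-step: train the "GNN" on gold labels plus the LM's pseudo-labels.
        gnn = fit(graph_features, {**lm_pseudo, **GOLD})
        gnn_pseudo = {n: predict(gnn, graph_features(n)) for n in unlabeled}
    return lm_pseudo, gnn_pseudo


lm_1, gnn_1 = glem_sketch(rounds=1)
lm_2, gnn_2 = glem_sketch(rounds=2)
print(lm_1, gnn_1)  # {'e': 'nlp', 'f': 'nlp'} {'e': 'graph', 'f': 'nlp'}
print(lm_2, gnn_2)  # {'e': 'graph', 'f': 'nlp'} {'e': 'graph', 'f': 'nlp'}
```

In this toy graph, node `e` ("language layers") looks like an `nlp` paper from its text alone, so after one round the text-only model labels it `nlp`, while the neighbor-aware model labels it `graph` in line with its neighbors `a` and `b`. In the second E-step the text-only model is retrained on the GNN's pseudo-label for `e` and switches to `graph`, which illustrates the kind of knowledge transfer between the two models that the alternating scheme is meant to enable.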

Experiment

The experimental part of the paper mainly discusses the GLEM framework from the following aspects:

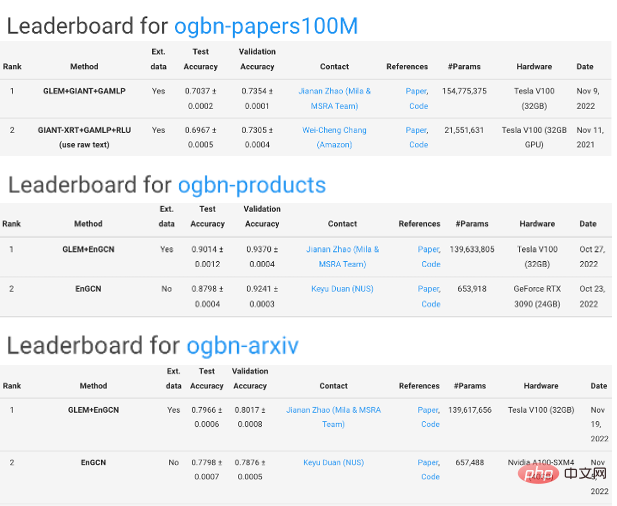

Figure 3: The GLEM framework took first place on the ogbn-arxiv, ogbn-products, and ogbn-papers100M datasets
