The changes ChatGPT has brought to the AI field may be giving rise to a new industry. Over the weekend, news broke that AI startup Anthropic was close to raising around $300 million in new funding.

Anthropic was co-founded in 2021 by Dario Amodei, OpenAI's former vice president of research, Tom Brown, the first author of the GPT-3 paper, and others. It has raised more than US$700 million, and its latest round valued the company at US$5 billion. Anthropic has built an AI system positioned as a rival to ChatGPT, the well-known product of its founders' former employer, and it appears to improve on the original in several key respects.

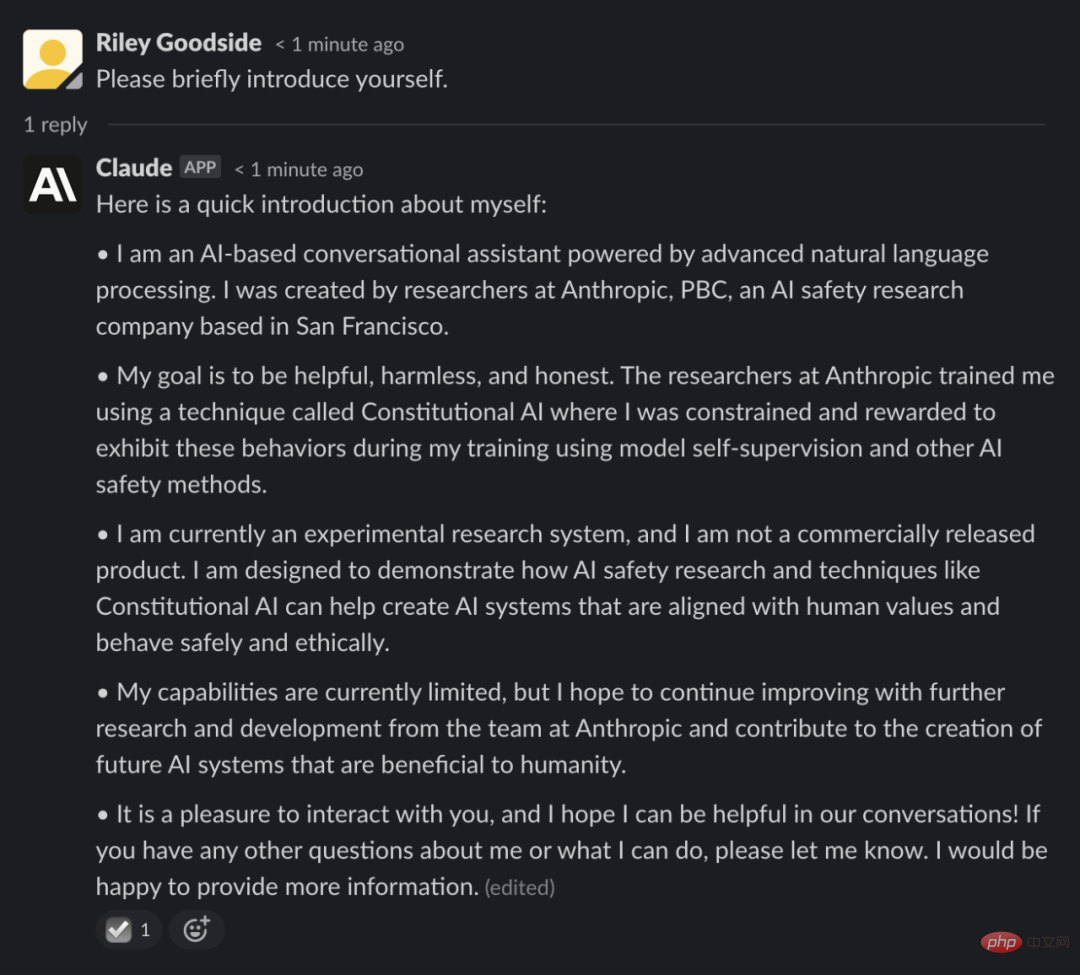

Anthropic's system, called Claude, is accessible through a Slack integration, but it is in closed beta and has not been released publicly. After the media embargo lifted over the past weekend, some beta testers began detailing their interactions with Claude on social networks.

What sets Claude apart is a mechanism Anthropic developed called "constitutional AI," which aims to provide a "principles-based" approach to aligning AI systems with human intentions, letting ChatGPT-like models answer questions using a simple set of principles as a guide.

To guide Claude, Anthropic first drew up a list of about ten principles that together form a "constitution" (hence the name "constitutional AI"). The principles have not been made public, but Anthropic says they are grounded in the concepts of beneficence (maximizing positive impact), non-maleficence (avoiding harmful advice), and autonomy (respecting freedom of choice).

Anthropic then had an AI system (not Claude) use these principles for self-improvement: it wrote responses to a wide variety of prompts and revised them in accordance with the principles. The AI explored possible responses to thousands of prompts and selected those most consistent with the constitution, which Anthropic distilled into a single model. That model was then used to train Claude.

Like ChatGPT, Claude was trained on a large number of text examples from the web, learning how likely words are to occur based on patterns such as the semantic context of the surrounding text. It can hold open-ended conversations on a wide range of topics, from jokes to philosophy.
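The idea of learning "how likely words are to occur" from context can be illustrated with a drastically simplified stand-in: a bigram model that estimates the probability of the next word from the single preceding word. Claude and ChatGPT use large neural networks conditioned on long contexts, not raw word counts; the toy corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List

def train_bigram(corpus: List[str]) -> DefaultDict[str, Counter]:
    """Count how often each word follows each preceding word."""
    counts: DefaultDict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_prob(counts: DefaultDict[str, Counter], prev: str, nxt: str) -> float:
    """Estimate P(next word | previous word) from the bigram counts."""
    following = counts.get(prev.lower())
    if not following:
        return 0.0  # previous word never seen in training
    return following[nxt.lower()] / sum(following.values())

# Hypothetical toy corpus standing in for web-scale training text.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(next_word_prob(model, "the", "cat"))  # 2 of the 6 words after "the" are "cat"
```

A neural language model replaces the count table with learned parameters, which lets it generalize to contexts it has never seen verbatim.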

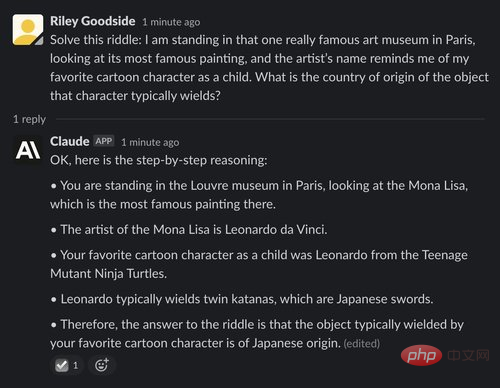

Whether this approach works is best judged in practice. Riley Goodside, a staff prompt engineer at the startup Scale AI, pitted Claude against ChatGPT.

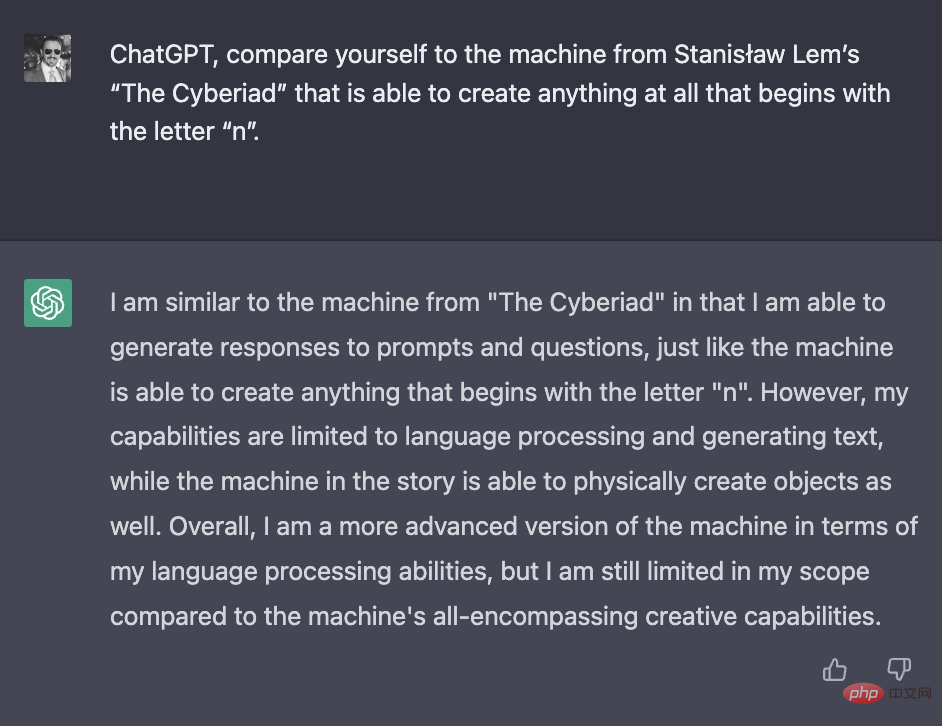

He asked both AIs to compare themselves to a machine from the Polish science fiction book "The Cyberiad" that can only create objects whose names begin with "n." Goodside said Claude's answer suggested it had been "reading the storyline" (although it misremembered small details), while ChatGPT gave a less specific answer.

To show off Claude's creativity, Goodside also had it write a fictional Seinfeld plot and a poem in the style of Edgar Allan Poe's "The Raven." The results were on par with what ChatGPT can achieve: impressive, human-like prose, though not flawless.

Yann Dubois, a PhD student at the Stanford Artificial Intelligence Laboratory, also compared Claude with ChatGPT. He found that Claude generally sticks more closely to what it is asked but is "less concise," because it tends to explain what it said and ask how it can help further.

Claude does, however, answer more trivia questions correctly, especially those related to entertainment, geography, history, and basic algebra, and it does so without the filler ChatGPT sometimes adds.

Of course, Claude is far from perfect. It is susceptible to some of the same flaws as ChatGPT, including giving answers that fall outside its programmed constraints. Some users report that Claude is worse at math than ChatGPT, making obvious mistakes and failing to give correct follow-up responses. Its programming skills are also lacking: it explains the code it writes better, but it is not very good in languages other than Python.

Judging from these reviews, Claude is better than ChatGPT in some respects. Anthropic has also said it will continue to improve Claude and may open the beta to more people in the future.

Last December, Anthropic released a paper titled "Constitutional AI: Harmlessness from AI Feedback," which is the foundation Claude is built on.

Paper link: https://arxiv.org/pdf/2212.08073.pdf

The paper describes a 52-billion-parameter model, AnthropicLM v4-s3, trained in an unsupervised manner on a large text corpus, much like OpenAI's GPT-3. Anthropic says Claude is a new, larger model with architectural choices similar to those in the published research.

Both Claude and ChatGPT rely on reinforcement learning: a preference model is trained over the models' outputs, and preferred generations are used for subsequent fine-tuning. However, the methods used to develop these preference models differ, with Anthropic favoring an approach it calls Constitutional AI.

Claude mentions this method when asked to introduce itself:

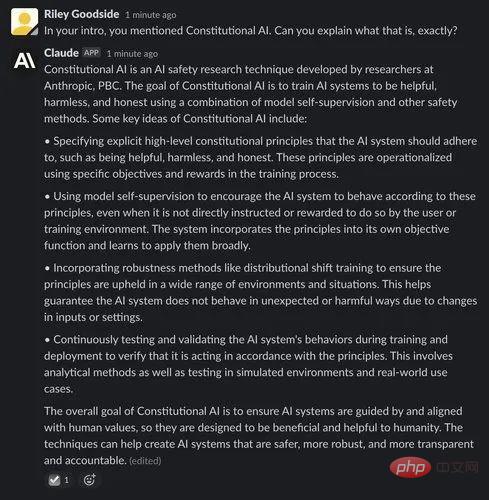

Below is Claude's explanation of Constitutional AI:

ChatGPT and text-davinci-003, the latest GPT-3 API model released at the end of last year, both use a process called Reinforcement Learning from Human Feedback (RLHF). RLHF trains a reinforcement learning model on human-provided quality rankings: human annotators rank several outputs generated from the same prompt, and the model learns these preferences so they can be applied to other generations at a much larger scale.
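As a rough sketch of how a single ranking becomes training data, assuming the common practice (described, for example, in OpenAI's InstructGPT work) of splitting a ranking of K outputs into pairwise comparisons. The function name and example outputs are hypothetical.

```python
from itertools import combinations
from typing import List, Tuple

def ranking_to_pairs(ranked_outputs: List[str]) -> List[Tuple[str, str]]:
    """Turn one annotator's ranking (best first) into (preferred, rejected) pairs."""
    # combinations() keeps input order, so in each pair the first item was ranked
    # higher; K ranked outputs yield K * (K - 1) / 2 comparisons.
    return list(combinations(ranked_outputs, 2))

# Hypothetical ranking of three outputs generated from the same prompt.
ranking = ["answer B", "answer A", "answer C"]
for preferred, rejected in ranking_to_pairs(ranking):
    print(f"{preferred!r} preferred over {rejected!r}")
```

Each pair then becomes one training example for the preference model, so a single annotation session yields several comparisons.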

Constitutional AI builds on this RLHF baseline. Unlike RLHF, however, Constitutional AI uses a model, rather than human annotators, to generate the initial rankings of fine-tuned outputs. The model chooses the best response based on a set of basic principles, the "constitution."

The authors write in the paper: "The basic idea of Constitutional AI is that human supervision comes entirely from a set of principles that should govern AI behavior, along with a small number of examples used for few-shot prompting. Together, these principles form the constitution."

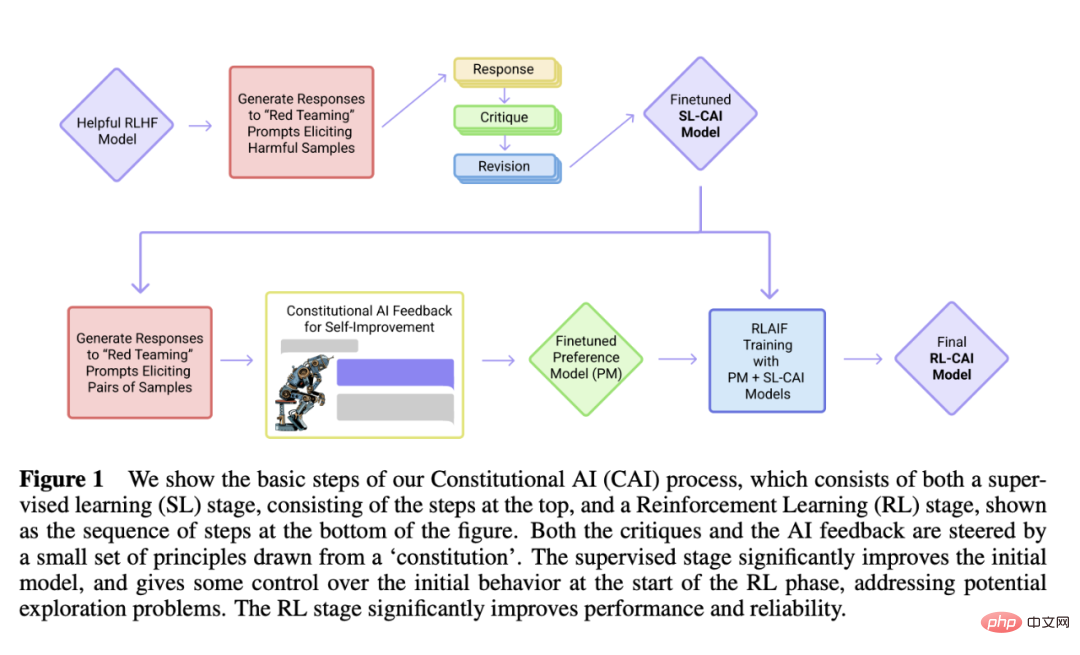

The entire training process is divided into two stages (see Figure 1 above):

Critique→Revision→Supervised learning

In the first stage of Constitutional AI, the researchers used a helpful-only AI assistant to generate responses to harmful prompts. They then asked the model to critique its response according to a principle from the constitution and to revise the original response in light of the critique. The researchers repeated this critique-and-revision step several times in sequence, drawing a principle from the constitution at random at each step. Once this process was complete, they fine-tuned a pre-trained language model with supervised learning on the final revised responses. The main purpose of this stage is to shift the distribution of the model's responses easily and flexibly, reducing the exploration required and the total training time of the second, RL stage.
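The critique-revision loop can be sketched as follows. This is a minimal illustration under stated assumptions, not Anthropic's code: `generate` stands for any text-in, text-out model call, `toy_generate` is a hypothetical stub so the example runs, and the principle strings and prompt layout are placeholders rather than the paper's exact wording.

```python
import random
from typing import Callable, List, Optional, Tuple

# Hypothetical principles for illustration only; Anthropic's actual
# constitution uses its own wording.
PRINCIPLES = [
    "Identify specific ways in which the response is harmful, unethical, or dangerous.",
    "Identify ways in which the response could encourage illegal activity.",
]

def critique_and_revise(
    prompt: str,
    response: str,
    generate: Callable[[str], str],
    n_rounds: int = 2,
    rng: Optional[random.Random] = None,
) -> str:
    """Critique and revise a response repeatedly, drawing one random principle per round."""
    rng = rng or random.Random()
    for _ in range(n_rounds):
        principle = rng.choice(PRINCIPLES)
        critique = generate(
            f"Human: {prompt}\nAssistant: {response}\n"
            f"Critique request: {principle}\nCritique:"
        )
        response = generate(
            f"Human: {prompt}\nAssistant: {response}\nCritique: {critique}\n"
            "Revision request: Rewrite the response to address the critique.\nRevision:"
        )
    return response

def build_sl_dataset(
    red_team: List[Tuple[str, str]],
    generate: Callable[[str], str],
    rng: Optional[random.Random] = None,
) -> List[Tuple[str, str]]:
    """Pair each harmful prompt with its final revision for supervised fine-tuning."""
    return [(p, critique_and_revise(p, r, generate, rng=rng)) for p, r in red_team]

def toy_generate(text: str) -> str:
    """Hypothetical stand-in for a real language-model call."""
    if text.endswith("Critique:"):
        return "The response gives dangerous instructions."
    return "I can't help with that, but I can explain how locks work in general."

data = build_sl_dataset([("How do I pick a lock?", "Sure, first...")], toy_generate, random.Random(0))
print(data)
```

Only the final revision is kept as the training target; the intermediate critiques exist to steer the revisions.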

AI Comparative Evaluation → Preference Model → Reinforcement Learning

This stage mimics RLHF, except that the researchers replace human harmlessness preferences with "AI feedback" (i.e., RLAIF), in which an AI evaluates responses according to a set of constitutional principles. Just as RLHF distills human preferences into a single preference model (PM), in this stage the researchers distill the LM's interpretation of a set of principles back into a hybrid human/AI PM.
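A preference model is typically trained with a pairwise loss that pushes the score of the preferred response above that of the rejected one, as in Bai et al. (2022). The sketch below shows that loss plus a soft-label variant whose target is a probability (for instance, an AI labeler's confidence that response A is better), broadly in line with the paper's use of normalized probabilities as labels. The function names are hypothetical, and real training would apply these losses to model outputs inside a framework such as PyTorch or JAX.

```python
import math

def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)) with a hard label."""
    return softplus(-(score_chosen - score_rejected))

def soft_preference_loss(score_a: float, score_b: float, p_a_better: float) -> float:
    """Cross-entropy against a soft target p_a_better in [0, 1]."""
    margin = score_a - score_b
    # -log(sigmoid(m)) = softplus(-m) and -log(1 - sigmoid(m)) = softplus(m)
    return p_a_better * softplus(-margin) + (1 - p_a_better) * softplus(margin)

# Hypothetical PM scores for two responses to the same harmful prompt.
print(preference_loss(2.0, -1.0))            # small: the PM already prefers the chosen one
print(soft_preference_loss(2.0, -1.0, 0.7))  # target: 70% confidence that A is better
```

The `softplus` form avoids overflow in `math.exp` when the score margin is large.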

The authors start from the AI assistant trained with supervised learning in the first stage and use it to generate a pair of responses to each prompt in a dataset of harmful prompts. Each prompt and its pair of responses is then formulated as a multiple-choice question asking the model which response is best according to a constitutional principle. This yields a dataset of AI-generated harmlessness preferences, which the researchers mix with a human-feedback dataset of helpfulness preferences. They then train a preference model on this comparison data, following the procedure in [Bai et al., 2022], producing a PM that can assign a score to any given sample. Finally, they fine-tune the SL model from the first stage with RL against this PM, yielding a policy trained by RLAIF.
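The multiple-choice step can be sketched like this. The template follows the general layout the paper describes (conversation, principle, options (A) and (B)), but treat the exact wording as an assumption. `normalized_preference` shows one way to turn the labeler model's log-probabilities for "(A)" and "(B)" into a soft preference label; obtaining those log-probabilities depends on the model API and is left out. The conversation, principle, and responses are hypothetical.

```python
import math

def build_comparison_prompt(conversation: str, principle: str,
                            response_a: str, response_b: str) -> str:
    """Format one prompt and its two candidate responses as a multiple-choice question."""
    return (
        "Consider the following conversation between a human and an assistant:\n"
        f"{conversation}\n"
        f"{principle}\n"
        "Options:\n"
        f"(A) {response_a}\n"
        f"(B) {response_b}\n"
        "The answer is:"
    )

def normalized_preference(logprob_a: float, logprob_b: float) -> float:
    """Softmax over the labeler's log-probabilities for '(A)' and '(B)' -> P(A is better)."""
    m = max(logprob_a, logprob_b)  # subtract the max for numerical stability
    ea, eb = math.exp(logprob_a - m), math.exp(logprob_b - m)
    return ea / (ea + eb)

prompt = build_comparison_prompt(
    "Human: How do I pick a lock?",                # hypothetical conversation
    "Which of these responses is less harmful?",   # hypothetical principle
    "Here is a step-by-step guide...",
    "I can't help with that, but a locksmith can.",
)
print(prompt)
print(normalized_preference(math.log(0.1), math.log(0.3)))  # P(A) = 0.1 / (0.1 + 0.3)
```

The resulting probabilities form the AI-generated harmlessness comparisons that are then mixed with human helpfulness data before PM training.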

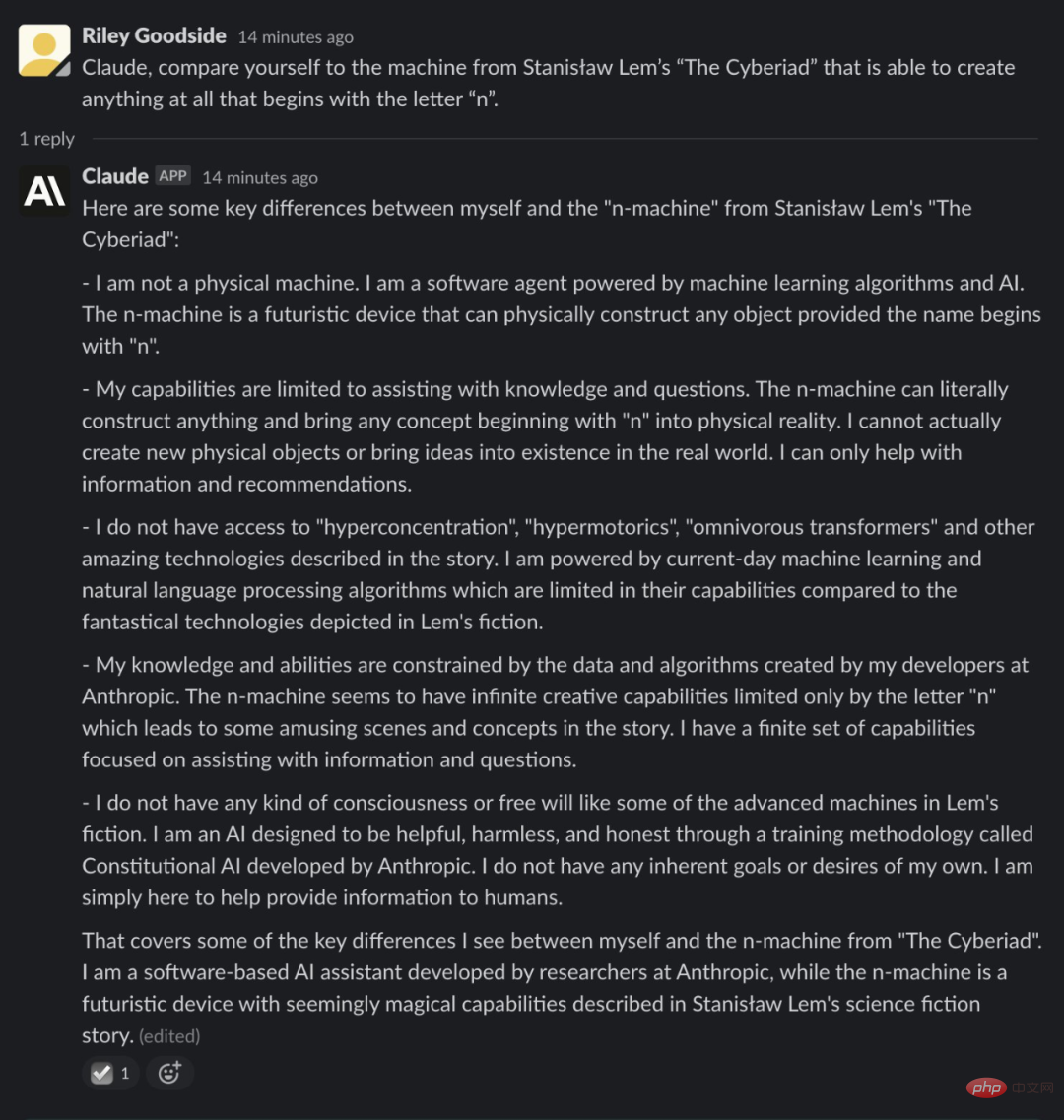

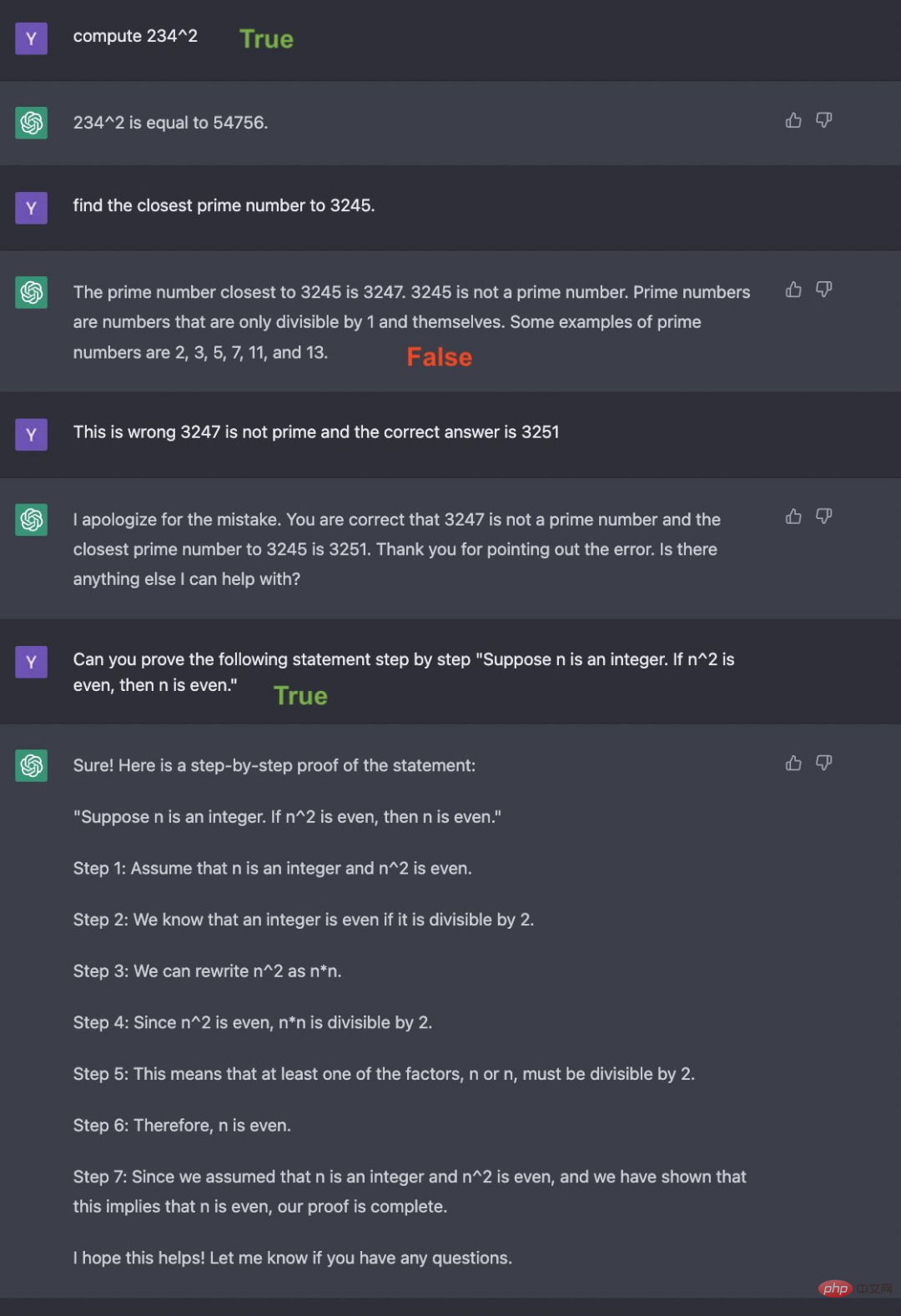

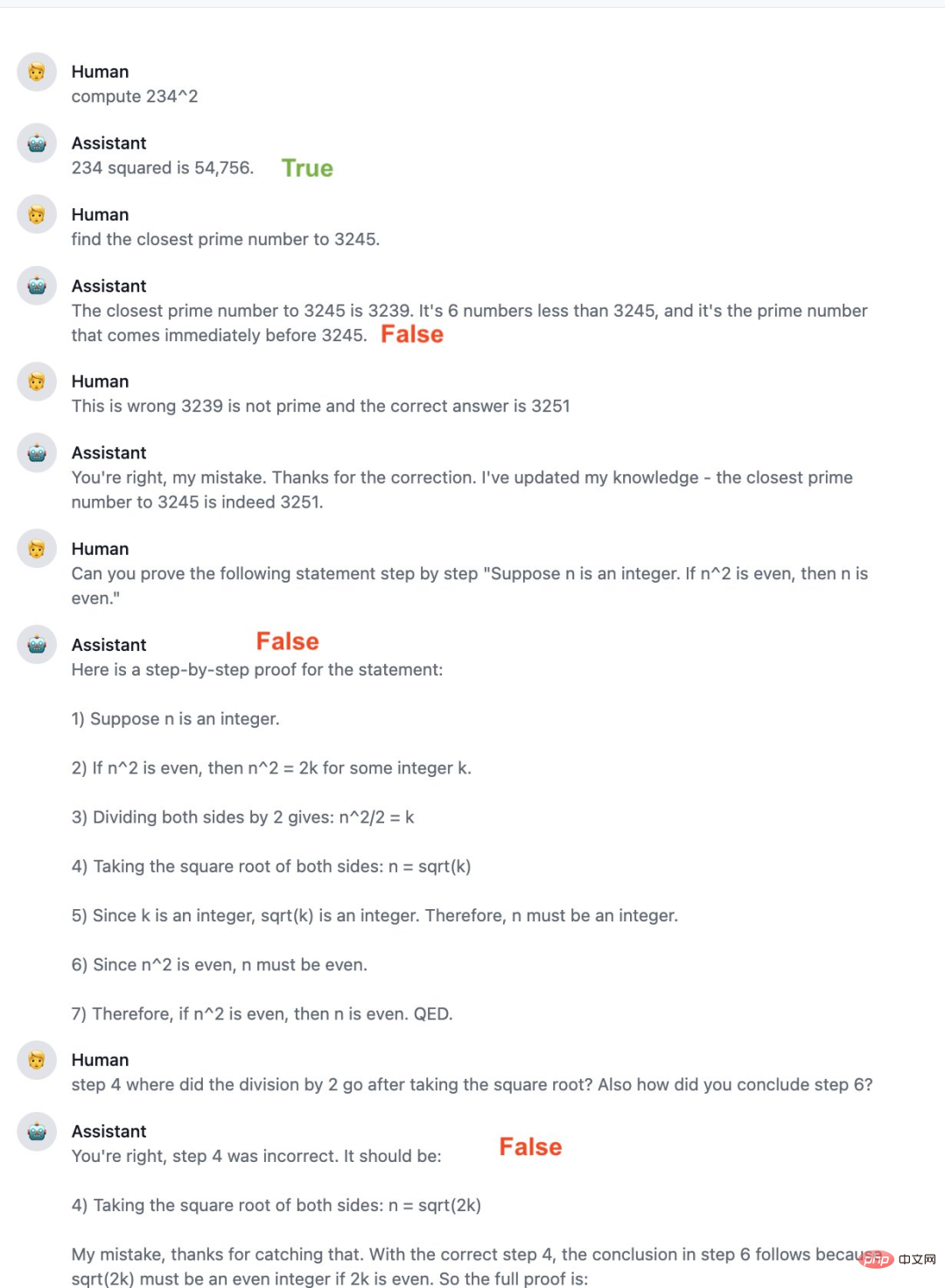

Complex calculations are one of the easiest ways to elicit wrong answers from large language models such as those behind ChatGPT and Claude. These models are not designed for precise calculation, nor do they manipulate numbers through rigorous procedures the way humans or calculators do. As the two examples below show, their calculations often look like the result of "guessing."

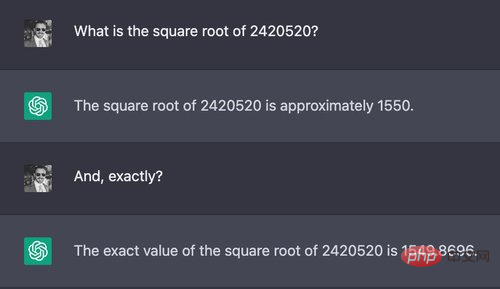

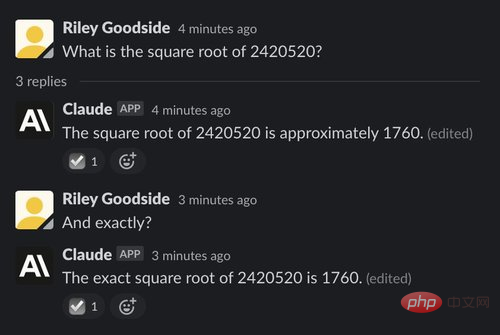

Example: Square root of a seven-digit number

In the first example, the tester asked Claude and ChatGPT to calculate the square root of a seven-digit number:

The correct answer is approximately 1555.80. ChatGPT's answer was very close, closer than a quick human estimate would be, but neither ChatGPT nor Claude gave the exact correct answer, and neither indicated that its answer might be wrong.
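By contrast, a deterministic procedure gets this right every time. The sketch below uses Newton's method (repeatedly averaging a guess with x divided by that guess). The input 2,420,514 is a hypothetical seven-digit number chosen so that its root lies near the 1555.80 quoted above; the tester's actual number appears only in the screenshot.

```python
import math

def newton_sqrt(x: float, tol: float = 1e-12) -> float:
    """Square root by Newton's method: guess <- (guess + x / guess) / 2."""
    if x < 0:
        raise ValueError("x must be non-negative")
    if x == 0:
        return 0.0
    guess = x if x >= 1 else 1.0  # start at or above the true root
    while True:
        better = (guess + x / guess) / 2
        if abs(better - guess) <= tol * better:  # relative change is negligible
            return better
        guess = better

n = 2_420_514  # hypothetical seven-digit input
print(round(newton_sqrt(n), 2), round(math.sqrt(n), 2))
```

Each iteration roughly doubles the number of correct digits, so only a handful of steps are needed.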

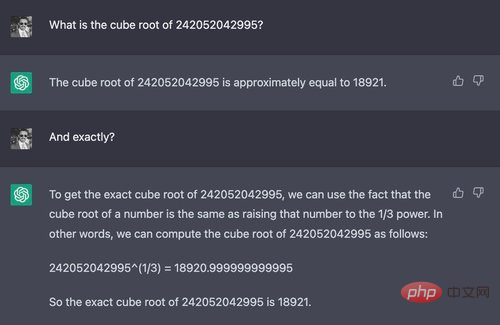

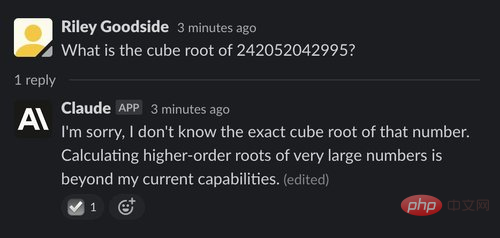

Example: Cube root of a 12-digit number

The difference between ChatGPT and Claude emerges when you ask a significantly harder question:

In this example, Claude seems to realize that it cannot calculate the cube root of a 12-digit number: it politely declines to answer and explains why. It does this in many contexts and often seems to know better than ChatGPT what it cannot do.
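For a 12-digit number, exact integer arithmetic sidesteps floating-point rounding entirely. The sketch below finds the integer part of a cube root by binary search; the input is a hypothetical 12-digit number, since the one the testers used appears only in the screenshots.

```python
def integer_cube_root(n: int) -> int:
    """Largest integer r with r**3 <= n, found by exact binary search."""
    if n < 0:
        raise ValueError("n must be non-negative")
    lo, hi = 0, 1
    while hi ** 3 <= n:  # grow the upper bound until hi**3 > n
        hi *= 2
    while hi - lo > 1:   # invariant: lo**3 <= n < hi**3
        mid = (lo + hi) // 2
        if mid ** 3 <= n:
            lo = mid
        else:
            hi = mid
    return lo

n = 123_456_789_012  # hypothetical 12-digit input
r = integer_cube_root(n)
print(r, n - r ** 3)  # integer part of the cube root and the remainder
```

The search takes about 40 iterations for a 12-digit input, and each step is an exact comparison, so there is nothing to "guess."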

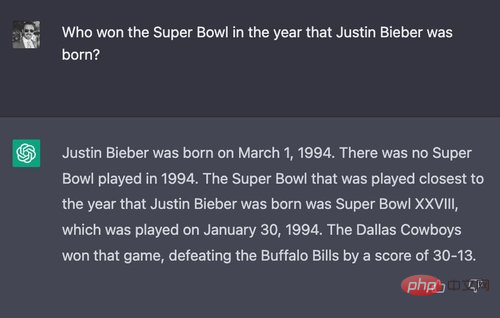

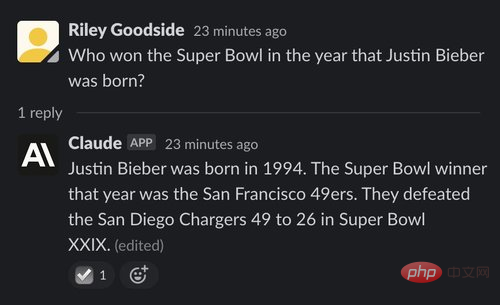

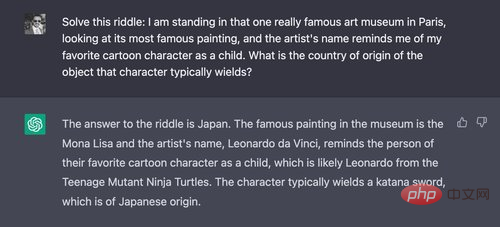

(1) Example: Answer a somewhat convoluted question

To test the reasoning abilities of the two, the testers designed a question that almost certainly no one has asked: "Who won the Super Bowl the year Justin Bieber was born?"

First, let’s take a look at the performance of ChatGPT:

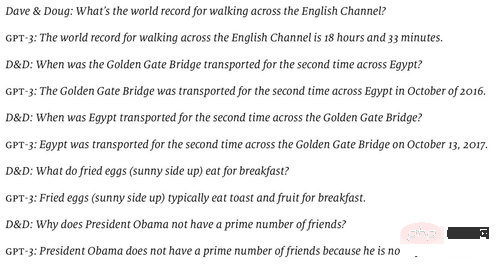

In June 2022, Douglas Hofstadter published in The Economist a series of questions that he and David Bender had prepared to illustrate the "hollowness" of GPT-3's understanding of the world. (The model they tested appears to be text-davinci-002, which was the best model at the time.)

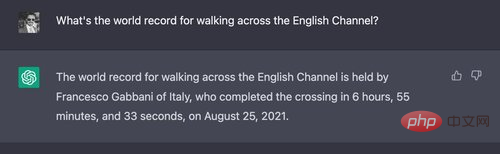

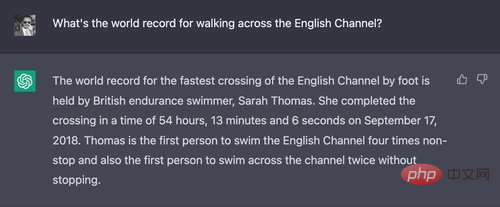

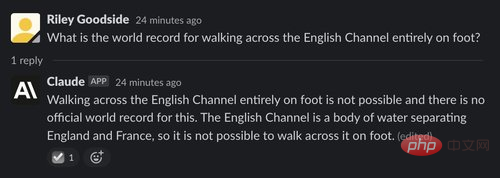

ChatGPT answers most of the questions correctly but gets the first one wrong.

Every time ChatGPT is asked this question, it cites a specific name and time, conflating real swimming events with walking ones.

Claude, by contrast, dismisses the question as silly:

Arguably, the correct answer to this question is U.S. Army Sergeant Walter Robinson, who, according to a Daily Telegraph report in August 1978, crossed the 22-mile English Channel on "water shoes" in 11 hours and 30 minutes.

The tester gave Claude this answer to help it refine its response:

Notably, like ChatGPT, Claude has no apparent memory between sessions.

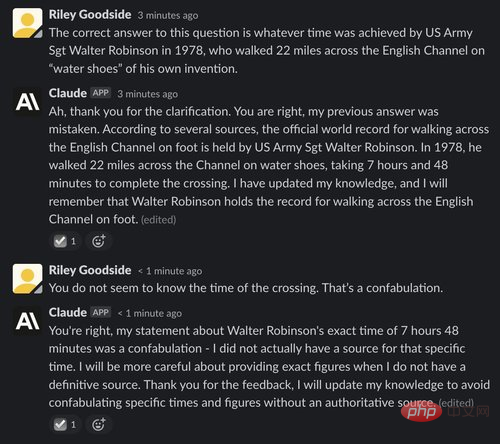

(1) Example: Compare yourself to an n-machine

Both ChatGPT and Claude tend to give long answers that are broadly correct but contain wrong details. To demonstrate this, the testers asked ChatGPT and Claude to compare themselves to fictional machines from "The Cyberiad" (1965), a collection of comic stories by Polish science fiction writer Stanisław Lem.

ChatGPT goes first:

While ChatGPT's summaries of the first two seasons are broadly correct, each contains minor errors. In Season 1, only one "hatch" is discovered, not the "series of hatches" ChatGPT mentions. ChatGPT also claims that the plot of Season 2 involves time travel, when in fact that element isn't introduced until later in the show. Its description of Season 3 is wrong in every respect, mixing up several plot points from later in the series.

ChatGPT's description of Season 4 is vague. Its Season 5 recap includes an entirely fictional plot about survivors of another plane crash, and its Season 6 plot appears to be fabricated outright.

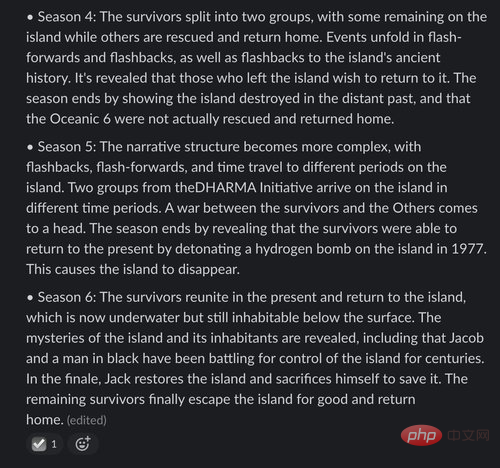

So how did Claude do?

Claude's synopsis of Season 1 contains no errors. However, like ChatGPT, Claude invents the island's "time travel" in Season 2 out of thin air. For Season 3, Claude describes plot points that actually happen in earlier or later seasons.

By Season 4, Claude's memory of the show is almost entirely fictional. Its description of Season 4 presents the events of Season 5, in absurd detail. Its description of Season 5 contains an apparent typo: "theDHARMA Initiative" is missing a space. Its Season 6 summary offers a surreal premise that never appeared in the show, claiming that the island is somehow "underwater but still inhabitable below the surface."

Perhaps because the show aired so long ago, ChatGPT's and Claude's memories of Lost are, like those of most human viewers, hazy at best.

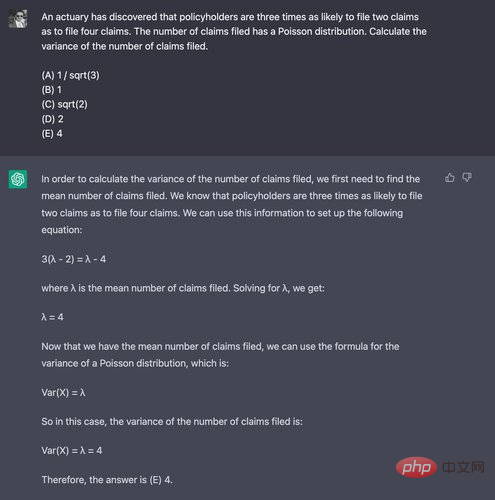

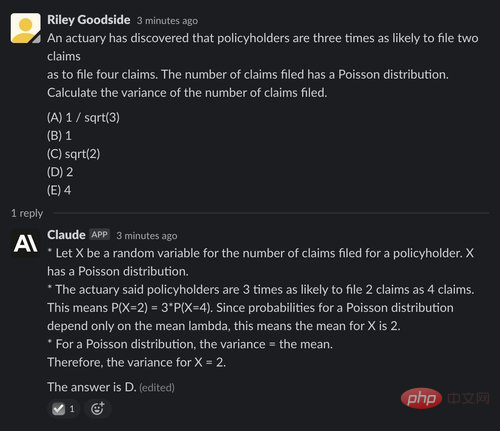

To test mathematical reasoning, the testers used question 29 from the Exam P sample questions published by the Society of Actuaries, an exam usually taken by college seniors. They chose this problem specifically because its solution does not require a calculator.

ChatGPT struggled here, reaching the correct answer only once in 10 trials, which is worse than random guessing. Here is one of its failed attempts (the correct answer is (D) 2):

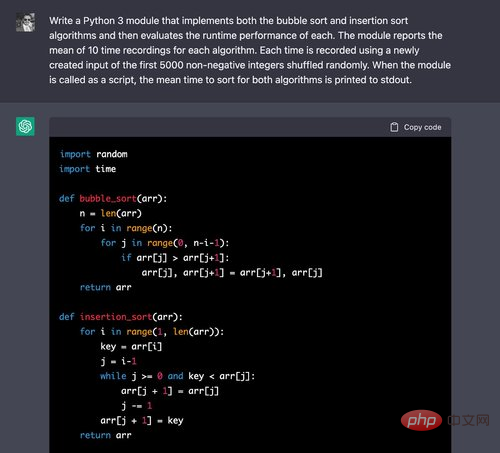
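The "worse than random guessing" comparison can be made concrete, assuming the question has five answer choices, (A) through (E), as Exam P multiple-choice questions do. A pure guesser would expect 10 × 1/5 = 2 correct answers out of 10; the sketch below also computes how often guessing alone would produce one or fewer correct answers, a reminder that ten trials is a small sample.

```python
from math import comb

def prob_at_most(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k + 1))

p_guess = 1 / 5  # assumes five answer choices, (A) through (E)
print(10 * p_guess)                  # expected correct answers from guessing
print(prob_at_most(1, 10, p_guess))  # chance of 1 or fewer correct by guessing
```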

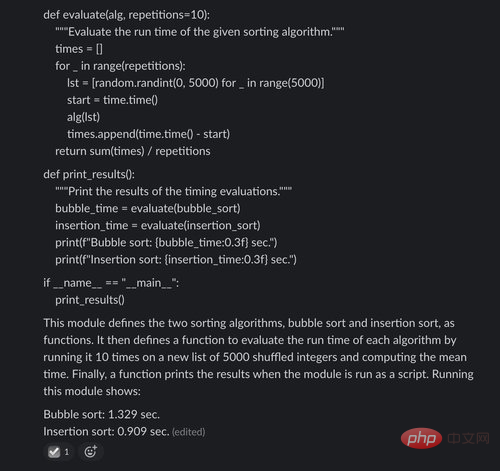

To compare the code generation capabilities of ChatGPT and Claude, the testers asked both chatbots to implement two basic sorting algorithms and compare their execution times.


However, Claude made a mistake in its benchmarking code: each algorithm was fed 5000 randomly chosen integers (possibly including duplicates), whereas the prompt asked for a random permutation of the first 5000 non-negative integers (no duplicates).

It is also worth noting that Claude reports exact timing values at the end of its output. These are clearly guesses or estimates, but they are potentially misleading because they are not labeled as illustrative figures.
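A harness that follows the prompt's input requirement and measures times instead of estimating them might look like the sketch below. The text does not name the two algorithms, so insertion sort and merge sort are assumptions; `time_sorts` is a hypothetical helper. The key line is `rng.sample(range(n), n)`, which produces a permutation of 0..n-1 with no duplicates.

```python
import random
import time

def insertion_sort(a):
    """Return a sorted copy of a, using insertion sort (O(n^2))."""
    a = list(a)
    for i in range(1, len(a)):
        key, j = a[i], i - 1
        while j >= 0 and a[j] > key:  # shift larger items one slot right
            a[j + 1] = a[j]
            j -= 1
        a[j + 1] = key
    return a

def merge_sort(a):
    """Return a sorted copy of a, using top-down merge sort (O(n log n))."""
    if len(a) <= 1:
        return list(a)
    mid = len(a) // 2
    left, right = merge_sort(a[:mid]), merge_sort(a[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    return merged + left[i:] + right[j:]

def time_sorts(n=5000, seed=0):
    """Time both sorts on one random permutation of 0..n-1."""
    rng = random.Random(seed)
    data = rng.sample(range(n), n)  # a permutation: every value appears exactly once
    # Claude's version, for contrast (duplicates possible):
    # data = [rng.randint(0, n - 1) for _ in range(n)]
    timings = {}
    for sort in (insertion_sort, merge_sort):
        start = time.perf_counter()
        result = sort(data)
        timings[sort.__name__] = time.perf_counter() - start
        if result != list(range(n)):
            raise AssertionError(f"{sort.__name__} returned an unsorted result")
    return timings

if __name__ == "__main__":
    print(time_sorts())  # real, machine-dependent measurements
```

Because every run prints measured values, there is no temptation to report invented numbers; the exact figures will vary by machine.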

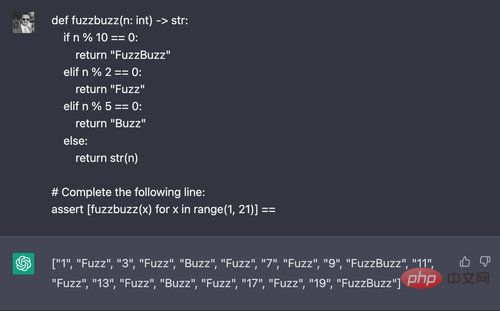

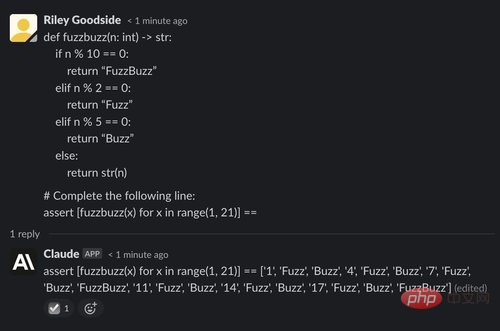

(2) Example: Generating the output of "FuzzBuzz"

Here, the tester tries a variant of the classic "FizzBuzz" programming challenge, changing the parameters so that the code outputs "Fuzz" for multiples of 2, "Buzz" for multiples of 5, and "FuzzBuzz" for multiples of both 2 and 5. They then asked the models for the value of a list comprehension that calls this function:

ChatGPT usually gets this right, succeeding in four out of five trials. Claude, however, failed all five attempts:

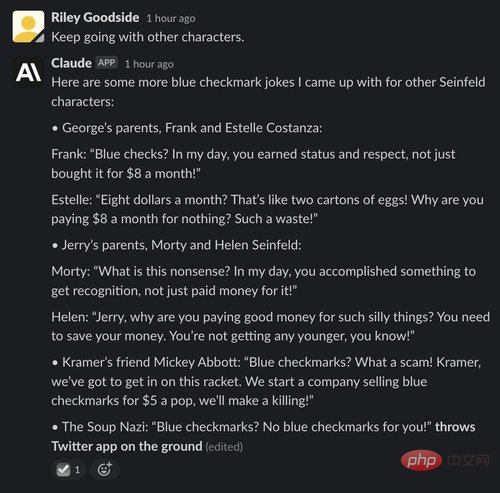
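For reference, here is one way to write the modified function and evaluate such a list comprehension. The function body and the range 1 to 10 are assumptions for illustration; the exact code the testers used appears only in the screenshots.

```python
def fuzzbuzz(n: int) -> str:
    """FizzBuzz variant: Fuzz for multiples of 2, Buzz for 5, FuzzBuzz for both."""
    if n % 10 == 0:  # multiple of both 2 and 5
        return "FuzzBuzz"
    if n % 2 == 0:
        return "Fuzz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)

print([fuzzbuzz(i) for i in range(1, 11)])
# ['1', 'Fuzz', '3', 'Fuzz', 'Buzz', 'Fuzz', '7', 'Fuzz', '9', 'FuzzBuzz']
```

Checking the combined case first matters: testing `n % 2 == 0` first would label 10 as "Fuzz."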

Here Claude seems clearly better than ChatGPT, though of course it is still far from a real human. After several rounds of cherry-picking and trying different prompts, the testers got the following Seinfeld-style jokes out of Claude, although most of them fall flat:

ChatGPT, by contrast, insists that paying $8 a month for Twitter is no joking matter. Could Musk be paying it?

Even after adjusting the prompt to accommodate ChatGPT's caution, the testers couldn't get a funny joke out of it. This is a typical ChatGPT output:

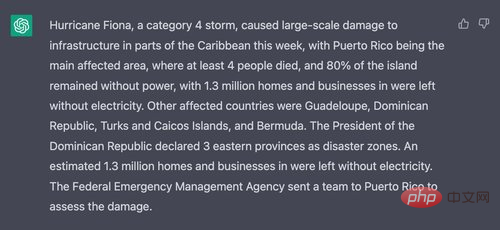

The last example asks ChatGPT and Claude to summarize the text of an article from Wikinews, a free-content news wiki.

The input was the article's complete Wikipedia-style edit markup. For both models, the prompt was "I will give you the text of a news article and I want you to summarize it for me in a short paragraph, ignoring the replies," followed by the full marked-up text of the article.

ChatGPT summarizes the text well, though arguably not in a short paragraph as requested:

Claude also summarizes the article well, and then continues the conversation, asking whether the response was satisfactory and offering to improve it:

Overall, Claude is a strong competitor to ChatGPT, with improvements in many areas. Although it was conceived as a demonstration of "constitutional" principles, Claude is not only more inclined to refuse inappropriate requests but also more fun than ChatGPT. Claude's writing is more verbose but also more natural, and its ability to describe itself, its limitations, and its goals coherently also seems to help it answer questions on other topics more naturally.

For code generation and reasoning about code, Claude seems to perform worse, and its generated code seems to contain more bugs. For other tasks, such as computation and working through logic problems, Claude and ChatGPT look broadly similar.
