This article is reproduced from Lei Feng.com. If you need to reprint, please go to the official website of Lei Feng.com to apply for authorization.

Large-scale neural networks are one of the hottest topics in artificial intelligence today. So how are such large models trained?

Recently OpenAI, the lab behind the large-scale pre-trained model GPT-3, published a blog post introducing four memory-saving parallel training methods for GPUs: data parallelism, pipeline parallelism, tensor parallelism, and Mixture of Experts (MoE).

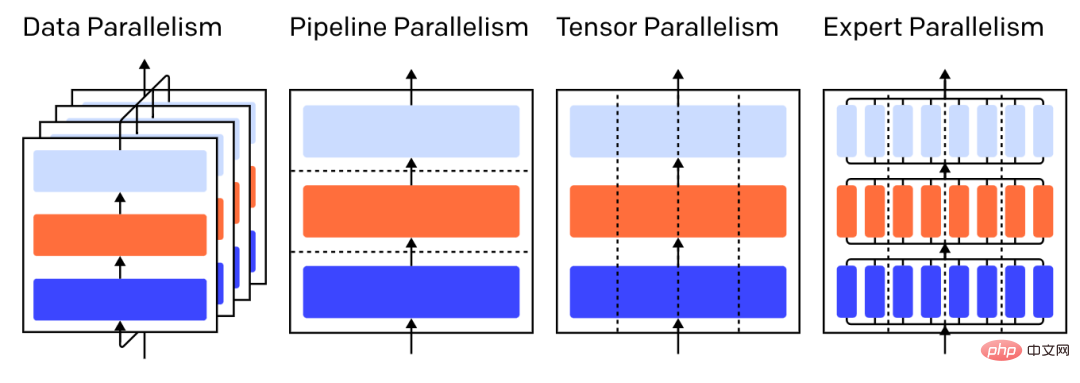

Note: Various parallel strategies on a three-layer model. Each color represents one layer, and dotted lines separate different GPUs.

"Data parallel training" means copying the same parameters to multiple GPUs (often called "workers") and assigning different examples to each GPU to process simultaneously.

Data parallelism alone still requires the model to fit into a single GPU's memory; it lets you use the compute of many GPUs at the cost of storing many duplicate copies of the parameters. That said, there are strategies to increase the effective RAM available to a GPU, such as temporarily offloading parameters to CPU memory between uses.

As each data parallel worker updates its copy of parameters, they need to coordinate with each other to ensure that each worker continues to have similar parameters. The simplest method is to introduce "blocking communication" between workers:

Step 1: Calculate the gradient on each worker independently;

Step 2: Average the gradients of different workers;

Step 3: Calculate the same new parameters independently on each worker.

Step 2 is a blocking average that requires transferring a large amount of data (proportional to the number of workers times the parameter size), which can hurt training throughput. Various asynchronous synchronization schemes remove this overhead, but they hurt learning efficiency, so in practice people generally stick with the synchronous approach.

In pipeline parallel training, sequential chunks of the model are partitioned across GPUs. Each GPU holds only a fraction of the parameters, so the same model consumes proportionally less memory per GPU.
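The three steps above can be sketched with simulated workers. This is a minimal illustration, not a real multi-GPU implementation: the one-parameter model `y = w * x`, the helper names, and the learning rate are all assumptions made for the example, and the "all-reduce" is just an in-process average.

```python
# Toy synchronous data-parallel SGD on a one-parameter model y = w * x.
# Each "worker" is simulated in-process; a real system would use one GPU per worker.

def local_gradient(w, shard):
    """Step 1: gradient of the mean squared error on this worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Step 2: blocking average; every worker receives the same result."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def data_parallel_step(weights, shards, lr):
    grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
    avg_grads = all_reduce_mean(grads)
    # Step 3: each worker applies the identical update to its own copy.
    return [w - lr * g for w, g in zip(weights, avg_grads)]

data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]  # generated with true w = 3
shards = [data[0:2], data[2:4]]                       # equal-sized shards, 2 workers
weights = [0.0, 0.0]                                  # identical replicas to start
for _ in range(200):
    weights = data_parallel_step(weights, shards, lr=0.01)
```

Because every replica starts from the same value and applies the same averaged gradient, the copies stay identical after every step, which is the property the blocking average is meant to guarantee.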

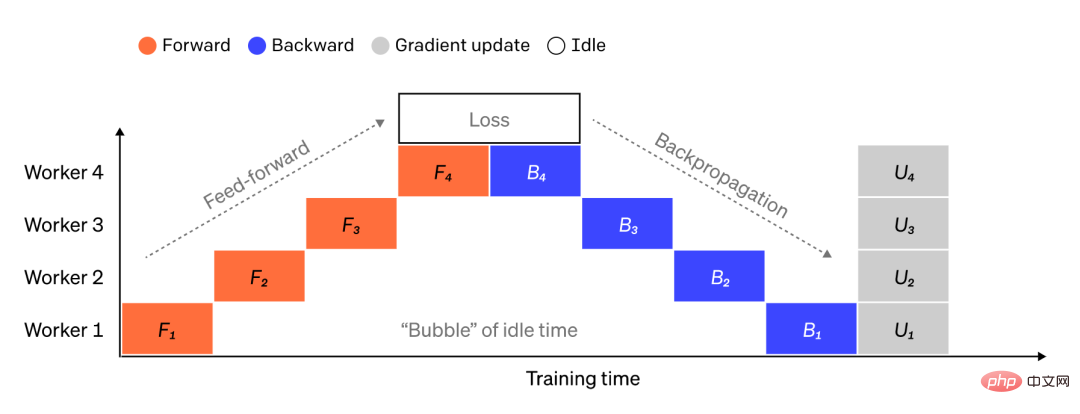

Splitting a large model into chunks of consecutive layers is straightforward. However, because there is a sequential dependency between the inputs and outputs of the layers, a naive implementation can leave a worker idle for long periods while it waits for the output of the previous machine to use as its input. These chunks of waiting time are called "bubbles", and they waste computation that the idle machines could otherwise perform.

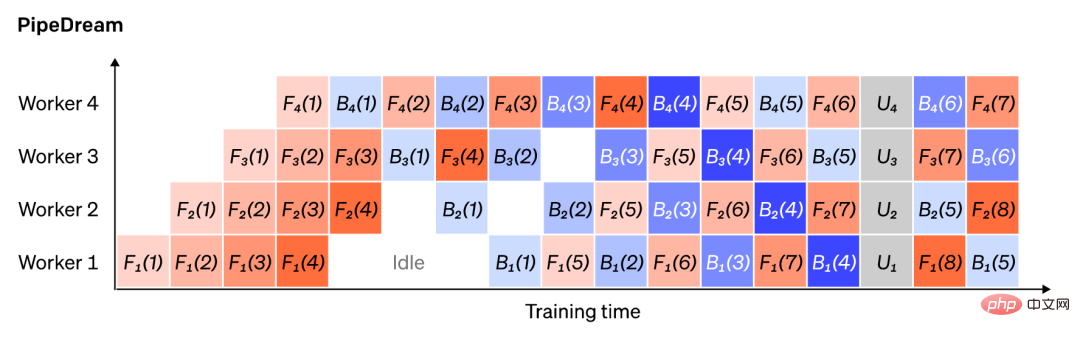

Caption: Illustration of a simple pipeline parallel setup where the model is vertically divided into 4 partitions. Worker 1 hosts the model parameters of layer 1 (closest to the input), while worker 4 hosts layer 4 (closest to the output). "F", "B" and "U" represent forward, backward and update operations respectively. The subscript indicates which worker the operation is to run on. Due to sequential dependencies, data is processed by one worker at a time, resulting in large idle time "bubbles".

We can reuse the idea from data parallelism to reduce the cost of the bubbles: each worker processes only a subset of the data elements at a time, which lets us overlap new computation with waiting time. The core idea is to split one batch into multiple microbatches. Each microbatch should be proportionally faster to process, and each worker begins working on the next microbatch as soon as it is available, which speeds up pipeline execution. With enough microbatches, workers are utilized most of the time, with only a minimal bubble at the beginning and end of each step. Gradients are averaged across microbatches, and parameters are updated only after all microbatches have completed.

The number of workers that the model is split across is commonly called the "pipeline depth".
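To see how the number of microbatches affects the bubbles, here is a rough simulation of the forward pass only. It assumes every (worker, microbatch) operation takes exactly one time unit and ignores communication cost; `forward_schedule` and `bubble_fraction` are hypothetical helper names for this sketch.

```python
def forward_schedule(num_workers, num_microbatches):
    """Return finish[w][m]: the time at which worker w finishes microbatch m.

    Assumes each forward op on one microbatch takes one time unit.
    """
    finish = [[0] * num_microbatches for _ in range(num_workers)]
    for w in range(num_workers):
        for m in range(num_microbatches):
            input_ready = finish[w - 1][m] if w > 0 else 0  # previous worker's output
            worker_free = finish[w][m - 1] if m > 0 else 0  # previous microbatch done
            finish[w][m] = max(input_ready, worker_free) + 1
    return finish

def bubble_fraction(num_workers, num_microbatches):
    """Fraction of the forward pass that each worker spends idle."""
    makespan = forward_schedule(num_workers, num_microbatches)[-1][-1]
    busy = num_microbatches  # each worker runs one op per microbatch
    return 1 - busy / makespan

for m in (1, 4, 16, 64):
    print(m, round(bubble_fraction(4, m), 3))
# prints:
# 1 0.75
# 4 0.429
# 16 0.158
# 64 0.045
```

Under these assumptions, a pipeline depth of 4 with a single microbatch leaves each worker idle 75% of the time, while 64 microbatches bring the idle share below 5%.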

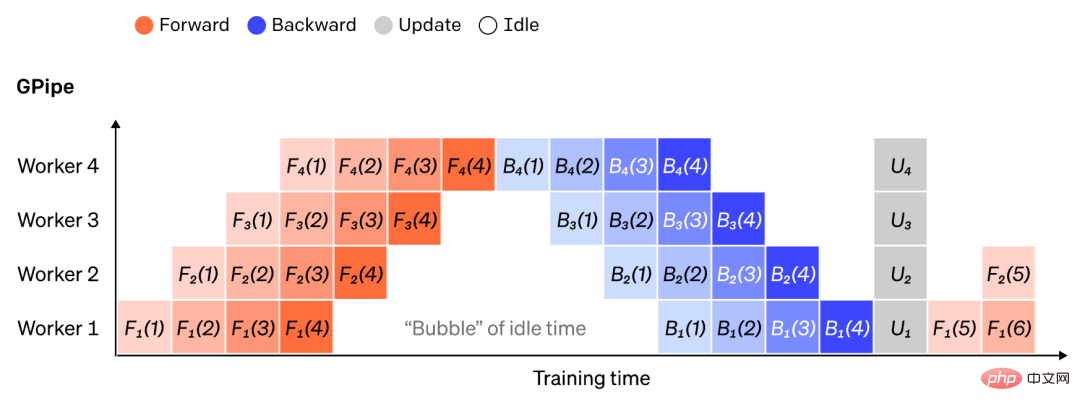

During the forward pass, a worker only needs to send the output of its chunk of layers (called "activations") to the next worker; during the backward pass, it only sends the gradients on those activations to the previous worker. There is a large design space in how to schedule these passes and how to aggregate gradients across microbatches. For example, GPipe has each worker process forward and backward passes consecutively and then aggregates the gradients from the microbatches synchronously at the end, while PipeDream schedules each worker to alternate between forward and backward passes.

Note: Comparison of the GPipe and PipeDream pipelining schemes, using 4 microbatches per batch. Microbatches 1-8 correspond to two consecutive batches of data. The number in the figure indicates which microbatch is being operated on, and the subscript marks the worker ID. Note that PipeDream achieves greater efficiency by performing some computations with stale parameters.

Pipeline parallelism splits a model "vertically" by layer. It is also possible to split certain operations "horizontally" within a layer, which is usually called tensor parallel training.

For many modern models (e.g. the Transformer), the computational bottleneck is multiplying an activation batch matrix by a large weight matrix. Matrix multiplication can be thought of as dot products between pairs of rows and columns; the dot products can be computed independently on different GPUs, or each part of a dot product can be computed on a different GPU and the results summed. With either strategy, we can split the weight matrix into evenly sized "shards", host each shard on a different GPU, and use that shard to compute the relevant part of the overall matrix product before communicating to combine the results.
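Both splitting strategies can be checked on a small example. The sketch below uses plain Python lists in place of GPU tensors and assumes the split dimension divides evenly by the number of shards; `column_parallel` and `row_parallel` are illustrative names, not functions from any library.

```python
def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def column_parallel(x, w, num_gpus):
    """Split W by columns: each shard computes whole dot products for its columns."""
    cols = len(w[0]) // num_gpus
    partials = []
    for g in range(num_gpus):
        w_shard = [row[g * cols:(g + 1) * cols] for row in w]
        partials.append(matmul(x, w_shard))
    # Combine by concatenating output columns (an all-gather in a real system).
    return [sum((p[i] for p in partials), []) for i in range(len(x))]

def row_parallel(x, w, num_gpus):
    """Split W by rows and X by columns: each shard computes part of every dot product."""
    rows = len(w) // num_gpus
    partials = []
    for g in range(num_gpus):
        x_shard = [row[g * rows:(g + 1) * rows] for row in x]
        w_shard = w[g * rows:(g + 1) * rows]
        partials.append(matmul(x_shard, w_shard))
    # Combine by summing partial products elementwise (an all-reduce in a real system).
    return [[sum(p[i][j] for p in partials) for j in range(len(w[0]))]
            for i in range(len(x))]

x = [[1, 2, 3, 4], [5, 6, 7, 8]]       # activations: batch of 2, 4 features
w = [[1, 0], [0, 1], [2, 2], [1, -1]]  # weights: 4 x 2
reference = matmul(x, w)               # [[11, 4], [27, 12]]
```

Both `column_parallel(x, w, 2)` and `row_parallel(x, w, 2)` reproduce `reference`; the difference lies in what has to be communicated at the end, a concatenation in the first case and a sum in the second.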

One example is Megatron-LM, which parallelizes the matrix multiplications within the Transformer's self-attention and MLP layers. PTD-P uses tensor, data, and pipeline parallelism; its pipeline schedule assigns multiple non-consecutive layers to each device, reducing bubble overhead at the cost of more network communication.

Sometimes the input to the network can be parallelized across a dimension with a high degree of parallel computation relative to cross-communication. Sequence parallelism is one such idea: an input sequence is split across time into multiple sub-examples, which proportionally reduces peak memory consumption by allowing the computation to proceed on more granularly sized examples.

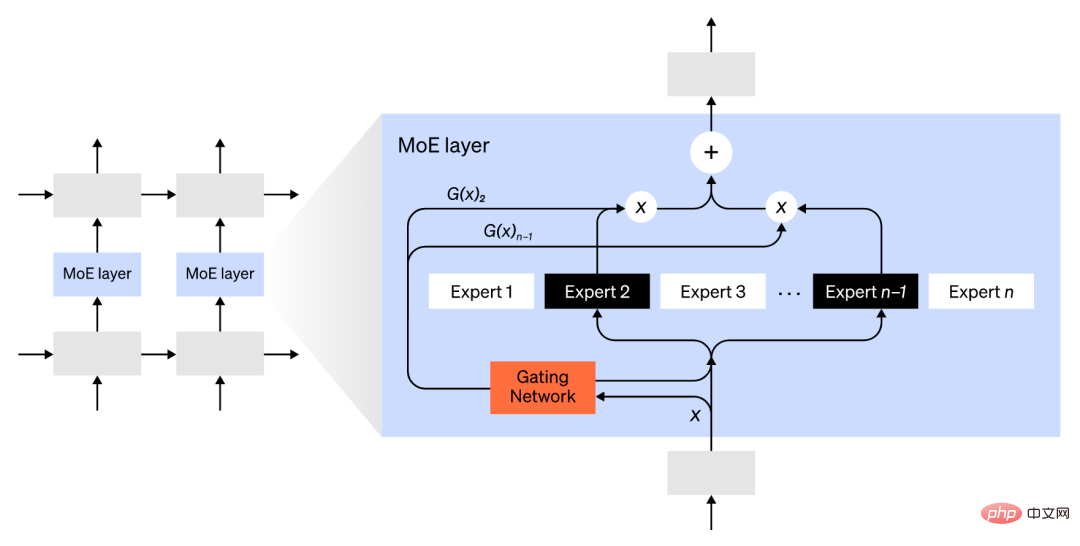

With the Mixture of Experts (MoE) approach, only a fraction of the network is used to compute the output for any one input.

One example approach is to have many sets of weights and let the network choose which set to use at inference time via a gating mechanism. This enables many more parameters without increased computation cost. Each set of weights is called an "expert", and the hope is that the network will learn to assign specialized computations and skills to each expert. Different experts can be hosted on different GPUs, providing a clear way to scale up the number of GPUs used for a model.

Illustration: The gating network selects only 2 out of n experts.

GShard scales an MoE Transformer up to 600 billion parameters, with a scheme in which only the MoE layers are split across multiple TPU devices while the other layers are fully replicated. Switch Transformer scales model size to trillions of parameters with even higher sparsity by routing each input to a single expert.

There are many other computational strategies that make training increasingly large neural networks more tractable. For example:
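A toy version of this top-2 routing is sketched below. Everything in it is an assumption made for illustration: the input is a single scalar, the "gating network" is one fixed coefficient per expert rather than a learned layer, and each expert simply scales its input. The point is only that the experts that are not selected are never evaluated.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, k=2):
    """Route scalar input x to the top-k experts and mix their outputs.

    gate_weights: one hypothetical gating coefficient per expert (score = g * x).
    experts: list of callables; only the k selected ones are called.
    """
    scores = [g * x for g in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    probs = softmax([scores[i] for i in top])  # renormalize over the chosen experts
    output = sum(p * experts[i](x) for p, i in zip(probs, top))
    return output, top

calls = []  # records which experts actually ran

def make_expert(idx, scale):
    def expert(x):
        calls.append(idx)
        return scale * x
    return expert

experts = [make_expert(i, s) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
gate_weights = [0.1, 0.9, -0.5, 0.9]
y, chosen = moe_forward(2.0, gate_weights, experts)
```

With these made-up gate coefficients, experts 1 and 3 tie for the highest score, so each receives weight 0.5 and the output is `0.5 * 4.0 + 0.5 * 8.0 = 6.0`; experts 0 and 2 are never called.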

To compute gradients, the original activations need to be saved, which can consume a lot of device RAM. Checkpointing (also known as activation recomputation) stores any subset of the activations and recomputes the intermediate ones just in time during the backward pass. This saves a lot of memory at a computational cost of at most one additional full forward pass. One can also continually trade off between compute and memory cost through selective activation recomputation, which checkpoints the subsets of activations that are relatively expensive to store but cheap to compute.

Mixed precision training trains the model using lower-precision numbers (most commonly FP16). Modern accelerators reach much higher FLOP counts with lower-precision numbers, and using them also saves device RAM. With proper care, the resulting model loses almost no accuracy.

Offloading temporarily moves unused data to the CPU or across different devices and reads it back when needed. A naive implementation slows training down considerably, but a sophisticated implementation prefetches the data so that the device never has to wait for it. One implementation of this idea is ZeRO, which splits the parameters, gradients, and optimizer states across all available hardware and materializes them as needed.

Memory-efficient optimizers, such as Adafactor, have been proposed to reduce the memory footprint of the running state maintained by the optimizer.
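The trade-off can be illustrated on a chain of scalar layers. This is a sketch under simplifying assumptions, not how any framework implements it: each toy layer is `tanh(w * x)`, the gradient is taken with respect to the network input, and checkpoints are placed at a fixed interval `every`.

```python
import math

def forward(x, w):
    """One toy layer: y = tanh(w * x)."""
    return math.tanh(w * x)

def grad_input(x, w):
    """dy/dx for that layer; needs the layer's input x."""
    return w * (1 - math.tanh(w * x) ** 2)

def backward_full(x0, weights):
    """Baseline: store the input of every layer."""
    acts = [x0]
    for w in weights:
        acts.append(forward(acts[-1], w))
    g = 1.0
    for i in reversed(range(len(weights))):
        g *= grad_input(acts[i], weights[i])
    return g, len(acts) - 1  # the final output is not needed for the gradient

def backward_checkpointed(x0, weights, every):
    """Store only every `every`-th layer input; recompute the rest per segment."""
    checkpoints = {0: x0}
    x = x0
    for i, w in enumerate(weights):
        x = forward(x, w)
        if (i + 1) % every == 0 and i + 1 < len(weights):
            checkpoints[i + 1] = x
    g, peak = 1.0, 0
    for start in sorted(checkpoints, reverse=True):
        end = min(start + every, len(weights))
        seg = [checkpoints[start]]  # inputs of layers start .. end - 1
        for i in range(start, end - 1):
            seg.append(forward(seg[-1], weights[i]))  # recomputation
        peak = max(peak, len(checkpoints) + len(seg) - 1)
        for i in reversed(range(start, end)):
            g *= grad_input(seg[i - start], weights[i])
    return g, peak

weights = [0.5, -1.2, 0.8, 1.5, -0.7, 0.9, 1.1, -0.4]
g_full, stored_full = backward_full(0.3, weights)
g_ckpt, stored_ckpt = backward_checkpointed(0.3, weights, every=4)
```

For these eight layers the two gradients agree, while the peak number of stored activations drops from 8 to 5, paid for with six recomputed forward calls (fewer than one full forward pass).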
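One common form of such care, not spelled out in the text above and shown here only as an assumed example, is to keep a higher-precision master copy of the weights and round to FP16 only for computation. The sketch below uses the standard library's half-precision format code to emulate FP16 rounding; a Python float stands in for the higher-precision master weight, and the update size is a made-up value.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

update = 1e-4  # hypothetical per-step weight update (learning rate * gradient)
steps = 10

# Pure FP16: the weight is rounded back to FP16 after every update.
w_fp16 = to_fp16(1.0)
for _ in range(steps):
    w_fp16 = to_fp16(w_fp16 + update)

# Mixed precision: accumulate into a higher-precision master copy and
# round to FP16 only when producing the copy used for computation.
w_master = 1.0
for _ in range(steps):
    w_master += update
w_compute = to_fp16(w_master)
```

Near 1.0 the spacing between adjacent FP16 values is about 0.00098, so each update of 0.0001 is rounded away and `w_fp16` never moves from 1.0, whereas the master copy accumulates the updates and `w_compute` ends at 1.0009765625.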

Compression can also be used to store intermediate results in the network. For example, Gist compresses activations saved for backward passes; DALL-E compresses gradients before synchronizing them.
