Meta Platforms' artificial intelligence division recently said it is teaching AI models to navigate the physical world using only a small amount of training data, and that it has made rapid progress.

This research could significantly shorten the time an AI model needs to acquire visual navigation capabilities. Previously, reaching that goal required repeated reinforcement learning on large datasets.

Meta AI researchers said this work on visual navigation will have a significant impact on the virtual world. The basic idea of the project is not complicated: help AI navigate physical space the way humans do, simply through observation and exploration.

Meta's AI division explained, "For example, if we want AR glasses to guide us to our keys, we must find a way to help AI understand the layout of unfamiliar and changing environments. For a need this small and fine-grained, it is impossible to rely forever on high-precision preset maps that consume a lot of computing power. Humans do not need to know the exact location or length of a coffee table to move around its corners without colliding with it."

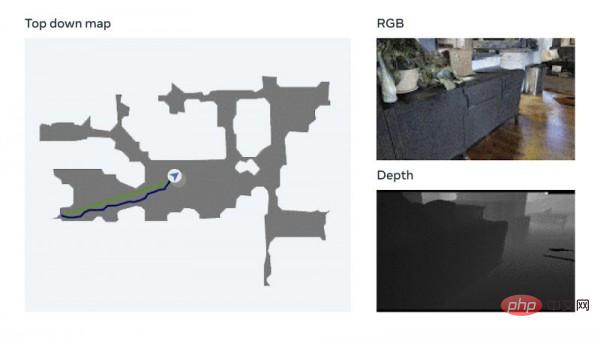

To this end, Meta decided to focus on "embodied AI", that is, training AI systems through interaction in 3D simulations. In this area, Meta said it has built a promising point-goal navigation model that can navigate new environments without any map or GPS sensor.

The model uses a technique called visual odometry, which lets the AI track its current position from visual input. Meta said its data augmentation technique can quickly train effective neural models without manual data annotation. Meta also said it has tested the model on its own Habitat 2.0 embodied AI training platform, which runs simulations of virtual spaces, where it reached a 94% success rate on the Realistic PointNav benchmark task.

Meta explained, "Although our method has not fully solved every scenario in the dataset, this research provides initial evidence that explicit mapping is not necessarily required to navigate in real-world environments."

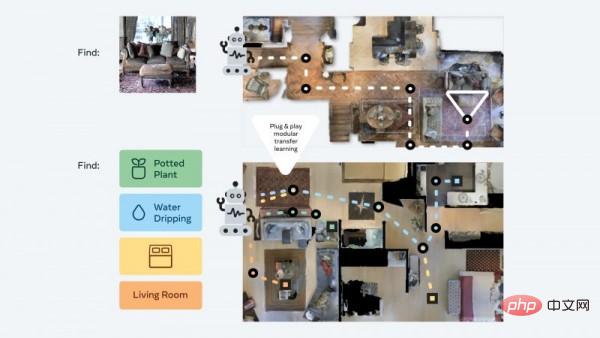

To further improve map-free navigation training, Meta has built a training dataset called Habitat-Web, which contains more than 100,000 human demonstrations of object-goal navigation. The Habitat simulator, running in a web browser, connects to Amazon's Mechanical Turk service so that users can safely operate virtual robots remotely. Meta said the resulting data will be used as training material to help AI agents achieve "state-of-the-art results." Scanning a room to understand its overall layout and checking corners for obstacles are examples of efficient object-search behaviors that AI can learn from humans.

In addition, the Meta AI team has developed a "plug and play" modular approach that helps robots generalize across a variety of semantic navigation tasks and goal modalities through a unique "zero-shot experience learning framework". In this way, AI agents can acquire basic navigation skills without resource-intensive maps and training, and can perform different tasks in a 3D environment without additional adjustment.

Meta explained that these agents are trained by repeatedly searching for image goals: they receive a photo taken at a random location in the environment and then try to navigate autonomously to that location. Meta researchers said, "Our method uses 12.5 times less training data and achieves a success rate 14% higher than the latest transfer learning techniques."

Constellation Research analyst Holger Mueller said in an interview that this latest development is expected to play a key role in Meta's metaverse plans. He believes that if virtual worlds are to become the norm, AI must be able to understand this new kind of space, and the cost of that understanding must not be too high.

Mueller added, "AI's ability to understand the physical world needs to be scaled through software-based methods. Meta is taking this path and has made progress in embodied AI, developing software that can autonomously understand its surroundings without training. I'm excited to see early practical applications of this."

Those real-world use cases may not be far off. Meta said the next step is to extend these results from navigation to mobile manipulation and to develop AI agents that can perform specific tasks, such as identifying a wallet and returning it to its owner.
