OpenAI, which seems to have set GPT aside for the moment, has recently taken up something new.

After training on a massive amount of unlabeled video and a small amount of labeled data, its AI has finally learned how to make a diamond pickaxe in Minecraft.

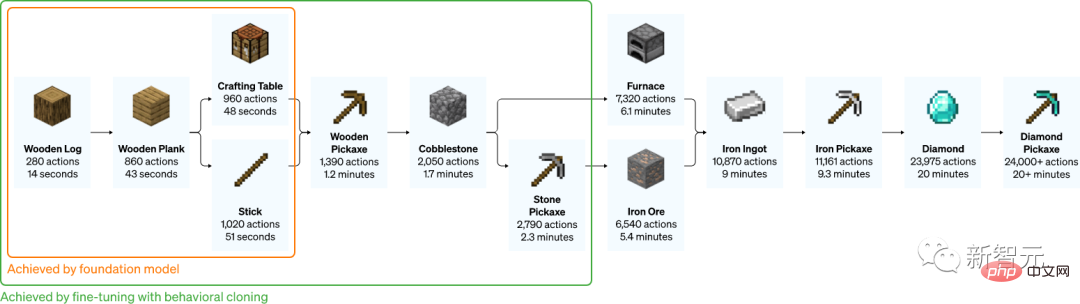

The entire process takes a hardcore player at least 20 minutes to complete, and requires a total of 24,000 operations.

The task looks simple, but it is very difficult for an AI.

A 7-year-old child can learn it after watching it for 10 minutes

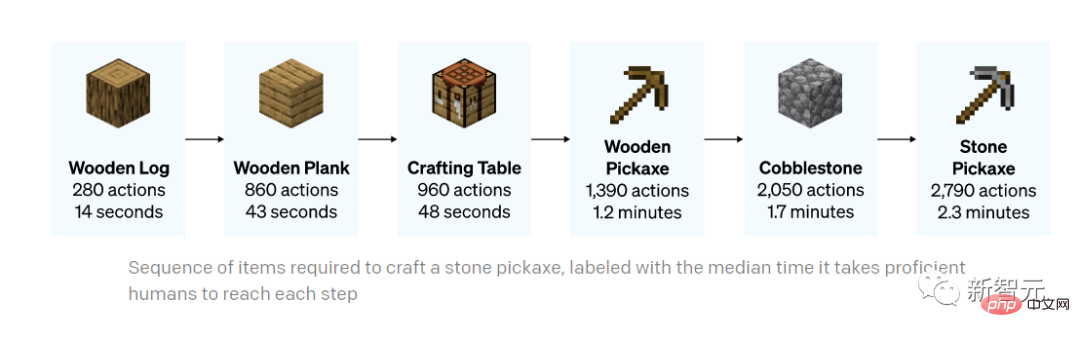

For the simplest tool, a wooden pickaxe, it is not too difficult for a human player to learn the process from scratch.

One gamer can teach the next in less than 3 minutes with a single video.

The demonstration video is 2 minutes and 52 seconds long

Making a diamond pickaxe, however, is much more complicated.

But even so, a 7-year-old child only needs to watch a ten-minute demonstration video to learn it.

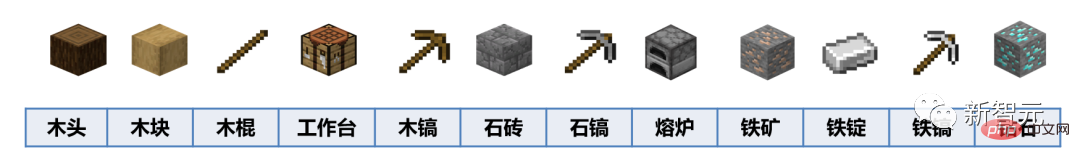

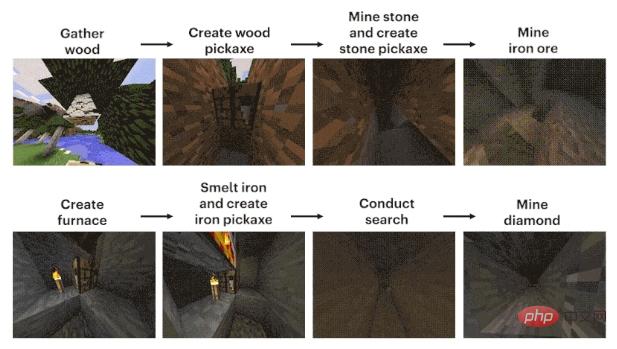

The main difficulty of this task is finding and mining the diamond ore.

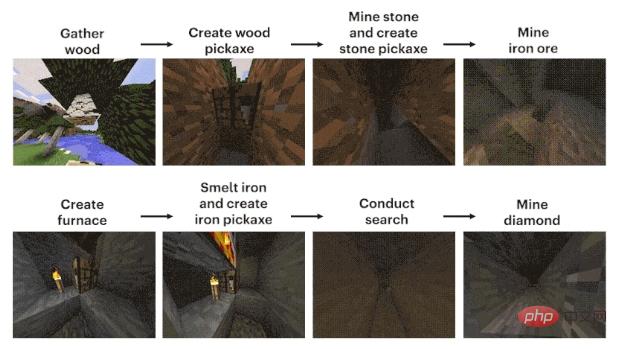

The process can be roughly summarized in 12 steps: first, chop the pixelated "log" blocks with bare hands, then craft planks from the logs, sticks from the planks, and a crafting table from the planks; use the crafting table to make a wooden pickaxe, the wooden pickaxe to mine stone, stone and sticks to make a stone pickaxe, stone to build a furnace, the stone pickaxe to mine iron ore, the furnace to smelt the iron ore into iron ingots, the iron ingots to make an iron pickaxe, and the iron pickaxe to mine diamonds.
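One way to picture this chain is as a dependency graph, where each item can only be obtained once its prerequisites exist. The Python sketch below is a simplified illustration: the item names and the `PREREQS` table are assumptions made for this example (quantities and exact recipes are ignored), and the ordering is computed with the standard library's `graphlib`.

```python
from graphlib import TopologicalSorter

# Hypothetical, simplified prerequisite table: each item maps to the items
# or tools that must already exist before it can be obtained.
# Quantities and exact recipes are deliberately ignored.
PREREQS = {
    "log": set(),
    "planks": {"log"},
    "sticks": {"planks"},
    "crafting_table": {"planks"},
    "wooden_pickaxe": {"planks", "sticks", "crafting_table"},
    "stone": {"wooden_pickaxe"},
    "stone_pickaxe": {"stone", "sticks", "crafting_table"},
    "furnace": {"stone", "crafting_table"},
    "iron_ore": {"stone_pickaxe"},
    "iron_ingot": {"iron_ore", "furnace"},
    "iron_pickaxe": {"iron_ingot", "sticks", "crafting_table"},
    "diamond": {"iron_pickaxe"},
}


def crafting_order(prereqs):
    """Return one valid order in which every item comes after its prerequisites."""
    return list(TopologicalSorter(prereqs).static_order())


order = crafting_order(PREREQS)
for step, item in enumerate(order, start=1):
    print(step, item)
```

Several orders are valid (sticks and the crafting table can be swapped, for example), but every one of them starts with logs and ends with diamonds, which is why a single missed step stalls the whole run.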

Now, the pressure is on the AI side.

As it happens, CMU, OpenAI, DeepMind, Microsoft Research, and other institutions have been running a related competition, MineRL, since 2019.

Contestants need to develop an AI agent that can "create tools independently from scratch and automatically find and mine diamonds". The winning condition is also very simple: the fastest agent wins.

How did it go?

The verdict after the first MineRL competition was that "a 7-year-old child learned it after watching a 10-minute video, but the AI still couldn't figure it out after 8 million steps." Even so, the competition was written up in Nature magazine.

As a sandbox building game, Minecraft leaves both player strategy and the in-game world highly open-ended, which makes it particularly suitable as a testing ground and touchstone for the learning and decision-making abilities of AI models.

And because the game is so hugely popular, Minecraft videos are easy to find online.

However, whether a video is a building tutorial or a showcase of someone's own work, to a certain extent it shows only the result on the screen.

In other words, people watching the video can see what the uploader did and what came of it, but they have no way of knowing exactly how it was done.

To be more specific, what appears on the screen is only the result. The actual steps are the uploader's constant key presses and mouse movements, and that part cannot be seen.

Often the footage has been edited as well, so it is unlikely that a person could learn from watching it, let alone an AI.

To make matters worse, many players complain that chopping wood in the game is boring, too much like doing homework or working through chores. As a result, after a wave of updates, many tools can now be picked up for free... Now, even the data is hard to find.

If OpenAI wants an AI to learn to play Minecraft, it must find a way to put this massive amount of unlabeled video data to use.

That is how VPT (Video PreTraining) came into being.

Paper address: https://cdn.openai.com/vpt/Paper.pdf

The method is new, but it is not complicated: it is a semi-supervised imitation learning method.

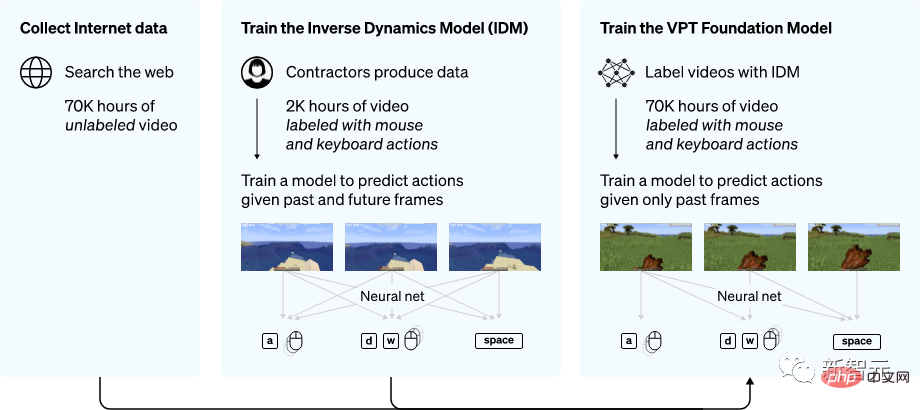

First, collect a batch of labeled data from contractors playing the game, which includes both the video and a record of their keyboard and mouse actions.

Overview of the VPT method

The researchers then used this data to train an inverse dynamics model (IDM), which infers the keyboard and mouse action taken at each step of a video.

This makes the task much simpler: working out an action from the frames before and after it is easier than predicting the next action from past frames alone, so far less data is needed to achieve the goal.

Once the IDM has been trained on the small amount of contractor data, it can be used to label a much larger unlabeled dataset.
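To make the pipeline concrete, here is a toy sketch in Python. It is not OpenAI's implementation: frames are reduced to a 1-D position, the "IDM" is a lookup table rather than a neural network, and every name in it (`train_idm`, `pseudo_label`, `behavioral_cloning`, the sample clips) is made up for illustration. It only shows the three stages: train an IDM on a little labeled data, pseudo-label unlabeled video, then clone behavior from the pseudo-labels.

```python
from collections import Counter, defaultdict


def train_idm(labeled_clips):
    """Toy inverse dynamics model: map an observed frame change to the action that caused it."""
    votes = defaultdict(Counter)
    for frames, actions in labeled_clips:
        for t, action in enumerate(actions):
            delta = frames[t + 1] - frames[t]  # uses the frame *after* the action
            votes[delta][action] += 1
    return {delta: counts.most_common(1)[0][0] for delta, counts in votes.items()}


def pseudo_label(idm, frames):
    """Infer one action per transition; None if the IDM never saw that change."""
    return [idm.get(after - before) for before, after in zip(frames, frames[1:])]


def behavioral_cloning(clips):
    """Toy policy: the most frequent action taken in each observed frame (state)."""
    votes = defaultdict(Counter)
    for frames, actions in clips:
        for frame, action in zip(frames, actions):
            if action is not None:
                votes[frame][action] += 1
    return {frame: counts.most_common(1)[0][0] for frame, counts in votes.items()}


# Stage 1: a small labeled set (video frames plus recorded actions).
contractor_clips = [
    ([0, 1, 2, 1], ["right", "right", "left"]),
    ([5, 5, 4], ["noop", "left"]),
]
idm = train_idm(contractor_clips)

# Stage 2: label a larger set of videos that came with no action record.
web_videos = [[3, 4, 5, 6], [6, 7, 7]]
pseudo_labeled = [(frames, pseudo_label(idm, frames)) for frames in web_videos]

# Stage 3: behavioral cloning on the pseudo-labeled videos.
policy = behavioral_cloning(pseudo_labeled)
print(policy)
```

The point of the design is that the expensive part, recording real keyboard and mouse input, is only needed for the small first stage.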

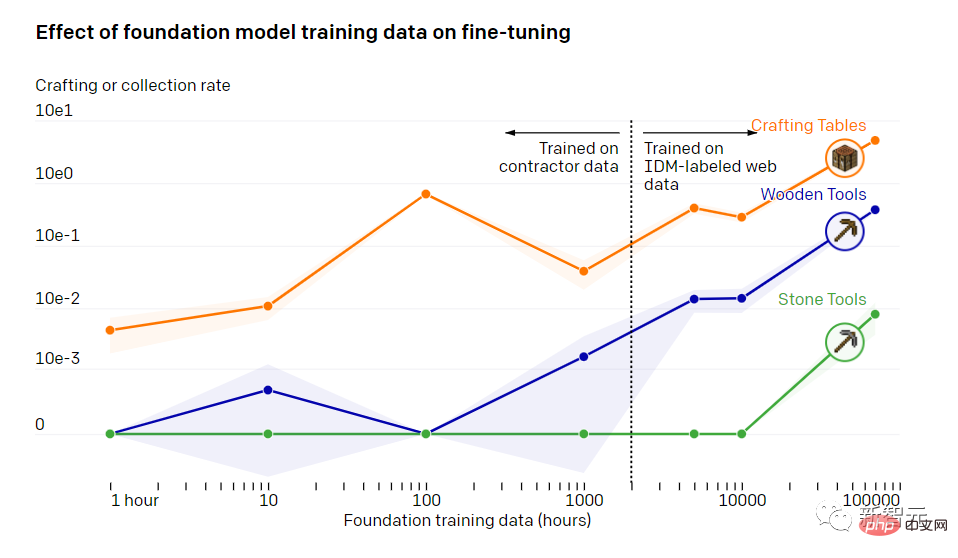

The effect of foundation model training data on fine-tuning

After training on 70,000 hours of video, OpenAI's behavioral cloning model can accomplish a variety of tasks that other models cannot.

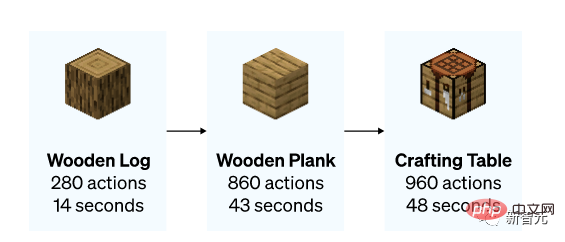

The model learned how to chop down trees to collect logs, how to craft the logs into planks, and how to craft the planks into a crafting table. This sequence takes a fairly skilled player a little under 50 seconds.

In addition to making a crafting table, the model can also swim, hunt, and eat.

It even pulls off the neat "pillar jumping" trick: placing a brick or wood block under your feet each time you jump, so that you raise a pillar as you go. This is a must-learn skill for hardcore players.

Making a crafting table (zero-shot)

Hunting (zero-shot)

Simple "pillar jumping" (zero-shot)

To have a model carry out more specific tasks, it is generally fine-tuned on a smaller, more narrowly focused dataset. OpenAI ran a study showing how well a model trained with VPT adapts to downstream datasets after fine-tuning.

The researchers invited people to play Minecraft for 10 minutes and build a house using basic materials. They hoped that this would strengthen the model's ability to perform some early-game tasks, such as building a crafting table.

After fine-tuning on this dataset, the researchers found not only that the model was much better at these early tasks, but also that it had worked out by itself how to craft both wooden tools and stone tools.

Sometimes the researchers even saw the model building crude shelters, exploring villages, and looting chests.

The whole process of making a stone pickaxe (the time marked below is the time it takes for a skilled player to perform the same task)

Making a Stone Pickaxe

Next, let's look at how OpenAI's researchers fine-tuned it further. The method they used is reinforcement learning (RL).

Most RL methods tackle hard exploration problems with random exploration priors; for example, models are often encouraged to act randomly through entropy bonuses. The VPT model should be a much better prior for RL, because emulating human behavior is likely to be more helpful than taking random actions.
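For readers unfamiliar with entropy bonuses, the idea can be shown in a few lines of Python. This is a generic illustration rather than the paper's training objective: the function names and the coefficient `beta` are assumptions chosen for the example.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def objective_with_entropy_bonus(expected_return, probs, beta=0.01):
    """Expected return plus a bonus that grows as the policy becomes more random.

    `beta` is a hypothetical coefficient chosen for illustration.
    """
    return expected_return + beta * entropy(probs)


uniform = [0.25, 0.25, 0.25, 0.25]  # acts completely at random
peaked = [0.97, 0.01, 0.01, 0.01]   # almost always picks the same action

print(entropy(uniform), entropy(peaked))
print(objective_with_entropy_bonus(1.0, uniform), objective_with_entropy_bonus(1.0, peaked))
```

With equal returns, the bonus favors the uniform policy. That helps an agent try new things, but random key presses are a poor starting point for a task that needs thousands of purposeful actions in a row.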

The researchers set the model the arduous task of obtaining a diamond pickaxe, a capability never before seen in Minecraft, and one made even harder because the entire task is performed through the native human interface.

Crafting a diamond pickaxe requires a long and complex series of subtasks. To make this task tractable, the researchers rewarded the agent for each item in the sequence.
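A minimal sketch of this kind of per-item reward is shown below. The details are assumptions made for illustration, not the paper's settings: the item list, the rule that each item is rewarded only the first time it is obtained, and the equal `reward_per_item` value are all hypothetical.

```python
# Hypothetical item sequence leading up to the diamond pickaxe.
ITEM_SEQUENCE = [
    "log", "planks", "sticks", "crafting_table", "wooden_pickaxe",
    "stone", "stone_pickaxe", "furnace", "iron_ore", "iron_ingot",
    "iron_pickaxe", "diamond", "diamond_pickaxe",
]


def shaped_reward(inventory_before, inventory_after, already_rewarded, reward_per_item=1.0):
    """Reward each item in the sequence the first time its count increases.

    `already_rewarded` is a set that is updated in place, so the agent
    cannot farm reward by collecting the same item again.
    """
    reward = 0.0
    for item in ITEM_SEQUENCE:
        gained = inventory_after.get(item, 0) > inventory_before.get(item, 0)
        if gained and item not in already_rewarded:
            reward += reward_per_item
            already_rewarded.add(item)
    return reward


rewarded = set()
r1 = shaped_reward({}, {"log": 1}, rewarded)                         # first log
r2 = shaped_reward({"log": 1}, {"log": 2}, rewarded)                 # another log
r3 = shaped_reward({"log": 2}, {"log": 1, "planks": 4}, rewarded)    # first planks
print(r1, r2, r3)
```

Rewarding intermediate items gives the agent a signal long before it ever sees a diamond, instead of a single reward at the very end of a 20-minute episode.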

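An RL policy trained from a random initialization, the standard approach, earns almost no reward. In stark contrast, the model fine-tuned from VPT not only learned how to craft a diamond pickaxe, but even reached a human-level success rate at collecting all the items along the way.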

This is the first time someone has demonstrated a computer model capable of crafting diamond tools in Minecraft.
