Search recall is the foundation of the search system and determines the upper limit of any downstream improvement. Our main challenge is how to keep adding differentiated, incremental value on top of the massive set of existing recall results. Combining multi-modal pre-training with recall opened up a new direction for us and brought a significant improvement in online results.

Multi-modal pre-training is a research focus in both academia and industry. By pre-training on large-scale data to learn the semantic correspondence between modalities, it can improve performance on a variety of downstream tasks such as visual question answering, visual reasoning, and image-text retrieval. Within the group, there is also research on and application of multi-modal pre-training. In the Taobao main search scenario, there is a natural cross-modal retrieval requirement between the Query entered by the user and the products to be recalled. In the past, however, products were represented mostly by titles and statistical features, ignoring more intuitive information such as images. Yet for queries with visual elements (such as white dress or floral dress), most users are likely drawn to the images first on the search results page.

Our solution is as follows:

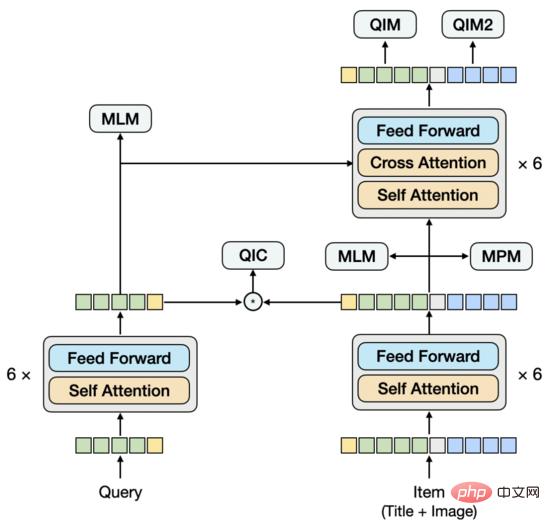

The multi-modal pre-training model needs to extract features from images and then fuse them with text features. There are three main ways to extract image features: using a model trained in the CV field to extract RoI features, Grid features, or Patch features of the image. In terms of model structure, there are two main types, depending on how image and text features are fused: single-stream and dual-stream models. In a single-stream model, image features and text features are concatenated and fed into the Encoder at an early stage. In a dual-stream model, image features and text features are fed into two independent Encoders, and their outputs are then fed into a cross-modal Encoder for fusion.

We extract image features as follows: divide the image into a sequence of patches and use ResNet to extract the image features of each patch. In terms of model structure, we first tried a single-stream structure, that is, concatenating Query, title, and image and feeding them into the Encoder. After multiple sets of experiments, we found that with this structure it is difficult to extract clean Query vectors and Item vectors as input for the downstream twin-tower vector recall task. If the unneeded modalities are masked out when extracting a given vector, inference becomes inconsistent with training. This problem is similar to directly extracting a twin-tower model from an interaction model; in our experience, such a model is not as effective as a twin-tower model trained as such. Based on this, we propose a new model structure.
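The patch step can be illustrated with a minimal Python sketch. It only shows how an image might be cut into a row-major sequence of non-overlapping patches; the function name `split_into_patches`, the grayscale list-of-lists representation, and the patch size are assumptions made for illustration, and the ResNet feature extraction applied to each patch is not shown.

```python
from typing import List

Image = List[List[float]]  # grayscale image stored as rows of pixel values


def split_into_patches(image: Image, patch_size: int) -> List[Image]:
    """Split an H x W image into non-overlapping square patches, row-major order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Take patch_size rows, and patch_size columns from each row.
            patch = [row[left:left + patch_size]
                     for row in image[top:top + patch_size]]
            patches.append(patch)
    return patches


# Toy 4 x 4 image whose pixel value encodes its position.
image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
patches = split_into_patches(image, patch_size=2)
```

In a real pipeline, each patch in the resulting sequence would be passed through the image backbone to obtain one feature vector per patch.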

where the conditioning term denotes the remaining text tokens

where the conditioning term denotes the remaining image tokens

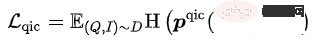

where

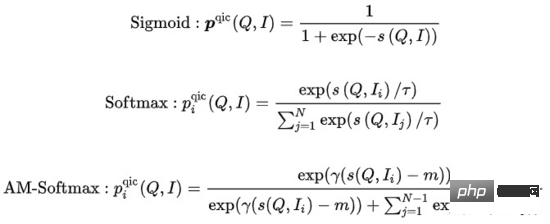

where this term can be calculated in several ways:

The quantities involved are a similarity function, a temperature hyperparameter, and, for the margin-based variant, a scaling factor and a relaxation factor m.
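To make the roles of the scaling factor and the relaxation factor concrete, here is a minimal Python sketch of an AM-Softmax style loss with cosine similarity. It is an illustration under assumptions, not the authors' implementation: the function names, the symbol `s` for the scaling factor, the default values, and the toy vectors are not from the original, and the temperature-based variant is not shown.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def am_softmax_loss(query: Sequence[float],
                    items: List[Sequence[float]],
                    positive_index: int,
                    s: float = 30.0,   # scaling factor (illustrative default)
                    m: float = 0.35    # relaxation factor / margin (illustrative default)
                    ) -> float:
    """Negative log-probability of the positive item under AM-Softmax."""
    logits = []
    for j, item in enumerate(items):
        cos = cosine(query, item)
        if j == positive_index:
            cos -= m  # the margin is subtracted from the positive pair only
        logits.append(s * cos)
    # Numerically stable log-sum-exp.
    max_logit = max(logits)
    log_sum = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    return log_sum - logits[positive_index]


query = [1.0, 0.0]
items = [[0.6, 0.8], [0.8, 0.6]]  # item 1 is the clicked (positive) item
loss_without_margin = am_softmax_loss(query, items, positive_index=1, s=10.0, m=0.0)
loss_with_margin = am_softmax_loss(query, items, positive_index=1, s=10.0, m=0.35)
```

With the margin enabled, the same positive pair incurs a larger loss, so the model has to separate the positive from the negatives by more than the margin before the loss becomes small.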

The training objective of the model is to minimize the overall loss across the pre-training tasks.

Among these five pre-training tasks, the MLM task and the MPM task sit on top of the Item tower and model the ability to recover masked Title or Image tokens from cross-modal information. There is an independent MLM task on top of the Query tower; by sharing the Encoder between the Query tower and the Item tower, the semantic relationship and gap between Query and Title are modeled. The QIC task uses the inner product of the two towers to align pre-training with the downstream vector recall task to some extent, and uses AM-Softmax to pull the representation of a Query closer to the representation of the most clicked Item under that Query while pushing it away from the other Items. The QIM task sits on top of the cross-modal Encoder and uses cross-modal information to model the matching between Query and Item. For computational reasons, the positive-to-negative sample ratio follows the usual NSP setting of 1:1. To further widen the distance between positive and negative samples, hard negative samples are constructed from the similarity results of the QIC task. The QIM2 task sits at the same position as the QIM task and explicitly models the incremental information that images bring relative to text.
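The text does not spell out how the hard negatives for QIM are picked from the QIC similarities. One plausible reading, sketched below in Python, is in-batch mining: for each Query, choose the non-matching Item with the highest inner product. The pairing of query `i` with item `i`, the function name `hardest_negatives`, and the toy vectors are all assumptions for illustration.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))


def hardest_negatives(query_vecs: List[Sequence[float]],
                      item_vecs: List[Sequence[float]]) -> List[int]:
    """For each query i (whose positive is item i), return the index of the
    most similar other item in the batch, to be used as a hard negative."""
    result = []
    for i, query in enumerate(query_vecs):
        candidates = [(dot(query, item), j)
                      for j, item in enumerate(item_vecs) if j != i]
        # max() on (similarity, index) pairs picks the highest similarity.
        result.append(max(candidates)[1])
    return result


query_vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.5]]
item_vecs = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
hard_negative_indices = hardest_negatives(query_vecs, item_vecs)
```

Each (Query, hard negative Item) pair would then serve as the negative example in the 1:1 matching task, alongside the true (Query, Item) pair.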

In large-scale information retrieval systems, the recall model sits at the bottom of the stack and must score a massive candidate set. For performance reasons, a User and Item twin-tower structure that computes the inner product of vectors is commonly used. A core issue for vector recall models is how to construct positive and negative samples and how many negatives to sample. Our solution is to treat a user's click on an Item on a page as a positive sample, sample tens of thousands of negative samples from the full product pool according to the click distribution, and use Sampled Softmax Loss to estimate, from the sampled items, the click probability of the Item over the full product pool.

Here the two quantities are the similarity function and the temperature hyperparameter.
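As a rough illustration of how the similarity function and the temperature enter the loss, here is a minimal Python sketch of a sampled softmax loss over one positive and a handful of sampled negatives. It assumes inner-product similarity and omits any correction for the sampling distribution; the function names, the temperature value, and the toy vectors are hypothetical.

```python
import math
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))


def sampled_softmax_loss(user_vec: Sequence[float],
                         pos_item_vec: Sequence[float],
                         neg_item_vecs: List[Sequence[float]],
                         temperature: float = 0.1) -> float:
    """Negative log-probability of the clicked item among the sampled items."""
    # Index 0 holds the positive item; the rest are sampled negatives.
    logits = [dot(user_vec, pos_item_vec) / temperature]
    logits += [dot(user_vec, neg) / temperature for neg in neg_item_vecs]
    # Numerically stable log-sum-exp.
    max_logit = max(logits)
    log_sum = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    return log_sum - logits[0]


user = [1.0, 0.0]
clicked = [1.0, 0.0]
loss_two_negatives = sampled_softmax_loss(
    user, clicked, [[0.0, 1.0], [0.0, 1.0]], temperature=1.0)
loss_three_negatives = sampled_softmax_loss(
    user, clicked, [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]], temperature=1.0)
```

Adding negatives raises the loss for the same positive score, which is one way to see why the number of sampled negatives matters for this kind of model.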

Following the common fine-tuning paradigm, we first tried feeding the pre-trained vectors directly into a twin-tower MLP, combined with large-scale negative sampling and Sampled Softmax, to train a multi-modal vector recall model. However, unlike typical small-scale downstream tasks, the training set of the vector recall task is huge, on the order of billions of samples. We observed that the MLP does not have enough parameters to support such training: it quickly converges, but to a poor result. In addition, the pre-trained vectors are inputs rather than parameters of the vector recall model, so they cannot be updated as training progresses. As a result, pre-training on relatively small-scale data conflicts with the downstream task on large-scale data.

There are several possible solutions. One is to integrate the pre-training model into the vector recall model. However, the pre-training model has too many parameters; combined with the sample size of the vector recall model, regular training cannot be completed within a reasonable time under limited resources. Another is to construct a parameter matrix in the vector recall model, load the pre-trained vectors into it, and update the matrix as training progresses. After investigation, we found this approach relatively expensive in terms of engineering. Based on this, we propose a model structure that models the updating of pre-trained vectors in a simple and feasible way.

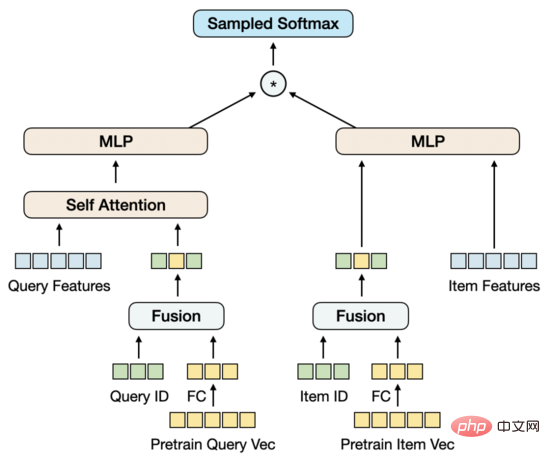

We first reduce the dimensionality of the pre-trained vectors through an FC layer. We reduce the dimensionality here rather than in pre-training because the high-dimensional vectors are still within an acceptable performance range for negative sampling, and in that case reducing dimensionality inside the vector recall task is more consistent with the training objective. At the same time, we introduce ID Embedding matrices for Query and Item, whose Embedding dimension matches that of the reduced pre-trained vector, and then fuse the ID Embedding with the pre-trained vector. The motivation for this design is to introduce enough parameters to support large-scale training data while allowing the pre-trained vectors to be adaptively updated as training progresses.
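The forward pass of this design can be sketched in a few lines of Python. The text does not say how the ID Embedding and the reduced pre-trained vector are fused, so the sketch assumes an element-wise sum; the class name `FusedTower`, the random initialization, and the dimensions are likewise hypothetical, and training (gradient updates to the FC layer and the ID Embedding) is not shown.

```python
import random
from typing import List, Sequence


def linear(vec: Sequence[float],
           weights: List[List[float]],
           bias: List[float]) -> List[float]:
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


class FusedTower:
    """Toy tower: FC-reduced pre-trained vector fused with a trainable ID embedding."""

    def __init__(self, num_ids: int, pretrained_dim: int, out_dim: int, seed: int = 0):
        rng = random.Random(seed)
        # FC layer that reduces the pre-trained vector to out_dim.
        self.fc_weights = [[rng.gauss(0.0, 0.1) for _ in range(pretrained_dim)]
                           for _ in range(out_dim)]
        self.fc_bias = [0.0] * out_dim
        # ID embedding table with the same dimension as the reduced vector.
        self.id_embedding = [[rng.gauss(0.0, 0.1) for _ in range(out_dim)]
                             for _ in range(num_ids)]

    def forward(self, entity_id: int, pretrained_vec: Sequence[float]) -> List[float]:
        reduced = linear(pretrained_vec, self.fc_weights, self.fc_bias)
        id_vec = self.id_embedding[entity_id]
        # Assumed fusion: element-wise sum of the two vectors.
        return [r + e for r, e in zip(reduced, id_vec)]


tower = FusedTower(num_ids=3, pretrained_dim=4, out_dim=2, seed=0)
pretrained = [1.0, 0.5, -0.5, 2.0]
vec_a = tower.forward(0, pretrained)
vec_b = tower.forward(1, pretrained)
```

Because the ID Embedding is a trainable parameter, two entities with the same frozen pre-trained vector can still end up with different representations, which is the sense in which the pre-trained vector is "updated" during training.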

When only the ID and pre-trained vectors are fused, the model not only outperforms the twin-tower MLP that uses only pre-trained vectors, but also outperforms the Baseline model MGDSPR, which contains more features. Introducing more features on top of this improves the results further.

Recall@K: The evaluation dataset consists of the data from the day after the training set. First, the click and transaction results of different users under the same Query are aggregated into a target set, and then the proportion of the model's Top K predictions that hit this set is calculated.
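As an illustration of the metric, here is a minimal Python sketch. It assumes the hit count over the Top K predictions is divided by the size of the aggregated target set, which is the conventional definition of recall; the function name `recall_at_k` and the sample item IDs are hypothetical.

```python
from typing import List, Set


def recall_at_k(predicted: List[str], target: Set[str], k: int) -> float:
    """Share of the target set that appears in the top-k predictions.

    `predicted` is the model's ranked list for one Query (no duplicates);
    `target` is the aggregated set of clicked or purchased items for that Query.
    """
    if not target:
        return 0.0
    hits = sum(1 for item in predicted[:k] if item in target)
    return hits / len(target)


predicted_items = ["item_a", "item_b", "item_c", "item_d"]
target_items = {"item_b", "item_d", "item_z"}
recall_at_2 = recall_at_k(predicted_items, target_items, k=2)
recall_at_4 = recall_at_k(predicted_items, target_items, k=4)
```

The per-Query values would then be averaged over all Queries in the evaluation set.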

For a vector recall model, once Recall@K has risen to a certain level, the relevance between Query and Item also needs attention. A model with poor relevance, even if it improves search efficiency, will degrade the user experience and lead to more complaints and negative public feedback as Bad Cases increase. We use an offline model consistent with the online relevance model to evaluate the relevance between Query and Item, and between Query and Item category.

We select a product pool at the 1-billion scale from some categories to construct the pre-training dataset.

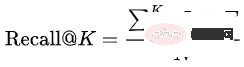

Our Baseline model is an optimized FashionBert with the QIM and QIM2 tasks added. When extracting Query and Item vectors, we apply Mean Pooling over the non-Padding Tokens only. The following experiments explore the gains from modeling with two towers relative to a single tower, and show the role of key components through ablation experiments.
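Mean Pooling over non-Padding Tokens can be sketched as follows. This is a minimal plain-Python illustration; the function name `masked_mean_pool` and the 0/1 attention-mask convention (1 for real tokens, 0 for padding) are assumptions.

```python
from typing import List, Sequence


def masked_mean_pool(token_vecs: List[Sequence[float]],
                     attention_mask: Sequence[int]) -> List[float]:
    """Average token vectors, skipping positions whose mask value is 0 (padding)."""
    kept = [vec for vec, keep in zip(token_vecs, attention_mask) if keep]
    if not kept:
        raise ValueError("at least one non-padding token is required")
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


# The third token is padding and must not influence the pooled vector.
token_vecs = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
attention_mask = [1, 1, 0]
pooled = masked_mean_pool(token_vecs, attention_mask)
```

Dividing by the number of real tokens rather than the padded sequence length keeps the pooled vector independent of how much padding a sequence received.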

From these experiments, we can draw the following conclusions:

We select clicked pages at the 1-billion scale to construct the vector recall dataset. Each page contains 3 clicked Items as positive samples, and 10,000 negative samples are sampled from the product pool according to the click distribution. Beyond this, further increasing the amount of training data or the number of sampled negatives brought no significant improvement.
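Sampling negatives according to the click distribution can be sketched with the standard library. The sketch assumes sampling with replacement and excludes the page's positive Items from the candidates, neither of which is stated in the text; the function name `sample_negatives`, the item IDs, and the click counts are hypothetical, and the sample size is kept tiny for readability.

```python
import random
from typing import Dict, List, Optional, Set


def sample_negatives(click_counts: Dict[str, int],
                     positives: Set[str],
                     num_negatives: int,
                     seed: Optional[int] = None) -> List[str]:
    """Draw negatives from the product pool with probability proportional to clicks."""
    rng = random.Random(seed)
    candidates = [item for item in click_counts if item not in positives]
    weights = [click_counts[item] for item in candidates]
    # random.choices samples with replacement, weighted by click count.
    return rng.choices(candidates, weights=weights, k=num_negatives)


click_counts = {"item_a": 100, "item_b": 10, "item_c": 1, "item_d": 0}
page_positives = {"item_a"}
negatives = sample_negatives(click_counts, page_positives, num_negatives=50, seed=42)
```

Frequently clicked Items appear as negatives more often under this scheme, which counteracts the popularity bias that clicked positives would otherwise introduce.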

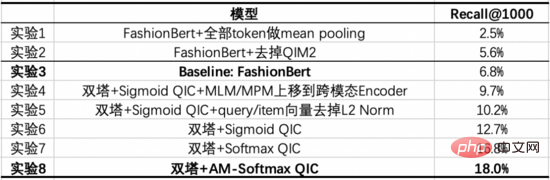

Our Baseline model is the MGDSPR model of the main search. The following experiments explore the gains from combining multi-modal pre-training with vector recall relative to the Baseline, and show the role of key components through ablation experiments.

From these experiments, we can draw the following conclusions:

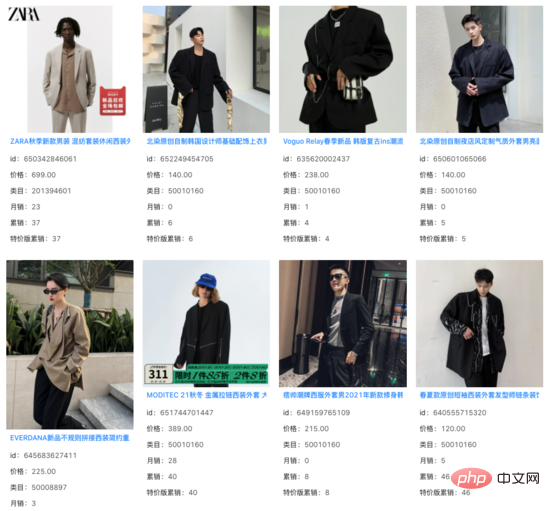

Among the Top 1000 results of the vector recall model, we filtered out the Items that the online system can already recall, and found that the relevance of the remaining incremental results is basically unchanged. Across a large number of Queries, we see that these incremental results capture image information beyond the product Title and help bridge the semantic gap between Query and Title.

Query: handsome suit

Query: women’s waist-cinching shirt

To meet the application requirements of the main search scenario, we proposed a text-image pre-training model whose structure feeds Query and Item twin towers into a cross-modal Encoder, where the Item tower is a single-stream model over both image and text. Through the Query-Item and Query-Image matching tasks, together with the Query-Item multi-classification task modeled by the inner product of the Query and Item towers, pre-training is brought closer to the downstream vector recall task. At the same time, the update of pre-trained vectors is modeled within vector recall. With limited resources, pre-training on a relatively small amount of data can still improve the performance of downstream tasks that use massive data.

Other main search scenarios, such as product understanding, relevance, and ranking, also need multi-modal technology. We have taken part in exploring these scenarios as well, and we believe multi-modal technology will benefit more and more scenarios in the future.

Taobao main search recall team: the team is responsible for the recall and coarse-ranking stages of the main search pipeline. Its current main technical directions are multi-objective personalized vector recall based on full-space samples, multi-modal recall based on large-scale pre-training, similar-Query semantic rewriting based on contrastive learning, and coarse-ranking models.
