Translator | Bugatti

Reviewer | Sun Shujuan

Currently, there are no standard practices for building and managing machine learning (ML) applications. Many machine learning projects are poorly organized, hard to reproduce, and tend to fail in the long run. We therefore need a process that helps maintain quality, sustainability, robustness, and cost control throughout the machine learning lifecycle.

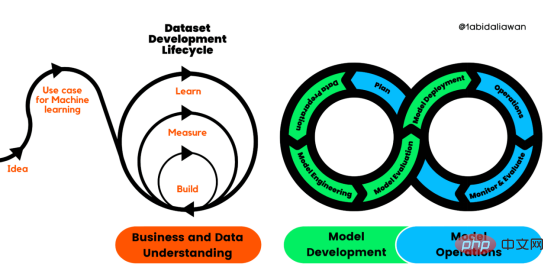

Figure 1. Machine Learning Development Lifecycle Process

The Cross-Industry Standard Process for the development of Machine Learning applications with Quality assurance methodology (CRISP-ML(Q)) is an upgraded version of CRISP-DM that is designed to ensure the quality of machine learning products.

CRISP-ML(Q) has six phases:

1. Business and Data Understanding

2. Data Preparation

3. Model Engineering

4. Model Evaluation

5. Model Deployment

6. Monitoring and Maintenance

These phases require continuous iteration and exploration to build a better solution. Although the framework defines an order, the output of a later phase can determine whether we need to revisit an earlier one.

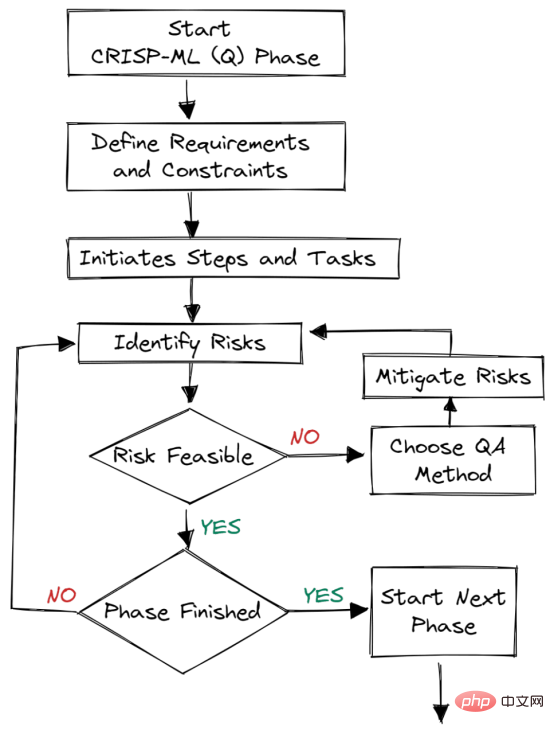

Figure 2. Quality assurance at each stage

Quality assurance is built into every phase of the framework. Each phase defines requirements and constraints, such as performance metrics, data quality requirements, and robustness, which help reduce the risks that threaten the success of a machine learning application. This is achieved by continuously monitoring and maintaining the entire system.

For example, in an e-commerce company, data drift and concept drift degrade a model over time. Without a system that monitors these changes, the company will suffer losses, such as lost customers.
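One common way to watch for data drift is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below is only an illustration and is not prescribed by CRISP-ML(Q); the function name, the bin count, and the alert threshold of 0.25 (a widely used rule of thumb) are assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 for constant features

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_shares = bin_shares(reference)
    cur_shares = bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


# Hypothetical data: order values at training time vs. a shifted production sample.
training_values = [i / 100 for i in range(1000)]
production_values = [v + 5.0 for v in training_values]

psi = population_stability_index(training_values, production_values)
if psi > 0.25:  # assumed alert threshold
    print(f"PSI={psi:.2f}: significant drift, investigate or retrain")
```

PSI only covers changes in the input distribution. Concept drift, where the relationship between inputs and target changes, usually has to be detected through model performance on freshly labelled data.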

The first phase is business and data understanding. At the start of the development process, we determine the project scope, success criteria, and feasibility of the ML application. After that, we begin data collection and quality verification, which is a long and challenging process.

Scope: What we hope to achieve with machine learning, for example retaining customers or reducing operating costs through automation.

Success Criteria: We must define clear, measurable success criteria at three levels: business, machine learning (statistical metrics), and economic (KPIs).

Feasibility: We need to ensure data availability, suitability for machine learning applications, legal constraints, robustness, scalability, interpretability, and resource requirements.

Data Collection: Collect the data, version it for reproducibility, and ensure a continuous flow of real and generated data.

Data Quality Verification: Ensure quality by maintaining data descriptions, requirements and validations.

To ensure quality and reproducibility, we need to record the statistical properties of the data and the data generation process.
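As a minimal sketch of what recording statistical properties and versioning data could look like, the example below profiles each numeric column and derives a content hash that can serve as a dataset version. The column names, the sample rows, and the helper names `profile_column` and `dataset_fingerprint` are hypothetical, and the sketch assumes every column has at least one non-missing value.

```python
import hashlib
import json
import statistics
from typing import Dict, List, Optional


def profile_column(values: List[Optional[float]]) -> Dict[str, float]:
    """Summarize one numeric column, counting None as a missing value."""
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "mean": statistics.fmean(present),
        "stdev": statistics.stdev(present) if len(present) > 1 else 0.0,
        "min": min(present),
        "max": max(present),
    }


def dataset_fingerprint(rows: List[Dict[str, Optional[float]]]) -> str:
    """Hash a canonical JSON form of the rows so identical data gets the same ID."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


# Hypothetical sample of collected data.
rows = [
    {"age": 34.0, "basket_value": 120.5},
    {"age": None, "basket_value": 80.0},
    {"age": 51.0, "basket_value": 43.25},
]

record = {
    "fingerprint": dataset_fingerprint(rows),
    "columns": {
        name: profile_column([row[name] for row in rows]) for name in rows[0]
    },
}
print(json.dumps(record, indent=2))
```

Storing such a record next to each dataset snapshot makes it possible to tell later which data a model was trained on and whether its statistical properties have changed.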

The second phase is straightforward: we prepare the data for the modeling phase. This includes data selection, data cleaning, feature engineering, data augmentation, and normalization.

1. We start with feature selection, data selection, and handling of imbalanced classes through oversampling or undersampling.

2. Then, focus on reducing noise and handling missing values. For quality assurance purposes, we will add data unit tests to reduce erroneous values.

3. Depending on the model, we perform feature engineering and data augmentation such as one-hot encoding and clustering.

4. Normalize and scale the data. This reduces the risk of biased features.

To ensure reproducibility, we create pipelines for data modeling, transformation, and feature engineering.
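The preparation steps above can be sketched as a small pipeline of composable functions. This is an illustrative, standard-library-only example rather than a recommended implementation; the step names, the median imputation strategy, and the sample columns are assumptions.

```python
from statistics import median
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
Step = Callable[[List[Row]], List[Row]]


def impute_median(column: str) -> Step:
    """Replace None in a numeric column with the median of the observed values."""
    def step(rows: List[Row]) -> List[Row]:
        fill = median(r[column] for r in rows if r[column] is not None)
        return [{**r, column: fill if r[column] is None else r[column]} for r in rows]
    return step


def one_hot(column: str) -> Step:
    """Replace a categorical column with one 0/1 column per observed category."""
    def step(rows: List[Row]) -> List[Row]:
        categories = sorted({r[column] for r in rows})
        out = []
        for r in rows:
            new = {k: v for k, v in r.items() if k != column}
            for cat in categories:
                new[f"{column}={cat}"] = 1 if r[column] == cat else 0
            out.append(new)
        return out
    return step


def min_max_scale(column: str) -> Step:
    """Rescale a numeric column to the range [0, 1]."""
    def step(rows: List[Row]) -> List[Row]:
        lo = min(r[column] for r in rows)
        hi = max(r[column] for r in rows)
        span = (hi - lo) or 1.0  # constant columns map to 0.0
        return [{**r, column: (r[column] - lo) / span} for r in rows]
    return step


def check_no_missing(rows: List[Row]) -> List[Row]:
    """Data unit test: fail fast if any value is still missing."""
    for i, r in enumerate(rows):
        missing = [k for k, v in r.items() if v is None]
        if missing:
            raise ValueError(f"row {i} has missing values in {missing}")
    return rows


def run_pipeline(rows: List[Row], steps: List[Step]) -> List[Row]:
    """Apply each step in order, so the same preparation can be replayed later."""
    for step in steps:
        rows = step(rows)
    return rows


# Hypothetical raw data with one missing value and one categorical column.
raw = [
    {"age": 20.0, "channel": "web"},
    {"age": None, "channel": "app"},
    {"age": 40.0, "channel": "web"},
]
prepared = run_pipeline(
    raw,
    [impute_median("age"), check_no_missing, one_hot("channel"), min_max_scale("age")],
)
print(prepared)
```

In a real project, the statistics used for imputation and scaling would be computed on the training split only and then reused for validation and production data, to avoid leaking information.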

The constraints and requirements from the business and data understanding phase shape the model engineering phase. We need to understand the business problem and how we will develop machine learning models to solve it. We focus on model selection, optimization, and training, while ensuring that we meet the requirements for model performance, robustness, scalability, and interpretability, and that storage and computing resources are used efficiently.

1. Research model architectures and similar business problems.

2. Define model performance metrics.

3. Select the model.

4. Incorporate domain knowledge by involving experts.

5. Train the model.

6. Compress and ensemble models.

To ensure quality and reproducibility, we will store and version control model metadata, such as model architecture, training and validation data, hyperparameters, and environment descriptions.
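A minimal sketch of such a metadata record is shown below, assuming the metadata is kept as JSON next to the model artifact. The `ModelRecord` class, its field names, and the dataset identifiers are hypothetical; dedicated experiment-tracking tools cover the same ground in practice.

```python
import hashlib
import json
import platform
import sys
from dataclasses import asdict, dataclass, field
from typing import Dict


def describe_environment() -> Dict[str, str]:
    """Capture a small description of the runtime environment."""
    return {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }


@dataclass
class ModelRecord:
    """Metadata worth storing alongside a trained model artifact."""
    architecture: str
    hyperparameters: Dict[str, float]
    training_data_id: str
    validation_data_id: str
    environment: Dict[str, str] = field(default_factory=describe_environment)

    def version_id(self) -> str:
        """Derive a stable, short ID from the record's contents."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


record = ModelRecord(
    architecture="gradient_boosted_trees",
    hyperparameters={"learning_rate": 0.1, "max_depth": 6},
    training_data_id="train-2024-01",    # hypothetical dataset identifiers
    validation_data_id="valid-2024-01",
)

serialized = json.dumps({"version": record.version_id(), **asdict(record)}, indent=2)
print(serialized)
```

Because the version ID is derived from the contents, changing any hyperparameter or dataset reference yields a new ID, which makes it harder to overwrite one experiment with another by accident.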

Finally, we track ML experiments and build ML pipelines to make the training process repeatable.

Model evaluation is the phase where we test the model and ensure it is ready for deployment.

Every step of the evaluation phase is documented for quality assurance.
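One way to make the readiness decision explicit and documentable is to compare measured metrics against the success criteria defined in the first phase. The following is a sketch under assumptions: the `Criterion` class, the metric names, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Criterion:
    """A single measurable success criterion."""
    metric: str
    threshold: float
    higher_is_better: bool = True

    def passed(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


def evaluate_release(metrics: Dict[str, float], criteria: List[Criterion]) -> Dict[str, bool]:
    """Return a pass/fail entry per criterion; a missing metric counts as a failure."""
    return {
        c.metric: c.metric in metrics and c.passed(metrics[c.metric])
        for c in criteria
    }


# Hypothetical criteria and measurements on a held-out test set.
criteria = [
    Criterion("recall", 0.80),
    Criterion("latency_ms", 50.0, higher_is_better=False),
]
metrics = {"recall": 0.84, "latency_ms": 62.0}

report = evaluate_release(metrics, criteria)
ready_for_deployment = all(report.values())
print(report, ready_for_deployment)
```

The resulting report can be stored with the model metadata so that each evaluation decision remains traceable.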

Model deployment is the stage where we integrate machine learning models into existing systems. The model can be deployed on servers, browsers, software and edge devices. Predictions from the model are available in BI dashboards, APIs, web applications and plug-ins.

Model deployment process:

Models in production environments require continuous monitoring and maintenance. We monitor model staleness (how outdated the model has become), hardware performance, and software performance.

Continuous monitoring is the first part of the process; if performance drops below a threshold, a decision is made automatically to retrain the model on new data. Furthermore, the maintenance part is not limited to model retraining. It requires decision-making mechanisms, acquiring new data, updating software and hardware, and improving ML processes based on business use cases.
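The threshold rule described above can be sketched as a rolling-window monitor. This is a simplified illustration that assumes ground-truth labels eventually arrive for predictions; the class name, window size, and threshold are assumptions.

```python
from collections import deque
from typing import Deque


class PerformanceMonitor:
    """Track rolling accuracy over the most recent labelled predictions."""

    def __init__(self, threshold: float, window: int = 100, min_samples: int = 20) -> None:
        self.threshold = threshold
        self.min_samples = min_samples
        self.outcomes: Deque[bool] = deque(maxlen=window)  # old outcomes drop off

    def record(self, prediction: int, actual: int) -> None:
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> float:
        if not self.outcomes:
            raise ValueError("no outcomes recorded yet")
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Wait for enough evidence before raising the flag.
        if len(self.outcomes) < self.min_samples:
            return False
        return self.accuracy() < self.threshold


monitor = PerformanceMonitor(threshold=0.8, window=50, min_samples=10)

# Simulated stream: the model is right at first, then starts failing.
for _ in range(30):
    monitor.record(prediction=1, actual=1)
healthy = monitor.needs_retraining()

for _ in range(30):
    monitor.record(prediction=1, actual=0)
degraded = monitor.needs_retraining()

print(healthy, degraded)
```

In a production system, the flag would typically start a retraining pipeline or notify the team rather than just print a value, and similar monitors would watch data quality and hardware and software metrics.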

In short, it is continuous integration, training and deployment of ML models.

Training and validating models is a small part of ML applications. Turning an initial idea into reality requires several processes. In this article we introduce CRISP-ML(Q) and how it focuses on risk assessment and quality assurance.

We first define the business goals, collect and clean data, build the model, verify the model with a test data set, and then deploy it to the production environment.

The key components of this framework are ongoing monitoring and maintenance. We will monitor data and software and hardware metrics to determine whether to retrain the model or upgrade the system.

If you are new to machine learning operations and want to learn more, read the free MLOps course reviewed by DataTalks.Club. You'll gain hands-on experience in all six phases, understanding the practical implementation of CRISP-ML.

Original title: Making Sense of CRISP-ML(Q): The Machine Learning Lifecycle Process, Author: Abid Ali Awan
