Machine learning models only deliver value once they are deployed in a production environment, which is why a sound deployment process is indispensable.

Machine learning has become an integral part of many industries, from healthcare to finance and beyond. It gives us the tools we need to gain meaningful insights and make better decisions. However, even the most accurate and well-trained machine learning models are useless if not deployed in a production environment. This is where machine learning model deployment comes in.

Deploying machine learning models can be a daunting task, even for experienced engineers. From choosing the right deployment platform to ensuring your model is optimized for production, there are many challenges to overcome. But fear not; in this article, you'll learn advanced tips and techniques to help you optimize your machine learning model deployment process and avoid common pitfalls.

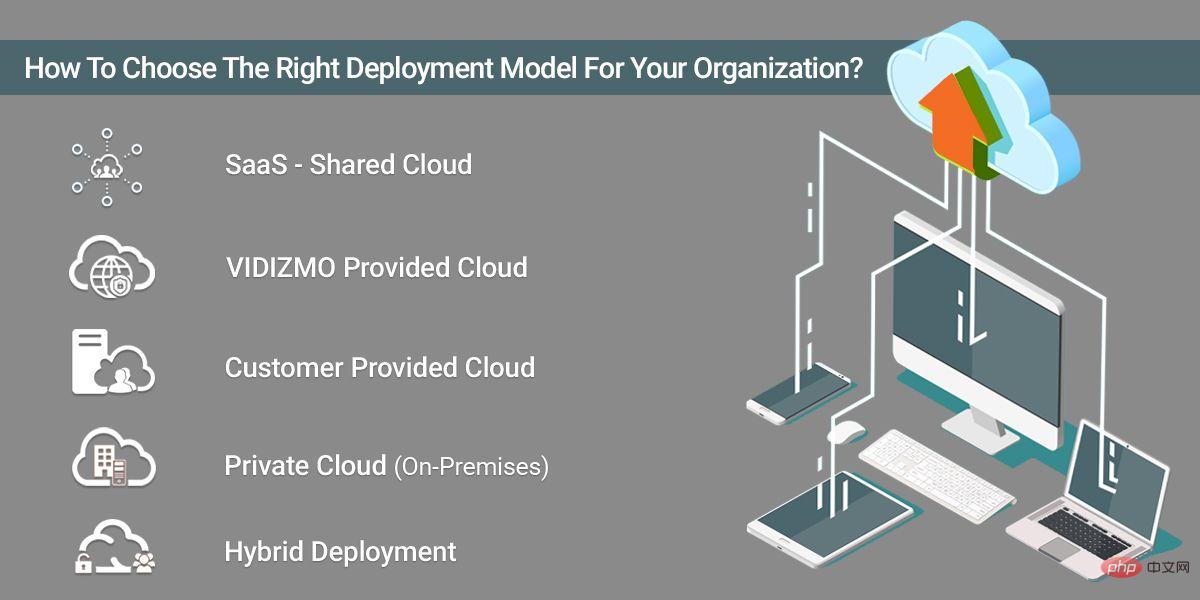

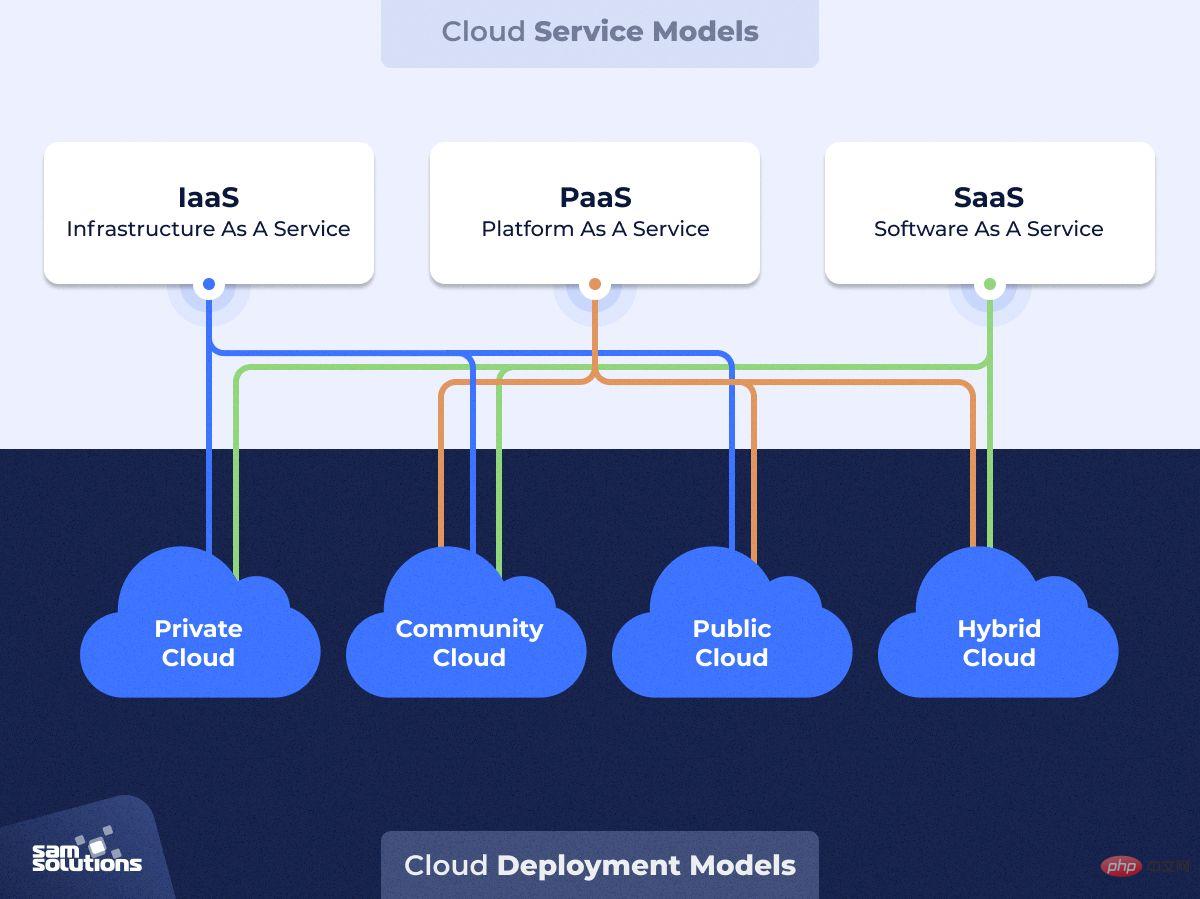

There are many different platforms to choose from when deploying machine learning models. The right platform for your project depends on a variety of factors, including your budget, the size and complexity of your model, and the specific requirements of your deployment environment.

Some popular deployment platforms include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each of these platforms provides a wide range of tools and services to help you deploy and manage your machine learning models.

An important consideration when choosing a deployment platform is the level of control you have over your deployment environment. Some platforms, such as AWS, allow you to highly customize your environment, while others may be more restrictive.

Another consideration is deployment cost. Some platforms offer pay-as-you-go pricing models, while others require a monthly subscription or upfront payment.

Overall, it’s important to choose a deployment platform that meets your specific needs and budget. Don’t be afraid to try different platforms to find the one that works best for you.

After selecting a deployment platform, the next step is to optimize the model for production. This involves several key steps, including:

Reduce model complexity: Complex models may perform well in training, but can be slow and resource-intensive to deploy. By simplifying the model architecture and reducing the number of parameters, you can improve performance and reduce deployment time.

Ensure data consistency: In order for your model to perform consistently in a production environment, it is important to ensure that the input data is consistent and of high quality. This may involve preprocessing your data to remove outliers or handle missing values.

Optimize hyperparameters: Hyperparameters are settings that control the behavior of a machine learning model. By tuning these hyperparameters, you can improve model performance and reduce deployment time.

Testing and Validation: Before deploying a model, it is important to test and validate its performance in a production-like environment. This can help you identify and resolve any issues before they cause production problems.

Following these steps will ensure that your machine learning model is optimized for production and performs consistently in your deployment environment.
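To make the data consistency step more concrete, here is a minimal sketch of a cleaning function for a single numeric feature. The function name `clean_feature`, the median fill strategy, and the clipping bounds are illustrative assumptions, not a prescribed method; in practice the fill value and bounds would usually be computed on the training data and reused unchanged in production.

```python
import math
from statistics import median


def clean_feature(values, lower, upper):
    """Fill missing values with the median and clip outliers to [lower, upper].

    Hypothetical helper for illustration; `lower` and `upper` are assumed
    to be known valid bounds for the feature.
    """
    # Keep only usable values (neither None nor NaN) to compute the fill value.
    present = [v for v in values if v is not None and not math.isnan(v)]
    if not present:
        raise ValueError("feature has no usable values")
    fill = median(present)

    cleaned = []
    for v in values:
        if v is None or math.isnan(v):
            v = fill  # handle missing values
        cleaned.append(min(max(v, lower), upper))  # clip outliers
    return cleaned


ages = [34.0, None, 29.0, 410.0, float("nan"), 51.0]
print(clean_feature(ages, lower=0.0, upper=120.0))
# prints [34.0, 42.5, 29.0, 120.0, 42.5, 51.0]
```

Applying the same function at training time and at prediction time is one simple way to keep the two pipelines consistent.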

After optimizing your model for deployment, it’s time to choose the deployment strategy that best suits your use case. Some common deployment strategies include:

API-based deployment: In this strategy, your machine learning model is deployed as a web service accessible through an API. This approach is typically used in applications that require real-time predictions.

Container-based deployment: Containerization involves packaging your machine learning model and its dependencies into a lightweight container that can be easily deployed to any environment. This approach is typically used for large-scale deployments or applications that need to run locally.

Serverless Deployment: In a serverless deployment, your machine learning model is deployed to a serverless platform such as AWS Lambda or Google Cloud Functions. For applications with variable requirements, this approach can be a cost-effective and scalable option.
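As a rough illustration of the API-based strategy, the sketch below wraps a model behind a small JSON endpoint using only the Python standard library. Everything specific here is an assumption made for the example: the `/predict` path, the `features` field, the three-feature input, and the `predict` function, which is a fixed linear scorer standing in for a real trained model. A production service would more likely use a dedicated web framework and a proper model loader.

```python
import json
from wsgiref.simple_server import make_server


def predict(features):
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = [0.5, -0.25, 1.0]
    return sum(w * x for w, x in zip(weights, features))


def handle_request(body: bytes):
    """Turn a JSON request body into an (HTTP status, JSON response) pair."""
    try:
        features = json.loads(body)["features"]
        if len(features) != 3:
            raise ValueError("expected exactly 3 features")
        score = predict([float(x) for x in features])
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed JSON, a missing field, or bad values all end up here.
        return "400 Bad Request", json.dumps({"error": str(exc)})
    return "200 OK", json.dumps({"prediction": score})


def app(environ, start_response):
    """WSGI entry point that accepts POST requests on /predict."""
    headers = [("Content-Type", "application/json")]
    if environ["REQUEST_METHOD"] != "POST" or environ.get("PATH_INFO") != "/predict":
        start_response("404 Not Found", headers)
        return [b'{"error": "not found"}']
    length = int(environ.get("CONTENT_LENGTH") or 0)
    status, payload = handle_request(environ["wsgi.input"].read(length))
    start_response(status, headers)
    return [payload.encode("utf-8")]


def serve(host="127.0.0.1", port=8000):
    """Run the development server; blocks until interrupted."""
    make_server(host, port, app).serve_forever()
```

Calling `serve()` starts a local development server; posting `{"features": [2, 4, 1]}` to `/predict` should then return a JSON body containing the prediction. Keeping `handle_request` separate from the WSGI plumbing makes the prediction logic easy to test without starting a server.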

Best Practices for Model Deployment

No matter which deployment strategy you choose, there are some best practices you should follow to ensure a smooth deployment process:

Select the right deployment method: There are multiple deployment methods available, including cloud-based solutions (such as Amazon SageMaker and Microsoft Azure), container-based solutions (such as Docker and Kubernetes), and on-premises solutions. Choose the deployment method that best suits your organization's needs.

Containerize your model: Containerization allows you to package your model and all of its dependencies into a container that can be easily deployed and scaled. This simplifies the deployment process and ensures consistency across different environments.

Use Version Control: Version control is essential for tracking code changes and ensuring that you can roll back to a previous version if necessary. Use a version control system like Git to track changes to your code and models.

Automate deployment: Automating the deployment process can help you reduce errors and ensure consistency across different environments. Use tools like Jenkins or CircleCI to automate the deployment process.

Implement Security Measures: Machine learning models are vulnerable to attacks, so it is important to implement security measures such as authentication and encryption to protect your model and data.

Continuously monitor performance: Model monitoring is critical to identifying and resolving performance issues. Continuously monitor the model's performance and make changes as needed to improve its accuracy and reliability.

Following these best practices ensures that your machine learning models are deployed effectively and efficiently, and that they continue to run optimally in production environments.
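One possible way to add the authentication mentioned under security measures is to have clients sign each request body with a shared secret. The sketch below uses HMAC-SHA256 from the Python standard library; the function names and the idea of sending the signature alongside the request are assumptions for illustration, and this covers request authentication only, not transport encryption, which would typically come from TLS.

```python
import hashlib
import hmac


def sign(body: bytes, secret: bytes) -> str:
    """Return the hex HMAC-SHA256 signature of a request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_authentic(body: bytes, signature: str, secret: bytes) -> bool:
    """Check a client-supplied signature against the expected one.

    compare_digest runs in constant time, which avoids leaking
    information about the expected signature through timing.
    """
    expected = sign(body, secret)
    return hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8"))


# Hypothetical secret for the example; a real one would come from a secret store.
secret = b"example-shared-secret"
body = b'{"features": [2, 4, 1]}'

signature = sign(body, secret)                      # computed by the client
print(is_authentic(body, signature, secret))        # True
print(is_authentic(body + b" ", signature, secret)) # False: body was altered
```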

Model monitoring involves tracking and analyzing the performance of machine learning models in a production environment. This allows you to identify and diagnose problems with your model, such as decreased accuracy or changes in data distribution.

When deploying a machine learning model, you should monitor several key metrics, including:

Prediction Accuracy: This measures how often your model correctly predicts the target variable in your dataset.

Precision and Recall: These metrics are commonly used to evaluate binary classification models. Precision is the fraction of predicted positives that are actually positive, while recall is the fraction of actual positives that the model identifies. Together they capture the trade-off between avoiding false positives and avoiding false negatives.

F1 Score: The F1 score is the harmonic mean of precision and recall, providing a single overall measure of model performance.

Data Drift: Data drift occurs when the distribution of input data changes over time, which can have a negative impact on model performance.

Latency and Throughput: Latency measures how long your model takes to return a prediction for a single request, while throughput measures how many predictions it can generate per unit of time.
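The classification metrics above can be computed directly from logged predictions once the true labels become available. The following is a minimal sketch for the binary case, assuming labels are encoded as 0 and 1; the function name and sample data are made up for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Return (precision, recall, f1) for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 1]

precision, recall, f1 = binary_metrics(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# prints precision=0.60 recall=0.75 f1=0.67
```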

By monitoring these metrics, you can identify performance issues early and take steps to improve your model's performance over time. This may involve retraining your model based on updated data, modifying your model architecture, or fine-tuning your hyperparameters.
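Data drift can be checked without labels by comparing the distribution of a feature in production against its distribution at training time. The sketch below uses the Population Stability Index (PSI), which is one common choice among several and is not specified by this article. The bin count, the small floor used to avoid taking the logarithm of zero, and the often-quoted rule of thumb that values above roughly 0.2 indicate significant drift are all assumptions to adjust for your data.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # constant baseline: everything falls into one bin

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range go into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]  # production values, shifted upward

print(psi(baseline, baseline))       # 0.0: identical distributions
print(psi(baseline, shifted) > 0.2)  # True: strong drift
```

A check like this can run on a schedule for each input feature, with a high score triggering an alert or a retraining job.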

There are a variety of tools and platforms available for model monitoring, including open source libraries such as TensorFlow Model Analysis and commercial platforms such as Seldon and Algorithmia. By leveraging these tools, you can automate the model monitoring process and ensure your machine learning models run optimally in production.

Machine learning model deployment is a key component of the machine learning development process. It's important to ensure that your models are deployed effectively and efficiently, and that they continue to run optimally in production environments.

In this article, you learned the basics of machine learning model deployment, including the different deployment methods available, the importance of model monitoring, and best practices and practical tips for deploying your models effectively.

Remember that effective machine learning model deployment requires a combination of technical skills, best practices, and an understanding of the business context in which the model is deployed.

By following the best practices outlined in this article and continuously monitoring your model's performance, you can ensure that your machine learning models have a positive impact on your organization's goals.
