GPT4All is a chatbot trained on a large amount of clean assistant data (including code, stories, and conversations). The data includes ~800k examples generated by GPT-3.5-Turbo. The model is built on LLaMA and can run on M1 Macs, Windows, and other environments. As its name suggests, perhaps the time has come when everyone can use a personal GPT.

Since OpenAI released ChatGPT, chatbots have become increasingly popular in recent months.

Although ChatGPT is powerful, OpenAI is very unlikely to open-source it. Many people are working on open-source alternatives, such as LLaMA, which Meta released some time ago. LLaMA is a family of models ranging from 7 billion to 65 billion parameters. Among them, the 13-billion-parameter LLaMA model can outperform the 175-billion-parameter GPT-3 "on most benchmarks".

The release of LLaMA has benefited many researchers. For example, Stanford applied instruction tuning to LLaMA and trained a new 7-billion-parameter model called Alpaca (based on LLaMA 7B). The reported results suggest that Alpaca, a lightweight model with only 7B parameters, performs comparably to very large language models such as GPT-3.5.

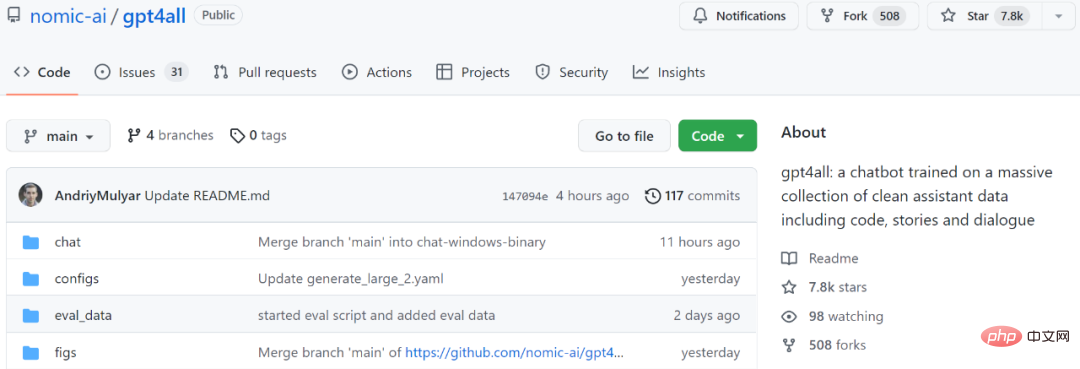

Another example is GPT4All, the model introduced in this article, which is also a new 7B language model based on LLaMA. Two days after the project launched, it had already passed 7.8k GitHub stars.

Project address: https://github.com/nomic-ai/gpt4all

Put simply, GPT4All is trained on ~800k pieces of data generated by GPT-3.5-Turbo, including text questions, story descriptions, multi-turn dialogue, and code.

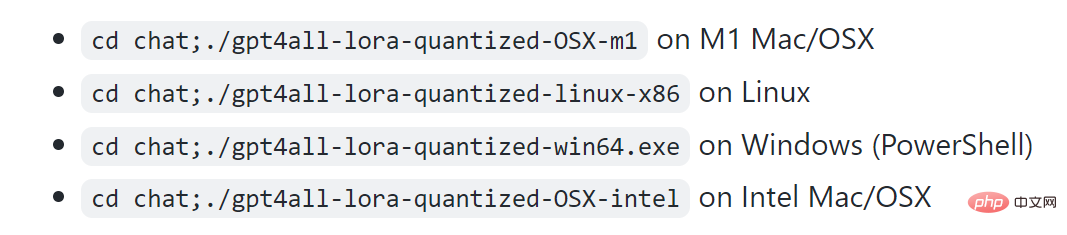

According to the project page, the model can run on M1 Macs, Windows, and other environments.

Let’s look at the results first. As shown in the figure below, users can converse with GPT4All freely, for example by asking the model: "Can I run a large language model on a laptop?" GPT4All answers: "Yes, you can use a laptop to train and test neural networks or other machine learning models for natural languages (such as English or Chinese). Importantly, you need enough available memory (RAM) to accommodate the size of these models..."

Next, if you don't know how much memory is needed, you can go on to ask GPT4All, and it will give an answer. Judging from the results, GPT4All handles multi-turn dialogue quite well.

Real-time sampling on M1 Mac

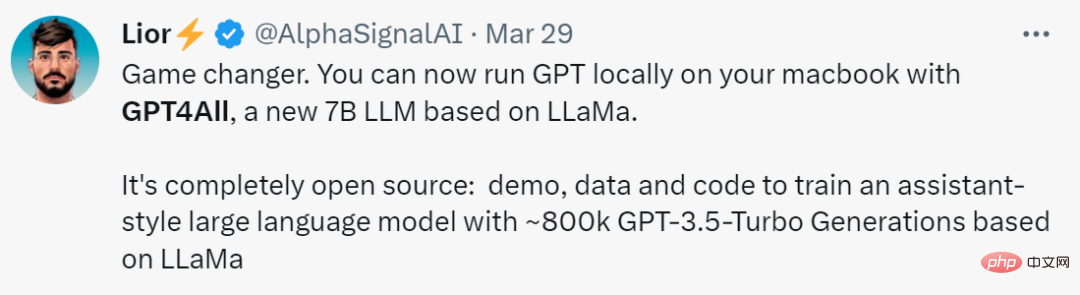

Some people call this research "game-changing": with GPT4All, you can now run GPT locally on a MacBook.

Like GPT-4, GPT4All also comes with a "technical report".

Technical report address: https://s3.amazonaws.com/static.nomic.ai/gpt4all/2023_GPT4All_Technical_Report.pdf

This preliminary technical report briefly describes how GPT4All was built. The researchers released the collected data, data wrangling procedures, training code, and final model weights to promote open research and reproducibility. They also released a 4-bit quantized version of the model, which means almost anyone can run the model on a CPU.
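To give a rough sense of why 4-bit quantization makes CPU inference practical, the sketch below estimates the memory needed for the weights of a 7-billion-parameter model at different precisions and round-trips a few values through a simple symmetric 4-bit scheme. The function names and the quantization scheme are assumptions chosen for illustration; they are not the format used by the GPT4All release.

```python
def weight_memory_gib(n_params: int, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GiB (ignores activations)."""
    return n_params * bits_per_weight / 8 / 2**30


def quantize_4bit(values: list[float]) -> tuple[list[int], float]:
    """Illustrative symmetric quantization: map floats to integers in [-7, 7]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-7, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize_4bit(codes: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    n_params = 7_000_000_000  # nominal size of a "7B" model
    for bits in (32, 16, 4):
        print(f"{bits:>2}-bit weights: ~{weight_memory_gib(n_params, bits):.1f} GiB")

    codes, scale = quantize_4bit([7.0, -3.0, 0.0, 1.2])
    print(codes, dequantize_4bit(codes, scale))
```

The round trip is lossy (1.2 comes back as 1.0 here), which is the trade-off quantization makes in exchange for a much smaller memory footprint.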

Next, let’s take a look at what’s written in this report.

Data collection and curation

Between March 20, 2023 and March 26, 2023, the researchers used the GPT-3.5-Turbo OpenAI API to collect approximately 1 million prompt-response pairs.

First, the researchers collected different question/prompt samples by utilizing three publicly available data sets:

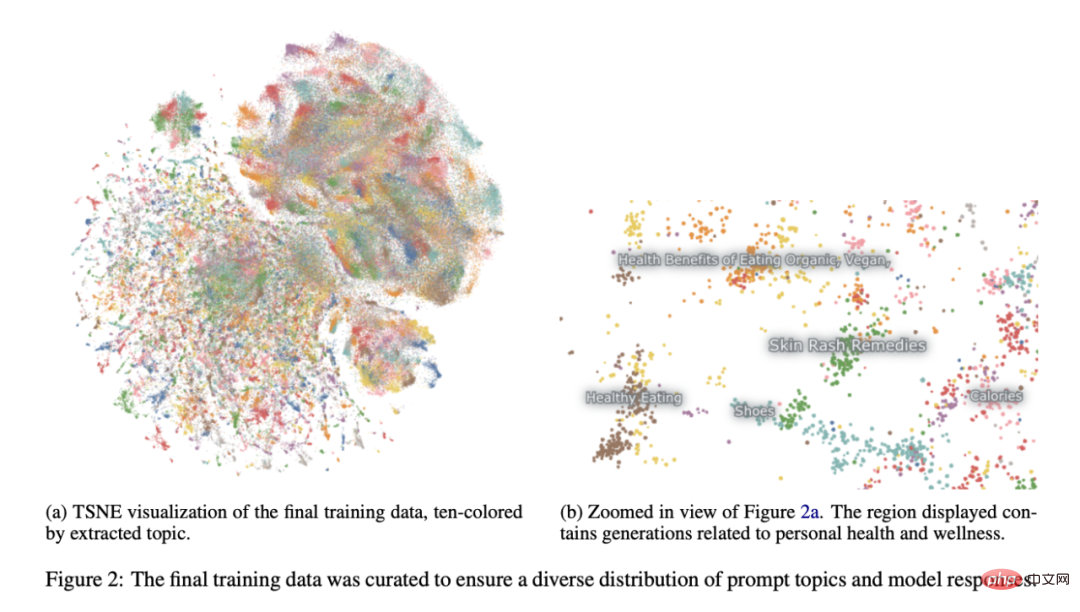

Following the Stanford Alpaca project (Taori et al., 2023), the researchers paid close attention to data preparation and curation. After collecting the initial dataset of prompt-generation pairs, they loaded the data into Atlas to organize and clean it, removing any samples in which GPT-3.5-Turbo failed to respond to the prompt or produced malformed output. This reduced the total to 806,199 high-quality prompt-generation pairs. Next, they removed the entire Bigscience/P3 subset from the final training dataset because its output diversity was very low: P3 contains many homogeneous prompts that elicit short, homogeneous responses from GPT-3.5-Turbo.

This filtering resulted in a final subset of 437,605 prompt-generation pairs, as shown in Figure 2.
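The curation steps described above can be sketched as a simple two-stage filter. This is a minimal illustration only: the `source`, `prompt`, and `response` field names and the "non-empty" usability check are hypothetical, and the actual cleaning was done in Atlas with criteria the article does not spell out.

```python
def is_usable(example: dict) -> bool:
    """Assumed criterion: keep examples with a non-empty prompt and response."""
    prompt = (example.get("prompt") or "").strip()
    response = (example.get("response") or "").strip()
    return bool(prompt) and bool(response)


def curate(examples: list[dict], dropped_sources: set[str]) -> list[dict]:
    """Drop unusable examples, then drop entire low-diversity subsets."""
    cleaned = [ex for ex in examples if is_usable(ex)]
    return [ex for ex in cleaned if ex.get("source") not in dropped_sources]


if __name__ == "__main__":
    raw = [
        {"source": "stackoverflow", "prompt": "How do I reverse a list?", "response": "Use reversed()."},
        {"source": "stackoverflow", "prompt": "Why does this fail?", "response": ""},  # no response
        {"source": "p3", "prompt": "Is this positive?", "response": "Yes"},  # low-diversity subset
    ]
    print(len(curate(raw, dropped_sources={"p3"})))

    # Counts reported in the article: after cleaning, and after removing P3.
    after_cleaning, final_size = 806_199, 437_605
    removed = after_cleaning - final_size
    print(f"Removed with the P3 subset: {removed:,} ({removed / after_cleaning:.1%})")
```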

Model training

The researchers fine-tuned several models from an instance of LLaMA 7B (Touvron et al., 2023). The model associated with their initial public release was trained with LoRA (Hu et al., 2021) on the 437,605 post-processed examples for 4 epochs. Detailed model hyperparameters and training code can be found in the associated repository and model training logs.
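For readers unfamiliar with LoRA, the idea is to keep the pretrained weight matrix W frozen and learn a low-rank update B·A instead, so far fewer parameters are trained. The sketch below counts trainable parameters for a single weight matrix and applies the update in plain Python. The dimensions, rank, and scaling factor are illustrative assumptions, not the hyperparameters used for gpt4all-lora.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one d_out x d_in matrix."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)  # B is d_out x rank, A is rank x d_in
    return full, lora


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product, adequate for tiny illustrative matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, b, a, alpha: float, rank: int):
    """Compute W' = W + (alpha / rank) * B @ A; only A and B would be trained."""
    delta = matmul(b, a)
    s = alpha / rank
    return [[wij + s * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


if __name__ == "__main__":
    full, lora = lora_param_counts(d_out=4096, d_in=4096, rank=8)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")

    w = [[1.0, 0.0], [0.0, 1.0]]   # frozen pretrained weights
    b = [[1.0], [2.0]]             # 2 x 1
    a = [[3.0, 4.0]]               # 1 x 2
    print(lora_effective_weight(w, b, a, alpha=2.0, rank=1))
```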

Reproducibility

The researchers released all data (including unused P3 generations), training code and model weights for the community to reproduce. Interested researchers can find the latest data, training details, and checkpoints in the Git repository.

Cost

It took the researchers about four days to create these models. GPU costs came to $800 (rented from Lambda Labs and Paperspace, including several failed training runs), on top of $500 in OpenAI API fees.

The final released model gpt4all-lora can be trained in approximately 8 hours on Lambda Labs’ DGX A100 8x 80GB, at a total cost of $100.
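As a quick back-of-the-envelope check on the figures above, the sketch below adds up the reported costs and derives the hourly rate implied by the final training run. The function names are hypothetical, and because the run time is given only as "approximately 8 hours", the derived rates are rough estimates rather than quoted prices.

```python
def total_project_cost(gpu_usd: float, api_usd: float) -> float:
    """Sum of GPU rental and OpenAI API spending."""
    return gpu_usd + api_usd


def implied_hourly_rates(run_cost_usd: float, hours: float, n_gpus: int) -> tuple[float, float]:
    """Return (cost per node-hour, cost per GPU-hour) implied by one training run."""
    node_rate = run_cost_usd / hours
    return node_rate, node_rate / n_gpus


if __name__ == "__main__":
    print(f"Total project cost: ${total_project_cost(800, 500):,.0f}")
    node_rate, gpu_rate = implied_hourly_rates(run_cost_usd=100, hours=8, n_gpus=8)
    print(f"Implied rate: ${node_rate:.2f}/node-hour, ${gpu_rate:.2f}/GPU-hour")
```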

This model can be run on an ordinary laptop. As a netizen said, "There is no cost except the electricity bill."

The researchers conducted a preliminary evaluation of the model using the human evaluation data from the Self-Instruct paper (Wang et al., 2022). The report also compares this model's ground-truth perplexity with that of the best publicly known alpaca-lora model (contributed by Hugging Face user chainyo). They found that all models had very large perplexities on a small number of tasks, so they report perplexities clipped to a maximum of 100. Models fine-tuned on the collected dataset showed lower perplexity in the Self-Instruct evaluation than Alpaca. The researchers note that this evaluation is not exhaustive and leaves room for further work. They welcome readers to run the model on a local CPU (documentation is available on GitHub) and get a qualitative sense of its capabilities.
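Perplexity is the exponential of the average negative log-likelihood that a model assigns to the reference tokens, so lower values are better. Here is a minimal sketch, assuming you already have per-token natural-log probabilities for a ground-truth response. The function name and the way the cap of 100 is applied are assumptions for illustration, not the report's evaluation code.

```python
import math


def perplexity(token_logprobs: list[float], clip_max: float = 100.0) -> float:
    """exp(mean negative log-likelihood), capped at clip_max."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    # Compare in log space first so very unlikely sequences cannot overflow exp().
    if avg_nll >= math.log(clip_max):
        return clip_max
    return math.exp(avg_nll)


if __name__ == "__main__":
    confident = [math.log(0.5)] * 4    # each reference token given probability 0.5
    surprised = [math.log(1e-6)] * 3   # each reference token given probability 1e-6
    print(perplexity(confident), perplexity(surprised))
```

Capping the value keeps a handful of tasks with extreme perplexities from dominating an average across tasks.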

Finally, note that the authors published the data and training details in the hope of accelerating open LLM research, especially in alignment and interpretability. The GPT4All model weights and data are intended and licensed for research purposes only, and any commercial use is prohibited. GPT4All is based on LLaMA, which has a non-commercial license. The assistant data was collected from OpenAI's GPT-3.5-Turbo, whose terms of use prohibit developing models that compete commercially with OpenAI.
