This article gives a detailed introduction, with a code example, to the InnoDB storage engine in MySQL.

InnoDB is part of MySQL's storage engine layer and is integrated into the database as a plug-in. Starting with MySQL 5.5.8, InnoDB is the default storage engine. It supports transactions and is designed mainly for OLTP applications. Its main features include transaction support, row-level locking for high concurrency, foreign key support, automatic crash recovery, and tables organized as clustered indexes.

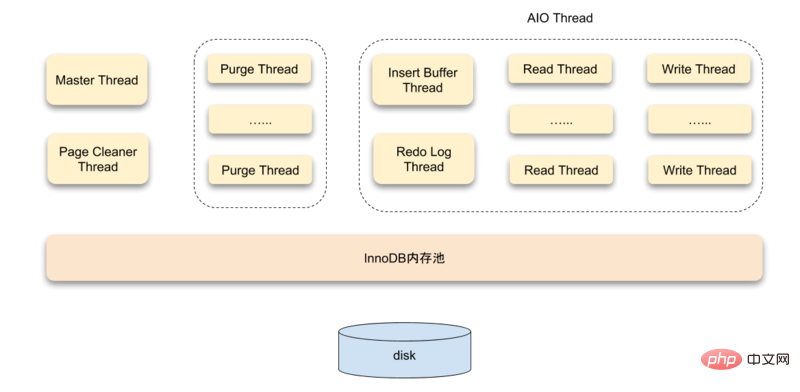

System Architecture

The InnoDB storage engine consists of three parts: the memory pool, background threads, and disk storage.

Threads

InnoDB uses a multi-threaded model in which several background threads handle different tasks.

The Master Thread is the core background thread. It is mainly responsible for asynchronously flushing data in the buffer pool to disk to keep the data consistent, which includes flushing dirty pages, merging the insert buffer, and recycling undo pages.

InnoDB makes extensive use of asynchronous IO (Async IO) to handle write IO requests. The IO Threads are mainly responsible for handling the callbacks of these IO requests.

After a transaction commits, the undo log it used may no longer be needed, so the Purge Thread recycles the undo pages that were allocated and used. InnoDB supports multiple Purge Threads, which speeds up undo page recycling, makes better use of the CPU, and improves storage engine performance.

The Page Cleaner Thread takes over the dirty page flushing that the Master Thread used to perform. Its purpose is to lighten the Master Thread's workload and reduce blocking of user query threads, further improving the performance of the InnoDB storage engine.

Memory

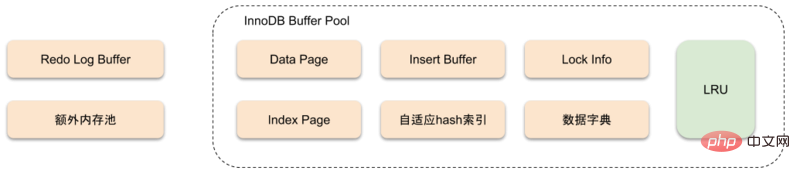

InnoDB Storage Engine Memory Structure

The InnoDB storage engine is disk-based and manages records in pages. Because of the large gap between CPU speed and disk speed, disk-based database systems usually use a buffer pool to improve overall performance.

The buffer pool uses the speed of memory to offset the impact of slow disks on database performance. When the database reads a page, the page is first loaded from disk into the buffer pool. The next time the same page is read, it is served from the buffer pool, which acts as a cache.

For modifications, the page in the buffer pool is changed first and then flushed to disk by a mechanism called Checkpoint.
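The read and write paths described above can be sketched in a few lines of Python. This is only a toy model under stated assumptions: the `BufferPool` class, its method names, and the dictionary standing in for the data files are all hypothetical and are not InnoDB's actual implementation.

```python
class BufferPool:
    """Toy page cache: reads go through the pool, writes dirty the cached copy."""

    def __init__(self, disk):
        self.disk = disk        # dict page_no -> content; stands in for the data files
        self.pages = {}         # pages currently cached in memory
        self.dirty = set()      # pages changed in memory but not yet written to disk
        self.disk_reads = 0     # how many times we had to go to "disk"

    def read(self, page_no):
        if page_no not in self.pages:          # cache miss: load the page from disk
            self.pages[page_no] = self.disk[page_no]
            self.disk_reads += 1
        return self.pages[page_no]             # cache hit: served from memory

    def write(self, page_no, data):
        self.read(page_no)                     # make sure the page is cached first
        self.pages[page_no] = data             # modify only the in-memory copy
        self.dirty.add(page_no)

    def checkpoint(self):
        """Flush every dirty page to disk and return how many were written."""
        flushed = len(self.dirty)
        for page_no in sorted(self.dirty):
            self.disk[page_no] = self.pages[page_no]
        self.dirty.clear()
        return flushed


disk = {1: "row-a", 2: "row-b"}
pool = BufferPool(disk)
pool.read(1)               # first read goes to disk
pool.read(1)               # second read is served from the pool
pool.write(2, "row-b2")    # changes the cached page; the disk copy is still old
```

Until `pool.checkpoint()` is called, `disk[2]` still holds the old value, which is exactly the window that the redo log has to cover.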

The size of the buffer pool directly affects the overall performance of the database. For the InnoDB storage engine, the buffer pool configuration is set through the parameter innodb_buffer_pool_size. Use the SHOW VARIABLES LIKE 'innodb_buffer_pool_size' command to view the buffer pool configuration:

    mysql> SHOW VARIABLES LIKE 'innodb_buffer_pool_size' \G
    *************************** 1. row ***************************
    Variable_name: innodb_buffer_pool_size
            Value: 134217728
    1 row in set (0.01 sec)
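The value is reported in bytes, so a small conversion helps when reading it. The sketch below assumes the default InnoDB page size of 16 KiB; the helper name `describe_buffer_pool` is made up for this example, and the page count is only a rough upper bound because the real buffer pool also spends memory on its own bookkeeping.

```python
PAGE_SIZE = 16 * 1024  # assumption: default innodb_page_size of 16 KiB


def describe_buffer_pool(size_bytes, page_size=PAGE_SIZE):
    """Convert a buffer pool size in bytes to MiB and an approximate page count."""
    return {
        "mib": size_bytes / (1024 * 1024),
        "pages": size_bytes // page_size,
    }


info = describe_buffer_pool(134217728)
print(info)  # 134217728 bytes is 128 MiB, or at most 8192 pages of 16 KiB
```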

The buffer pool caches the following kinds of pages and data: index pages, data pages, undo pages, the insert buffer, the adaptive hash index, InnoDB lock information, data dictionary information, and so on. Index pages and data pages account for a large part of the buffer pool.

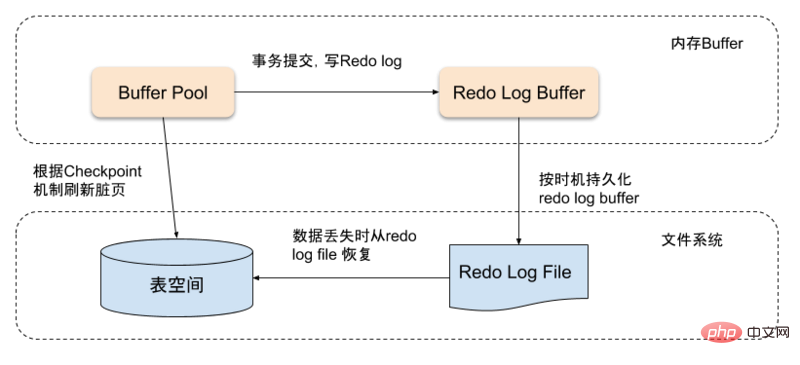

When a page in the buffer pool is newer than its copy on disk, the new data must be flushed to disk. InnoDB uses a Write Ahead Log strategy: when a transaction commits, the changes are first written to the redo log buffer. The redo log buffer is flushed to the redo log file at a certain frequency, and the dirty pages are then flushed to disk according to the checkpoint mechanism.

The redo log buffer does not need to be very large; 8 MB is normally enough for most applications. The redo log buffer is flushed to the redo log file in the following three situations:
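Why the log is written before the pages becomes clearer with a small simulation. The sketch below is a deliberately simplified model, not InnoDB's code: the `MiniWAL` class and its methods are hypothetical, each "page" is just a key/value pair, and all redo records of a transaction are produced at commit time.

```python
class MiniWAL:
    """Toy write-ahead log: redo records become durable before dirty pages do."""

    def __init__(self):
        self.log_buffer = []   # redo records still in memory
        self.log_file = []     # redo records that are durable on disk
        self.pool = {}         # in-memory pages (key -> value)
        self.disk = {}         # data files on disk

    def commit(self, changes):
        for key, value in changes.items():
            self.log_buffer.append((key, value))   # 1. record the change in the log buffer
            self.pool[key] = value                  #    the page is now dirty in memory
        self.flush_log()                            # 2. make the redo records durable

    def flush_log(self):
        self.log_file.extend(self.log_buffer)
        self.log_buffer.clear()

    def checkpoint(self):
        self.disk.update(self.pool)   # 3. flush dirty pages to the data files
        self.log_file.clear()         # redo older than the checkpoint is no longer needed

    def crash_and_recover(self):
        self.pool.clear()             # everything held in memory is lost
        self.log_buffer.clear()
        for key, value in self.log_file:   # replay durable redo records
            self.disk[key] = value
        self.log_file.clear()


wal = MiniWAL()
wal.commit({"a": 1})   # committed, but the data file has not been touched yet
```

If the process "crashes" before any checkpoint, calling `wal.crash_and_recover()` rebuilds the committed change from the log file alone, which is the guarantee the Write Ahead Log strategy is meant to provide.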

Master Thread flushes the redo log buffer to the redo log file every second

When each transaction is committed, the redo log buffer is flushed to the redo log file

When less than 1/2 of the redo log buffer space remains free, the redo log buffer is flushed to the redo log file
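The three flush triggers listed above can be modelled as follows. This is a sketch under assumptions: `RedoLogBuffer`, its capacity measured in records rather than bytes, and the `master_thread_tick` method standing in for the once-per-second timer are all invented for illustration.

```python
class RedoLogBuffer:
    """Toy redo log buffer that records why each flush happened."""

    def __init__(self, capacity=8):
        self.capacity = capacity    # capacity in records (a real buffer is sized in bytes)
        self.records = []           # records waiting in the buffer
        self.log_file = []          # records already flushed to the redo log file
        self.flush_reasons = []

    def _flush(self, reason):
        if self.records:            # nothing to do when the buffer is empty
            self.log_file.extend(self.records)
            self.records.clear()
            self.flush_reasons.append(reason)

    def append(self, record):
        self.records.append(record)
        free = self.capacity - len(self.records)
        if free < self.capacity / 2:        # trigger 3: less than half the space is free
            self._flush("half_full")

    def commit(self):
        self._flush("commit")               # trigger 2: a transaction commits

    def master_thread_tick(self):
        self._flush("timer")                # trigger 1: the Master Thread's periodic flush


buf = RedoLogBuffer(capacity=4)
for rec in ("r1", "r2", "r3"):
    buf.append(rec)          # the third append leaves only 1 of 4 slots free
buf.append("r4")
buf.commit()
buf.append("r5")
buf.master_thread_tick()
```

After this run, `buf.flush_reasons` lists one flush per trigger, in the order they fired.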

Lock

The locks supported by InnoDB include:

Gap lock

Auto-increment lock

Transaction

ACID

Transactions are the most important feature of a database used for OLTP. Any discussion of transactions has to mention the four basic ACID properties: atomicity, consistency, isolation, and durability.

Repeatable Read: this level ensures that reading the same record multiple times within one transaction returns consistent results, and in the InnoDB storage engine it solves both the phantom read and the non-repeatable read problems.

InnoDB solves the phantom read problem with Next-Key Locks. A Next-Key Lock is a combination of a row lock and a gap lock. When InnoDB scans an index record, it first places a row lock (Record Lock) on the record and then places a gap lock (Gap Lock) on the gaps on both sides of it. Once the gap lock is in place, other transactions cannot modify or insert records in that gap.

Serializable is the highest isolation level. It avoids phantom reads by forcing transactions to execute serially. However, Serializable locks every row that is read, which can cause many lock timeouts and heavy lock contention, so concurrency drops sharply. It is not recommended for MySQL InnoDB.
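To make the record-plus-gap idea concrete, the sketch below computes which key ranges would be covered when one index record is scanned, following the description above. It is a simplification with made-up helper names (`next_key_locks`, `insert_blocked`) and assumes an index over distinct integer keys; the locks InnoDB actually takes depend on the index type and the statement.

```python
import bisect


def next_key_locks(index_keys, scanned_key):
    """Return (locked_record, gaps) for one scanned index record.

    gaps is a list of open intervals (low, high) on both sides of the record.
    """
    keys = sorted(index_keys)
    pos = bisect.bisect_left(keys, scanned_key)
    if pos == len(keys) or keys[pos] != scanned_key:
        raise KeyError(scanned_key)
    lower = keys[pos - 1] if pos > 0 else float("-inf")
    upper = keys[pos + 1] if pos + 1 < len(keys) else float("inf")
    return scanned_key, [(lower, scanned_key), (scanned_key, upper)]


def insert_blocked(locks, new_key):
    """True if inserting new_key would have to wait for the given locks."""
    record, gaps = locks
    return new_key == record or any(low < new_key < high for low, high in gaps)


locks = next_key_locks([10, 20, 30], 20)
print(locks)                      # record 20 plus the gaps (10, 20) and (20, 30)
print(insert_blocked(locks, 15))  # falls inside a locked gap
print(insert_blocked(locks, 35))  # lies outside the locked range
```

Because no other transaction can insert a key between 10 and 30 while these locks are held, a repeated range read inside the locking transaction cannot see new "phantom" rows in that range.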

BEGIN, BEGIN WORK, START TRANSACTION

This operation is only valid under the RR isolation level, otherwise an error will be reported.

Undo log

Transaction commit

) to the global commit transaction in the linked list (

, if it is not satisfied and is insert undo, it will be marked as TRX_UNDO_TO_FREE, otherwise the undo is update undo and it will be marked as TRX_UNDO_TO_PURGE. Undos marked TRX_UNDO_CACHED will be recycled by the engine.

into the history list of undo segment, and increment rseg_history_len(global). At the same time, update TRX_UNDO_TRX_NO on the Page. If the data is deleted, reset delete_mark

from Delete from update_undo_list. If marked as TRX_UNDO_CACHED, add it to the update_undo_cached queue

(Undo/redo log records are written to the shared buffer.) At this point the transaction is committed at the file level: even if a crash happens now, the transaction is still guaranteed to be committed after a restart. The next step is to update the in-memory state (trx_commit_in_memory)

remove it from the global readview linked list, and then reset the information in the trx_t structure. A read-write transaction first sets its status to TRX_STATE_COMMITTED_IN_MEMORY, releases all row locks, removes trx_t from rw_trx_list, and removes its readview from the global readview linked list. If there is insert undo, it is removed here. If there is update undo, the Purge thread is woken up to clean up the garbage. Finally, the information in trx_t is reset so that the next transaction can use it.

and insert undo, and start rolling back from this undo

, then records marked for deletion are marked for cleaning, and updated data is rolled back to the oldest version. If it is insert undo, the clustered index and secondary index records are deleted directly

If all undo has been rolled back or rolled back to the specified undo, stop and delete the Undo log
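The rollback behaviour sketched in the fragments above (insert undo removes the inserted record, update undo restores the older version, and rollback stops at a specified undo position) can be illustrated with a toy model. Everything here is an assumption made for illustration: the `UndoTransaction` class, the tuple layout of undo records, and the `to_savepoint` parameter do not correspond to InnoDB's internal structures.

```python
class UndoTransaction:
    """Toy transaction that keeps an undo log so its changes can be rolled back."""

    def __init__(self, table):
        self.table = table      # dict: primary key -> row value
        self.undo_log = []      # list of (kind, primary_key, old_value)

    def insert(self, pk, row):
        self.table[pk] = row
        self.undo_log.append(("insert", pk, None))          # insert undo: nothing to restore

    def update(self, pk, row):
        self.undo_log.append(("update", pk, self.table[pk]))  # update undo: keep old version
        self.table[pk] = row

    def rollback(self, to_savepoint=0):
        """Undo changes newest-first until only to_savepoint undo records remain."""
        while len(self.undo_log) > to_savepoint:
            kind, pk, old = self.undo_log.pop()
            if kind == "insert":
                del self.table[pk]       # insert undo: delete the inserted record
            else:
                self.table[pk] = old     # update undo: restore the previous version


table = {1: "a"}
txn = UndoTransaction(table)
txn.update(1, "b")
txn.insert(2, "c")
```

Calling `txn.rollback(to_savepoint=1)` undoes only the insert, while `txn.rollback()` with no argument undoes everything and leaves the undo log empty.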

Index

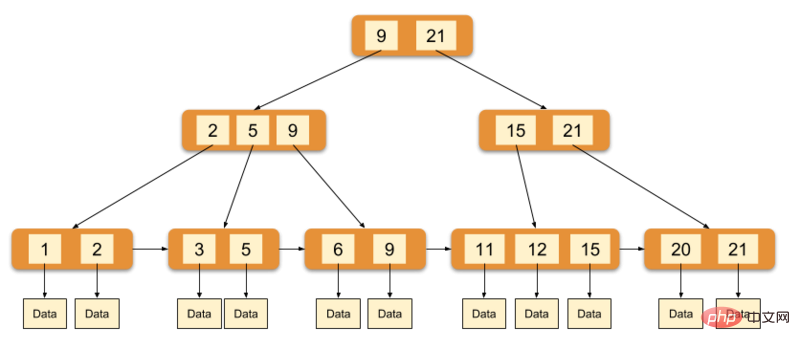

The InnoDB engine uses the B+Tree as its index structure. The leaf nodes of the primary key index store the complete row data, while the leaf nodes of a non-primary-key index store the primary key value.

The figure above is a schematic diagram of the InnoDB primary index (which is also the data file). The leaf nodes contain the complete data records; this kind of index is called a clustered index. Because InnoDB's data files are themselves clustered by primary key, every InnoDB table must have a primary key. If none is specified explicitly, MySQL automatically chooses a column that uniquely identifies each record (a unique index whose columns are all NOT NULL) as the primary key. If no such column exists, MySQL automatically generates a hidden field to serve as the primary key of the InnoDB table. This field is 6 bytes long and holds a long integer.

In an InnoDB secondary index, the data field stores the primary key value of the corresponding record rather than its address. In other words, every secondary index in InnoDB references the primary key as its data field. The clustered index makes lookups by primary key very efficient, but a lookup through a secondary index has to search two indexes: first the secondary index to obtain the primary key, then the primary index to retrieve the record by that primary key.
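The two-step lookup can be shown with a small model. This is only a sketch: plain Python dictionaries stand in for the B+Tree structures, and the table contents and the names `clustered`, `secondary_name`, `find_by_pk`, and `find_by_name` are invented for the example.

```python
# Clustered index: primary key -> complete row (id, name, age).
clustered = {
    1: (1, "alice", 30),
    2: (2, "bob", 25),
    3: (3, "carol", 35),
}

# Secondary index on name: indexed value -> primary key (not a row address).
secondary_name = {"alice": 1, "bob": 2, "carol": 3}


def find_by_pk(pk):
    """One index search: the clustered index leaf already holds the full row."""
    return clustered[pk], 1


def find_by_name(name):
    """Two index searches: secondary index first, then the clustered index."""
    pk = secondary_name[name]     # step 1: secondary index yields the primary key
    return clustered[pk], 2       # step 2: clustered index yields the row


row, searches = find_by_name("bob")
print(row, searches)
```

The second element of each result counts how many indexes were searched, which is the extra cost a secondary index lookup pays compared with a primary key lookup.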
