This article gives a brief discussion of high concurrency and distributed clusters in Node.js.

Before explaining why Node can achieve high concurrency, it helps to understand several of its other features:

Let's first clarify one concept: Node is single-threaded, just like JavaScript in the browser, and the JavaScript main thread in Node cannot share state with other threads (such as I/O threads).

The advantages of a single thread are:

There is no need to synchronize state between threads, as multi-threaded programs must

There is no overhead from thread switching

There are no deadlocks

Of course, a single thread also has disadvantages:

It cannot fully utilize a multi-core CPU

Heavy computation that occupies the CPU blocks the application (so it is not suitable for CPU-intensive applications)

An unhandled error causes the entire application to exit

However, today these disadvantages are either no longer problems or have been reasonably resolved:

(1) Create processes or subdivide instances

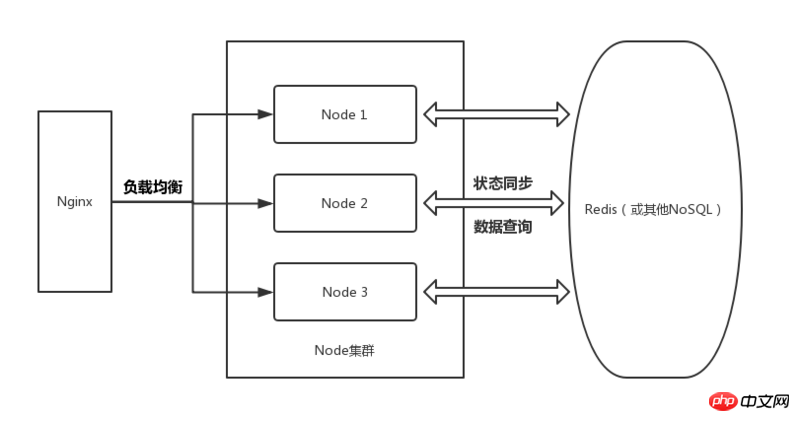

For the first problem, the most direct solution is to use the child_process core module or cluster (a combined application of child_process and net). On a multi-core server we can make full use of every core by creating multiple processes (usually with a fork operation), but we then have to handle inter-process communication.
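As a rough illustration of the multi-process idea, the sketch below starts several child processes with child_process and exchanges messages with them over an IPC channel. The inline worker script, the names `runInChildProcess` and `runAll`, and the summing task are all made up for this example. A real service would more likely call `child_process.fork()` on a worker file or use cluster; `spawn` with an inline script is used here only so that the sketch fits in one file.

```javascript
const { spawn } = require('node:child_process');

// Hypothetical worker: waits for a number n, sums 1..n, and reports back over IPC.
const WORKER_SOURCE = `
  process.on('message', (n) => {
    let sum = 0;
    for (let i = 1; i <= n; i++) sum += i;
    process.send({ pid: process.pid, n, sum });
  });
`;

// Runs one job in its own Node process and resolves with the worker's reply.
function runInChildProcess(n) {
  return new Promise((resolve, reject) => {
    const child = spawn(process.execPath, ['-e', WORKER_SOURCE], {
      // The fourth entry opens the IPC channel used by send() and 'message'.
      stdio: ['ignore', 'inherit', 'inherit', 'ipc'],
    });
    child.once('error', reject);
    child.once('message', (reply) => {
      child.disconnect(); // closing the channel lets the worker exit
      resolve(reply);
    });
    child.send(n);
  });
}

// One process per job, so the operating system can spread them across cores.
function runAll(jobs) {
  return Promise.all(jobs.map(runInChildProcess));
}

runAll([1000, 2000]).then((replies) => {
  for (const { pid, n, sum } of replies) {
    console.log(`worker ${pid}: sum(1..${n}) = ${sum}`);
  }
});
```

Each reply carries the worker's own pid, which makes it easy to confirm that the work really ran outside the main process.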

Another solution is to divide the physical machine into multiple single-core virtual machines and use a tool such as pm2 to manage them, forming a cluster architecture that runs the required services efficiently. I will not discuss communication (state synchronization) between the machines here; it is explained in the Node distributed architecture section below.

(2) Time slice rotation

For the second problem, after discussing it with my friends, I believe we can use time slice rotation to simulate multi-threading on a single thread and so reduce the feeling that the application is blocked (although, unlike real multi-threading, this does not actually save time).
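One way to picture time slice rotation is to cut a long computation into small slices and yield to the event loop between them. The sketch below does this with `setImmediate`; the function name `sumInSlices`, the slice size, and the summing task are assumptions chosen for illustration, not something the article prescribes.

```javascript
// Sums 1..n in slices of `sliceSize` iterations, yielding to the event loop
// between slices so that timers and I/O callbacks can run in the gaps.
function sumInSlices(n, sliceSize = 10000) {
  return new Promise((resolve) => {
    let i = 1;
    let total = 0;

    function runSlice() {
      const end = Math.min(i + sliceSize - 1, n);
      for (; i <= end; i++) total += i;

      if (i <= n) {
        setImmediate(runSlice); // give other callbacks a turn, then continue
      } else {
        resolve(total);
      }
    }

    runSlice();
  });
}

sumInSlices(1000000).then((total) => console.log('total:', total));
```

The total amount of CPU work is unchanged; the slices only keep any single stretch of blocking short.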

(3) Load balancing and faulty-node monitoring/isolation

As for the third problem, my friends and I have also discussed it. The main pain point is that Node differs from Java: the logic it implements is mainly asynchronous.

As a result, Node cannot use try/catch to catch and work around errors as conveniently as Java can, because it is impossible to know when an asynchronous task will throw its exception. In a single-threaded environment, an error that is not handled means the application exits, and the gap between the crash and the restart interrupts the service, which is something we do not want.
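The try/catch limitation can be seen in a small experiment. In the sketch below (all function names are invented for the example), an error thrown from a timer callback escapes the surrounding try/catch and only shows up in the process-level `uncaughtException` handler, whereas the same failure reported through a rejected promise can be caught with try/catch around `await`.

```javascript
// Throws from a timer callback, after the caller's try/catch has already finished.
function failInCallback(error) {
  setTimeout(() => { throw error; }, 0);
}

// Reports the same kind of failure through a rejected promise instead.
function failAsPromise(error) {
  return new Promise((_, reject) => setTimeout(() => reject(error), 0));
}

async function demonstrate() {
  const result = {
    tryCatchAroundCallback: false,
    processHandler: false,
    tryCatchAroundAwait: false,
  };

  // Last line of defence: without this handler the process would exit.
  const sawUncaught = new Promise((resolve) => {
    process.once('uncaughtException', () => {
      result.processHandler = true;
      resolve();
    });
  });

  try {
    failInCallback(new Error('callback failure'));
  } catch {
    result.tryCatchAroundCallback = true; // not reached: the throw happens later
  }
  await sawUncaught;

  try {
    await failAsPromise(new Error('promise failure'));
  } catch {
    result.tryCatchAroundAwait = true;
  }
  return result;
}

const outcome = demonstrate();
outcome.then((result) => console.log(result));
```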

Of course, now that server resources are plentiful, we can use tools such as pm2 or nginx to check service status dynamically: when a service fails, isolate the faulty server, forward requests to healthy servers, and restart the faulty server so that it can resume service. This is also part of Node's distributed architecture.
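The restart half of that idea can be sketched in a few lines. This is only a toy supervisor, not how pm2 or nginx work internally: the `supervise` function, the `ATTEMPT` environment variable, and the deliberately flaky worker script are all hypothetical.

```javascript
const { spawn } = require('node:child_process');

// Hypothetical worker that crashes on its first two starts and then exits cleanly.
const FLAKY_WORKER = `
  const attempt = Number(process.env.ATTEMPT);
  if (attempt < 3) process.exit(1);
`;

// Starts the worker and restarts it whenever it exits with a non-zero code,
// giving up after `maxRestarts` restarts.
function supervise(source, maxRestarts = 5) {
  return new Promise((resolve, reject) => {
    let attempt = 0;

    const start = () => {
      attempt += 1;
      const child = spawn(process.execPath, ['-e', source], {
        env: { ...process.env, ATTEMPT: String(attempt) },
        stdio: 'ignore',
      });
      child.once('error', reject);
      child.once('exit', (code) => {
        if (code === 0) return resolve({ attempts: attempt });
        if (attempt > maxRestarts) {
          return reject(new Error(`worker still failing after ${attempt} attempts`));
        }
        start(); // bring the failed worker back
      });
    };

    start();
  });
}

supervise(FLAKY_WORKER).then(({ attempts }) => {
  console.log(`worker healthy after ${attempts} attempts`);
});
```

A production setup would also route traffic away from the failed instance while it restarts, which is the part a load balancer handles.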

You may ask: since Node is single-threaded and all events are processed on one thread, shouldn't it be inefficient, the opposite of high concurrency?

On the contrary, Node's performance is very high. One reason is its asynchronous I/O: whenever an I/O request occurs, Node hands it off to an I/O thread. Node then ignores the progress of the I/O operation and continues executing events on the main thread; it only has to run the callback when the request returns. In other words, Node saves the large amount of time it would otherwise spend waiting for requests.
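A minimal sketch of this behaviour, assuming a small temporary file as the I/O target (the file name and the `demoAsyncRead` helper are invented for the example): the read is requested, the main thread carries on immediately, and the callback runs only once the data is ready.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

function demoAsyncRead() {
  return new Promise((resolve, reject) => {
    const events = [];

    // Set up a throwaway file to read.
    const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'async-io-'));
    const file = path.join(dir, 'data.txt');
    fs.writeFileSync(file, 'hello');

    events.push('read requested');
    fs.readFile(file, 'utf8', (err, text) => {
      fs.rmSync(dir, { recursive: true, force: true });
      if (err) return reject(err);
      events.push(`callback ran with: ${text}`);
      resolve(events);
    });

    // This line runs before the callback above: the main thread did not wait.
    events.push('main thread continues');
  });
}

const readEvents = demoAsyncRead();
readEvents.then((events) => console.log(events.join('\n')));
```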

This is one of the important reasons why Node supports high concurrency.

In fact, not only I/O operations: most operations in Node are carried out asynchronously. Node is like an organizer that does not need to do everything personally. It only needs to tell members how to operate correctly, accept feedback, and handle the key steps, so that the entire team runs efficiently.

You may also ask: how does Node know that a request has returned, and when should it handle the callbacks?

The answer is another feature of Node: it is event-driven. That is, the main thread runs the program by having the event loop trigger callbacks.

This is another important reason why Node supports high concurrency.

Illustration of the event loop in the Node environment. The loop visits its phases in this order: timers, pending callbacks, idle/prepare, poll, check, close callbacks.

poll stage:

When entering the poll phase with no timers scheduled, one of the following happens:

(1) If the poll queue is not empty:

The event loop synchronously executes the callbacks (new I/O events) in the poll queue until the queue is empty or the upper limit on executed callbacks is reached.

(2) If the poll queue is empty:

If the script has called setImmediate(), the event loop ends the poll phase and enters the check phase to execute the setImmediate() callbacks.

If the script has not called setImmediate(), the event loop waits for callbacks (new I/O events) to be added to the queue and then executes them immediately.

When entering the poll phase with timers scheduled, the following happens:

Once the poll queue is empty, the event loop checks the timers. If one or more timers are due, the event loop returns to the timers phase and executes their callbacks (that is, it enters the next tick).
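The poll-then-check ordering described above can be observed from inside an I/O callback. In this sketch (the helper name `orderInsideIoCallback` and the use of `fs.stat` on the temp directory are just convenient assumptions), both a zero-delay timer and an immediate are scheduled from a callback that runs in the poll phase; the loop moves on to the check phase first, so the immediate runs before the timer.

```javascript
const fs = require('node:fs');
const os = require('node:os');

function orderInsideIoCallback() {
  return new Promise((resolve, reject) => {
    const order = [];
    const record = (label) => {
      order.push(label);
      if (order.length === 2) resolve(order);
    };

    // The fs.stat callback is an I/O callback, so it runs in the poll phase.
    fs.stat(os.tmpdir(), (err) => {
      if (err) return reject(err);
      setTimeout(() => record('setTimeout'), 0);   // waits for the next timers phase
      setImmediate(() => record('setImmediate'));  // runs in the upcoming check phase
    });
  });
}

const ioOrder = orderInsideIoCallback();
ioOrder.then((order) => console.log(order.join(' -> ')));
// prints "setImmediate -> setTimeout"
```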

## Priority:

Next Tick Queue > MicroTask Queue

setTimeout, setInterval > setImmediate (this order is not guaranteed when both are called from the main module, and inside an I/O callback setImmediate always runs first)

Because a timer has to be taken out of a red-black tree to determine whether its time has arrived, with time complexity O(lg(n)), it is best not to use setTimeout(func, 0) when you want to run something asynchronously right away. Use process.nextTick() instead.
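The first part of that priority list is easy to check with a small probe. The sketch below (the `probeOrder` name is invented) schedules one callback of each kind from inside an event loop callback and records the order in which they run. The next-tick callback comes first and the promise microtask second; the relative order of the timer and the immediate is left unasserted.

```javascript
function probeOrder() {
  return new Promise((resolve) => {
    const order = [];
    const record = (label) => {
      order.push(label);
      if (order.length === 4) resolve(order);
    };

    // Schedule from inside an event loop callback rather than module start-up.
    setImmediate(() => {
      setTimeout(() => record('setTimeout'), 0);
      setImmediate(() => record('setImmediate'));
      Promise.resolve().then(() => record('microtask'));
      process.nextTick(() => record('nextTick'));
    });
  });
}

const observedOrder = probeOrder();
observedOrder.then((order) => console.log(order.join(' -> ')));
```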

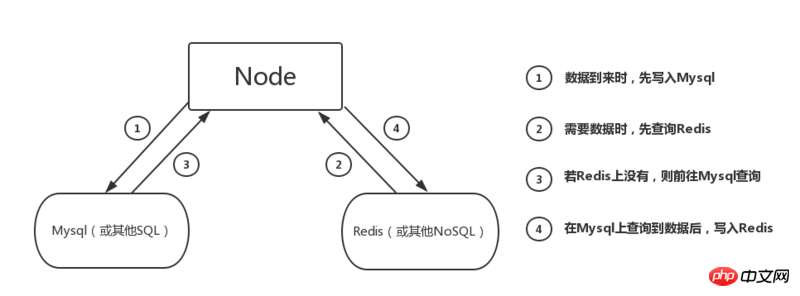

Of course, this is an idealized architecture. Redis reads and writes are very fast, but only because it keeps its data in memory and operates on it there.

This places a heavy load on the server's memory, so we usually still add MySQL to the architecture, as shown below:

Explain this picture first:

The advantages of adding MySQL, compared with reading and writing only in Redis, are:

(1) It avoids writing data that is useless in the short term to Redis, where it would occupy memory, and so reduces the load on Redis

(2) When the data later needs specific queries and analysis (such as analyzing user growth from an operational campaign), SQL relational queries are a great help

Of course, when handling a large burst of write traffic in a short period, we can also write the data directly to Redis to store it quickly and increase the server's ability to cope with the traffic. When the traffic subsides, we can then write the data to MySQL separately.
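The "write fast now, persist later" pattern from the last paragraph can be sketched without any real database. In the example below an in-memory array stands in for Redis and a second array stands in for a MySQL table; the `WriteBehindBuffer` class and its method names are assumptions for illustration and do not correspond to any Redis or MySQL client API.

```javascript
// Accepts writes immediately and persists them in batches later.
class WriteBehindBuffer {
  constructor(persistBatch) {
    this.persistBatch = persistBatch; // async function that stores a batch durably
    this.pending = [];                // stand-in for data parked in Redis
  }

  // Fast path used during the traffic burst.
  write(record) {
    this.pending.push(record);
    return this.pending.length;
  }

  // Called once traffic subsides; returns how many records were persisted.
  async flush() {
    const batch = this.pending;
    this.pending = [];
    if (batch.length === 0) return 0;
    try {
      await this.persistBatch(batch);
    } catch (err) {
      // Put the batch back so nothing is lost if persisting fails.
      this.pending = batch.concat(this.pending);
      throw err;
    }
    return batch.length;
  }
}

const durableRows = []; // stand-in for a MySQL table
const buffer = new WriteBehindBuffer(async (batch) => {
  durableRows.push(...batch);
});

buffer.write({ user: 'a', action: 'signup' });
buffer.write({ user: 'b', action: 'signup' });
buffer.flush().then((count) => {
  console.log(`persisted ${count} records, ${durableRows.length} rows stored`);
});
```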

After briefly introducing the general architecture, let’s take a closer look at the details of each part:

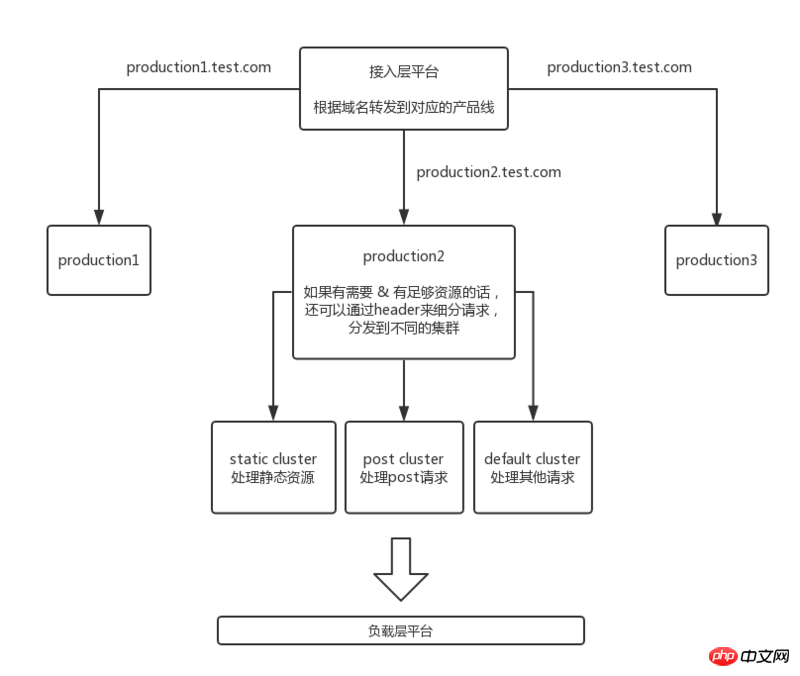

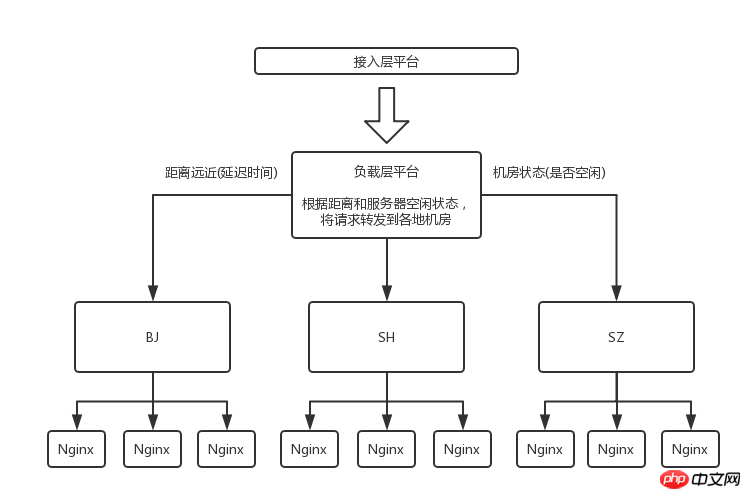

Traffic access layer

Of course, this platform does more than forward requests. You can think of it as a large private cloud system that provides the following services:

(2) Write high-performance query statements that interact with Redis and MySQL, improving query efficiency

(3) Synchronize the state of each Node service in the cluster through Redis

(4) Through the hardware management platform, manage and monitor the state of the physical machines, manage IP addresses, and so on (in fact, it feels inappropriate to put this work in this layer, but I don't know which layer it belongs in...)

(Of course, I only list the items in this part briefly; understanding them deeply still takes time and experience.)

The main work of this layer is:

(1) Set up MySQL and design the related pages and tables; establish the necessary indexes and foreign keys to make queries more convenient

(2) Deploy Redis and provide the corresponding interfaces to the Node layer
