This article describes how I crawled and analyzed about a million Zhihu user records with a PHP crawler, and I am sharing it here for reference.


Preparation before development

Install a Linux system (Ubuntu 14.04); here, Ubuntu runs inside a VMware virtual machine;

Install PHP 5.6 or above;

Install MySQL 5.5 or above;

Install the curl and pcntl extensions.
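Before running anything, it can help to confirm the environment. The following is a minimal sketch (the function name `missingPrerequisites` is my own) that checks the PHP version against the 5.6 minimum listed above and reports which of the required extensions are not loaded. Extensions used later, such as the Redis client, can be added to the list.

```php
<?php
// Report which prerequisites from the list above are missing.
// Returns an array of human-readable problems (empty when everything is present).
function missingPrerequisites(array $extensions = array('curl', 'pcntl'))
{
    $problems = array();
    if (version_compare(PHP_VERSION, '5.6.0', '<')) {
        $problems[] = 'PHP ' . PHP_VERSION . ' is older than 5.6';
    }
    foreach ($extensions as $ext) {
        if (!extension_loaded($ext)) {
            $problems[] = "extension '$ext' is not loaded";
        }
    }
    return $problems;
}

foreach (missingPrerequisites() as $problem) {
    echo $problem, "\n";
}
```

Run the check with the command-line binary (for example `php check.php`), since pcntl is normally only usable from the CLI, which is also how the crawler itself runs.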

Use PHP’s curl extension to capture page data

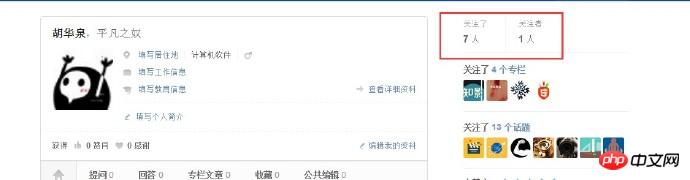

PHP's curl extension is a binding to libcurl, a library that lets you connect to and communicate with many kinds of servers over many protocols. This program crawls Zhihu user data, and a user's personal page can only be viewed while logged in. When we click a user's avatar in the browser to open their personal page, we can see their information because the browser sends our local cookies along with the request. Therefore, before requesting personal pages, you need to obtain the cookie information and send it with every curl request. For the cookie, I simply used my own, which you can view in the browser:

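```php
// Excerpt from a method of the crawler class
$url = 'http://www.zhihu.com/people/mora-hu/about'; // mora-hu is the user ID
$ch = curl_init($url); // initialize the session
curl_setopt($ch, CURLOPT_HEADER, 0);
curl_setopt($ch, CURLOPT_COOKIE, $this->config_arr['user_cookie']); // send the login cookie with the request
// $_SERVER['HTTP_USER_AGENT'] is not set when running from the CLI, so use a fixed browser UA
curl_setopt($ch, CURLOPT_USERAGENT, 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2403.130 Safari/537.36');
curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1); // make curl_exec() return the page as a string instead of printing it
curl_setopt($ch, CURLOPT_FOLLOWLOCATION, 1);
$result = curl_exec($ch);
curl_close($ch);
return $result; // the fetched page
```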

Running the code above returns the personal page of the user mora-hu. You can then process this page with regular expressions to extract the name, gender, and any other fields you want to collect.
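As an illustration of the regular-expression step, here is a minimal sketch. The HTML snippet and the class names (`name`, `icon-profile-male`) are invented for the example: Zhihu's actual markup is not shown in this article and has changed over time, so the patterns would need to be adapted to the real page.

```php
<?php
// Extract a few profile fields from a fetched page with regular expressions.
// The markup patterns below are hypothetical placeholders, not Zhihu's real HTML.
function parseProfile($html)
{
    $profile = array('name' => null, 'gender' => null);

    // Text of the first <span class="name">...</span>, surrounding whitespace trimmed
    if (preg_match('#<span class="name">\s*([^<]+?)\s*</span>#', $html, $m)) {
        $profile['name'] = html_entity_decode($m[1], ENT_QUOTES, 'UTF-8');
    }
    // Gender encoded in an icon class such as "icon-profile-female"
    if (preg_match('#icon-profile-(male|female)#', $html, $m)) {
        $profile['gender'] = $m[1];
    }
    return $profile;
}

$html = '<span class="name">mora-hu</span><i class="icon icon-profile-male"></i>';
print_r(parseProfile($html));
```

Fields that are not found stay `null`, which makes missing data easy to spot before inserting into MySQL.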

1. Image hotlink protection

When I used regular expressions to extract the personal information from the result and displayed it, the user's avatar on the page would not load. After some research I found that Zhihu protects its images against hotlinking. The solution is to forge a Referer header when requesting an image.

After the regular expression has extracted the image link, send a separate request for the image, this time including a Referer that indicates the request comes from the Zhihu website. For example:

```php
function getImg($url, $u_id)
{
    $path = './images/' . $u_id . '.jpg';
    // Reuse the avatar if it has already been downloaded
    if (file_exists($path)) {
        return "images/$u_id.jpg";
    }
    if (empty($url)) {
        return '';
    }
    $context_options = array(
        'http' => array(
            'header' => "Referer: http://www.zhihu.com" // send a Referer so the hotlink check passes
        )
    );
    $context = stream_context_create($context_options);
    // $url is expected to be protocol-relative (//host/path), so add the scheme
    $img = file_get_contents('http:' . $url, false, $context);
    if ($img === false) {
        return ''; // download failed; don't write an empty file
    }
    file_put_contents($path, $img);
    return "images/$u_id.jpg";
}
```
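`getImg()` prepends `'http:'` because it assumes the regular expression captured a protocol-relative link (one starting with `//`). If some links turn out to be absolute, that concatenation would produce an invalid URL such as `http:http://...`. The helper below is a hypothetical addition, not part of the original program, sketching a defensive normalization step.

```php
<?php
// Turn an image link captured from the page into an absolute URL.
// Handles protocol-relative ("//host/path") and already-absolute links.
function normalizeImageUrl($url, $scheme = 'http')
{
    $url = trim($url);
    if ($url === '') {
        return '';
    }
    if (strpos($url, '//') === 0) {
        return $scheme . ':' . $url; // protocol-relative: add the scheme
    }
    if (preg_match('#^https?://#i', $url)) {
        return $url; // already absolute
    }
    return ''; // relative paths are not expected here; skip them
}
```

With this helper, the download call in `getImg()` would take `normalizeImageUrl($url)` instead of `'http:' . $url`, and an empty result can be treated like an empty `$url`.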

2. Crawl more users

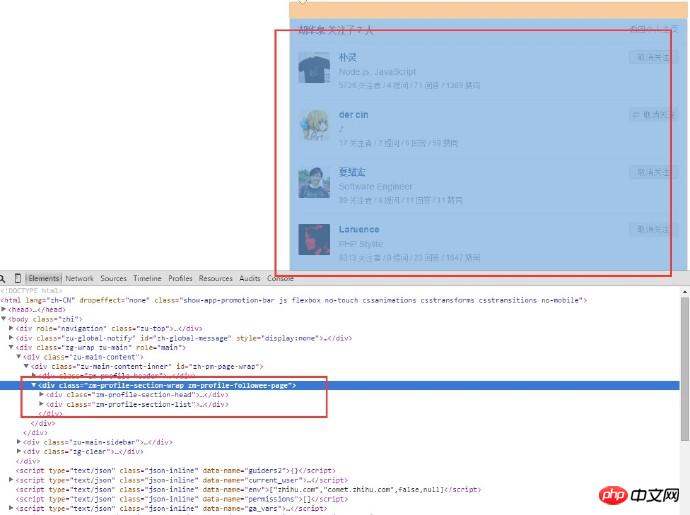

After fetching one user's personal information, you need to visit that user's followers list and following list to discover more users, and then continue layer by layer. On the personal page there are two links, as shown below:

One link leads to the users this person follows, the other to the users who follow them. Take the "following" link as an example: match it with a regular expression, then use curl to send another request with the cookie. Crawling the list of users someone follows returns the following page:

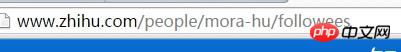

Looking at the page's HTML structure: since we only need user information, we only need the content of the boxed block, which contains all the user names. The URL of the page listing the users someone follows is:

The URLs for different users are almost identical; they differ only in the user name. Use a regular expression to get the list of user names, build the URLs one by one, and send the requests one by one (sending them one at a time is slow; a faster approach is discussed later). After entering a new user's page, repeat the steps above, and keep looping until you have collected as much data as you want.

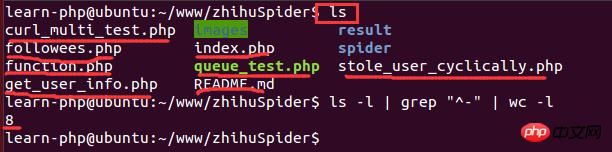
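The crawl loop described above is essentially a breadth-first traversal of the follow graph. The sketch below shows that structure under stated assumptions: `extractUserIds()` assumes profile links look like `/people/<id>` (the same path this article uses for profile URLs), and `$fetchFollowing` is a hypothetical callback standing in for "request the following list page with curl and return its HTML". Visited users are tracked in a plain PHP array here; section 7 explains why the real program moved this state to Redis.

```php
<?php
// Collect unique user IDs from links of the form /people/<id> in a list page.
function extractUserIds($html)
{
    preg_match_all('#/people/([A-Za-z0-9_-]+)#', $html, $m);
    return array_values(array_unique($m[1]));
}

// Breadth-first crawl: start from one user, visit the users they follow,
// then the users those users follow, until $limit users have been visited.
// $fetchFollowing($userId) must return the HTML of that user's following list.
function crawlUsers($seed, $fetchFollowing, $limit)
{
    $queue = array($seed);
    $seen = array();  // user ID => true, for fast "already visited?" checks
    $order = array(); // visited user IDs in crawl order
    while (!empty($queue) && count($order) < $limit) {
        $user = array_shift($queue);
        if (isset($seen[$user])) {
            continue; // already crawled via another path
        }
        $seen[$user] = true;
        $order[] = $user;
        foreach (extractUserIds(call_user_func($fetchFollowing, $user)) as $next) {
            if (!isset($seen[$next])) {
                $queue[] = $next;
            }
        }
    }
    return $order;
}
```

In the real crawler, `$fetchFollowing` would wrap the curl request with the login cookie shown earlier; passing it in as a callback keeps the traversal logic testable without network access.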

3. Counting files on Linux

After the script has been running for a while, you will want to see how many images have been downloaded. When there is a lot of data, opening the folder to check the number of images is slow. Since the script runs on Linux, you can count the files with a Linux command:

```bash
ls -l | grep "^-" | wc -l
```

Here, ls -l prints a long listing of the entries in the directory (entries can be regular files, directories, links, device files, and so on); grep "^-" filters that listing and keeps only lines starting with "-", that is, regular files (use "^d" instead to keep only directories); wc -l counts the lines of output. The following is a running example:
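If you would rather report progress from inside the PHP script itself, the same count can be taken with SPL's `DirectoryIterator`. This sketch mirrors the shell pipeline: it counts regular files only, skips symbolic links (which `ls -l` lists with a leading `l`) and, like `ls` without `-a`, ignores hidden entries whose names start with a dot. The function name `countRegularFiles` is my own.

```php
<?php
// Count regular, non-hidden files in $dir, like `ls -l | grep "^-" | wc -l`.
function countRegularFiles($dir)
{
    $count = 0;
    foreach (new DirectoryIterator($dir) as $entry) {
        if (substr($entry->getFilename(), 0, 1) === '.') {
            continue; // ".", ".." and hidden files are not listed by plain `ls -l`
        }
        if ($entry->isFile() && !$entry->isLink()) {
            $count++;
        }
    }
    return $count;
}
```

For example, `echo countRegularFiles('./images');` prints the number of avatars saved so far. `DirectoryIterator` throws an `UnexpectedValueException` if the directory does not exist.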

4. Handling duplicate data when inserting into MySQL

After the program had been running for a while, I found that a lot of user data was duplicated, so duplicates needed to be handled at insert time. The options are:

1) Check whether the data already exists in the database before inserting it;

2) Add a unique index and use INSERT INTO ... ON DUPLICATE KEY UPDATE ... when inserting;

3) Add a unique index and use INSERT IGNORE INTO ... when inserting;

4) Add a unique index and use REPLACE INTO ... when inserting.

The first option is the simplest but also the least efficient, so I did not use it. Options 2 and 4 produce the same end result. The difference is that when a duplicate is encountered, INSERT INTO ... ON DUPLICATE KEY UPDATE updates the existing row in place, while REPLACE INTO first deletes the old row and then inserts the new one, which forces the indexes to be maintained again and is therefore slower. So between 2 and 4 I chose 2. Option 3, INSERT IGNORE, turns errors raised while executing the INSERT statement into warnings (syntax errors are still reported), so a duplicate-key conflict is simply skipped; in this situation INSERT IGNORE would also work well. In the end, because I wanted the database to record how many times each record was duplicated, the program uses option 2.

5. Use curl_multi to fetch multiple pages concurrently

At first I fetched the data with a single process and a single curl handle, which was very slow: crawling all night yielded only 20,000 records. So I wondered whether, when moving on to new users, I could request several of them at once instead of one at a time, and that led me to curl_multi. The curl_multi functions run several transfers at the same time instead of one after another; the multi interface drives all of them from the calling thread, so the effect resembles a process running several threads. The following example uses curl_multi to crawl several users concurrently (it is an excerpt from a class method; getUserInfo() is the page-parsing function):

```php
$mh = curl_multi_init(); // create a new cURL multi (batch) handle
$ua = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2403.130 Safari/537.36';
$cookie = self::$user_cookie;

// Create one easy handle for a user's "about" page
$makeHandle = function ($user) use ($ua, $cookie) {
    $ch = curl_init(); // initialize a single cURL session
    curl_setopt($ch, CURLOPT_HEADER, 0);
    curl_setopt($ch, CURLOPT_URL, 'http://www.zhihu.com/people/' . $user . '/about');
    curl_setopt($ch, CURLOPT_COOKIE, $cookie);
    curl_setopt($ch, CURLOPT_USERAGENT, $ua);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_FOLLOWLOCATION, 1);
    return $ch;
};

// Start the first $max_size requests (or fewer if the list is shorter)
$total = count($user_list);
$first = min($max_size, $total);
for ($i = 0; $i < $first; $i++) {
    curl_multi_add_handle($mh, $makeHandle($user_list[$i])); // add the handle to the batch
}

$user_arr = array();
do {
    // Run the sub-connections of the current handles
    while (($cme = curl_multi_exec($mh, $active)) == CURLM_CALL_MULTI_PERFORM);
    if ($cme != CURLM_OK) {
        break;
    }
    $added = false;
    // Read information about finished transfers
    while ($done = curl_multi_info_read($mh)) {
        $ch = $done['handle'];
        $user_arr[] = array_values(getUserInfo(curl_multi_getcontent($ch)));
        curl_multi_remove_handle($mh, $ch);
        curl_close($ch);
        // Keep $max_size requests in flight: replace the finished one with the next user
        if ($i < $total) {
            curl_multi_add_handle($mh, $makeHandle($user_list[$i]));
            $i++;
            $added = true;
        }
    }
    if ($active) {
        curl_multi_select($mh, 10); // wait up to 10 s for activity on any connection
    }
} while ($active || $added); // a newly added handle still needs another curl_multi_exec() pass

curl_multi_close($mh);
return $user_arr;
```

6. HTTP 429 Too Many Requests

I used curl_multi to send many requests at once, but when 200 requests were in flight at the same time, many of them returned no data; at first this looked like packet loss. To dig further, I used curl_getinfo to print the information for each request handle. This function returns an associative array describing the transfer, and one of its fields, http_code, is the HTTP status code of the response. Many requests had an http_code of 429, which means too many requests were sent. I suspected that Zhihu had anti-crawler protection, so I tested other websites: sending 200 requests at once caused no problems there, which supported my guess that Zhihu limits the number of simultaneous requests. I kept lowering the number of concurrent requests and found that at 5 no requests failed. So in this program at most 5 requests can be in flight at a time. That is not many, but it is still an improvement over one at a time.
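One way to act on this inside the curl_multi loop is to read each finished handle's status with `curl_getinfo($handle, CURLINFO_HTTP_CODE)`, keep the 200 responses, and put 429 responses back in the queue to retry after a pause. The sketch below shows only that bookkeeping, as plain functions so it can be tried without network access. The function names, the three-way split, and the exponential backoff policy (1, 2, 4, ... seconds, capped at 60) are my own assumptions, not limits documented by Zhihu.

```php
<?php
// Sort finished requests by HTTP status code.
// $statusByUser maps user ID => HTTP code from curl_getinfo(..., CURLINFO_HTTP_CODE).
function triageResponses(array $statusByUser)
{
    $result = array('ok' => array(), 'retry' => array(), 'failed' => array());
    foreach ($statusByUser as $user => $code) {
        if ($code == 200) {
            $result['ok'][] = $user;
        } elseif ($code == 429) {
            $result['retry'][] = $user; // rate-limited: try again later
        } else {
            $result['failed'][] = $user;
        }
    }
    return $result;
}

// Seconds to wait before retry number $attempt (0-based): 1, 2, 4, ... up to $cap.
function backoffSeconds($attempt, $cap = 60)
{
    return min($cap, 1 << min($attempt, 30)); // inner min() avoids shifting too far
}
```

Capping concurrency at 5, as found above, corresponds to passing 5 as `$max_size` to the curl_multi code in section 5; the retry list would then be pushed back onto the queue after `sleep(backoffSeconds($attempt))`.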

7. Use Redis to record visited users

While crawling, I noticed that some users had already been visited and their followers and following lists already fetched. Although duplicate data is handled at the database level, the program still sends curl requests for those users, which wastes a lot of network traffic. In addition, the users still waiting to be crawled need to be stored somewhere for the next run. At first they were kept in an array, but I later needed to add multiple processes. In multi-process programming, child processes share the program code and function libraries, but each process has its own separate variables that other processes cannot read, so an array cannot be shared between them. I therefore used Redis to store both the processed users and the users still to be crawled. After a user has been processed, it is pushed onto an already_request_queue queue, and the users to be crawled (each user's followers and following lists) are pushed onto request_queue. Before each run, a user is popped from request_queue and checked against already_request_queue: if it is already there, the program moves on to the next user; otherwise it processes this one.

Example of using redis in PHP:

```php
<?php
// Requires the phpredis extension and a Redis server on localhost
$redis = new Redis();
$redis->connect('127.0.0.1', 6379);
$redis->set('tmp', 'value');
if ($redis->exists('tmp')) {
    echo $redis->get('tmp') . "\n";
}
```
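The queue logic described above can be sketched with the phpredis methods `rPush`, `lPop` and `sAdd`. One deliberate difference from the description: visited users are kept in a Redis set (the key name `already_request_set` is my own) rather than a list. Checking membership in a list means scanning it, whereas `sAdd` reports whether the member was new, so "check and mark as visited" happens in one atomic command even when several processes share the queue. The small in-memory `FakeRedis` class exists only so the logic can be tried without a server.

```php
<?php
// Pop users from request_queue until one that has not been visited yet is found.
// $redis is a phpredis Redis object (or any object with lPop/sAdd methods).
function nextUnvisitedUser($redis)
{
    // lPop returns false (not a string) once the list is empty
    while (is_string($user = $redis->lPop('request_queue'))) {
        // sAdd returns how many members were actually added: 0 means "seen before"
        if ($redis->sAdd('already_request_set', $user) > 0) {
            return $user;
        }
    }
    return null;
}

// Queue newly discovered users (e.g. the followers/following of the current user).
function enqueueUsers($redis, array $users)
{
    foreach ($users as $u) {
        $redis->rPush('request_queue', $u);
    }
}

// In-memory stand-in for trying the functions above without a Redis server.
class FakeRedis
{
    private $lists = array();
    private $sets = array();

    public function rPush($key, $value)
    {
        $this->lists[$key][] = $value;
        return count($this->lists[$key]);
    }

    public function lPop($key)
    {
        return empty($this->lists[$key]) ? false : array_shift($this->lists[$key]);
    }

    public function sAdd($key, $member)
    {
        if (isset($this->sets[$key][$member])) {
            return 0;
        }
        $this->sets[$key][$member] = true;
        return 1;
    }
}
```

With a real server, the `$redis` object from the example above can be passed in directly, e.g. `enqueueUsers($redis, array('mora-hu'));` followed by `$user = nextUnvisitedUser($redis);`.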

8. Use PHP's pcntl extension to run multiple processes

After switching to curl_multi to fetch user information concurrently, the program ran for a night and collected 100,000 records. That was still short of my goal, so I kept optimizing, and found that PHP's pcntl extension supports multi-process programming. Here is a multi-process example:

```php
<?php
// PHP multi-process demo: fork 10 child processes
for ($i = 0; $i < 10; $i++) {
    $pid = pcntl_fork();
    if ($pid == -1) {
        echo "Could not fork!\n";
        exit(1);
    }
    if (!$pid) {
        echo "child process $i running\n";
        // The child exits when done, so it does not go on to fork children of its own
        exit($i);
    }
}

// Wait for all children to finish, to avoid leaving zombie processes
while (pcntl_waitpid(0, $status) != -1) {
    $status = pcntl_wexitstatus($status);
    echo "Child $status completed\n";
}
```

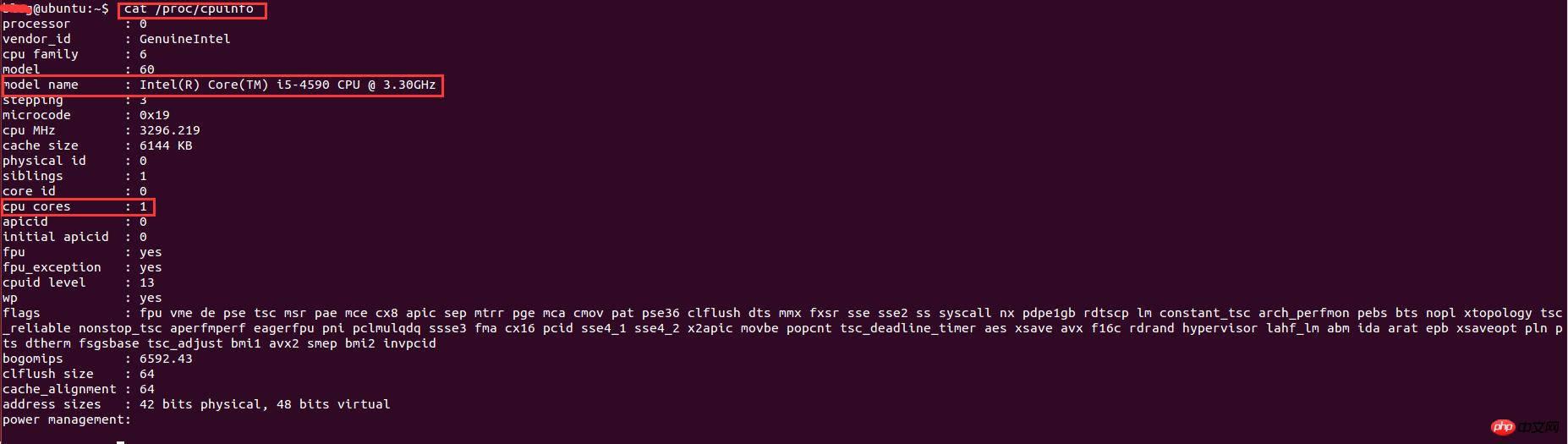

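9. Check the CPU information on Linux

After implementing multi-process programming, I wanted to run more processes to keep crawling user data. I ran 8 processes for a night but still only got 200,000 records, not much of an improvement. Reading up on CPU performance tuning, I found that the maximum number of processes should not be chosen arbitrarily but based on the number of CPU cores; a common guideline is about twice the number of cores. So you need to look at the CPU information to see how many cores there are. The command to view CPU information on Linux is: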

```bash
cat /proc/cpuinfo
```

The results are as follows:

In the output, model name is the CPU model and cpu cores is the number of cores. Here the number of cores is 1: because the system runs in a virtual machine, only a few CPU cores are allocated, so only 2 processes could be run. In the end, 1.1 million user records were crawled in a single weekend.
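To choose the process count from inside the script instead of reading /proc/cpuinfo by hand, you can count its `processor` entries, one per logical CPU, and apply the two-processes-per-core guideline from above. This is a sketch: it counts logical CPUs (hyper-threads included), which can differ from the `cpu cores` field, and the function names are my own.

```php
<?php
// Count logical CPUs in /proc/cpuinfo-style text: one "processor : N" line each.
function logicalCpuCount($cpuinfo)
{
    return preg_match_all('/^processor\s*:/m', $cpuinfo);
}

// Number of worker processes to fork: $perCpu per CPU, at least 1.
function workerCount($cpus, $perCpu = 2)
{
    return max(1, $cpus * $perCpu);
}

// Usage on Linux (an unreadable file yields 0 CPUs, hence 1 worker):
// $text = @file_get_contents('/proc/cpuinfo');
// $workers = workerCount(logicalCpuCount((string) $text));
```

The resulting `$workers` value would replace the fixed `10` in the fork loop of section 8.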

10. Redis and MySQL connection issues in multi-process programming

With multiple processes, after the program had been running for a while, data could no longer be inserted into the database, and MySQL reported a "too many connections" error. Redis had the same problem.

The following code will fail to execute:

```php
<?php
for ($i = 0; $i < 10; $i++) {
    $pid = pcntl_fork();
    if ($pid == -1) {
        echo "Could not fork!\n";
        exit(1);
    }
    if (!$pid) {
        $redis = PRedis::getInstance();
        // do something
        exit;
    }
}
```

The root cause is that each child process, when created, inherits an identical copy of the parent process. Objects can be copied, but an established connection cannot be duplicated into several independent ones: every process ends up using the same Redis connection for its own commands, which eventually causes unpredictable conflicts.

Solution: the program cannot fully guarantee that the parent process will not create a Redis connection instance before forking, so the problem has to be solved in the child process itself. If the instance a child process obtains belongs only to the current process, the problem disappears. The fix is therefore to slightly modify the static method that instantiates the Redis class so that instances are keyed by the current process ID.

The modified code is as follows:

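```php
<?php
class PRedis
{
    // ... constructor that opens the Redis connection, other methods ...

    public static function getInstance()
    {
        static $instances = array();
        $key = getmypid(); // ID of the current process
        // One instance per process, so a child never reuses the parent's connection
        if (empty($instances[$key])) {
            $instances[$key] = new self();
        }
        return $instances[$key];
    }
}
```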

11. Measuring script execution time in PHP

Because I wanted to know how long each process takes, I wrote a function to measure script execution time:

```php
<?php
function microtime_float()
{
    list($u_sec, $sec) = explode(' ', microtime());
    return (floatval($u_sec) + floatval($sec));
}

$start_time = microtime_float();
// do something
usleep(100);
$end_time = microtime_float();
$total_time = $end_time - $start_time;
$time_cost = sprintf("%.10f", $total_time);
echo "program cost total " . $time_cost . "s\n";
```
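Since PHP 5.0, `microtime(true)` returns the current Unix timestamp directly as a float, so the `explode()` step is not strictly needed. Here is a sketch of a small wrapper (the name `timeIt` is my own) that runs a callable and returns its result together with the elapsed seconds:

```php
<?php
// Run $fn and return array(result, elapsed seconds as a float).
function timeIt($fn)
{
    $start = microtime(true); // float seconds since the Unix epoch
    $result = call_user_func($fn);
    return array($result, microtime(true) - $start);
}

list($value, $seconds) = timeIt(function () {
    usleep(100); // 100 microseconds, as in the example above
    return 'done';
});
printf("program cost total %.10fs\n", $seconds);
```

On PHP 7.3 and later, `hrtime(true)` returns a monotonic nanosecond counter that is not affected by system clock adjustments, which makes it a better fit for measuring durations.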

The above is the entire content of this article for your reference. I hope it will be helpful to your study.


The above is the detailed content of PHP crawler's million-level Zhihu user data crawling and analysis. For more information, please follow other related articles on the PHP Chinese website!