When we build a regression model and want to know how well it performs, we use error metrics: values that quantify the error of the machine learning model. The metrics in this article are used to measure the error of models that predict numerical values (real numbers or integers).

In this article we will cover the main error metrics for regression algorithms. We will compute them manually in Python and use them to measure the error of a machine learning model trained on a dollar exchange-rate dataset.

The metrics covered here are closely related. SE, ME and MPE use the signed difference between the actual and predicted values, while MAE and MAPE use the absolute value of that difference. For the absolute metrics, the lower the value, the better the forecast. For the signed metrics, a value closer to zero is better, although a value near zero can hide large errors that cancel each other out.

SE (sum of errors) is the simplest metric in this article. Its formula is:

$$SE = \sum_{i=1}^{N} (R_i - P_i)$$

That is, it is the sum of the differences between the actual value R (the model's target variable) and the predicted value P. Its main weakness is that it does not use absolute values: positive and negative errors cancel each other out, so the result can understate the real error.
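A minimal sketch of SE in plain Python. The function name `se` and the sample values are hypothetical, chosen only for illustration. The example shows the weakness just described: no prediction is exact, yet the signed errors cancel out.

```python
def se(actual, predicted):
    """Sum of errors (SE): sum of the signed differences actual - predicted."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return sum(r - p for r, p in zip(actual, predicted))


# Hypothetical values: the individual errors are -1, +2 and -1.
actual = [10, 12, 9]
predicted = [11, 10, 10]
print(se(actual, predicted))  # 0, even though every prediction is wrong
```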

ME (mean error) extends SE: instead of the total, it gives the average of the errors over the number of elements N:

$$ME = \frac{1}{N} \sum_{i=1}^{N} (R_i - P_i)$$

In other words, we simply divide the SE result by the number of elements. Like SE, this metric is scale-dependent: its value is expressed in the units of the target variable, so it can only be used to compare different forecasting models evaluated on the same dataset.
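A sketch of ME under the same assumptions (hypothetical function name `me` and illustrative values). It is the SE computation divided by the number of elements N.

```python
def me(actual, predicted):
    """Mean error (ME): sum of signed errors divided by the number of elements N."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(r - p for r, p in zip(actual, predicted)) / len(actual)


# Hypothetical values: the errors are 1, 1, -2 and 2, so SE = 2 and N = 4.
actual = [10, 12, 8, 14]
predicted = [9, 11, 10, 12]
print(me(actual, predicted))  # 0.5
```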

MAE (mean absolute error) is ME computed with absolute (non-negative) values. The difference between the actual and predicted values can be negative, and the previous metrics keep that sign. In MAE, each difference is first converted to its absolute value and then averaged over the number of elements, so errors can no longer cancel each other out.

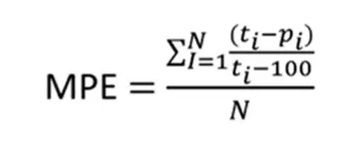
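In symbols, $$MAE = \frac{1}{N} \sum_{i=1}^{N} |R_i - P_i|$$. The sketch below (hypothetical function name `mae`) reuses the values from the ME example. ME was 0.5 there, but MAE is 1.5, because the negative error of -2 no longer offsets the positive ones.

```python
def mae(actual, predicted):
    """Mean absolute error (MAE): average of |actual - predicted|."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(r - p) for r, p in zip(actual, predicted)) / len(actual)


# Same hypothetical values as the ME example: absolute errors 1, 1, 2 and 2.
actual = [10, 12, 8, 14]
predicted = [9, 11, 10, 12]
print(mae(actual, predicted))  # 1.5
```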

MPE (mean percentage error) is the average of the errors expressed as percentages. For each element, we take the difference between the actual and predicted values, divide it by the actual value and multiply by 100. We then add up these percentages and divide by the number of elements to obtain the average. Because it is expressed as a percentage, this metric is scale-independent.
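In symbols, $$MPE = \frac{100}{N} \sum_{i=1}^{N} \frac{R_i - P_i}{R_i}$$. In this sketch (hypothetical function name `mpe`, illustrative values), the guard for zero reflects that the percentage is undefined when an actual value is zero.

```python
def mpe(actual, predicted):
    """Mean percentage error (MPE): average of 100 * (actual - predicted) / actual."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    if any(r == 0 for r in actual):
        raise ValueError("MPE is undefined when an actual value is zero")
    return sum(100 * (r - p) / r for r, p in zip(actual, predicted)) / len(actual)


# Hypothetical values: percentage errors of 10%, -5%, 0% and 5%.
actual = [100, 200, 50, 400]
predicted = [90, 210, 50, 380]
print(mpe(actual, predicted))  # 2.5
```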

MAPE (mean absolute percentage error) is very similar to MPE, but the difference between the actual and predicted values is taken in absolute value, so only positive percentages are averaged. Like MPE, this metric is scale-independent.
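In symbols, $$MAPE = \frac{100}{N} \sum_{i=1}^{N} \left| \frac{R_i - P_i}{R_i} \right|$$. With the same hypothetical values as the MPE sketch, MAPE is 5.0 while MPE was 2.5. The -5% error offsets part of the positive ones in MPE but not in MAPE.

```python
def mape(actual, predicted):
    """Mean absolute percentage error (MAPE): average of 100 * |actual - predicted| / |actual|."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    if any(r == 0 for r in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return sum(100 * abs(r - p) / abs(r) for r, p in zip(actual, predicted)) / len(actual)


# Hypothetical values: absolute percentage errors of 10%, 5%, 0% and 5%.
actual = [100, 200, 50, 400]
predicted = [90, 210, 50, 380]
print(mape(actual, predicted))  # 5.0
```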

With each metric explained, we will now compute all of them manually in Python, based on the predictions of a machine learning model for the dollar exchange rate. Most regression metrics are already available as ready-made functions in the scikit-learn (Sklearn) package, but here we compute them by hand for teaching purposes only.

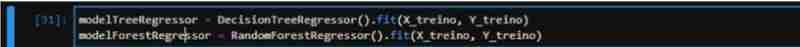

We will use the RandomForest and Decision Tree algorithms only to compare results between the two models.

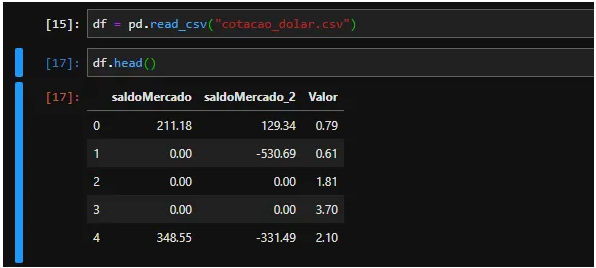

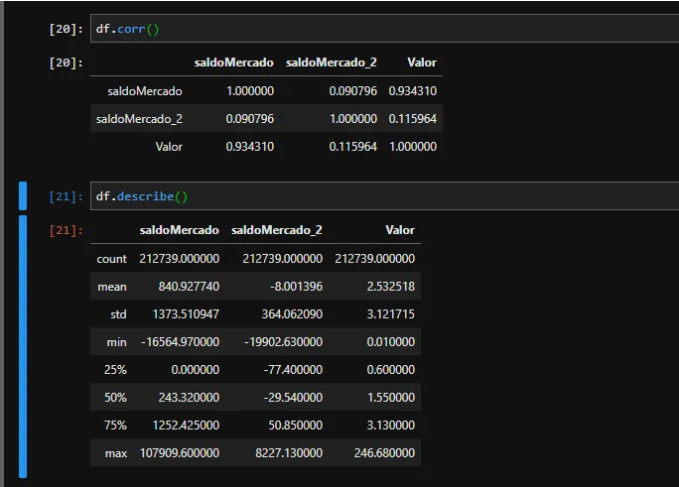

Our dataset has the columns SaldoMercado and saldoMercado_2, which contain information that influences the Value column (our dollar quote). As we can see, SaldoMercado is more closely related to the quote than saldoMercado_2. We can also see that there are no missing (NaN) or infinite values, and that the saldoMercado_2 column contains many negative values.

We prepare the data for the machine learning model by defining the predictor variables and the variable we want to predict. We use train_test_split to randomly split the data into 70% for training and 30% for testing.

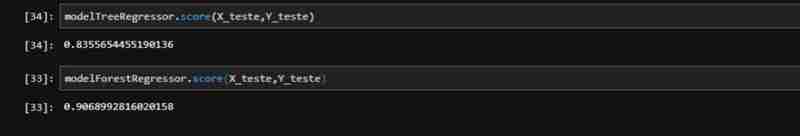
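The split can be sketched with scikit-learn's `train_test_split`. The article's dataset is not reproduced here, so the sketch builds a small synthetic DataFrame from hypothetical random data. Only the column names `SaldoMercado`, `saldoMercado_2` and `Value` come from the text, and `random_state=42` is an arbitrary choice that makes the split reproducible.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical synthetic data standing in for the dollar-quote dataset.
rng = np.random.default_rng(42)
n = 100
df = pd.DataFrame({
    "SaldoMercado": rng.normal(0, 1, n),
    "saldoMercado_2": rng.normal(0, 1, n),
})
# Make Value depend mostly on SaldoMercado, plus a little noise.
df["Value"] = 5 + 0.3 * df["SaldoMercado"] + rng.normal(0, 0.05, n)

X = df[["SaldoMercado", "saldoMercado_2"]]  # predictor variables
y = df["Value"]                             # target: the dollar quote

# 30% of the rows go to the test set, 70% to the training set.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)
print(len(X_train), len(X_test))  # 70 30
```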

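Finally, we initialize both algorithms (RandomForestRegressor and DecisionTreeRegressor), fit them to the training data and score both on the test data. We obtained a score of 0.83 for DecisionTreeRegressor and 0.90 for RandomForestRegressor. For scikit-learn regressors, `score` returns the coefficient of determination R² rather than an accuracy, so the higher value suggests that RandomForestRegressor performed better.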

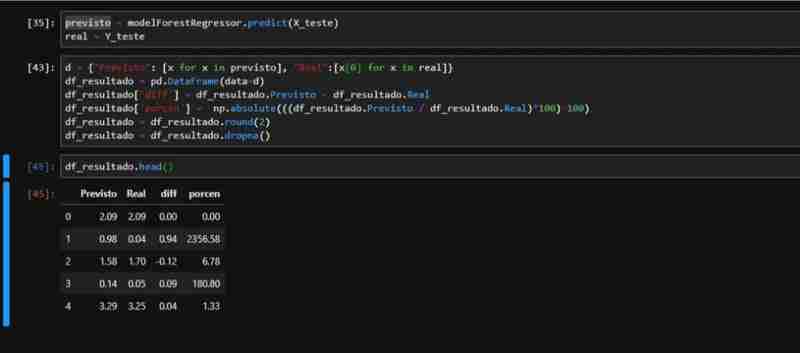

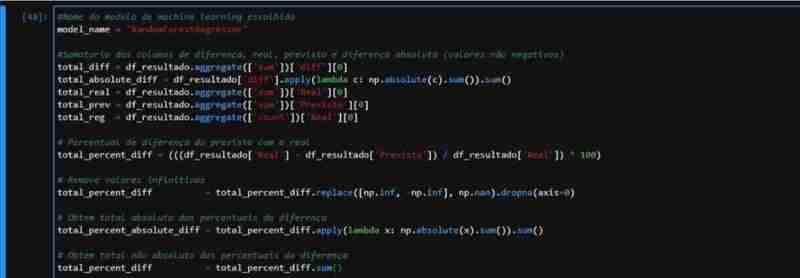
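A minimal sketch of this step, assuming the scikit-learn estimators `DecisionTreeRegressor` and `RandomForestRegressor`. The data is hypothetical and synthetic, and the `random_state` values are arbitrary, so the scores will not match the 0.83 and 0.90 reported above.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Hypothetical synthetic data: two predictors, only the first one is informative.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = 5 + 0.3 * X[:, 0] + rng.normal(0, 0.05, 200)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

models = {
    "DecisionTreeRegressor": DecisionTreeRegressor(random_state=0),
    "RandomForestRegressor": RandomForestRegressor(n_estimators=100, random_state=0),
}
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # For regressors, score() returns R^2 on the given data, not an accuracy.
    scores[name] = model.score(X_test, y_test)
    print(f"{name}: R^2 = {scores[name]:.3f}")
```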

Given the better observed performance of RandomForestRegressor, we use it to build a dataset with the data needed to apply the metrics. We run the prediction on the test data and create a DataFrame with the actual and predicted values, plus columns for the difference and the percentage difference.

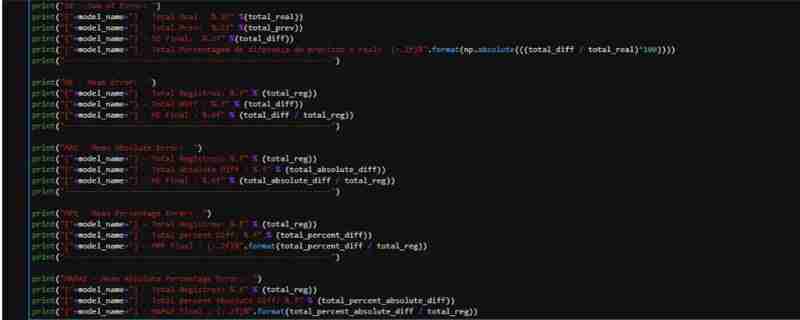
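Once the DataFrame has a difference column and a percentage column, each metric becomes a one-line pandas expression. In this sketch the column names (`actual`, `predicted`, `difference`, `percentage`) and values are hypothetical. In the article, the actual values would come from the test split and the predicted values from `RandomForestRegressor.predict`.

```python
import pandas as pd

# Hypothetical actual vs predicted values (not the article's results).
results = pd.DataFrame({
    "actual": [100.0, 200.0, 50.0, 400.0],
    "predicted": [90.0, 210.0, 50.0, 380.0],
})
results["difference"] = results["actual"] - results["predicted"]
results["percentage"] = 100 * results["difference"] / results["actual"]

metrics = {
    "SE": results["difference"].sum(),            # signed sum of errors
    "ME": results["difference"].mean(),           # signed mean error
    "MAE": results["difference"].abs().mean(),    # mean absolute error
    "MPE": results["percentage"].mean(),          # signed mean percentage error
    "MAPE": results["percentage"].abs().mean(),   # mean absolute percentage error
}
print(metrics)
```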

Comparing the actual dollar rates with the rates our model predicted, we can observe:

I should stress that we performed these calculations manually for teaching purposes. In practice, it is better to use the metric functions in the scikit-learn (Sklearn) package, which are efficient and less prone to calculation errors.
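For MAE and MAPE, scikit-learn provides `mean_absolute_error` and `mean_absolute_percentage_error` in `sklearn.metrics`. The sketch uses hypothetical values. Note that `mean_absolute_percentage_error` (available since scikit-learn 0.24) returns a fraction, not a percentage, so it has to be multiplied by 100 to match the manual MAPE.

```python
from sklearn.metrics import mean_absolute_error, mean_absolute_percentage_error

# Hypothetical actual vs predicted values.
actual = [100.0, 200.0, 50.0, 400.0]
predicted = [90.0, 210.0, 50.0, 380.0]

mae = mean_absolute_error(actual, predicted)
# scikit-learn returns MAPE as a fraction (about 0.05 here), so scale it to percent.
mape_pct = 100 * mean_absolute_percentage_error(actual, predicted)

print(mae, mape_pct)
```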

The complete code is available on my GitHub: github.com/AirtonLira/artigo_metricasregressao

Author: Airton Lira Junior

LinkedIn: linkedin.com/in/airton-lira-junior-6b81a661/

The above is the detailed content of Metrics for regression algorithms. For more information, please follow other related articles on the PHP Chinese website!