Today I will explain how to work with Amazon Bedrock safely and reliably while learning a little about coffee.

You will learn how to use the Amazon Bedrock API for text and multimodal models from Python to generate a name, a logo and a menu for your coffee shop, and how to create an agent that connects to the Shopify API to take orders.

Shopify is (in my opinion) the best eCommerce platform that exists.

And just like AWS, Shopify has an API for everything and a platform for developers.

Finally, you will create a frontend with Streamlit to provide a good user experience and bring your agent to life.

Opening a coffee shop, or coming up with creative ideas for any business, is an excellent opportunity to rely on generative AI (GenAI) and get the most out of it.

Amazon Bedrock lets you use it, but how do you consume the service?

Every service in AWS has an API, and Amazon Bedrock is no exception. Below I explain how to consume the Amazon Bedrock API through an example that generates names and a menu for a grab-and-go coffee shop.

I also show you how to call a multimodal model capable of analyzing images.

How to write a Python script that invokes Amazon Bedrock, either locally or in a Lambda function:

First, enable access to the models in Bedrock (instructions here).

Requirements:

Step 1) Create a Python virtual environment (instructions here).

In the bedrock_examples folder of this repository you will find the examples used below to invoke the foundation model.

In the prompts folder you will find example prompts to generate the name and the menu, plus a prompt for an image generation model. You can use them both in the Amazon Bedrock playground and when calling the API from Python.

Step 2) Install the requirements

```bash
pip install -r requirements.txt
```

Step 3) Configure Boto3 (more info about boto3)

Here I configure the AWS session to use the genaiday profile configured on my computer and create the bedrock-runtime client, which lets me invoke the foundation model.

```python
import boto3

# Change the AWS Region and profile to match your setup
region = "us-east-1"

aws = boto3.session.Session(profile_name='genaiday', region_name=region)
client = aws.client('bedrock-runtime')
```
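Since the goal is to call Bedrock reliably, it can help to let the SDK retry throttled or transient errors instead of failing on the first one. Below is a minimal sketch, assuming you want to override the profile and Region through the standard `AWS_PROFILE` and `AWS_DEFAULT_REGION` environment variables. The function names and the `genaiday`/`us-east-1` defaults are illustrative assumptions, not part of the repository.

```python
import os


def resolve_aws_settings(env=None):
    """Return (profile, region), preferring environment variables.

    The defaults are placeholders for this example (assumption).
    """
    env = os.environ if env is None else env
    profile = env.get("AWS_PROFILE", "genaiday")
    region = env.get("AWS_DEFAULT_REGION", "us-east-1")
    return profile, region


def make_runtime_client(profile, region, max_attempts=5):
    # Imported lazily so resolve_aws_settings works even without boto3 installed
    import boto3
    from botocore.config import Config

    # "standard" retry mode retries throttling and transient errors with
    # exponential backoff; max_attempts limits the retries after the first call.
    retry_config = Config(retries={"max_attempts": max_attempts, "mode": "standard"})
    session = boto3.session.Session(profile_name=profile, region_name=region)
    return session.client("bedrock-runtime", config=retry_config)
```

Usage: `profile, region = resolve_aws_settings()` followed by `client = make_runtime_client(profile, region)` gives you a client that can replace the one above.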

Step 4) Example: Invoke a text model

This function calls the invoke_model method with the prompt provided by the user and returns the model's response:

```python
import json


def call_text(prompt, modelId="anthropic.claude-3-haiku-20240307-v1:0"):
    # This function calls a text model.
    # It uses the bedrock-runtime `client` created in Step 3.
    config = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 4096,
        "messages": [
            {
                "role": "user",
                "content": [{
                    "type": "text",
                    "text": prompt
                }]
            }
        ]
    }
    body = json.dumps(config)
    accept = "application/json"
    contentType = "application/json"

    response = client.invoke_model(
        body=body, modelId=modelId, accept=accept, contentType=contentType)

    response_body = json.loads(response.get("body").read())
    # The first content block of the response holds the generated text
    results = response_body.get("content")[0].get("text")
    return results
```

The most important part is the message sent to the model:

```python
{
    "role": "user",
    "content": [{
        "type": "text",
        "text": prompt
    }]
}
```

Then, to invoke the model, call the function with your prompt:

```python
print("Haiku")
print(call_text("I'm looking to open a grab-and-go coffee shop. Give me 5 names for it."))
```

Step 5) Example: Invoke a multimodal model

Here the process is similar; you only have to add the MIME type of the file you send. For this, there is a function that obtains the MIME type based on the file name:

```python
import mimetypes


def read_mime_type(file_path):
    # This hack is for Python versions earlier than 3.13,
    # which may not map .webp files to a MIME type
    mimetypes.add_type('image/webp', '.webp')
    # guess_type returns (type, encoding); we only need the type
    mime_type = mimetypes.guess_type(file_path)
    return mime_type[0]
```

The messages now include an image block, with the file encoded in base64, followed by the text block:

```python
"messages": [
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {
                    "type": "base64",
                    "media_type": read_mime_type(file),
                    "data": base64.b64encode(open(file, "rb").read()).decode("utf-8")
                }
            },
            {
                "type": "text",
                "text": caption
            }]
    }
]
```
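Reading the file inline with `open(file, "rb").read()` works for a quick test, but it is easier to validate the image before calling the model. Below is a minimal sketch of a helper that builds the user message from raw bytes and rejects formats the model would not accept. The helper names `image_block` and `user_message` are hypothetical, and the supported set (JPEG, PNG, GIF, WebP) is assumed from the image formats documented for Claude 3 models.

```python
import base64
import mimetypes

# Image formats accepted by Claude 3 models in base64 image blocks (assumption)
SUPPORTED_IMAGE_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def image_block(data: bytes, file_name: str) -> dict:
    """Build an Anthropic Messages image block from raw bytes."""
    # Same .webp workaround used by read_mime_type
    mimetypes.add_type("image/webp", ".webp")
    media_type, _ = mimetypes.guess_type(file_name)
    if media_type not in SUPPORTED_IMAGE_TYPES:
        raise ValueError(f"Unsupported image type for {file_name!r}: {media_type}")
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": media_type,
            "data": base64.b64encode(data).decode("utf-8"),
        },
    }


def user_message(image_bytes: bytes, file_name: str, caption: str) -> dict:
    # The image block goes first, followed by the text instruction
    return {
        "role": "user",
        "content": [
            image_block(image_bytes, file_name),
            {"type": "text", "text": caption},
        ],
    }
```

Failing early with a `ValueError` avoids spending a Bedrock call on a file, such as a PDF menu, that the model would reject.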

The complete function that invokes the multimodal model looks like this:

```python
import base64
import json


def call_multimodal(file, caption, modelId="anthropic.claude-3-haiku-20240307-v1:0"):
    # This function calls a multimodal model with an image and a text.
    # It uses read_mime_type and the bedrock-runtime `client` defined above.
    with open(file, "rb") as f:
        image_data = base64.b64encode(f.read()).decode("utf-8")

    config = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 4096,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": read_mime_type(file),
                            "data": image_data
                        }
                    },
                    {
                        "type": "text",
                        "text": caption
                    }]
            }
        ]
    }
    body = json.dumps(config)
    accept = "application/json"
    contentType = "application/json"

    response = client.invoke_model(
        body=body, modelId=modelId, accept=accept, contentType=contentType)

    response_body = json.loads(response.get("body").read())
    results = response_body.get("content")[0].get("text")
    return results
```

To create an Amazon Bedrock agent:

Make sure the Bedrock models you want to use have access enabled (instructions here). In this case we will use Claude 3 Haiku and Sonnet.

Then create the Bedrock agent in the AWS console:

1) Go to the Bedrock service.

2) Agents.

3) Create agent.

4) Give the agent a name, in our case "Pause-Coffee-Agent".

5) The description is optional.

6) One of the most important steps is choosing the foundation model that will make the agent work properly. If you want to know how to choose the model that best suits your use case, here is a guide on the Amazon Bedrock service: Model Evaluation.

7) The next step is the prompt that will guide the model. Be as precise as possible and bring out your prompt engineering skills. If you don't know where to start, I recommend visiting this guide, where you will find the best guidelines for the model you are using; another very useful resource is the Anthropic Console.

I wrote the prompt for the example agent in English, and I recommend you do the same: the models were trained mostly on English text, and writing in that language sometimes helps avoid erroneous behavior.

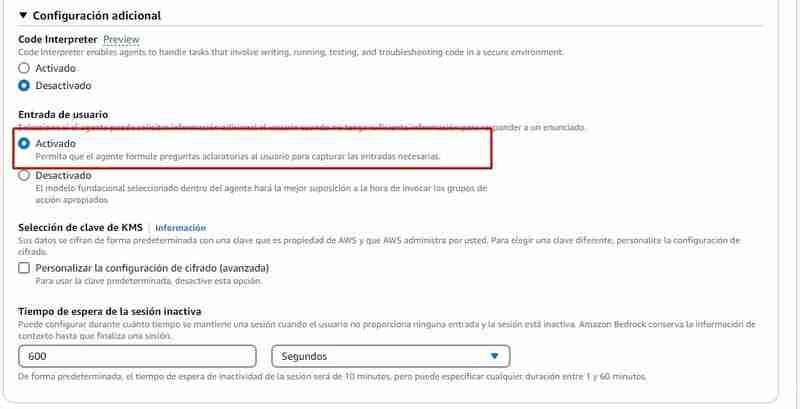

8) Additional settings: enable user input so the agent can ask the user for any information it is missing to process the order, for example the products the customer wants and the customer's name.

9) Action groups: an action group defines the actions the agent can perform to help the user. For example, you can define an action group called TakeOrder that groups the actions needed to take an order.

For each action in the action group you define its name, a description and its parameters:

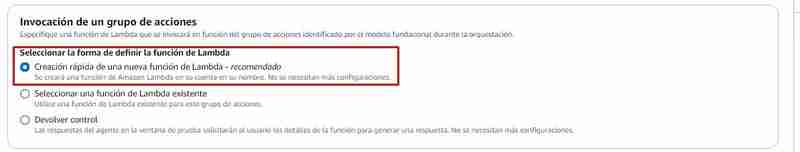

To run, action groups usually invoke a Lambda function. From Bedrock you can either quickly create a new Lambda function or select an existing one.

If you choose to create the Lambda function from the Bedrock console, a Python function with basic source code is created, which you then have to modify. In this repo, the file agents/action_group/lambda.py contains the modified example code that makes it work with the agent.

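When the agent calls the Lambda function, the event it sends contains the information you need, such as actionGroup (the action group), function (the name of the action being called) and parameters (the values collected from the user, each with a name, type and value).

In this example there are two actions: get_products and one that creates the order with the customer's data. The order action takes these parameters:

| Parameter | Description | Type | Required |
|---|---|---|---|
| customerEmail | Email of the customer | string | False |
| customerName | Name of the customer | string | True |
| products | SKUs and quantities to add to the cart, in the format `[{ variantId: variantId, quantity: QUANTITY }]` | array | True |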
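The agent passes these values in the `parameters` list of the Lambda event. Below is a minimal sketch of turning that list into a dictionary and decoding `products`, assuming the array arrives as a JSON string. If the model produces the unquoted format shown in the table instead, you would need more lenient parsing. `params_to_dict` and `parse_products` are hypothetical helper names.

```python
import json


def params_to_dict(parameters):
    """Turn the agent's [{"name", "type", "value"}, ...] list into a dict."""
    return {p["name"]: p.get("value") for p in parameters or []}


def parse_products(value):
    """Decode the products parameter.

    Assumption: the array arrives as a JSON string such as
    '[{"variantId": "111", "quantity": 2}]'.
    """
    products = json.loads(value) if isinstance(value, str) else value
    for item in products:
        # Reject empty or negative quantities before touching the cart
        if int(item["quantity"]) <= 0:
            raise ValueError(f"Invalid quantity for variant {item['variantId']}")
    return products
```

Optional parameters such as `customerEmail` are simply missing from the dictionary when the user did not provide them, so read them with `.get()`.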

For example, when the agent calls the get_products action, the Lambda function handles it with a get_products function that queries the Shopify API (for educational purposes, it returns all the products).

If you want this to work with Shopify, you must replace the Shopify connection variables in the code with those of your store.
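As an illustration only, here is a sketch of a get_products function that queries the Shopify Admin GraphQL API using only the standard library. The store domain, API version, access token and query are hypothetical placeholders, and the Lambda function in the repo may use a different endpoint or client.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your store's values
SHOP_DOMAIN = "your-store.myshopify.com"
API_VERSION = "2024-04"
ACCESS_TOKEN = "shpat_xxx"

# Example query: product titles with their variant IDs and prices
PRODUCTS_QUERY = """
{
  products(first: 20) {
    edges { node { title variants(first: 5) { edges { node { id price } } } } }
  }
}
"""


def build_products_request(shop=SHOP_DOMAIN, version=API_VERSION, token=ACCESS_TOKEN):
    # Admin GraphQL endpoint, authenticated with the store's access token
    url = f"https://{shop}/admin/api/{version}/graphql.json"
    body = json.dumps({"query": PRODUCTS_QUERY}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "X-Shopify-Access-Token": token},
        method="POST",
    )


def get_products():
    # Performs the HTTP call; the timeout keeps the Lambda from hanging
    with urllib.request.urlopen(build_products_request(), timeout=10) as resp:
        return json.loads(resp.read())
```

Keeping the request construction separate from the network call makes it easy to check the URL and headers without contacting the store.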

Then, in the handler of the Lambda function, the name of the called function is checked and the response is returned in the format that the action group expects.

This logic is part of the Lambda function found here.
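The handler logic described above can be sketched as follows. The sketch assumes an action group defined with function details, whose Lambda event carries `actionGroup`, `function` and `parameters`. `create_order` is a hypothetical name for the order action, and both action functions are stubs standing in for the real Shopify calls.

```python
import json


def get_products():
    # Hypothetical stub: the real function queries the Shopify API
    return [{"title": "Espresso", "variantId": "111", "price": "2.50"}]


def create_order(params):
    # Hypothetical stub: the real function would create the order in Shopify
    return {"status": "created", "customerName": params.get("customerName")}


def lambda_handler(event, context):
    function = event["function"]
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}

    # Dispatch on the name of the function the agent decided to call
    if function == "get_products":
        result = get_products()
    elif function == "create_order":
        result = create_order(params)
    else:
        result = {"error": f"Unknown function {function}"}

    # Response shape expected by action groups defined with function details
    return {
        "messageVersion": event.get("messageVersion", "1.0"),
        "response": {
            "actionGroup": event["actionGroup"],
            "function": function,
            "functionResponse": {
                "responseBody": {"TEXT": {"body": json.dumps(result)}}
            },
        },
    }
```

Returning the result as JSON text inside `TEXT.body` lets the model read the product list or order status and phrase the answer for the customer.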

10) Press Save and Exit, and that's it! The agent is ready to be tested.

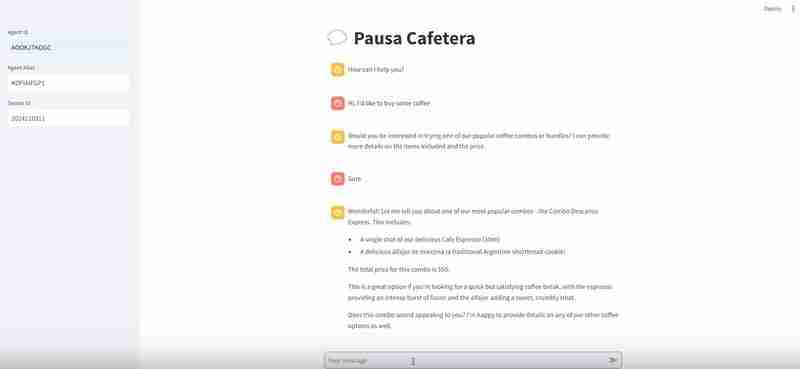

The next step is to test the agent and validate that it works. You can test it from the Bedrock console, and if you click "Show trace" during the conversation, it shows you the agent's reasoning process. Pay special attention here: adjust the prompt as needed, or try another model if the one you chose does not behave as you expected.

Once you are satisfied with the agent, you can create an alias. An alias is an ID through which you can invoke the agent from the Amazon Bedrock API. When you create an alias, a new version of the agent is created automatically, or you can point the alias to an existing version. Having different aliases and versions helps you control the agent's deployment process.

Then all that remains is to note down the ID of the production alias, the one that points to the version you want to be live.
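Aliases can also be created from code with the `bedrock-agent` client's `create_agent_alias` call. Below is a minimal sketch that either lets Bedrock create a new version or pins the alias to an existing one through `routingConfiguration`. The helper name `create_alias` is hypothetical, and the client is passed in so you can use `boto3.client("bedrock-agent")` or a test double.

```python
def create_alias(agent_client, agent_id, alias_name, agent_version=None):
    """Create an agent alias and return its ID."""
    kwargs = {"agentId": agent_id, "agentAliasName": alias_name}
    if agent_version is not None:
        # Point the alias at an existing version instead of creating a new one
        kwargs["routingConfiguration"] = [{"agentVersion": agent_version}]
    response = agent_client.create_agent_alias(**kwargs)
    return response["agentAlias"]["agentAliasId"]
```

For example, a "prod" alias pinned to a tested version and a "dev" alias that gets a fresh version on each creation keep experiments away from live customers.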

How to invoke the agent

For this, in the agents/frontend folder I have left a file called agent.py.

The frontend uses Streamlit, a powerful framework for building sample machine learning applications.

The part of agent.py that invokes the agent uses boto3 to call the AWS API: it creates a bedrock-agent-runtime client, which lets us invoke the agent.

The parameters we need to pass to its invoke_agent method are the agent ID, the agent alias ID, a session ID that identifies the conversation, and the input text.

In this example, I define the agent ID and the alias ID as variables in agent.py.
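A minimal sketch of that call follows. It sends the user's text with `invoke_agent` and joins the `chunk` events of the streamed `completion` into the final answer. The helper name `ask_agent` is hypothetical, and the client is passed in (for example `boto3.client("bedrock-agent-runtime")`) so it can be swapped for a test double.

```python
import uuid


def ask_agent(agent_client, agent_id, agent_alias_id, text, session_id=None, enable_trace=False):
    """Send text to a Bedrock agent and return (answer, session_id)."""
    # Reusing the same session ID lets the agent remember the conversation
    session_id = session_id or str(uuid.uuid4())
    response = agent_client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=text,
        enableTrace=enable_trace,
    )
    # The answer arrives as an event stream; the text is in the "chunk" events
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts), session_id
```

In a Streamlit app you would typically keep the returned session ID in `st.session_state` so that every message of the same chat goes to the same agent session.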

To run the frontend, first enable access to the models in Bedrock (instructions here).

Requirements:

I recommend creating a Python virtual environment (instructions here).

```bash
pip install -r requirements.txt
```

Then, from the agents/frontend folder, start Streamlit:

```bash
streamlit run agent.py
```

This starts Streamlit on port 8501; visit http://localhost:8501/ to see the frontend that invokes the agent.

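If you have followed all the steps, you now have names and a menu for your coffee shop generated with Amazon Bedrock, an agent that takes orders through the Shopify API, and a Streamlit frontend to talk to it.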

Some links to continue your path in generative AI:

AWS generative AI workshop

Bedrock Knowledge Bases

Anthropic Console (to debug our prompts)

Community.aws (more articles written by and for the community)
