Because PhantomJS is a headless browser that executes JavaScript, it can also query and manipulate the DOM of a loaded page, which makes it well suited to web crawling.

For example, suppose we want to scrape all of the "Today in History" entries from http://www.todayonhistory.com/ in one batch.

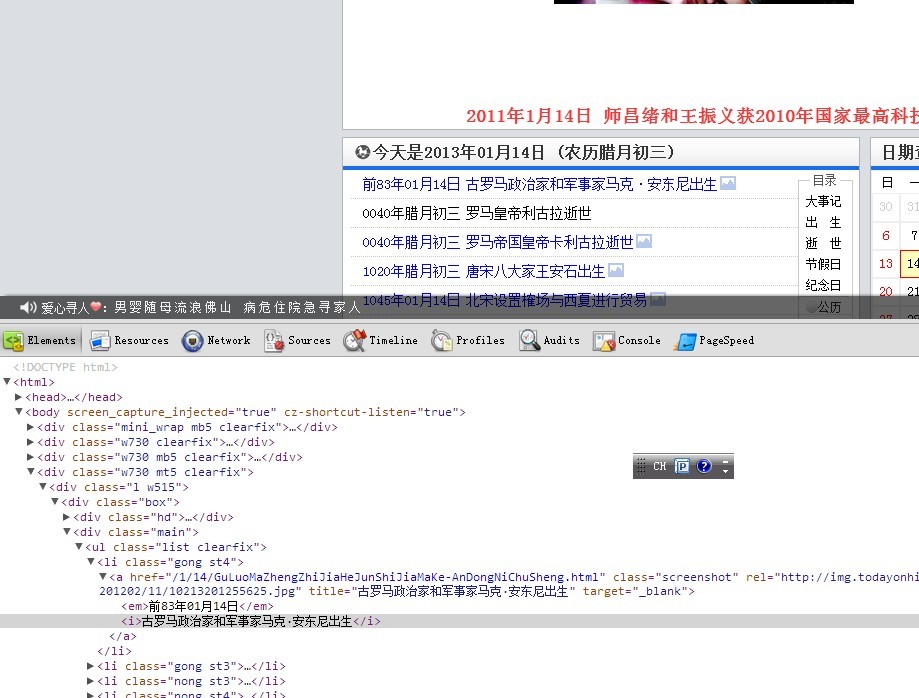

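Inspecting the DOM structure shows that we only need the `title` attribute of each `.list li a` link. So we use `document.querySelectorAll` with that CSS selector to collect the links and join their titles into a newline-separated string: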

```javascript
// Collect the title attribute of every matching link, one per line
var d = '';
var c = document.querySelectorAll('.list li a');
var l = c.length;
for (var i = 0; i < l; i++) {
  d = d + c[i].title + '\n';
}
```

All that remains is to run this code inside PhantomJS:

var page = require('webpage').create();

page.open('http://www.todayonhistory.com/', function (status) { //打开页面

if (status !== 'success') {

console.log('FAIL to load the address');

} else {

console.log(page.evaluate(function () {

var d= ''

var c = document.querySelectorAll('.list li a')

var l = c.length;

for(var i =0;i<l;i++){

d=d+c[i].title+'\n'

}

return d

}))

}

phantom.exit();

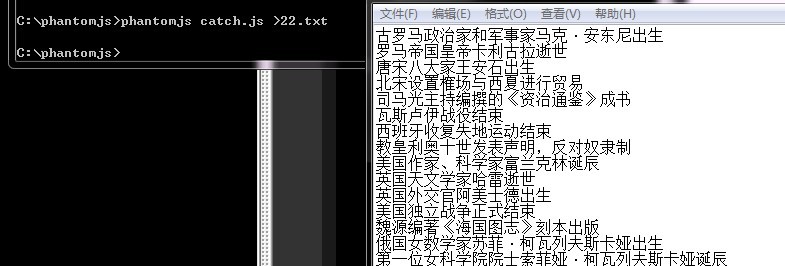

});Finally we save it as catch.js, execute it in dos, and output the content to a txt file (you can also use the file api of phantomjs to write)
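The title-collecting loop can also be written more compactly with `Array.prototype.map`. The sketch below is our own ES5-style alternative, not the original author's code: `collectTitles` is a hypothetical helper name, and the plain objects with a `title` property stand in for real DOM links so the snippet can run outside a browser. Unlike the loop, it does not leave a trailing newline after the last title.

```javascript
// Hypothetical helper: join the `title` of each element in an array-like list
// (e.g. the NodeList from document.querySelectorAll('.list li a')).
// ES5 only, so it also works in PhantomJS's older WebKit engine.
function collectTitles(links) {
  return Array.prototype.map.call(links, function (a) {
    return a.title;
  }).join('\n');
}

// Stand-in objects instead of real DOM elements (assumption for illustration)
var fakeLinks = [{ title: 'Event A' }, { title: 'Event B' }];

// Prints two lines: Event A, then Event B
console.log(collectTitles(fakeLinks));
```

Keep in mind that `page.evaluate` runs its callback inside the page, isolated from the PhantomJS script, so a helper defined at the top level of catch.js is not visible there. To use this version in the crawler, place the `Array.prototype.map` call directly inside the function passed to `page.evaluate`.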