Even without OpenAI, Microsoft could become a leader in AI!

The Information reported that Microsoft is developing its first in-house large model, MAI-1, with about 500 billion parameters.

This may be Nadella's moment to lead the team and prove himself.

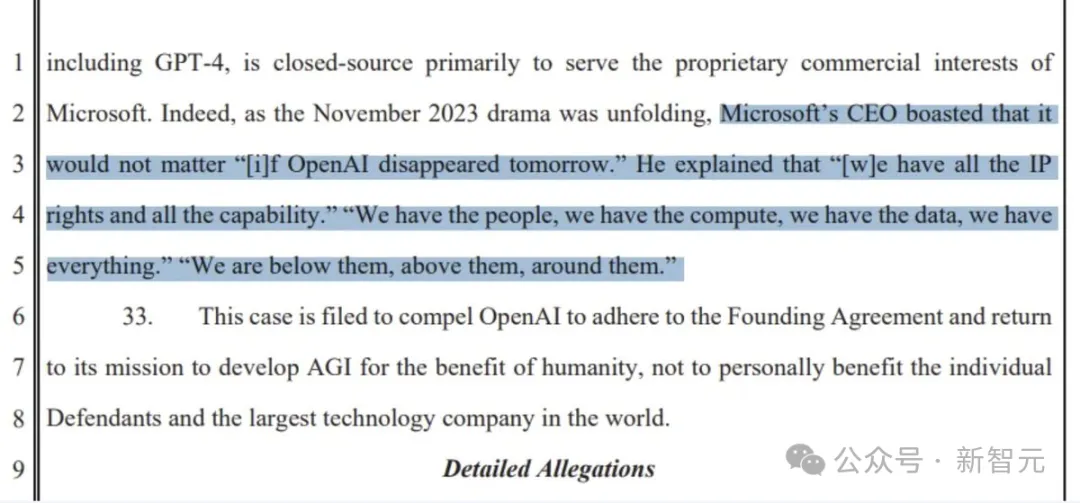

After investing more than US$10 billion in OpenAI, Microsoft gained the right to use advanced models such as GPT-3.5 and GPT-4, but relying on them was never a long-term solution.

There were even rumors that Microsoft had been reduced to OpenAI's IT department.

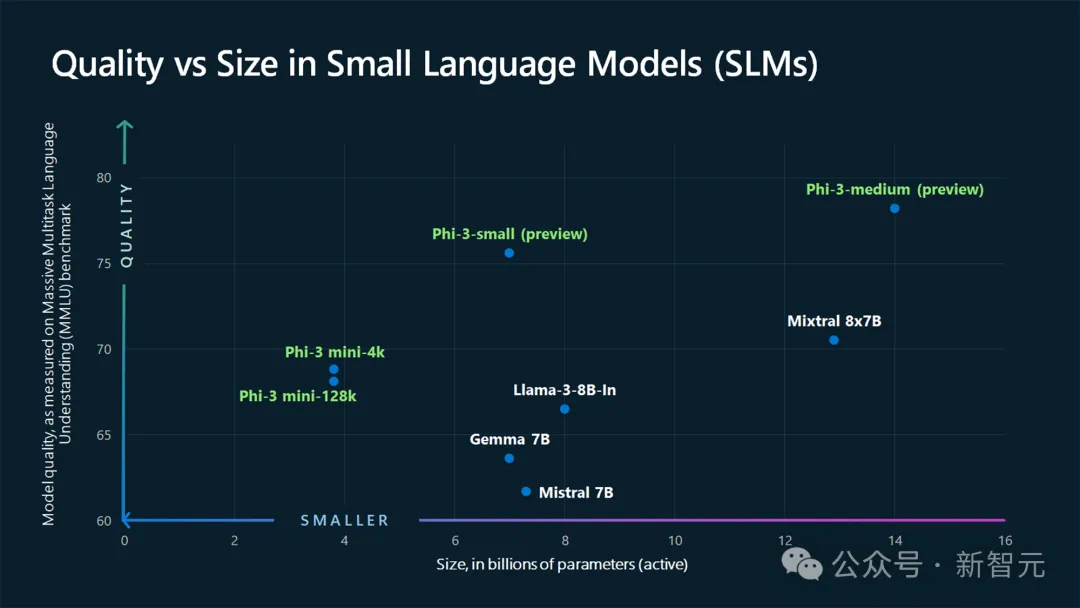

Over the past year, Microsoft's LLM research has focused mainly on its small Phi models, such as the open-source Phi-3.

As for large models, apart from the Turing series, Microsoft had not disclosed any internal work.

Just today, Microsoft Chief Technology Officer Kevin Scott confirmed that the MAI large model is indeed under development.

Clearly, the goal of Microsoft's secret large-model effort is a new LLM that can compete with the top models from OpenAI, Google, and Anthropic.

After all, Nadella once said, "If OpenAI disappeared tomorrow, it wouldn't matter."

"We have talents, computing power, and data. We lack nothing. We are below them, above them, and around them."

Microsoft's confidence, it seems, comes from within.

According to reports, MAI-1 is being overseen by Mustafa Suleyman, a co-founder and former executive of Google DeepMind.

Before joining Microsoft, Suleyman was co-founder and CEO of the AI startup Inflection AI.

Inflection AI was founded in 2022. Within a year, he led the team in launching the Inflection large model (now at version 2.5) and Pi, a high-EQ AI assistant with over one million daily users.

However, having failed to find a viable business model, Suleyman, another co-founder, and most of the staff joined Microsoft in March.

Suleyman and his team are now responsible for the new MAI-1 project and will bring their experience with cutting-edge large models to it.

It should be stressed, though, that MAI-1 is developed in-house by Microsoft and is not derived from Inflection's models.

According to two Microsoft employees, "MAI-1 is different from models previously released by Inflection." However, Inflection's training data and techniques may be used in training it.

At 500 billion parameters, MAI-1 will be far larger than any of the small open-source models Microsoft has trained before.

It will also require far more compute and data, and training it will be expensive.

To train the new model, Microsoft has set aside a large number of servers equipped with NVIDIA GPUs and has been assembling training data for it.

This data includes text generated by GPT-4 as well as various datasets from external sources, such as public internet data.

For comparison, GPT-4 is reported to have 1.8 trillion parameters, while the smaller open-source models released by AI companies such as Meta and Mistral have around 70 billion parameters.

Of course, Microsoft is pursuing a multi-pronged strategy, developing large and small models in parallel.

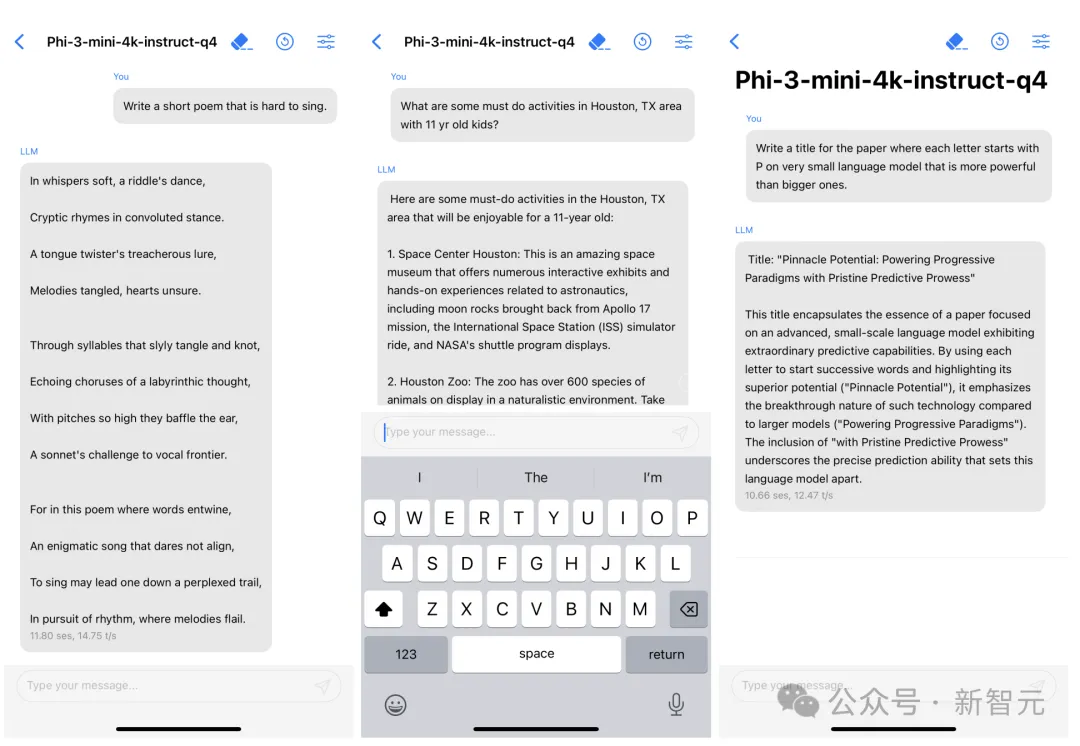

The best-known example is Phi-3, a small model that can fit on a mobile phone; even its smallest, 3.8B-parameter version rivals GPT-3.5 in performance.

Quantized to 4 bits, Phi-3-mini takes up only about 1.8 GB of memory and can generate 12 tokens per second on an iPhone 14.
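The 1.8 GB figure follows from simple arithmetic: at 4 bits, each weight takes half a byte, so 3.8 billion weights need about 1.9 billion bytes, or roughly 1.77 GiB. The sketch below is a back-of-the-envelope estimate only; the helper name `weight_memory_gib` is hypothetical, and it deliberately ignores activations, the KV cache, and per-block quantization metadata such as scales, which add some overhead in practice.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Rough weight-only memory estimate in GiB (hypothetical helper).

    Assumes every parameter is stored at the same bit width and ignores
    activations, KV cache, and quantization scales/zero-points.
    """
    total_bytes = num_params * bits_per_weight / 8  # bits -> bytes
    return total_bytes / 2**30                      # bytes -> GiB


phi3_mini_params = 3.8e9  # smallest Phi-3 variant, as cited in the text

print(f"4-bit:  {weight_memory_gib(phi3_mini_params, 4):.2f} GiB")
print(f"16-bit: {weight_memory_gib(phi3_mini_params, 16):.2f} GiB")
```

Running it prints about 1.77 GiB for 4-bit weights versus about 7.08 GiB at 16 bits, a fourfold saving that helps explain why quantization matters for running the model on a phone.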

When users online asked, "Wouldn't it be better to train AI at a lower cost?", Kevin Scott replied:

This is not an either/or relationship. In many AI applications, we use a combination of large frontier models and smaller, more targeted models. We've done a lot of work to make sure SLMs (small language models) work well both on device and in the cloud. We have built up a lot of experience training SLMs, and we have even open-sourced some of this work for others to study and use. I think this combination of big and small will continue for the foreseeable future.

In other words, Microsoft needs both low-cost SLMs that can be built into applications and run on mobile devices, and larger, more advanced AI models.

Microsoft currently calls itself a "Copilot company." Its AI-powered Copilot chatbot can handle tasks such as writing emails and quickly summarizing documents.

So where will the next opportunities come from?

Pursuing both large and small models reflects Microsoft's willingness to explore new paths in AI and its appetite for innovation.

That said, developing MAI-1 in-house does not mean Microsoft will abandon OpenAI.

In a post this morning, Chief Technology Officer Kevin Scott began by affirming the solid five-year "friendship" between Microsoft and OpenAI:

We have been building large-scale supercomputers for our partner OpenAI to train frontier AI models. Both companies then apply these models to their own products and services so that more people benefit.

Moreover, each new generation of supercomputer will be more powerful than the previous generation, so each cutting-edge model trained by OpenAI will be more advanced than the last one.

We will keep going down this path, building ever more powerful supercomputers so that OpenAI can train industry-leading models. The impact of our collaboration will only keep growing.

Earlier, foreign media reported that Microsoft and OpenAI are jointly building an AI supercomputer called "Stargate", at a cost of up to US$115 billion.

The supercomputer is reportedly set to launch as early as 2028, with further expansion before 2030.

Before that, Microsoft engineers told entrepreneur Kyle Corbitt that Microsoft was racing to build a cluster of 100,000 H100s for OpenAI to train GPT-6.

Various signs indicate that the cooperation between Microsoft and OpenAI will only become stronger.

Scott also said: "Beyond our partnership with OpenAI, Microsoft has for many years had Microsoft Research (MSR) and various product teams developing AI models."

AI models are now part of almost all of Microsoft's products, services, and operations, and teams sometimes need to do custom work, whether training a model from scratch or fine-tuning an existing one.

There will be more of this in the future.

Some of these models have names like Turing and MAI. Some, like Phi, we even open-source.

I know this isn't as dramatic as some might like, but it is the reality. And for us geeks, it is a very exciting reality, given how hard all of this is in practice.

Besides the MAI and Phi series, Microsoft launched an internal project codenamed "Turing" in 2017, with the aim of building one large model and applying it across all of its product lines.

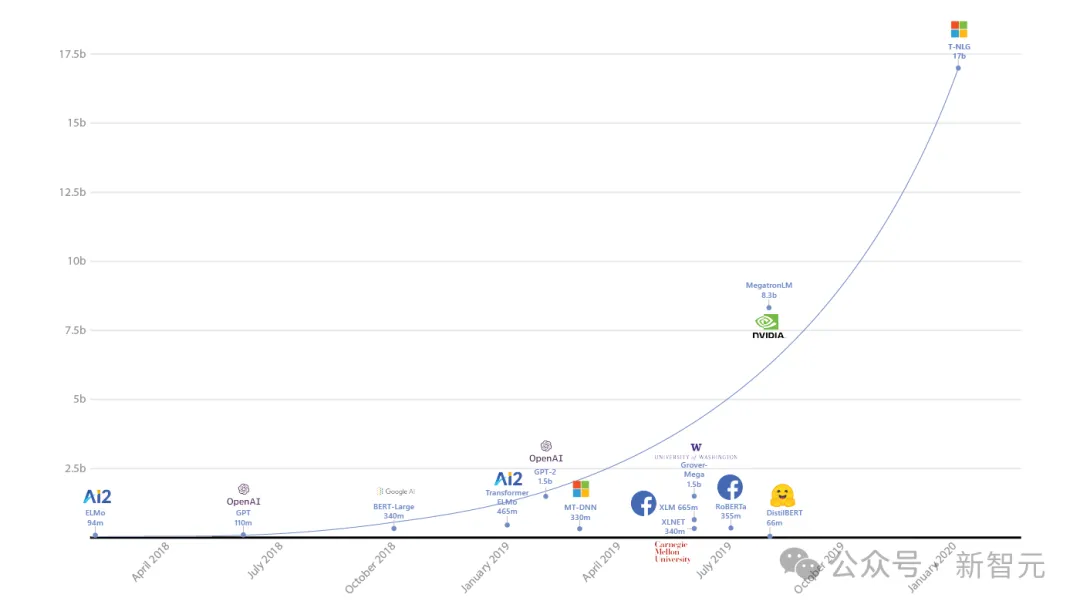

After three years of research and development, the team released the 17-billion-parameter T-NLG model in 2020, at the time the largest LLM ever built by parameter count.

In 2021, Microsoft teamed up with NVIDIA to release the 530-billion-parameter Megatron-Turing NLG (MT-NLG), which demonstrated "unparalleled" accuracy across a wide range of natural language tasks.

The same year, Microsoft first released Turing Bletchley, a vision-language model.

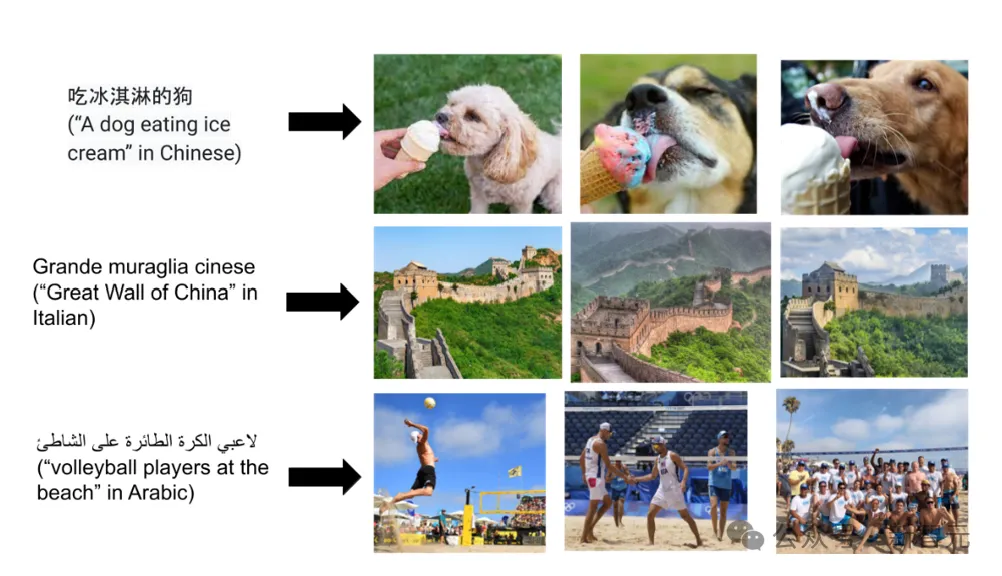

In August last year, this multimodal model reached version 3 (V3) and has since been integrated into products such as Bing to improve image search.

Microsoft also released two versions of its Turing Universal Language Representation model, T-ULRv5 and T-ULRv6, in 2021 and 2022.

Turing models are currently used in features such as SmartFind in Word and Question Matching in Xbox.

The team also developed Turing Image Super-Resolution (T-ISR), an image super-resolution model now used in Bing Maps to improve the quality of aerial imagery for users worldwide.

How the new MAI-1 model will be used has not yet been decided and will depend on its performance.

More details about MAI-1 may be revealed for the first time at the Microsoft Build developer conference, held May 21 to 23.

For now, all that remains is to wait for MAI-1's release.
