#This site publishes columns with academic and technical content. In recent years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com.

Explore a new realm of video understanding, the Mamba model leads a new trend in computer vision research! The limitations of traditional architectures have been broken. The state space model Mamba has brought revolutionary changes to the field of video understanding with its unique advantages in long sequence processing. A research team from Nanjing University, Shanghai Artificial Intelligence Laboratory, Fudan University, and Zhejiang University released a groundbreaking work. They take a comprehensive look at Mamba's multiple roles in video modeling, propose the Video Mamba Suite for 14 models/modules, and conduct an in-depth evaluation on 12 video understanding tasks. The results are exciting: Mamba shows strong potential in both video-specific and video-verbal tasks, achieving an ideal balance of efficiency and performance. This is not only a technological leap, but also a strong impetus for future video understanding research.

- Paper title: Video Mamba Suite: State Space Model as a Versatile Alternative for Video Understanding

- Paper link: https://arxiv.org/abs/2403.09626

- Code link: https://github.com/OpenGVLab/video-mamba-suite

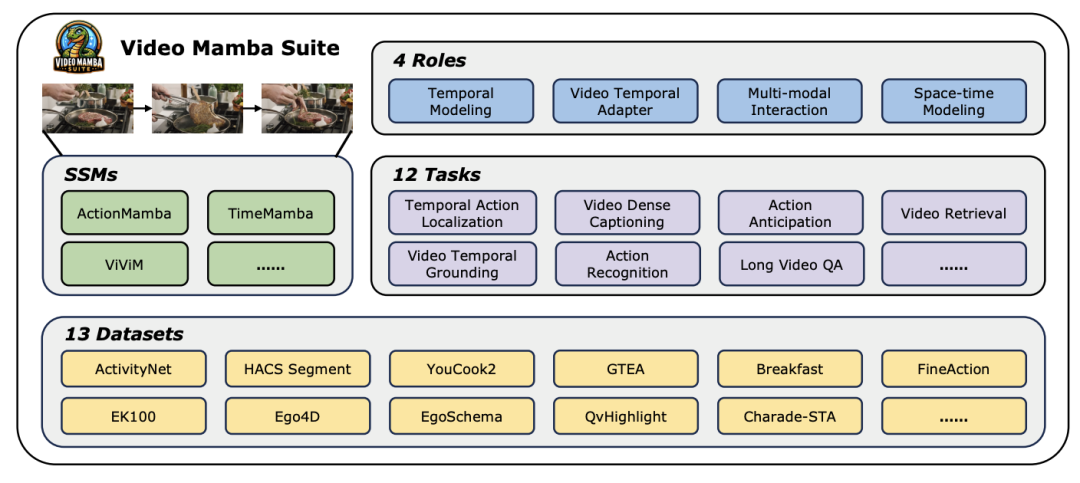

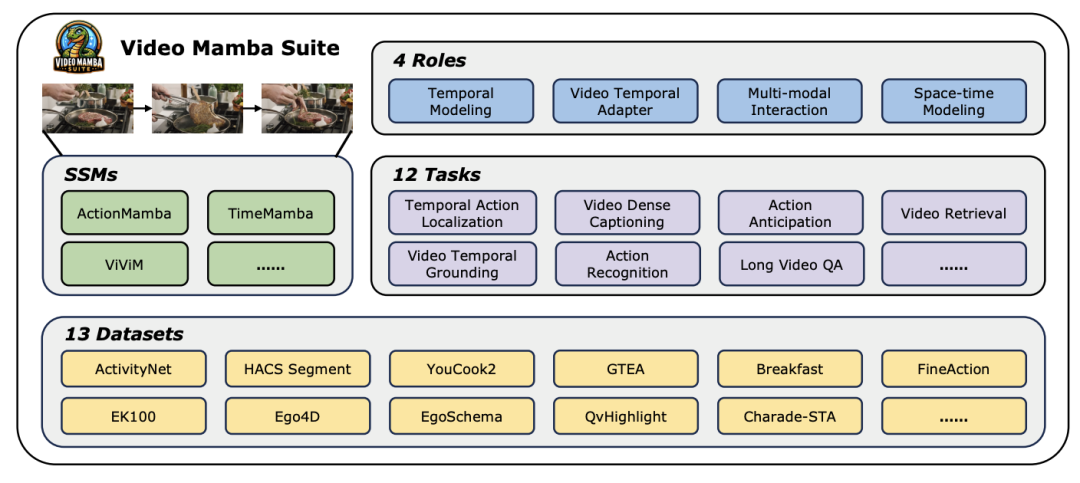

#In today’s rapidly developing field of computer vision, video understanding technology has become one of the key driving forces for industry progress. Many researchers are committed to exploring and optimizing various deep learning architectures in order to achieve deeper analysis of video content. From the early recurrent neural networks (RNN) and three-dimensional convolutional neural networks (3D CNN) to the currently highly anticipated Transformer model, each technological leap has greatly broadened our understanding and application of video data. In particular, the Transformer model has excellent performance in many fields of video understanding - including but not limited to target detection, image segmentation, and multi-modal question answering. ——Remarkable achievements have been made. However, in the face of the inherent ultra-long sequence characteristics of video data, the Transformer model also exposes its inherent limitations: due to its quadratic increase in computational complexity, it becomes extremely difficult to directly model ultra-long video sequences. In this context, the state space model architecture - represented by Mamba - emerged as the times require. With its advantage of linear computational complexity, it shows long processing times. The powerful potential of sequence data provides the possibility of replacing the Transformer model. Despite this, there are still some limitations in the current application of state space model architecture in the field of video understanding: first, it mainly focuses on global video understanding tasks, such as classification and retrieval; second, it mainly explores direct spatiotemporal modeling methods. However, the exploration of more diverse modeling methods is still insufficient. To overcome these limitations and fully evaluate the potential of the Mamba model in the field of video understanding, the research team carefully built the video-mamba-suite (video Mamba suite). This suite aims to complement existing research, exploring Mamba's diverse roles and potential benefits in video understanding through a series of in-depth experiments and analyses. The research team divided the application of the Mamba model into four different roles, and accordingly built a video Mamba suite containing 14 models/modules. After comprehensive evaluation on 12 video understanding tasks, the experimental results not only reveal Mamba's great potential in processing video and video-language tasks, but also demonstrate its excellent balance between efficiency and performance. The authors look forward to this work providing reference resources and insights for future research in the field of video understanding.

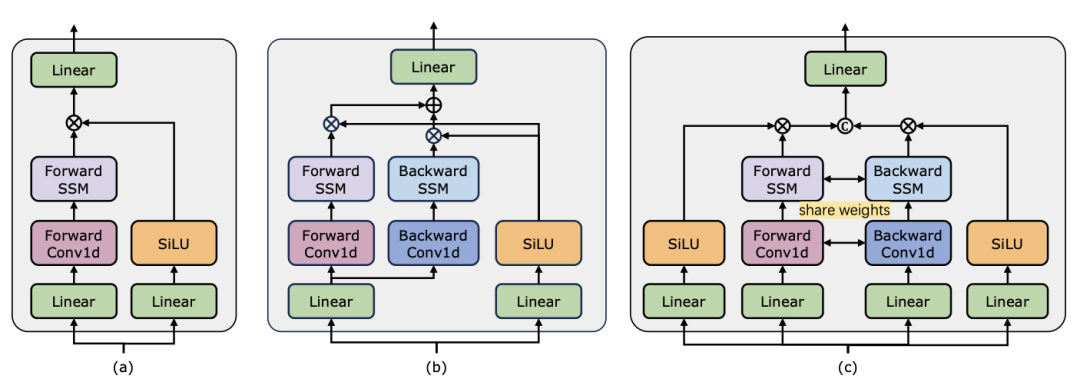

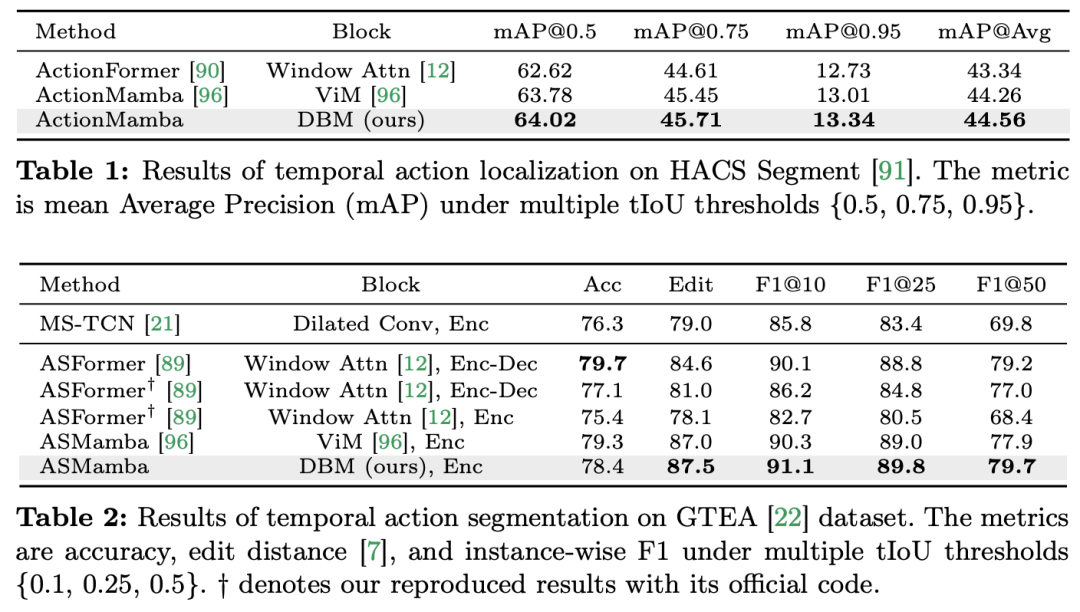

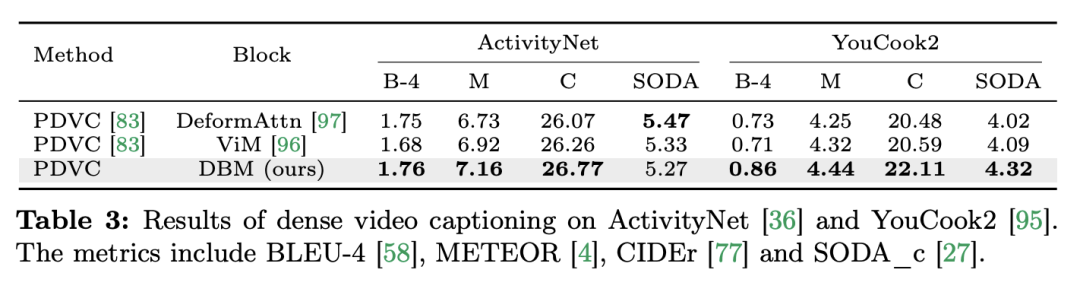

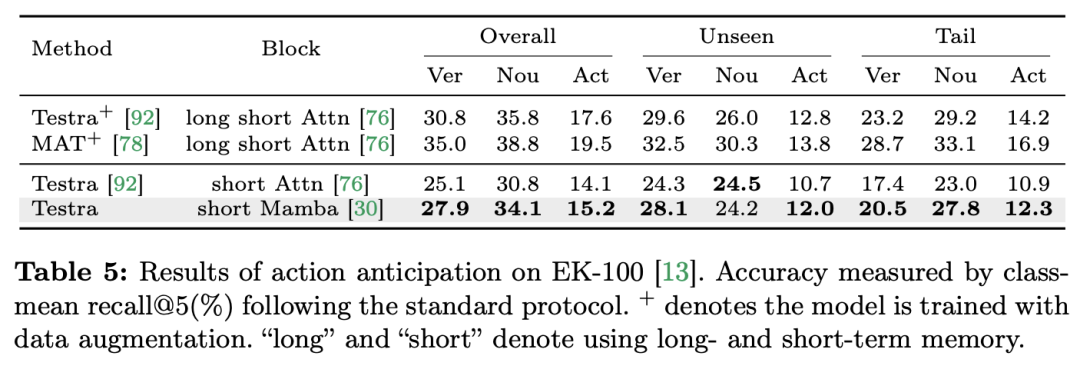

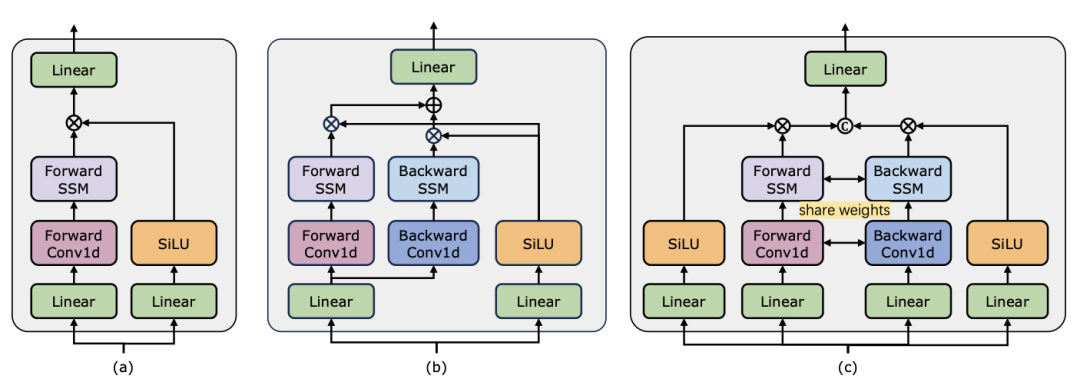

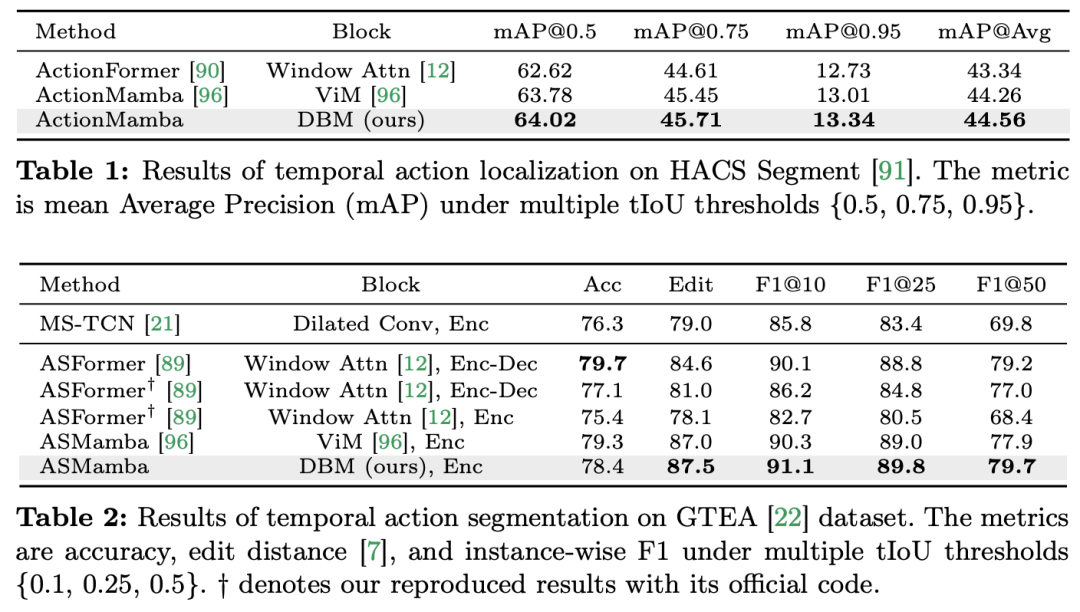

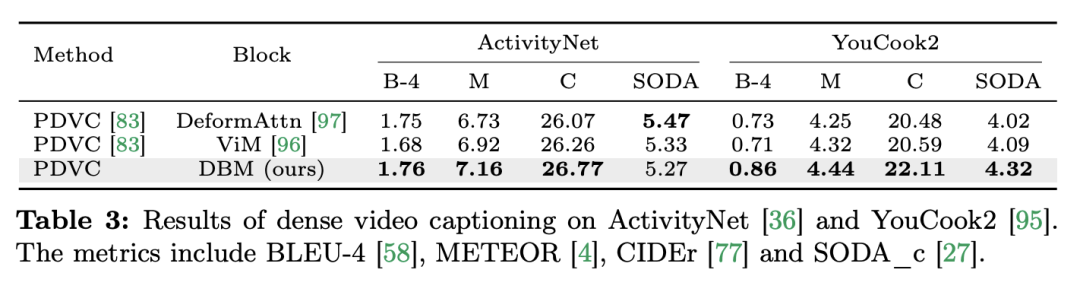

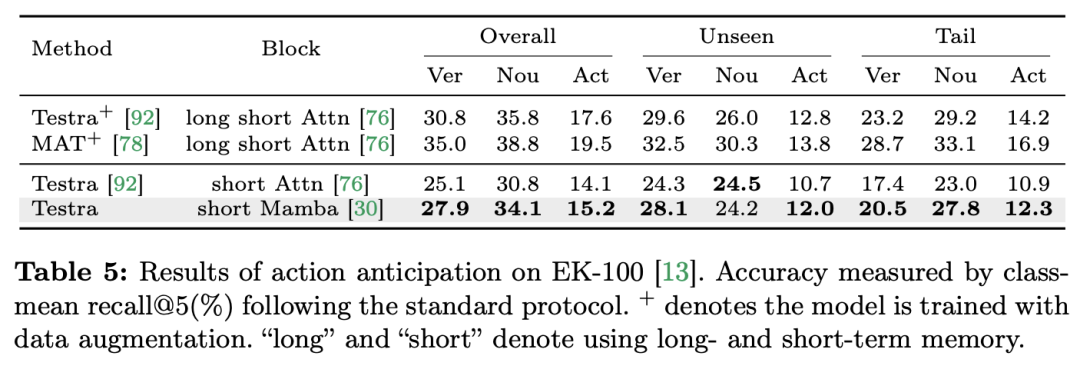

Video understanding is a basic issue in computer vision research. Its core is to capture the time and space in the video. Dynamics, used to identify and infer the nature of activities and their evolution. Currently, architecture exploration for video understanding is mainly divided into three directions. First, frame-based feature encoding methods perform temporal dependence modeling through recurrent networks (such as GRU and LSTM), but this segmented spatiotemporal modeling approach is difficult to capture Joint spatiotemporal information. Secondly, the use of three-dimensional convolution kernels enables simultaneous consideration of spatial and temporal correlations in convolutional neural networks. With the great success of Transformer models in the language and image fields, the video Transformer model has also made significant progress in the field of video understanding, showing performance beyond RNNs and 3D-CNNs. Ability. Video Transformer processes the time or spatiotemporal information in the video in a unified manner by encapsulating the video in a series of tokens and using the attention mechanism to implement global context interaction and data-dependent dynamic calculations. #However, due to the limited computational efficiency of Video Transformer when processing long videos, some variant models have emerged that strike a balance between speed and performance. Recently, state space models (SSMs) have demonstrated their advantages in the field of natural language processing (NLP). Modern SSMs exhibit strong representational capabilities in long sequence modeling while maintaining linear time complexity. This is because their selection mechanism eliminates the need to store the complete context. The Mamba model, in particular, incorporates time-varying parameters into SSM and proposes a hardware-aware algorithm for efficient training and inference. Mamba's excellent scaling performance shows that it can be a promising alternative to Transformer. #At the same time, Mamba’s high performance and efficiency make it well-suited for video understanding tasks. Although there have been some initial attempts to explore the application of Mamba in image/video modeling, its effectiveness in video understanding is still unclear. The lack of comprehensive research on Mamba’s potential in video understanding limits further exploration of its capabilities in diverse video-related tasks. In response to the above issues, the research team explored the potential of Mamba in the field of video understanding. The goal of their research is to evaluate whether Mamba can be a viable alternative to Transformers in this field. To do this, they first addressed the question of how to think about Mamba's different roles in understanding video. Based on this, they further studied which tasks Mamba performed better. The paper divides Mamba's role in video modeling into the following four categories: 1) Timing model, 2) Timing module, 3) Multi-modal interaction network, 4 ) space-time model. For each role, the research team studied its video modeling capabilities on different video understanding tasks. To fairly pit Manba against Transformer, the research team carefully selected models for comparison based on standard or modified Transformer architectures. Based on this, they obtained a Video Mamba Suite containing 14 models/modules suitable for 12 video understanding tasks. The research team hopes that the Video Mamba Suite can become a basic resource for exploring SSM-based video understanding models in the future. Mamba as a video timing model Tasks and Data: The research team evaluated Mamba’s performance on five video temporal tasks: Temporal Action Localization (HACS Segment), Temporal action segmentation (GTEA), dense video subtitles (ActivityNet, YouCook), video paragraph subtitles (ActivityNet, YouCook) and action prediction (Epic-Kitchen-100).  Baseline and Challenger: The research team selected the Transformer-based model as the baseline for each task. Specifically, these baseline models include ActionFormer, ASFormer, Testra, and PDVC. In order to build a Mamba challenger, they replaced the Transformer module in the baseline model with a Mamba-based module, including three modules as shown above, the original Mamba (a), ViM (b), and the DBM (c) originally designed by the research team ) module. It is worth noting that the paper compares the performance of the baseline model with the original Mamba module in an action prediction task involving causal inference. Results and Analysis: The paper shows the comparison results of different models on four tasks. Overall, although some Transformer-based models have incorporated attention variants to improve performance. The table below shows the superior performance of the Mamba series compared to the existing Transformer series methods.

Baseline and Challenger: The research team selected the Transformer-based model as the baseline for each task. Specifically, these baseline models include ActionFormer, ASFormer, Testra, and PDVC. In order to build a Mamba challenger, they replaced the Transformer module in the baseline model with a Mamba-based module, including three modules as shown above, the original Mamba (a), ViM (b), and the DBM (c) originally designed by the research team ) module. It is worth noting that the paper compares the performance of the baseline model with the original Mamba module in an action prediction task involving causal inference. Results and Analysis: The paper shows the comparison results of different models on four tasks. Overall, although some Transformer-based models have incorporated attention variants to improve performance. The table below shows the superior performance of the Mamba series compared to the existing Transformer series methods.

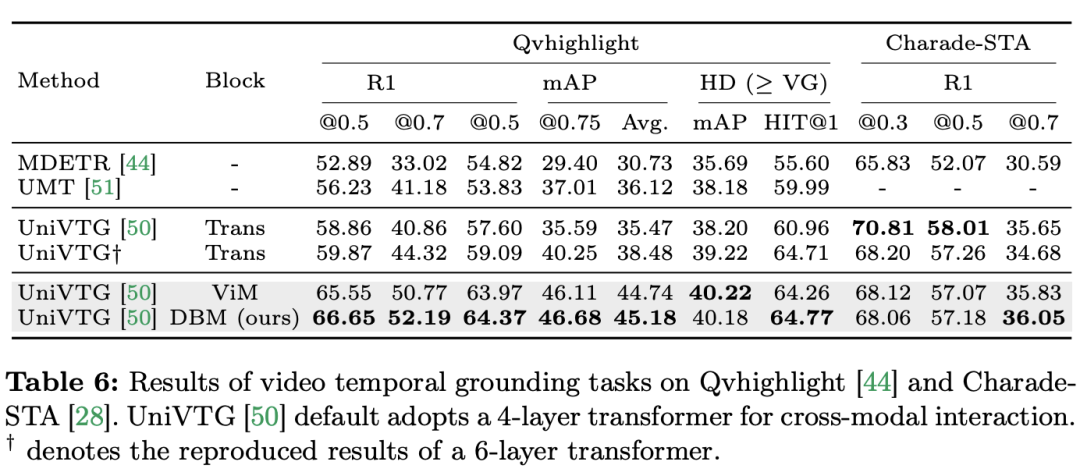

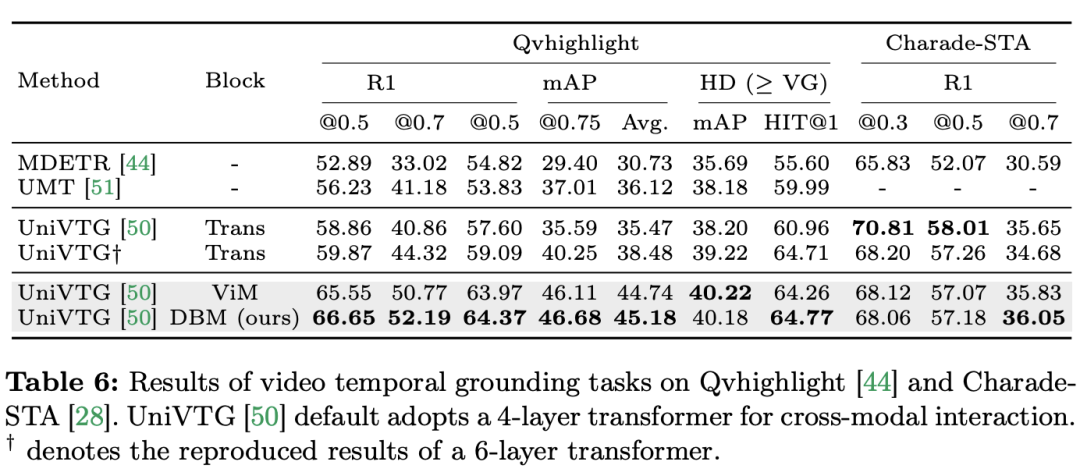

##Mamba for multi-modal interactionThe research team not only focused on single-modal tasks, but also evaluated Mamba's performance in cross-modal interaction tasks. The paper uses the video temporal localization (VTG) task to evaluate Mamba's performance. The datasets covered include QvHighlight and Charade-STA.

Tasks and Data: The research team evaluated Mamba’s performance on five video temporal tasks: Temporal Action Localization (HACS Segment), Temporal action segmentation (GTEA), dense video subtitles (ActivityNet, YouCook), video paragraph subtitles (ActivityNet, YouCook) and action prediction (Epic-Kitchen-100). Baseline and Challenger: The research team used UniVTG to build a Mamba-based VTG model. UniVTG adopts Transformer as a multi-modal interaction network. Given video features and text features, they first add learnable location embeddings and modality type embeddings for each modality to preserve location and modality information. The text and video tokens are then concatenated to form a joint input that is further fed into the multi-modal Transformer encoder. Finally, the text-augmented video features are extracted and fed into the prediction head. To create a cross-modal Mamba competitor, the research team chose to stack bidirectional Mamba blocks to form a multi-modal Mamda encoder to replace the Transformer baseline. Results and Analysis: This paper tested the performance of multiple models through QvHighlight. Mamba has an average mAP of 44.74, which is a significant improvement compared to Transformer. On Charade-STA, the Mamba-based method shows similar competitiveness to Transformer. This demonstrates that Mamba has the potential to effectively integrate multiple modalities.

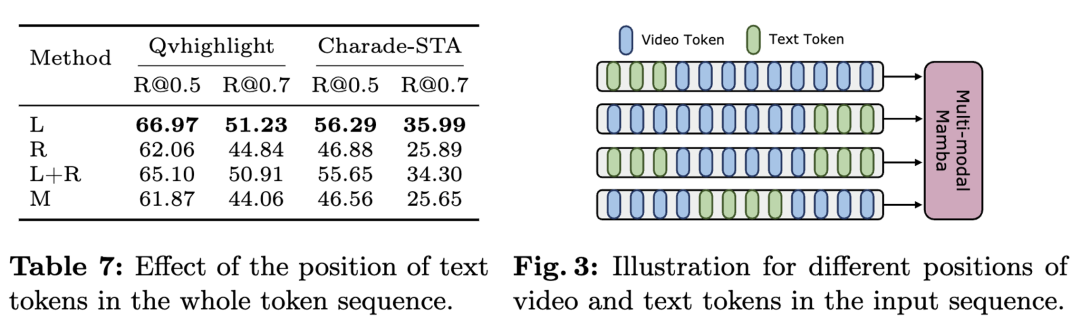

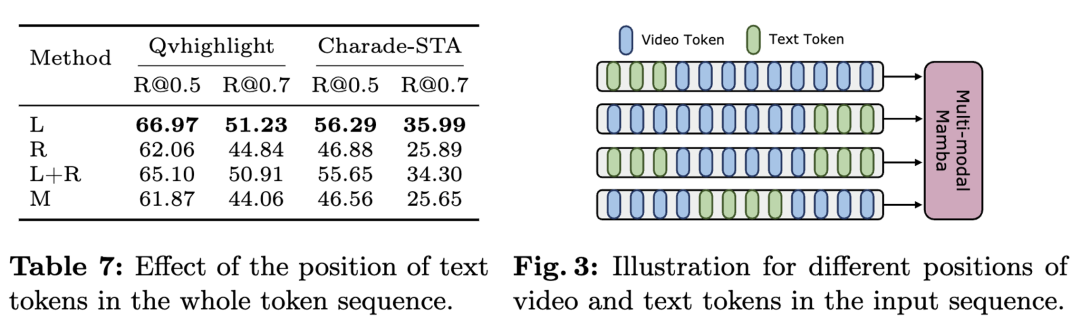

Considering that Mamba is a model based on linear scanning, and Transformer is based on global mark interaction, the research team intuitively believes that the position of text in the mark sequence may affect multimodality Aggregation effect. To investigate this, they include different text-visual fusion methods in the table and show four different mark arrangements in the figure. The conclusion is that the best results are obtained when textual conditions are fused to the left of visual features. QvHighlight has less impact on this fusion, while Charade-STA is particularly sensitive to the position of the text, which may be attributed to the characteristics of the dataset.

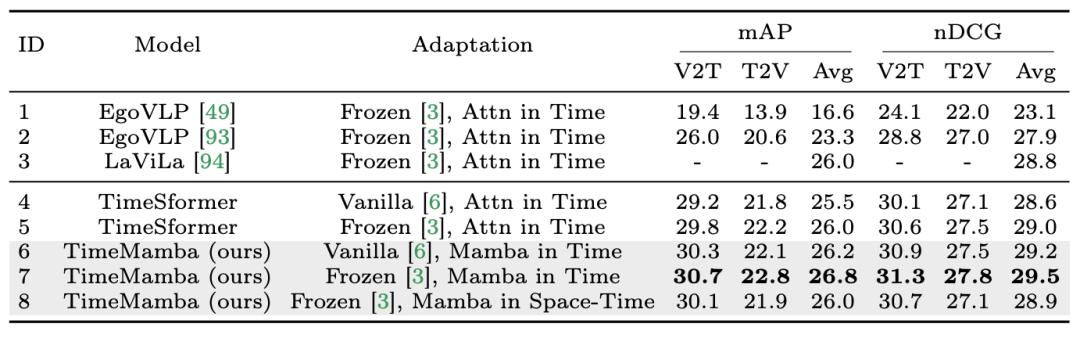

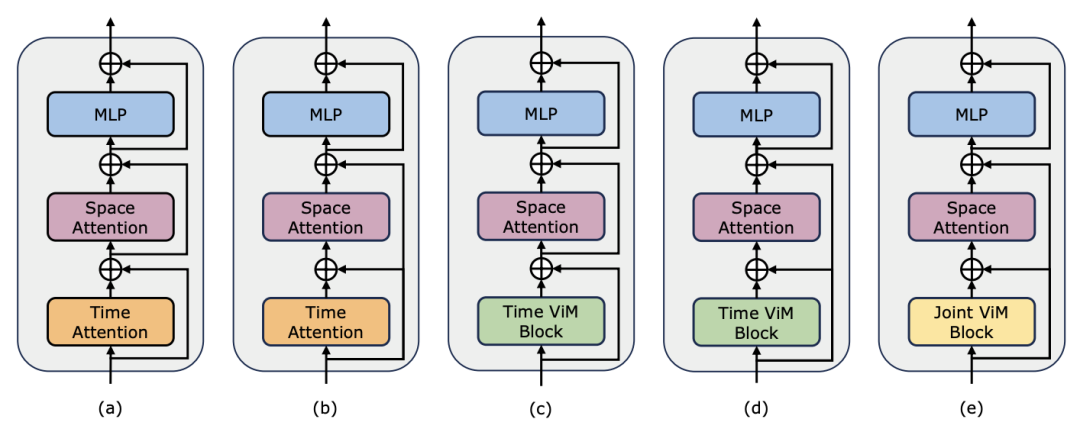

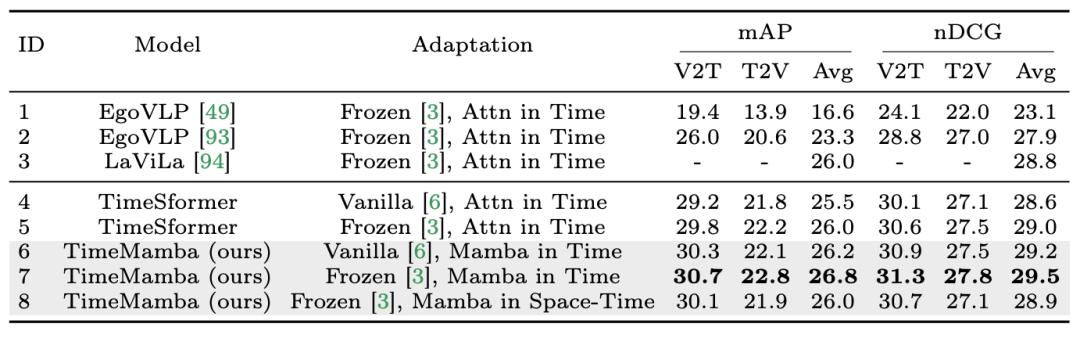

Mamba as a Video Timing AdapterIn addition to evaluating Mamba’s performance in post-timing modeling, the research team also examined its performance as a Availability of video time adapter. The Two Towers model is pre-trained by performing video-text contrastive learning on egocentric data, which contains 4 million video clips with fine-grained narration. Tasks and Data: The research team evaluated Mamba’s performance on five video temporal tasks, including: Temporal Action Localization (HACS) Segment), Temporal Action Segmentation (GTEA), Dense Video Captioning (ActivityNet, YouCook), Video Segment Captioning (ActivityNet, YouCook) and Action Prediction (Epic-Kitchen-100). Baseline and Challenger: TimeSformer adopts separate spatiotemporal attention blocks to separately model spatial and temporal relationships in videos. To this end, the research team introduced a bidirectional Mamba block as a timing adapter to replace the original timing self-attention and improve separate spatiotemporal interactions. For fair comparison, the spatial attention layer in TimeSformer remains unchanged. Here, the research team used ViM blocks as timing modules and called the resulting model TimeMamba. It is worth noting that the standard ViM block has more parameters (slightly more than

Mamba as a Video Timing AdapterIn addition to evaluating Mamba’s performance in post-timing modeling, the research team also examined its performance as a Availability of video time adapter. The Two Towers model is pre-trained by performing video-text contrastive learning on egocentric data, which contains 4 million video clips with fine-grained narration. Tasks and Data: The research team evaluated Mamba’s performance on five video temporal tasks, including: Temporal Action Localization (HACS) Segment), Temporal Action Segmentation (GTEA), Dense Video Captioning (ActivityNet, YouCook), Video Segment Captioning (ActivityNet, YouCook) and Action Prediction (Epic-Kitchen-100). Baseline and Challenger: TimeSformer adopts separate spatiotemporal attention blocks to separately model spatial and temporal relationships in videos. To this end, the research team introduced a bidirectional Mamba block as a timing adapter to replace the original timing self-attention and improve separate spatiotemporal interactions. For fair comparison, the spatial attention layer in TimeSformer remains unchanged. Here, the research team used ViM blocks as timing modules and called the resulting model TimeMamba. It is worth noting that the standard ViM block has more parameters (slightly more than  ) than the self-attention block, where C is the feature dimension. Therefore, the expansion ratio E of the ViM block is set to 1 in the paper, reducing its parameter size to

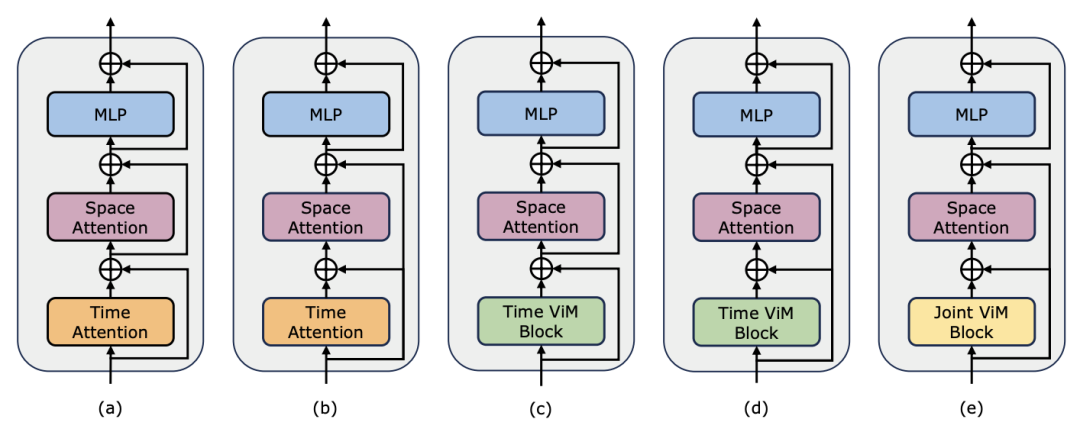

) than the self-attention block, where C is the feature dimension. Therefore, the expansion ratio E of the ViM block is set to 1 in the paper, reducing its parameter size to  for a fair comparison. In addition to the ordinary residual connection form used by TimeSformer, the research team also explored Frozen style adaptation. The following are 5 adapter structures:

for a fair comparison. In addition to the ordinary residual connection form used by TimeSformer, the research team also explored Frozen style adaptation. The following are 5 adapter structures:

1. Zero-sample multi-instance retrieval. The research team first evaluated different models with separate spatiotemporal interactions in the table and found that the Frozen-style residual connections reproduced in the paper were consistent with those of LaViLa. When comparing the original and Frozen styles, it is not difficult to observe that the Frozen style always produces better results. Furthermore, under the same adaptation method, the ViM-based temporal module always outperforms the attention-based temporal module. It is worth noting that the ViM temporal block used in the paper has fewer parameters than the temporal self-attention block, highlighting the better parameters of Mamba selective scanning Utilization and information extraction capabilities. In addition, the research team further verified the spatiotemporal ViM block. The spatiotemporal ViM block replaces the temporal ViM block with joint spatiotemporal modeling over the entire video sequence. Surprisingly, despite the introduction of global modeling, spatiotemporal ViM blocks actually resulted in performance degradation. To this end, the research team speculates that scan-based spatio-temporal may destroy the pre-trained spatial attention block to produce spatial feature distribution. The following are the experimental results:

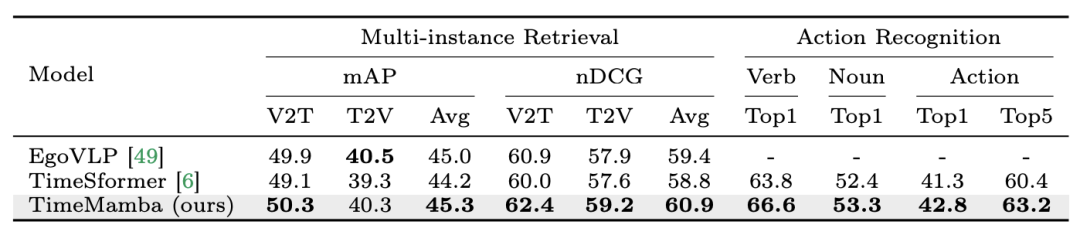

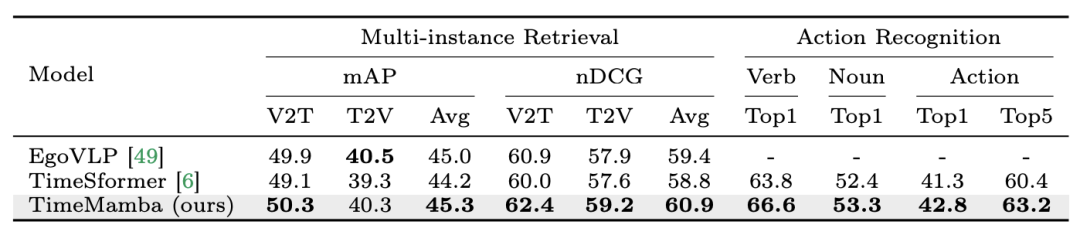

#2. Fine-tuning multi-instance retrieval and action recognition. The research team continues to use 16-frame fine-tuned pre-trained models on the Epic-Kitchens-100 dataset for multi-instance retrieval and action recognition. It can be observed from the experimental results that TimeMamba significantly outperforms TimeSformer in the context of verb recognition, exceeding 2.8 percentage points, which shows that TimeMamba can effectively model fine-grained timing.

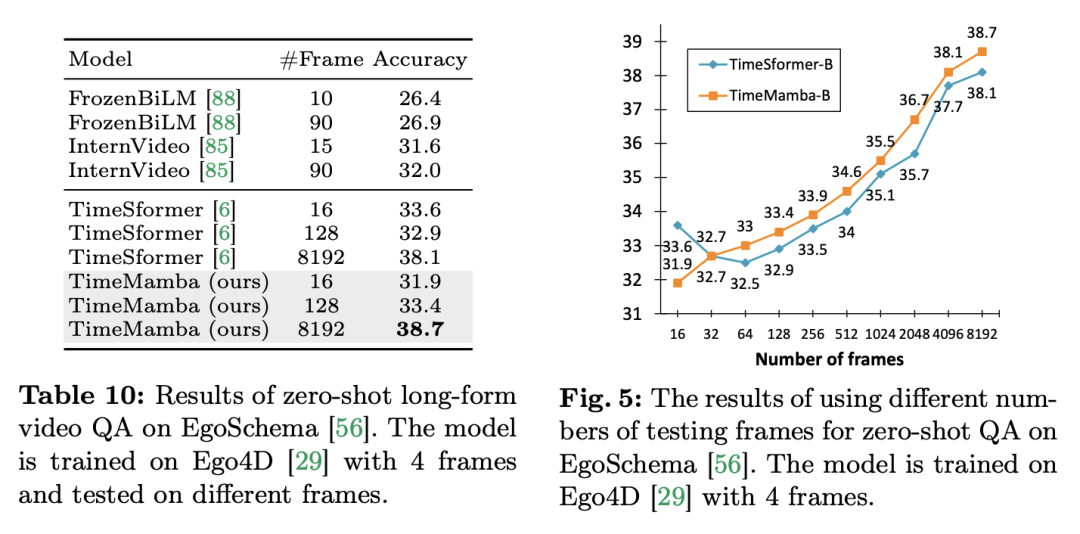

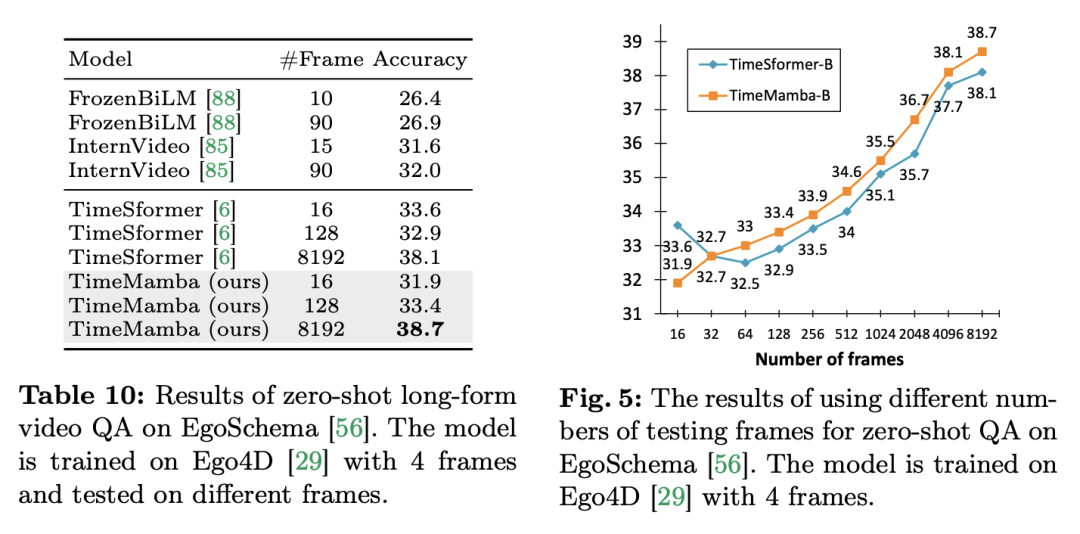

#3. Zero-sample long video Q&A. The research team further evaluated the model's long video Q&A performance on the EgoSchema dataset. The following are the experimental results:

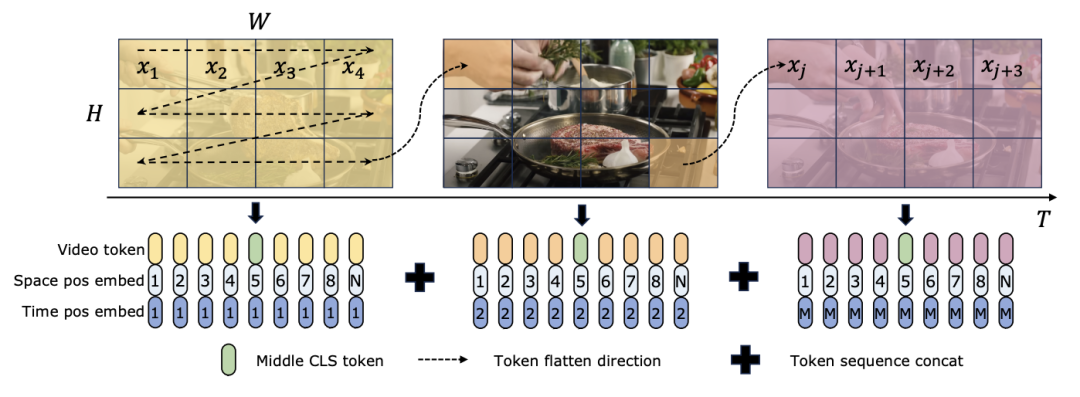

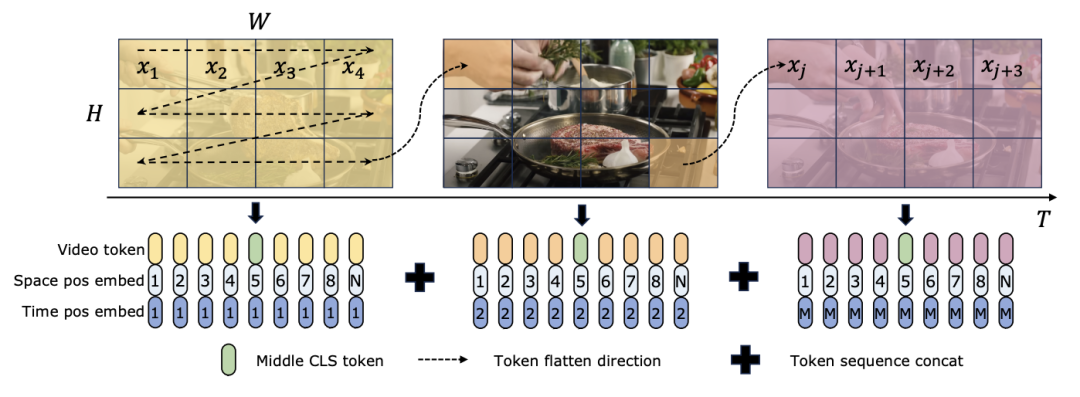

Both TimeSformer and TimeMamba, after pre-training on Ego4D, exceed the performance of large-scale pre-trained models (such as InternVideo). In addition, the research team continuously increased the number of test frames starting from the video at a fixed FPS to explore the impact of ViM blocks' long video temporal modeling capabilities. Although both models are pretrained with 4 frames, the performance of TimeMamba and TimeSformer steadily improves as the number of frames increases. Meanwhile, significant improvements can be observed when using 8192 frames. When the input frames exceed 32, TimeMamba generally benefits from more frames than TimeSformer, indicating the superiority of Temporal ViM blocks in temporal self-attention. Mamba for spatiotemporal modeling# #Tasks and Data: In addition, the paper also evaluates Mamba's ability in space-time modeling, specifically evaluating the model's performance in zero-shot multi-instance retrieval on the Epic-Kitchens-100 data set. Baselines and Competitors: ViViT and TimeSformer study the transformation of ViT with spatial attention into models with joint spatial-temporal attention. Based on this, the research team further expanded the spatial selective scanning of the ViM model to include spatiotemporal selective scanning. Name this extended model ViViM. The research team used the ViM model pretrained on ImageNet-1K for initialization. The ViM model contains a cls token that is inserted into the middle of the flat token sequence. The following figure shows how to convert a ViM model to ViViM. For a given input containing M frames, insert a cls token in the middle of the token sequence corresponding to each frame. In addition, the research team added temporal position embedding, initialized to zero for each frame. The flattened video sequence is then input into the ViViM model. The output of the model is obtained by calculating the average of cls tokens for each frame.

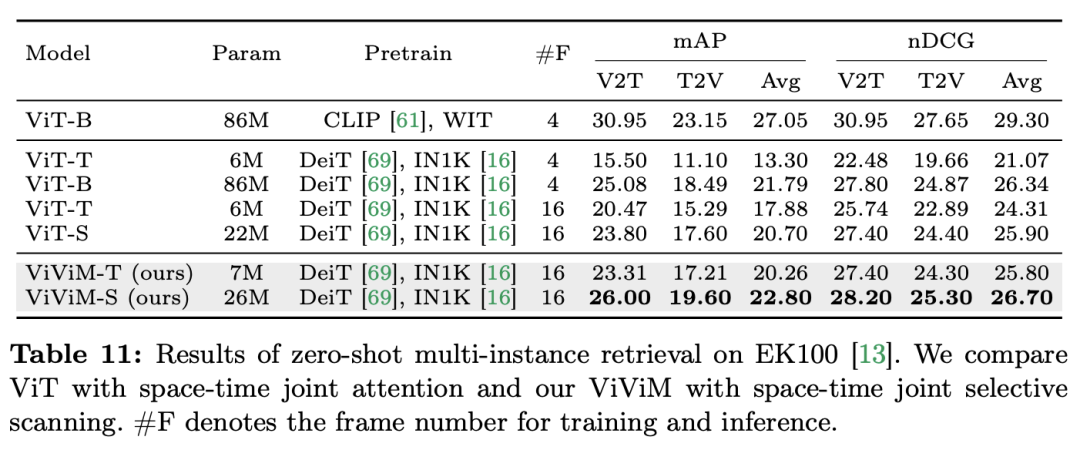

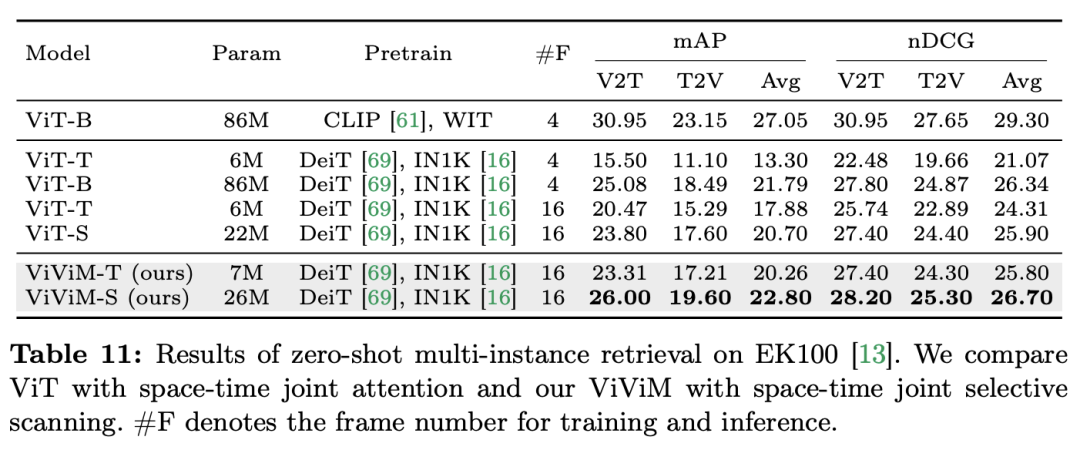

Results and analysis: The paper further studies the results of ViViM in zero-sample multi-instance retrieval. The experimental results are shown in the following table:

The results show the performance of different spatiotemporal models on zero-shot multi-instance retrieval. When comparing ViT and ViViM, both of which are pretrained on ImageNet-1K, it can be observed that ViViM outperforms ViT. Interestingly, although the performance gap between ViT-S and ViM-S on ImageNet-1K is small (79.8 vs 80.5), ViViM-S shows significant improvement on zero-shot multi-instance retrieval (2.1 mAP @Avg), which shows that ViViM is very effective in modeling long sequences, thus improving performance.

This paper comprehensively evaluates the performance of Mamba in the field of video understanding. Demonstrates the potential of Mamba as a viable alternative to traditional Transformers. Through the Video Mamba Suite, which consists of 14 models/modules for 12 video understanding tasks, the research team demonstrated Mamba's ability to efficiently handle complex spatiotemporal dynamics. Mamba not only delivers superior performance, but also achieves a better efficiency-performance balance. These findings not only highlight Mamba's suitability for video analysis tasks, but also open new avenues for its application in the field of computer vision. Future work can further explore Mamba's adaptability and extend its utility to more complex multimodal video understanding challenges.

The above is the detailed content of In 12 video understanding tasks, Mamba first defeated Transformer. For more information, please follow other related articles on the PHP Chinese website!

) than the self-attention block, where C is the feature dimension. Therefore, the expansion ratio E of the ViM block is set to 1 in the paper, reducing its parameter size to

) than the self-attention block, where C is the feature dimension. Therefore, the expansion ratio E of the ViM block is set to 1 in the paper, reducing its parameter size to  for a fair comparison. In addition to the ordinary residual connection form used by TimeSformer, the research team also explored Frozen style adaptation. The following are 5 adapter structures:

for a fair comparison. In addition to the ordinary residual connection form used by TimeSformer, the research team also explored Frozen style adaptation. The following are 5 adapter structures:

Build your own git server

Build your own git server

The difference between git and svn

The difference between git and svn

git undo submitted commit

git undo submitted commit

Commonly used permutation and combination formulas

Commonly used permutation and combination formulas

How to undo git commit error

How to undo git commit error

How to compare the file contents of two versions in git

How to compare the file contents of two versions in git

Introduction to laravel components

Introduction to laravel components

Virtual mobile phone number to receive verification code

Virtual mobile phone number to receive verification code