An open source model that can beat GPT-4 has appeared!

The latest results from the large model arena:

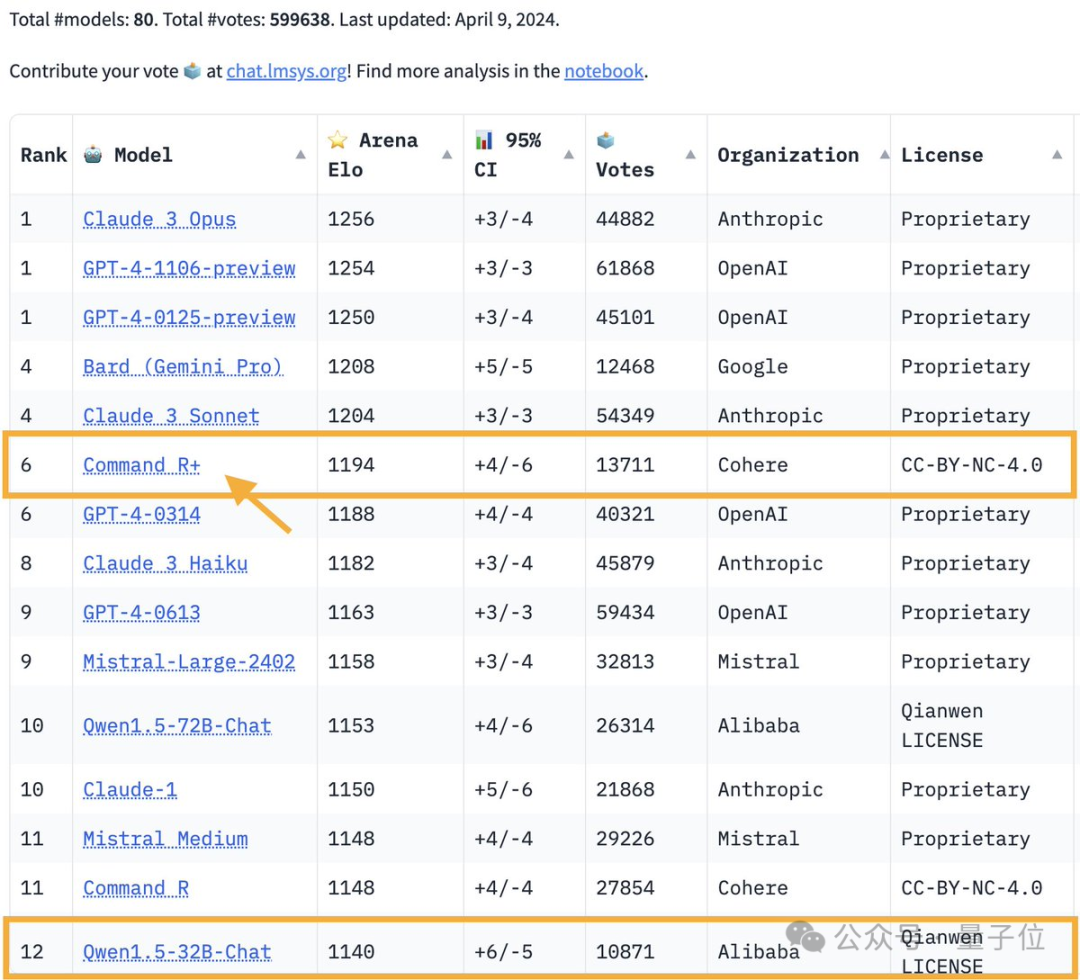

The 104-billion-parameter open source model Command R+ climbed to 6th place, tying with GPT-4-0314 and surpassing GPT-4-0613.

This is also the first open-weight model to beat GPT-4 in the large model arena.

The large model arena is one of the few benchmarks that Karpathy trusts.

Command R+ comes from AI unicorn Cohere. The co-founder and CEO of this large model startup is none other than Aidan Gomez, the youngest author of the Transformer paper (nicknamed the "wheat reaper").

As soon as these results came out, they set off another wave of heated discussion in the large model community.

The reason for the excitement is simple: teams have been competing fiercely over foundation models for a whole year, and yet the landscape is still shifting in 2024.

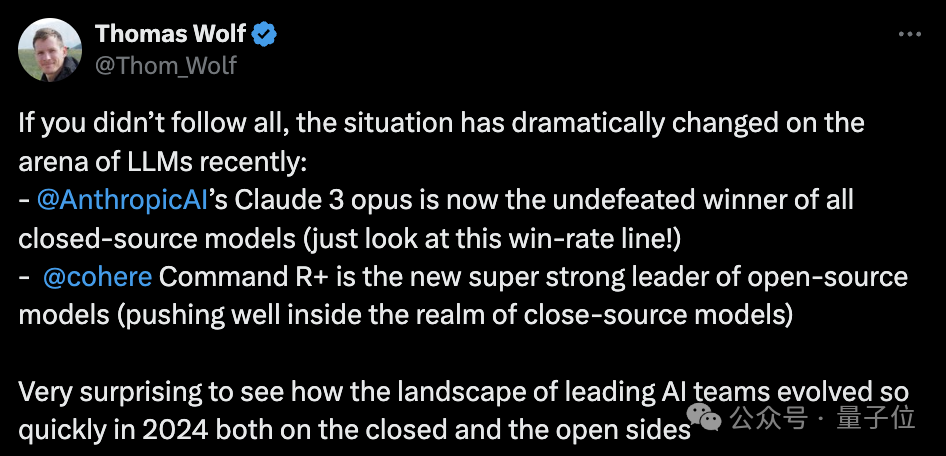

HuggingFace co-founder Thomas Wolf said:

The situation in the large model arena has changed dramatically recently:

Anthropic's Claude 3 Opus has taken the lead among closed source models.

Cohere's Command R+ has become the strongest of the open source models.

Unexpectedly, in 2024, AI teams are advancing this quickly on both the open source and closed source routes.

In addition, Cohere Machine Learning Director Nils Reimers also pointed out something worthy of attention:

The headline feature of Command R+ is its fully optimized built-in RAG (retrieval-augmented generation), yet plug-in capabilities such as RAG are not part of the large model arena's tests.

In Cohere's official positioning, Command R+ is a "RAG-optimized model."

In other words, this 104-billion-parameter model has been deeply optimized for retrieval-augmented generation in order to reduce hallucinations, which makes it better suited to enterprise-level workloads.
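To make the idea of retrieval-augmented generation concrete, here is a minimal sketch of the general pattern: pick the passages most relevant to a question, then ask the model to answer only from those passages. This is not Cohere's implementation or API. The function names `tokenize`, `retrieve` and `build_grounded_prompt` are hypothetical, and the word-overlap scoring is a toy stand-in for a real retriever.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, k=2):
    """Return the k documents sharing the most words with the query.

    Word overlap is a toy relevance score; a real system would use
    embeddings or a search index instead.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_grounded_prompt(query, documents, k=2):
    """Build a prompt that restricts the model to the retrieved sources."""
    sources = retrieve(query, documents, k)
    numbered = "\n".join(
        f"[{i}] {text}" for i, text in enumerate(sources, start=1)
    )
    return (
        "Answer using only the numbered sources and cite them.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {query}"
    )
```

The resulting prompt would then be sent to whichever model is in use. Grounding the answer in retrieved text, with citations, is what is meant to cut down on hallucinations.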

Like the previously launched Command R, Command R+ has a context window of 128k.

In addition, Command R+ has the following features:

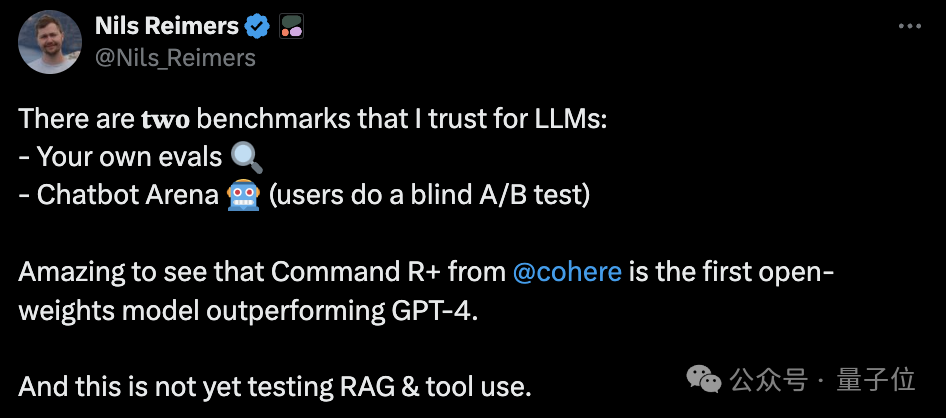

Judging from the test results, Command R+ has reached the level of GPT-4 Turbo in three dimensions: multilingual ability, RAG, and tool use.

In terms of input cost, however, Command R+ is priced at only 1/3 of GPT-4 Turbo.

In terms of output cost, Command R+ is 1/2 of GPT-4 Turbo.
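How much cheaper a whole request is depends on its mix of input and output tokens. The sketch below works through that arithmetic using only the 1/3 and 1/2 ratios stated above. The function name `relative_cost` is hypothetical, and the baseline prices passed in are placeholder units chosen for illustration, not figures quoted from either vendor.

```python
from fractions import Fraction

# Price ratios relative to the baseline model, as stated in the article.
INPUT_RATIO = Fraction(1, 3)
OUTPUT_RATIO = Fraction(1, 2)


def relative_cost(input_tokens, output_tokens, base_in_price, base_out_price):
    """Return the cheaper model's cost as a fraction of the baseline cost.

    base_in_price and base_out_price are the baseline's per-token prices
    in any consistent unit (placeholders here, not real price quotes).
    """
    baseline = input_tokens * base_in_price + output_tokens * base_out_price
    if baseline == 0:
        raise ValueError("request must have a non-zero baseline cost")
    cheaper = (
        input_tokens * base_in_price * INPUT_RATIO
        + output_tokens * base_out_price * OUTPUT_RATIO
    )
    return cheaper / baseline


# Hypothetical request: 3000 input tokens, 1000 output tokens, with
# output priced at 3x input in the baseline (placeholder units).
print(relative_cost(3000, 1000, 1, 3))  # prints 5/12
```

An input-only request comes out at 1/3 of the baseline and an output-only request at 1/2, so any real workload falls between the two.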

This pricing has caught the attention of many netizens:

However, despite its eye-catching performance in human subjective evaluations such as the large model arena, some netizens voiced different opinions.

On HumanEval, Command R+'s coding ability does not even surpass GPT-3.5: it ranks 32nd and 33rd respectively in the two sets of tests.

The latest version of GPT-4 Turbo took first place with no suspense.

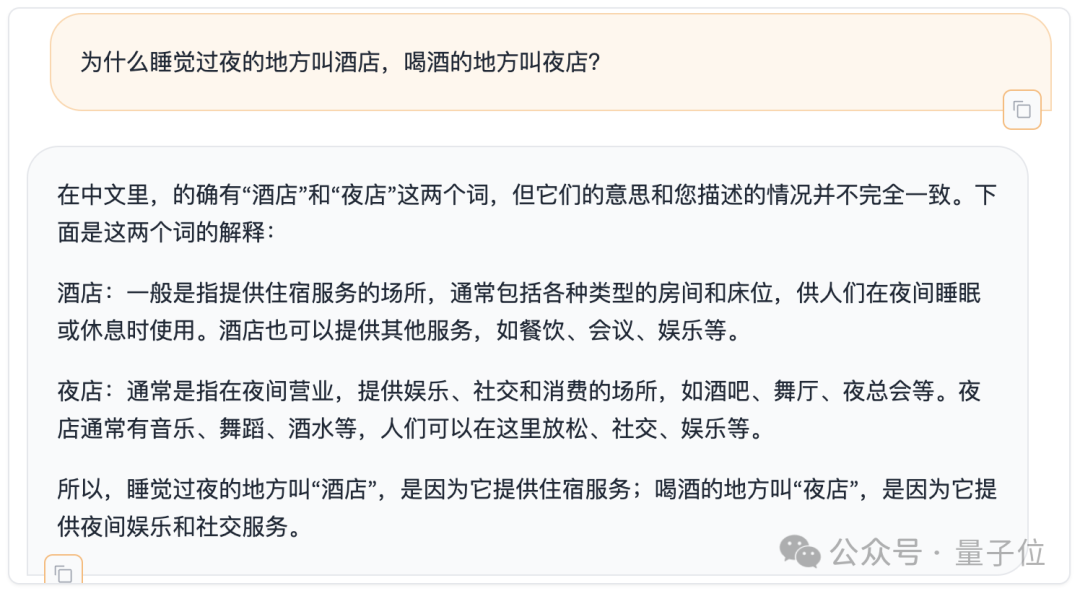

In addition, we briefly tested Command R+'s Chinese ability on the Ruozhiba benchmark, which has recently made its way into serious papers.

How would you rate it?

Note that the open weights of Command R+ are released for academic research only and are not free for commercial use.

Finally, a little more about the "wheat reaper."

Aidan Gomez, the youngest of the Transformer "Knights of the Round Table," was just an undergraduate when he joined the research team, albeit the kind of undergraduate who had joined Hinton's lab as a junior at the University of Toronto.

In 2018, Gomez was admitted to the University of Oxford and, like his co-authors on the paper, began studying for a PhD in computer science.

But in 2019, with the founding of Cohere, he finally chose to drop out of school and join the wave of AI entrepreneurship.

Cohere mainly provides large model solutions for enterprises, and its current valuation has reached US$2.2 billion.
