JS-Torch is a deep learning JavaScript library whose syntax is very similar to PyTorch. It contains a fully functional tensor object (can be used with tracked gradients), deep learning layers and functions, and an automatic differentiation engine. JS-Torch is suitable for deep learning research in JavaScript and provides many convenient tools and functions to accelerate deep learning development.

Picture

Picture

PyTorch is an open source deep learning framework developed and maintained by Meta's research team. It provides a rich set of tools and libraries for building and training neural network models. The design concept of PyTorch is simplicity, flexibility and ease of use. Its dynamic calculation graph feature makes model construction more intuitive and flexible, while also improving the efficiency of model construction and debugging. The dynamic calculation graph feature of PyTorch also makes its model construction more intuitive and easy to debug and optimize. In addition, PyTorch also has good scalability and operating efficiency, making it popular and applied in the field of deep learning.

You can install js-pytorch through npm or pnpm:

npm install js-pytorchpnpm add js-pytorch

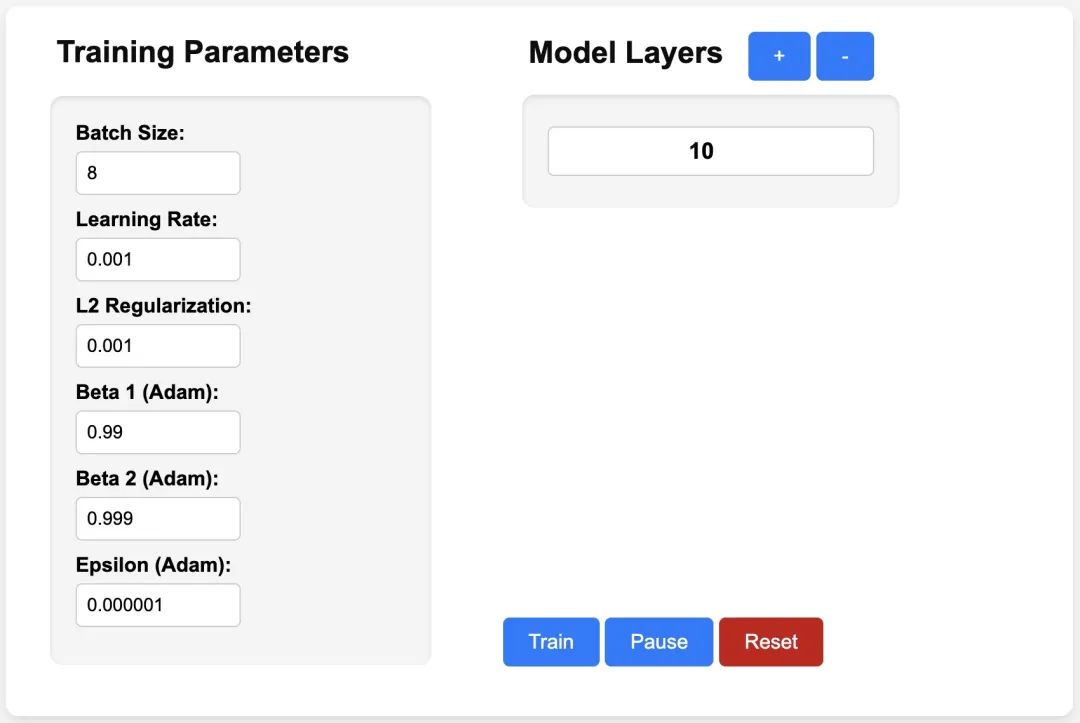

or experience the Demo[3] provided by js-pytorch online:

Picture

Picture

##https://eduardoleao052.github.io/js-torch/assets/demo/demo.htmlJS-Torch supported functions Currently, JS-Torch already supports tensor operations such as Add, Subtract, Multiply, and Divide, and also supports commonly used deep learning layers such as Linear, MultiHeadSelfAttention, ReLU, and LayerNorm. Tensor Operations

import { torch } from "js-pytorch";// Instantiate Tensors:let x = torch.randn([8, 4, 5]);let w = torch.randn([8, 5, 4], (requires_grad = true));let b = torch.tensor([0.2, 0.5, 0.1, 0.0], (requires_grad = true));// Make calculations:let out = torch.matmul(x, w);out = torch.add(out, b);// Compute gradients on whole graph:out.backward();// Get gradients from specific Tensors:console.log(w.grad);console.log(b.grad);

import { torch } from "js-pytorch";const nn = torch.nn;class Transformer extends nn.Module {constructor(vocab_size, hidden_size, n_timesteps, n_heads, p) {super();// Instantiate Transformer's Layers:this.embed = new nn.Embedding(vocab_size, hidden_size);this.pos_embed = new nn.PositionalEmbedding(n_timesteps, hidden_size);this.b1 = new nn.Block(hidden_size,hidden_size,n_heads,n_timesteps,(dropout_p = p));this.b2 = new nn.Block(hidden_size,hidden_size,n_heads,n_timesteps,(dropout_p = p));this.ln = new nn.LayerNorm(hidden_size);this.linear = new nn.Linear(hidden_size, vocab_size);}forward(x) {let z;z = torch.add(this.embed.forward(x), this.pos_embed.forward(x));z = this.b1.forward(z);z = this.b2.forward(z);z = this.ln.forward(z);z = this.linear.forward(z);return z;}}// Instantiate your custom nn.Module:const model = new Transformer(vocab_size,hidden_size,n_timesteps,n_heads,dropout_p);// Define loss function and optimizer:const loss_func = new nn.CrossEntropyLoss();const optimizer = new optim.Adam(model.parameters(), (lr = 5e-3), (reg = 0));// Instantiate sample input and output:let x = torch.randint(0, vocab_size, [batch_size, n_timesteps, 1]);let y = torch.randint(0, vocab_size, [batch_size, n_timesteps]);let loss;// Training Loop:for (let i = 0; i < 40; i++) {// Forward pass through the Transformer:let z = model.forward(x);// Get loss:loss = loss_func.forward(z, y);// Backpropagate the loss using torch.tensor's backward() method:loss.backward();// Update the weights:optimizer.step();// Reset the gradients to zero after each training step:optimizer.zero_grad();}

The above is the detailed content of The AI era of JS is here!. For more information, please follow other related articles on the PHP Chinese website!