The first AI software engineer, Devin, was officially unveiled, immediately sending shockwaves through the entire technology community.

Devin can not only solve coding tasks with ease but also complete the entire software development cycle independently, from project planning to deployment. Its capabilities include, but are not limited to, building websites, independently finding and fixing bugs, and training and fine-tuning AI models.

This "unbelievably powerful" software development ability has left many programmers in despair, asking: "Is the end of programmers really coming?"

Among the test results, Devin's performance on the SWE-Bench benchmark is particularly eye-catching.

SWE-Bench is a benchmark for evaluating AI software engineering capabilities, focusing on the ability of large models to resolve real GitHub issues.

Devin topped the list by independently resolving 13.86% of the issues, far ahead of GPT-4's 1.74% and leaving many large AI models well behind.

This powerful performance makes people wonder: "What role will AI play in future software development?"

Shanghai Artificial Intelligence Laboratory, together with researchers from ByteDance SE Lab and the SWE-Bench team, proposed a new benchmark, DevBench, which reveals for the first time how far large models can go in starting from a product requirements document (PRD) and completing the design, development, and testing of a complete project.

The evaluation exposed problems such as insufficient object-oriented programming ability, an inability to write more complex build scripts, and function call parameters that do not match.

Large language models still have some way to go before they can independently complete the development of a small or medium-sized software project. The DevBench paper has been published on the preprint platform arXiv, and the related code and data are open source on GitHub.

(Link at the end of the article)

What are the tasks of DevBench?

△The picture shows an overview of the DevBench framework

DevBench is built around five key tasks, each focusing on a key stage of the software development life cycle. The modular design allows each task to be tested and evaluated independently.

Software design: Starting from the product requirements document (PRD), the model creates UML diagrams and architectural designs that show the classes, attributes, relationships, and structural layout of the software. This task follows MT-Bench and adopts the LLM-as-a-Judge evaluation method. The evaluation relies on two main metrics: adherence to general principles of software design (such as high cohesion and low coupling) and faithfulness to the requirements.

Environment setup: Based on the provided requirements document, the model generates the dependency files needed to initialize the development environment. During evaluation, the dependency files are built inside a given base isolated environment (a Docker container) using benchmark instructions. The task then runs the repository's benchmark example usage code in the environment built from the model's output and measures the execution success rate.

Code implementation: Based on the requirements document and the architecture design, the model must generate the code files for the entire codebase. DevBench provides an automated testing framework customized for each programming language used, integrating Python's PyTest, C/C++'s GTest, Java's JUnit, and JavaScript's Jest. This task evaluates the pass rate of the model-generated codebase on the benchmark integration and unit tests, run in a benchmark environment.

Integration testing: The model generates integration test code from the requirements to verify the externally facing interfaces of the codebase. This task runs the generated integration tests against the benchmark implementation code and reports the test pass rate.

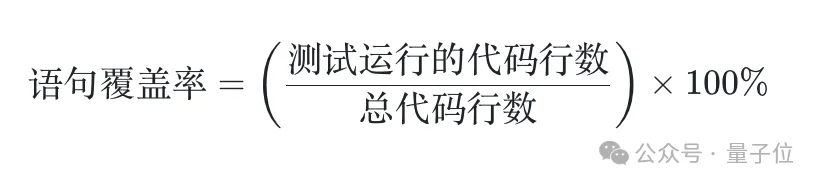

Unit testing: The model generates unit test code from the requirements. As with integration testing, this task runs the generated unit tests against the benchmark implementation code. In addition to the pass rate, this task introduces a statement coverage metric to quantify how thorough the tests are.
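The two quantitative metrics mentioned in the tasks above, pass rate and statement coverage, can be illustrated with a minimal sketch. The `SuiteResult` structure and both function names are hypothetical, and how DevBench actually aggregates results across test suites and repositories is not described here, so this only shows the basic arithmetic.

```python
from dataclasses import dataclass


@dataclass
class SuiteResult:
    """Outcome of running one test suite (hypothetical structure)."""
    passed: int
    total: int


def pass_rate(results):
    """Fraction of test cases that passed, pooled over all suites."""
    total = sum(r.total for r in results)
    return sum(r.passed for r in results) / total if total else 0.0


def statement_coverage(executed, all_statements):
    """Share of statements executed at least once by the tests.

    Both arguments are sets of statement identifiers (for example, line numbers).
    """
    if not all_statements:
        return 0.0
    return len(executed & all_statements) / len(all_statements)


results = [SuiteResult(passed=3, total=4), SuiteResult(passed=1, total=4)]
print(pass_rate(results))                           # 0.5
print(statement_coverage({1, 2, 3}, {1, 2, 3, 4}))  # 0.75
```

A high pass rate with low statement coverage would suggest that the generated tests run correctly but exercise only a small part of the code, which is why the two numbers are reported together.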

The DevBench data preparation process includes three stages: repository preparation, code cleaning, and document preparation.

In the end, the DevBench dataset covers four programming languages and multiple domains, with 22 code repositories in total. The complexity of these repositories and the diversity of the programming paradigms they use pose significant challenges for language models.

A few interesting examples:

TextCNN

Can a large model write a complete TextCNN for binary text classification? Pulling the dataset from Hugging Face (HF) on its own and running the training is a basic requirement. The model also needs to customize hyperparameters, record logs, and store checkpoints according to the requirements of the document, while ensuring the reproducibility of the experiment.

(https://github.com/open-compass/DevBench/tree/main/benchmark_data/python/TextCNN)

Registration & Login

Front-end projects often rely on many component libraries and front-end frameworks. Can the model cope with front-end projects that may have version conflicts?

(https://github.com/open-compass/DevBench/tree/main/benchmark_data/javascript/login-registration)

People Management

How well does the model master the creation and management of SQLite databases? In addition to basic create, read, update, and delete operations, can the model encapsulate the management of campus personnel information and the relational database operations into an easy-to-use command line tool?

(https://github.com/open-compass/DevBench/tree/main/benchmark_data/cpp/people_management)
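For readers unfamiliar with the kind of operations involved, here is a minimal sketch of the basic create, read, update, and delete cycle using Python's standard `sqlite3` module. The DevBench project itself is a C++ repository; the table layout, column names, and helper functions below are assumptions made purely for illustration and are not taken from the benchmark.

```python
import sqlite3

# In-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE people (id INTEGER PRIMARY KEY, name TEXT NOT NULL, role TEXT)"
)


def add_person(name, role):
    """Insert a row and return its generated id."""
    cur = conn.execute(
        "INSERT INTO people (name, role) VALUES (?, ?)", (name, role)
    )
    conn.commit()
    return cur.lastrowid


def update_role(person_id, role):
    """Change the role of an existing person."""
    conn.execute("UPDATE people SET role = ? WHERE id = ?", (role, person_id))
    conn.commit()


def delete_person(person_id):
    """Remove a person by id."""
    conn.execute("DELETE FROM people WHERE id = ?", (person_id,))
    conn.commit()


def list_people():
    """Return all rows ordered by id."""
    return conn.execute("SELECT id, name, role FROM people ORDER BY id").fetchall()


alice = add_person("Alice", "student")
bob = add_person("Bob", "teacher")
update_role(alice, "graduate")
delete_person(bob)
print(list_people())  # [(1, 'Alice', 'graduate')]
```

The parameterized `?` placeholders keep user input out of the SQL text, which matters once such helpers are wrapped in a command line tool.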

Actor Relationship Game

Does the "six degrees of separation" theory hold in the film and television industry? The model needs to fetch data from the TMDB API and build a network of connections between popular actors through their collaborations in movies.

(https://github.com/open-compass/DevBench/tree/main/benchmark_data/java/Actor_relationship_game)
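The idea of connecting actors through shared movies can be sketched as a graph search. The example below is an illustration in Python with made-up cast data, not the benchmark's Java implementation, and it does not call the TMDB API; the function names are hypothetical. It links actors who appear in the same movie and uses breadth-first search to count the hops between two of them.

```python
from collections import deque


def build_costar_graph(casts):
    """Link every pair of actors who appear in the same movie."""
    graph = {}
    for actors in casts.values():
        for a in actors:
            graph.setdefault(a, set()).update(b for b in actors if b != a)
    return graph


def degrees_of_separation(graph, start, goal):
    """Breadth-first search; returns the number of hops, or None if unreachable."""
    if start not in graph or goal not in graph:
        return None
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        actor, dist = queue.popleft()
        if actor == goal:
            return dist
        for neighbor in graph[actor]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


# Made-up cast lists standing in for data that would come from an API.
casts = {
    "Movie A": ["Actor 1", "Actor 2"],
    "Movie B": ["Actor 2", "Actor 3"],
    "Movie C": ["Actor 4"],
}
graph = build_costar_graph(casts)
print(degrees_of_separation(graph, "Actor 1", "Actor 3"))  # 2
print(degrees_of_separation(graph, "Actor 1", "Actor 4"))  # None
```

Breadth-first search is a natural fit here because every collaboration counts as one hop, so the first time the goal is reached is also the shortest path.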

ArXiv digest

Can an easy-to-use ArXiv paper retrieval tool be built? ArXiv's API does not support filtering papers from the last N days, but it can sort results by publication time. Can the model use this to develop a useful paper search tool?

(https://github.com/open-compass/DevBench/tree/main/benchmark_data/python/ArXiv_digest)
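One way to work around the missing "last N days" filter is to request results sorted newest first and apply the cutoff on the client side. The sketch below assumes the entries have already been fetched and parsed into dictionaries with a `published` timestamp; that structure and the `recent_papers` helper are hypothetical, and no real API call is made.

```python
from datetime import datetime, timedelta, timezone
from itertools import takewhile


def recent_papers(entries, days, now):
    """Keep entries published within the last `days` days.

    Assumes `entries` is already sorted newest first, so scanning can stop
    at the first entry older than the cutoff.
    """
    cutoff = now - timedelta(days=days)
    return list(takewhile(lambda e: e["published"] >= cutoff, entries))


now = datetime(2024, 3, 20, tzinfo=timezone.utc)
entries = [
    {"title": "Paper C", "published": datetime(2024, 3, 19, tzinfo=timezone.utc)},
    {"title": "Paper B", "published": datetime(2024, 3, 15, tzinfo=timezone.utc)},
    {"title": "Paper A", "published": datetime(2024, 3, 1, tzinfo=timezone.utc)},
]
print([e["title"] for e in recent_papers(entries, days=7, now=now)])
# ['Paper C', 'Paper B']
```

Because the list is sorted, a real tool could also stop requesting further result pages as soon as it sees the first entry older than the cutoff.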

Experimental findings

The research team used DevBench to comprehensively test currently popular LLMs, including GPT-4-Turbo. The results show that although these models perform well on simple programming tasks, they still encounter significant difficulties when faced with complex, real-world software development challenges. Their performance needs particular improvement when dealing with complex code structures and logic.

DevBench has now joined the OpenCompass (Sinan) large model capability evaluation system. OpenCompass is a one-stop evaluation platform developed and launched by the Shanghai Artificial Intelligence Laboratory for large language models, multimodal large models, and other types of models.

OpenCompass is characterized by reproducibility, comprehensive capability dimensions, rich model support, efficient distributed evaluation, diversified evaluation paradigms, and flexible extensibility. Built on a high-quality, multi-level capability system and tool chain, OpenCompass has introduced a number of new capability assessment methods and supports a range of high-quality bilingual (Chinese and English) evaluation benchmarks. These cover language and understanding, common sense and logical reasoning, mathematical calculation and application, coding in multiple programming languages, agents, and creation and dialogue, enabling a comprehensive diagnosis of the true capabilities of large models. DevBench has broadened OpenCompass's evaluation capabilities in the field of agents.

DevBench paper: https://arxiv.org/abs/2403.08604

GitHub: https://github.com/open-compass/devBench/

OpenCompass: https://github.com/open-compass/opencompass
