01.AI, the AI company founded by Kai-Fu Lee, has put another large model on stage:

the 9-billion-parameter Yi-9B.

Billed as the "top science student" of the Yi series, it has "crammed" on code and mathematics without letting its general ability fall behind.

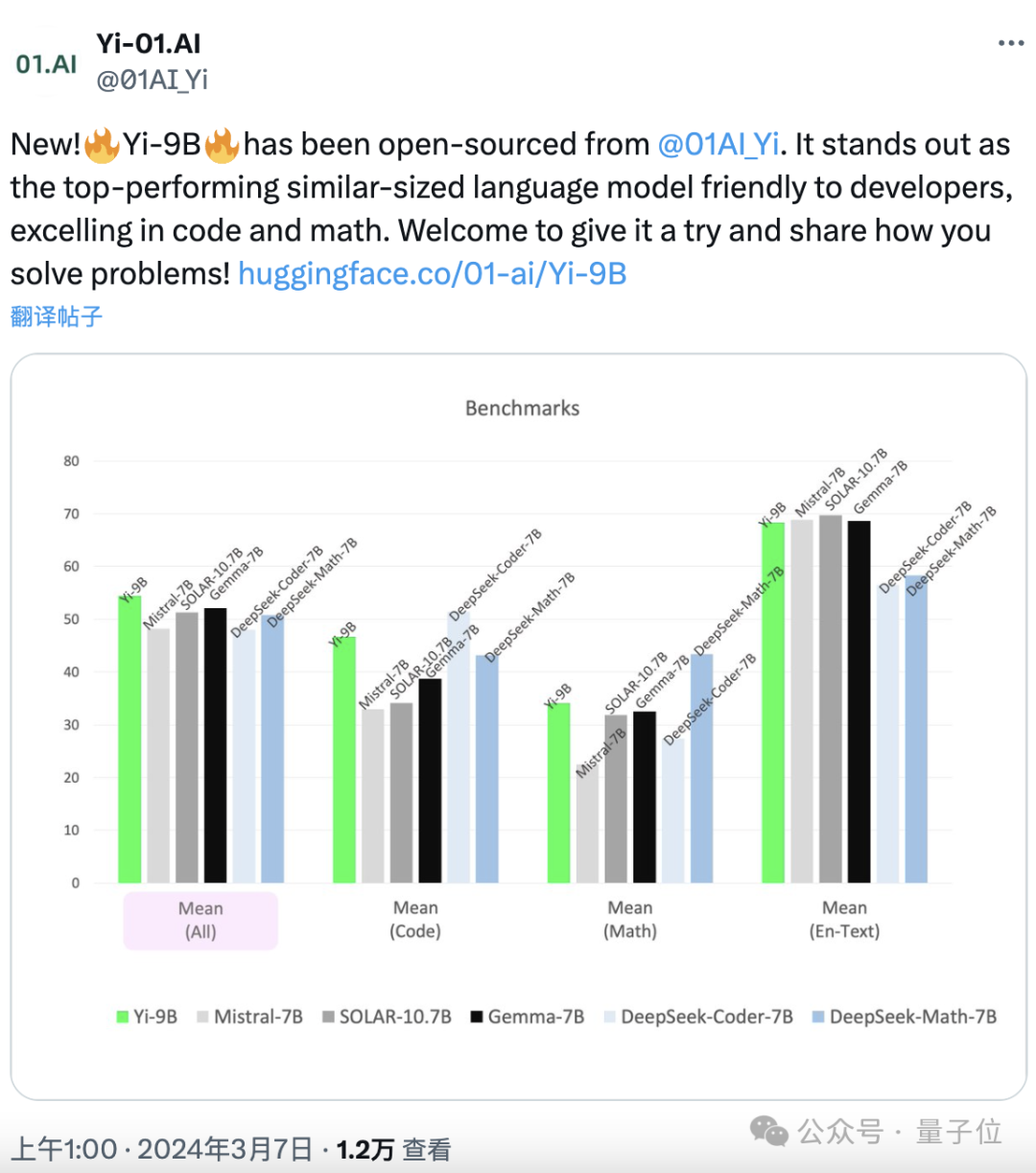

Among open-source models of similar scale (including Mistral-7B, SOLAR-10.7B, Gemma-7B, DeepSeek-Coder-7B-Base-v1.5, etc.), it performs best.

As usual, it is open source from day one and especially developer-friendly:

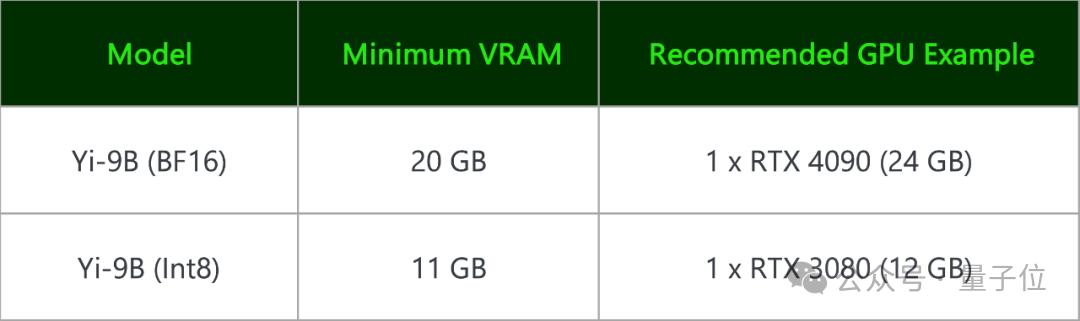

Yi-9B (BF16) and its quantized version Yi-9B (Int8) can both be deployed on consumer-grade graphics cards.

A single RTX 4090 or RTX 3090 is enough.
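Why a 24 GB card suffices can be checked with rough arithmetic. The sketch below assumes 2 bytes per weight in BF16 and 1 byte in Int8, the 8.8 billion total parameters reported later in this article, and 24 GB of memory on both the RTX 4090 and RTX 3090. It counts weights only and ignores the KV cache, activations and runtime overhead, so real usage will be somewhat higher.

```python
# Weight-only memory estimate: ignores KV cache, activations and runtime overhead.
TOTAL_PARAMS = 8.8e9  # total parameters reported for Yi-9B
GPU_MEMORY_GB = 24    # RTX 4090 and RTX 3090 both have 24 GB

# Assumed storage cost per weight for each format.
BYTES_PER_PARAM = {"bf16": 2, "int8": 1}


def weight_memory_gb(params: float, dtype: str) -> float:
    """Gigabytes (10^9 bytes) needed just to store the weights."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("bf16", "int8"):
    needed = weight_memory_gb(TOTAL_PARAMS, dtype)
    print(f"{dtype}: ~{needed:.1f} GB of weights, "
          f"~{GPU_MEMORY_GB - needed:.1f} GB left for everything else")
```

The leftover memory is what remains for the KV cache and activations, which is why Int8 leaves much more room for longer inputs.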

01.AI's Yi family previously included the Yi-6B and Yi-34B series.

Both were pre-trained on 3.1T tokens of Chinese and English data. Yi-9B builds on this and continues training on an additional 0.8T tokens.

The data cutoff is June 2023.

As mentioned at the start, Yi-9B's biggest improvements are in mathematics and coding. So how were these two abilities improved?

According to 01.AI:

Simply adding more data cannot deliver the expected results.

The key is to first scale up the model, growing Yi-6B to 9B, and then run multi-stage incremental training on new data.

First, how was the model size increased?

The premise is a finding from the team's analysis:

Yi-6B has already been fully trained, and adding more tokens may not improve it further, so the team decided to increase its size instead. (The unit in the figure below is B, not TB.)

How? The answer is depth expansion.

According to 01.AI:

Expanding the width of the original model causes larger performance losses. When the model is instead expanded in depth by duplicating suitably chosen layers, the closer the cosine similarity between each new layer's input and output is to 1.0, the better the expanded model preserves the original model's performance, so the performance loss is slight.
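The selection criterion can be made concrete. This is a minimal sketch, not 01.AI's tooling: it assumes per-token hidden states before and after each layer have already been captured (for example from a forward pass over some calibration text), and the helpers `layer_io_cosine` and `rank_layers` are hypothetical. The reasoning is that a layer whose output stays close to its input (cosine near 1.0) acts almost like an identity map, so an extra copy of it should disturb the model least.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def layer_io_cosine(layer_in: List[Vector], layer_out: List[Vector]) -> float:
    """Mean cosine similarity between a layer's input and output, over token positions."""
    scores = [cosine(h_in, h_out) for h_in, h_out in zip(layer_in, layer_out)]
    return sum(scores) / len(scores)


def rank_layers(hidden: List[List[Vector]]) -> List[int]:
    """hidden[i] is the input to layer i and hidden[i + 1] is its output.
    Returns layer indices ordered from most to least identity-like."""
    scores = {i: layer_io_cosine(hidden[i], hidden[i + 1]) for i in range(len(hidden) - 1)}
    return sorted(scores, key=scores.get, reverse=True)


# Toy hidden states for a 2-layer model, 2 tokens, 2 dimensions.
toy_hidden = [
    [[1.0, 0.0], [0.0, 1.0]],  # input to layer 0
    [[0.0, 1.0], [1.0, 0.0]],  # layer 0 rotates every vector: cosine 0
    [[0.1, 1.0], [1.0, 0.1]],  # layer 1 barely changes them: cosine close to 1
]
print(rank_layers(toy_hidden))
```

In a real model the vectors would have thousands of dimensions and many more token positions, but the ranking logic is the same.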

Following this principle, 01.AI duplicated 16 relatively late layers of Yi-6B (layers 12-28) to form the 48-layer Yi-9B.

Experiments showed this works better than the SOLAR-10.7B approach of duplicating the middle 16 layers (layers 8-24).
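The two duplication plans can be written out as layer-index lists. This sketch assumes Yi-6B has 32 layers (consistent with 32 + 16 = 48), reads both ranges as half-open (layers 12-27 and 8-23), and assumes the copied block is inserted directly after the original block; the article does not say where 01.AI placed the copies. `depth_expand` is a hypothetical helper, and each entry of its output names the original layer whose weights initialize that position.

```python
from typing import List


def depth_expand(n_layers: int, dup_start: int, dup_end: int) -> List[int]:
    """Source-layer index for each position of the expanded model.

    Layers dup_start..dup_end-1 are copied once, and (by assumption) the copy is
    placed directly after the original block.
    """
    if not 0 <= dup_start < dup_end <= n_layers:
        raise ValueError("duplicated range must lie inside the original model")
    layers = list(range(n_layers))
    return layers[:dup_end] + layers[dup_start:dup_end] + layers[dup_end:]


yi_plan = depth_expand(32, 12, 28)    # later 16 layers, as described for Yi-9B
solar_plan = depth_expand(32, 8, 24)  # middle 16 layers, SOLAR-style

print(len(yi_plan), yi_plan)
print(len(solar_plan), solar_plan)
```

Under this reading, the SOLAR-style list is simply layers 0-23 followed by layers 8-31 (two overlapping copies of the 32-layer base), while the Yi plan shifts the duplicated block toward the later layers.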

Second, what does the multi-stage training look like?

First, training continues on 0.4T tokens of text and code, using the same data mix as Yi-6B.

Then comes another 0.4T tokens, again text and code, but with a larger share of code and math data.

(Makes sense: it's the same idea as our "think step by step" trick when prompting large models.)
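A two-stage schedule like this is often expressed as a list of stages, each with a token budget and sampling weights per data source. The sketch below uses the 0.4T + 0.4T budgets from the article; the mixture weights are hypothetical placeholders, since the article only says the second stage raises the share of code and math.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Stage:
    name: str
    tokens: float               # token budget for the stage
    mixture: Dict[str, float]   # relative sampling weight per data source


# Budgets follow the article (0.4T + 0.4T); the weights are HYPOTHETICAL placeholders.
STAGES: List[Stage] = [
    Stage("stage 1: Yi-6B mix", 0.4e12, {"text": 0.80, "code": 0.15, "math": 0.05}),
    Stage("stage 2: more code/math", 0.4e12, {"text": 0.60, "code": 0.25, "math": 0.15}),
]


def tokens_per_source(stage: Stage) -> Dict[str, float]:
    """Split the stage's token budget across sources in proportion to the weights."""
    total_weight = sum(stage.mixture.values())
    return {src: stage.tokens * w / total_weight for src, w in stage.mixture.items()}


def sample_source(stage: Stage, rng: random.Random) -> str:
    """Pick which source the next training document is drawn from."""
    sources = list(stage.mixture)
    return rng.choices(sources, weights=[stage.mixture[s] for s in sources])[0]


rng = random.Random(0)
for stage in STAGES:
    budget = {src: f"{n / 1e9:.0f}B" for src, n in tokens_per_source(stage).items()}
    print(stage.name, budget, "next doc from:", sample_source(stage, rng))
```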

Even after these two stages, the team wasn't done. Drawing on two papers (An Empirical Model of Large-Batch Training and Don't Decay the Learning Rate, Increase the Batch Size), it also refined how the training hyperparameters were tuned.

Specifically, starting from a fixed learning rate, the batch size is increased whenever the loss stops decreasing, so the loss keeps falling and the model learns more fully.
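The rule can be sketched as a small scheduler in the spirit of Don't Decay the Learning Rate, Increase the Batch Size: the learning rate stays fixed and the batch size grows when the loss plateaus. The class name and the `patience`, `growth`, `min_delta` and `max_batch_size` settings are hypothetical; the article does not give 01.AI's actual trigger or growth factor.

```python
class BatchSizeOnPlateau:
    """Fixed learning rate; multiply the batch size whenever the loss plateaus."""

    def __init__(self, batch_size: int, growth: int = 2, patience: int = 3,
                 min_delta: float = 1e-3, max_batch_size: int = 4096) -> None:
        self.batch_size = batch_size
        self.growth = growth                  # factor applied on each plateau
        self.patience = patience              # non-improving evals before growing
        self.min_delta = min_delta            # smallest drop that counts as progress
        self.max_batch_size = max_batch_size  # cap, e.g. set by hardware
        self.best_loss = float("inf")
        self.stale_evals = 0

    def step(self, loss: float) -> int:
        """Record one evaluation loss and return the batch size to use next."""
        if loss < self.best_loss - self.min_delta:
            self.best_loss = loss
            self.stale_evals = 0
        else:
            self.stale_evals += 1
            if self.stale_evals >= self.patience:
                self.batch_size = min(self.batch_size * self.growth, self.max_batch_size)
                self.stale_evals = 0
        return self.batch_size


scheduler = BatchSizeOnPlateau(batch_size=256, patience=2)
losses = [2.0, 1.8, 1.7, 1.7, 1.7, 1.6, 1.6, 1.6]
print([scheduler.step(loss) for loss in losses])
```

Growing the batch reduces gradient noise much as decaying the learning rate would, which is the core argument of the cited paper.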

In the end, Yi-9B has 8.8 billion parameters in total and a 4K context length.

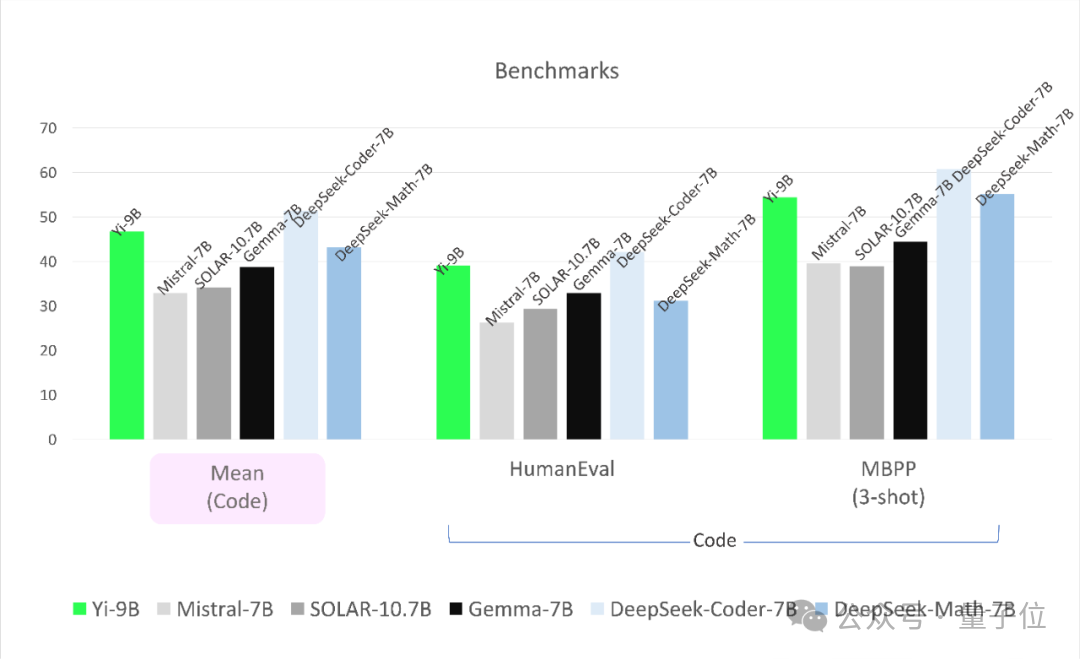

For evaluation, 01.AI used greedy decoding (at each step, the token with the highest probability is selected).
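Greedy decoding fits in a few lines. Here the "model" is any function returning next-token scores over the vocabulary, a hypothetical interface standing in for a real LLM. Because nothing is sampled, the same prompt always produces the same output, which keeps benchmark scores reproducible.

```python
from typing import Callable, List, Sequence

# Hypothetical interface: maps the tokens so far to one score per vocabulary id.
ScoreFn = Callable[[Sequence[int]], Sequence[float]]


def greedy_decode(score_fn: ScoreFn, prompt: List[int], max_new_tokens: int,
                  eos_id: int) -> List[int]:
    """Append the highest-scoring token at every step until EOS or the length limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = score_fn(tokens)
        next_id = max(range(len(scores)), key=scores.__getitem__)  # argmax, no sampling
        tokens.append(next_id)
        if next_id == eos_id:
            break
    return tokens


def toy_scores(tokens: Sequence[int]) -> List[float]:
    """Toy 4-token 'model' that always favors (last token + 1) mod 4."""
    scores = [0.0] * 4
    scores[(tokens[-1] + 1) % 4] = 1.0
    return scores


print(greedy_decode(toy_scores, prompt=[0], max_new_tokens=10, eos_id=3))
```

With Hugging Face `transformers`, the equivalent setting is `generate(..., do_sample=False, num_beams=1)`.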

The comparison models were DeepSeek-Coder, DeepSeek-Math, Mistral-7B, SOLAR-10.7B and Gemma-7B:

(1) DeepSeek-Coder comes from the Chinese company DeepSeek. Its 33B instruction-tuned version surpasses GPT-3.5-turbo on HumanEval, and its 7B version matches the performance of CodeLlama-34B.

DeepSeek-Math, meanwhile, approached GPT-4's level in math with only 7B parameters, stunning the entire open-source community.

(2) SOLAR-10.7B, from South Korea's Upstage AI, was released in December 2023, and its performance surpasses Mixtral-8x7B-Instruct.

(3) Mistral-7B comes from Mistral AI, whose Mixtral-8x7B, an open-source MoE large model, matches or even surpasses Llama 2 70B and GPT-3.5.

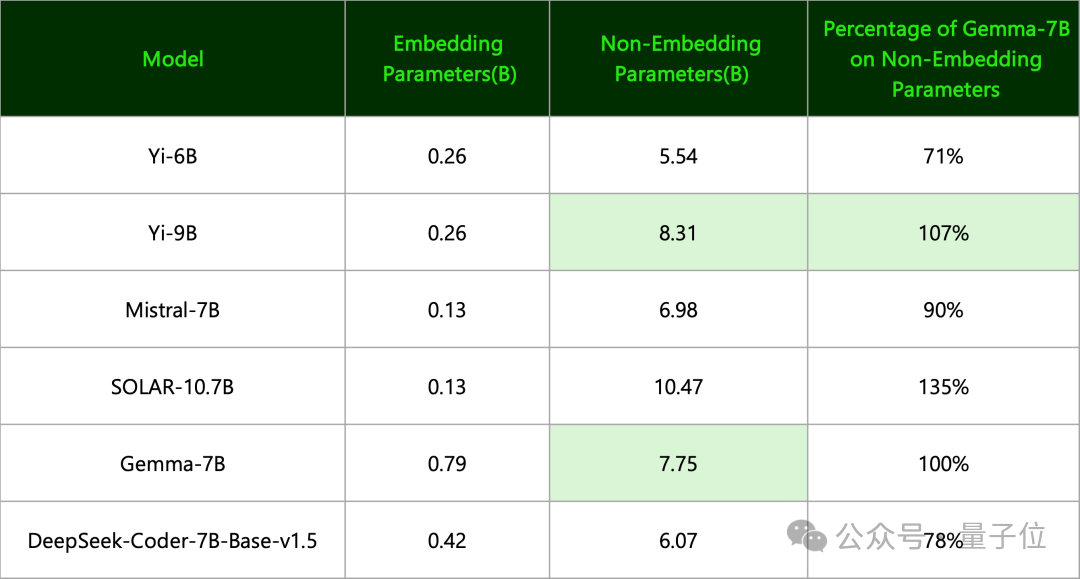

(4) Gemma-7B comes from Google. 01.AI pointed out:

Its effective parameter count is actually on par with Yi-9B's.

(The two follow different naming conventions: Gemma counts only non-embedding parameters, while Yi counts all parameters and rounds up.)
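The gap between the two conventions is simple arithmetic. This sketch uses illustrative values (a vocabulary of 64,000, a hidden size of 4,096, untied input and output embeddings) that are assumptions, not a published config; only the 8.8 billion total comes from this article. Counting only non-embedding parameters gives a smaller figure than counting everything and rounding up.

```python
def non_embedding_params(total: int, vocab_size: int, hidden_size: int,
                         tied_embeddings: bool) -> int:
    """Total minus the token-embedding matrix (and the output head if it is untied)."""
    embedding = vocab_size * hidden_size * (1 if tied_embeddings else 2)
    return total - embedding


def label_total_rounded_up(total: int) -> str:
    """Name a model by its total parameter count, rounded up to whole billions."""
    billions = -(-total // 10**9)  # integer ceiling division
    return f"{billions}B"


TOTAL = 8_800_000_000  # total parameters reported for Yi-9B
# Illustrative config values (assumptions, not a published config).
non_emb = non_embedding_params(TOTAL, vocab_size=64_000, hidden_size=4_096,
                               tied_embeddings=False)
print(label_total_rounded_up(TOTAL), f"{non_emb / 1e9:.2f}B non-embedding")
```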

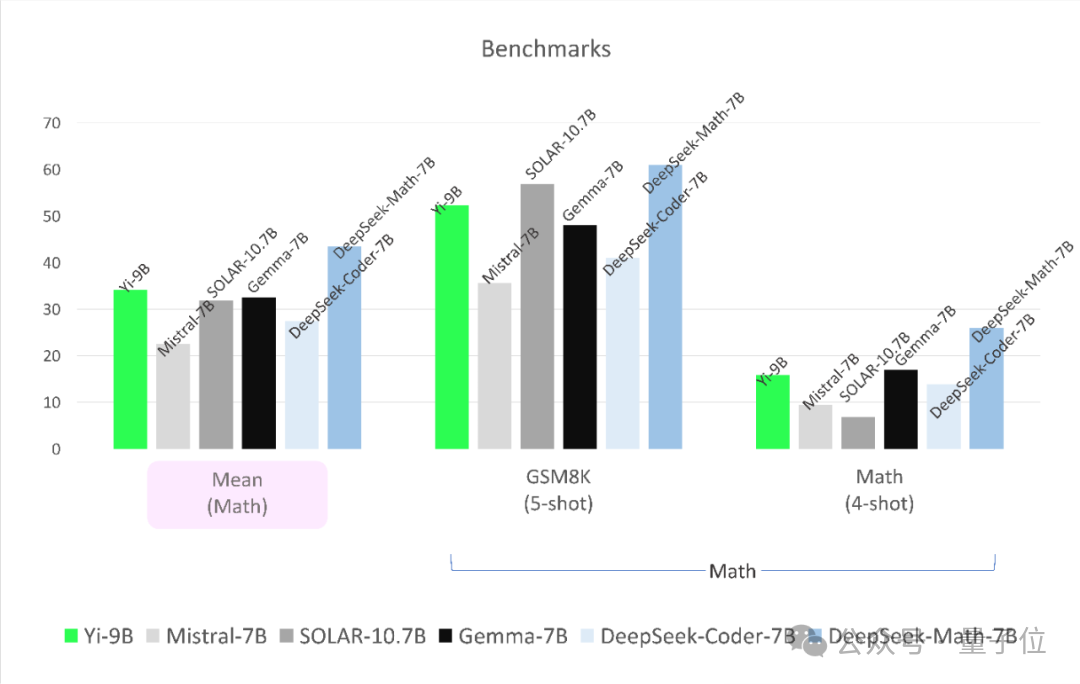

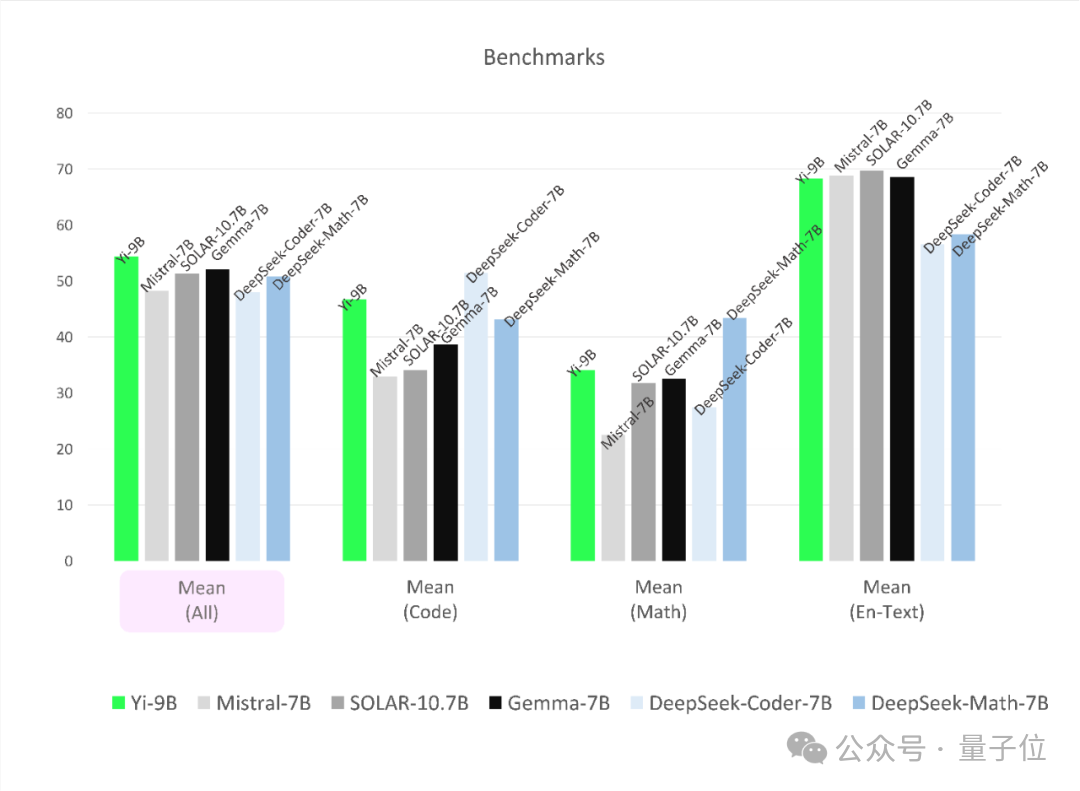

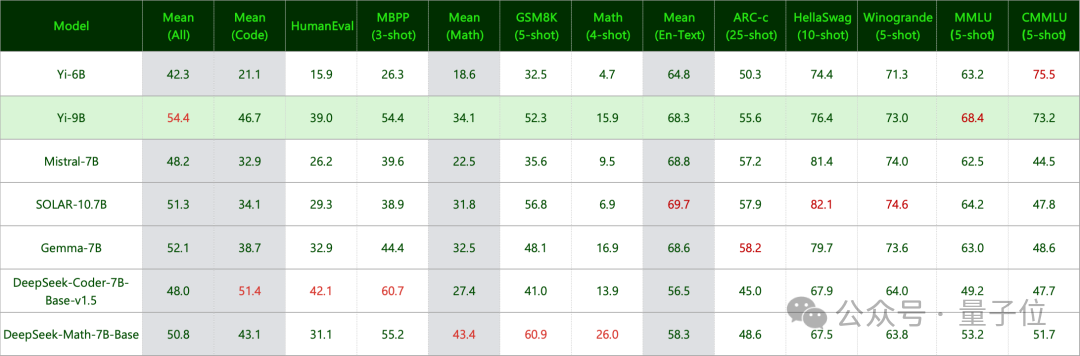

The results are as follows.

First, on coding tasks, Yi-9B is second only to DeepSeek-Coder-7B and knocks out the other four.

In mathematics, Yi-9B is second only to DeepSeek-Math-7B, surpassing the other four.

Its overall ability holds up too.

It performs best among open-source models of similar size, surpassing all five other models.

Common sense and reasoning ability were also tested.

The result: Yi-9B is on par with Mistral-7B, SOLAR-10.7B and Gemma-7B.

As for language skills, its English is strong, and its Chinese has also been widely praised.

Finally, after seeing these results, some netizens said they can't wait to try it.

Others were worried on DeepSeek's behalf:

Hurry up and step up your "game"; your all-round dominance is gone.

Link: https://huggingface.co/01-ai/Yi-9B
