Collaborative sensing is an important way to overcome the perception limits of autonomous vehicles. However, existing research often ignores the heterogeneity that may exist among agents, that is, the diversity of their sensors and perception models. In practice, agents can differ significantly in modality and model, which creates domain gaps and makes collaborative sensing difficult. Research therefore needs to address how to handle heterogeneity between agents, with methods that accommodate these differences so that agents can still work together effectively in an autonomous driving system.

To address this practical challenge, the ICLR 2024 paper "An Extensible Framework for Open Heterogeneous Collaborative Perception" defines the open heterogeneous collaborative perception problem: how can continually emerging new heterogeneous agent types join an existing collaborative sensing system while ensuring high sensing performance and low joining cost? In this paper, researchers from Shanghai Jiao Tong University, the University of Southern California, and Shanghai Artificial Intelligence Laboratory propose HEAL (HEterogeneous ALliance), an extensible framework for heterogeneous agent collaboration that addresses both pain points of the open heterogeneous collaborative sensing problem.

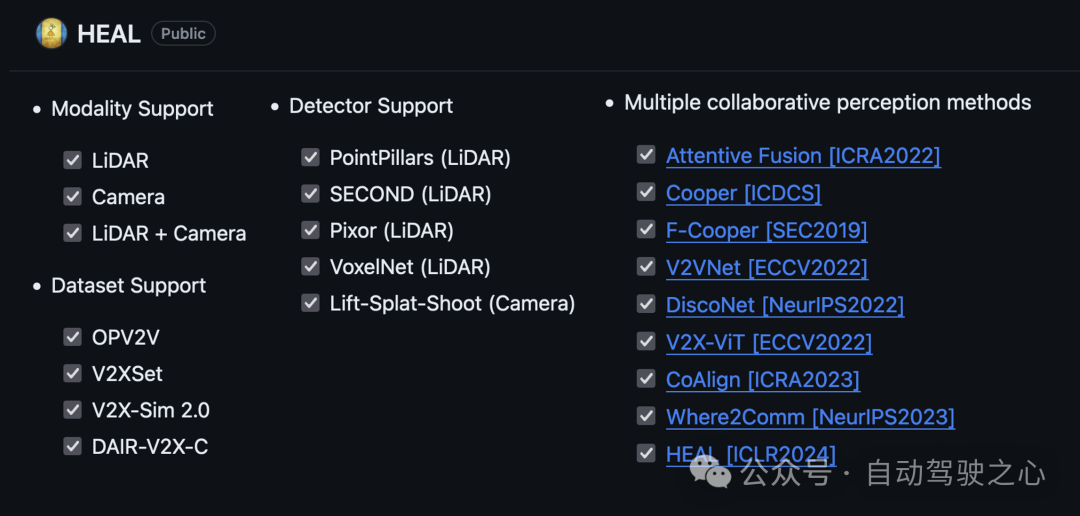

The authors also built a code framework that integrates multiple collaborative sensing datasets and multiple collaborative sensing algorithms and supports multiple modalities; it is now fully open source. They believe it is currently the most complete collaborative sensing code framework and expect it to help more people enter multi-modal and heterogeneous collaborative sensing research.

In recent years, autonomous driving technology has attracted much attention from academia and industry. However, real road conditions are complex and changeable, and the sensors of a single vehicle may be occluded by other vehicles, which challenges the perception capabilities of the autonomous driving system. Collaborative sensing among multiple agents has emerged as a solution to these problems. With advances in communication technology, agents can share sensory information and combine their own sensor data with information from other agents to improve their perception of the surrounding environment. Through collaboration, each agent obtains information beyond its own field of view, which improves both perception and decision-making.

Figure 1. The "ghost probe" problem: line-of-sight occlusion limits single-vehicle perception

Most current work rests on a potentially oversimplified assumption: all agents are homogeneous, that is, their perception systems use the same sensors and share the same detection model. In the real world, however, different agents may have different modalities and models, and new modalities and models continue to emerge. Because sensor technology and algorithms develop rapidly, it is unrealistic to identify all types of collaborative agents (both modalities and models) at the outset. When a heterogeneous agent that never appeared in the training set wishes to join the collaboration, it inevitably faces a domain gap with the existing agents. This gap limits its ability to perform feature fusion with existing agents and therefore significantly limits the scalability of collaborative sensing.

Therefore, the problem of open heterogeneous collaborative sensing arises: how can emerging new agent types be added to an existing collaborative sensing system while ensuring high sensing performance and low integration cost?

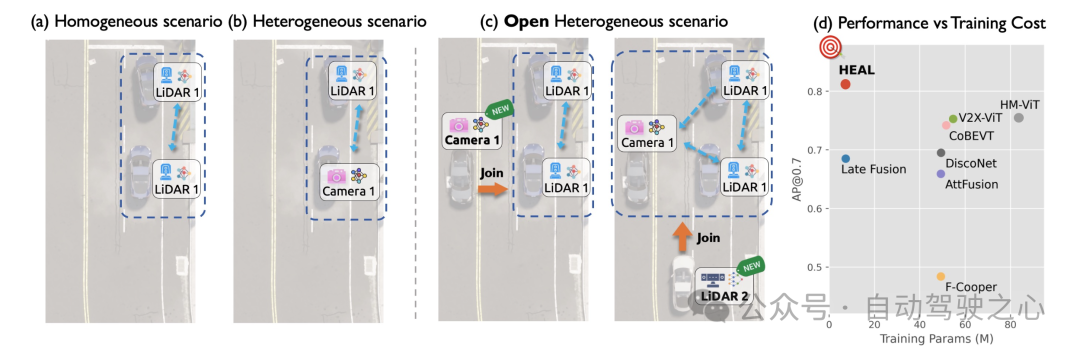

Figure 2. (a) Homogeneous collaborative sensing; (b) heterogeneous collaborative sensing; (c) open heterogeneous collaborative sensing, which considers the addition of new heterogeneous agents; (d) HEAL achieves the best collaborative perception performance at the lowest training cost

One feasible solution to this problem is late fusion (also called post-fusion). By fusing each agent's perceptual output (such as 3D bounding boxes), late fusion bypasses the heterogeneity between new and existing agents, and training only needs to happen within a single agent class. However, the performance of late fusion is not ideal, and it has been shown to be particularly susceptible to interference such as positioning noise and communication delay. Another potential approach is fully collective training, which trains all agent types in the collaboration together to overcome the domain gap. However, this approach requires retraining all models every time a new agent type is introduced, so the training cost rises sharply as new heterogeneous agents keep emerging. HEAL proposes a new open heterogeneous collaboration framework that combines the high performance of fully collective training with the low training cost of late fusion.
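
To make the late fusion (post-fusion) baseline concrete, here is a minimal sketch that pools the detections of several agents and removes duplicates with greedy non-maximum suppression. It is an illustration only, not the implementation evaluated in the paper: the axis-aligned 2D box format `(x1, y1, x2, y2)`, the function names `iou` and `late_fusion`, and the 0.5 threshold are all assumptions, and the boxes are assumed to be already transformed into a common coordinate frame.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def late_fusion(per_agent_detections, iou_threshold=0.5):
    """Merge per-agent lists of (box, score) with greedy NMS.

    Only the final outputs are exchanged, so the agents' sensors and
    models never need to be compatible with each other.
    """
    pooled = [det for dets in per_agent_detections for det in dets]
    pooled.sort(key=lambda det: det[1], reverse=True)  # highest score first
    kept = []
    for box, score in pooled:
        # Keep a box only if it does not overlap a higher-scoring kept box.
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical detections from two agents observing the same scene.
agent_a = [((0.0, 0.0, 2.0, 2.0), 0.9)]
agent_b = [((0.1, 0.0, 2.1, 2.0), 0.8), ((10.0, 10.0, 12.0, 12.0), 0.7)]
fused = late_fusion([agent_a, agent_b])
```

Because the boxes are merged purely by geometry, any pose error shifts an agent's boxes before they are compared, which is one intuition for why this kind of fusion is sensitive to positioning noise.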

The open heterogeneous collaborative perception problem considers the following scenario: heterogeneous agent categories with previously unseen modalities or models are added to an existing collaboration system. Without loss of generality, we assume that the scene initially consists of homogeneous agents. They are equipped with the same type of sensors, deploy the same detection model, and can all communicate with each other. These homogeneous agents form the existing collaborative system. Subsequently, heterogeneous agents with modalities or perception models that have never appeared in the scene join the collaborative system. This dynamic nature is a distinctive feature of deploying collaborative sensing in the real world: the agent categories are not fully determined at the beginning, and the number of types may grow over time. It also differs substantially from previous heterogeneous collaborative sensing problems, in which the heterogeneous categories were determined and fixed in advance.

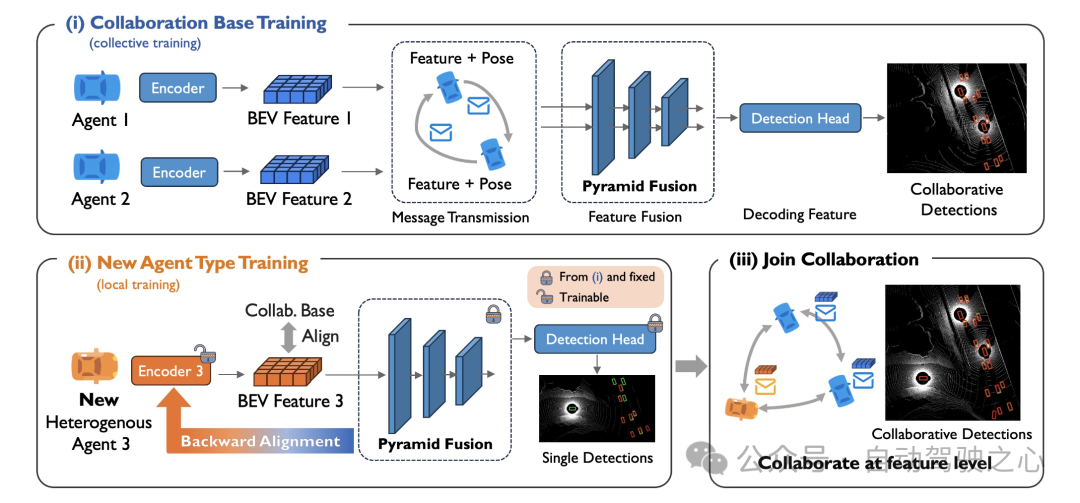

HEAL (HEterogeneous ALliance), the open heterogeneous collaborative perception framework proposed in the paper, uses a two-stage method to add new heterogeneous agents to the collaboration and build a growing heterogeneous alliance: i) collaborative base class training, in which the initial agents train a feature-fusion collaboration network and create a unified feature space; ii) new agent training, which aligns the features of the new agent with the previously established unified feature space so that new and existing agents can collaborate at the feature level.

For each new agent type joining the collaboration, only the second phase of training is required. It is worth noting that the second phase of training can be conducted independently by the agent owner and does not involve collective training with existing agents. This allows the addition of new agents with lower training costs while protecting the new agents’ model details from being exposed.

Figure 3. The overall framework of HEAL

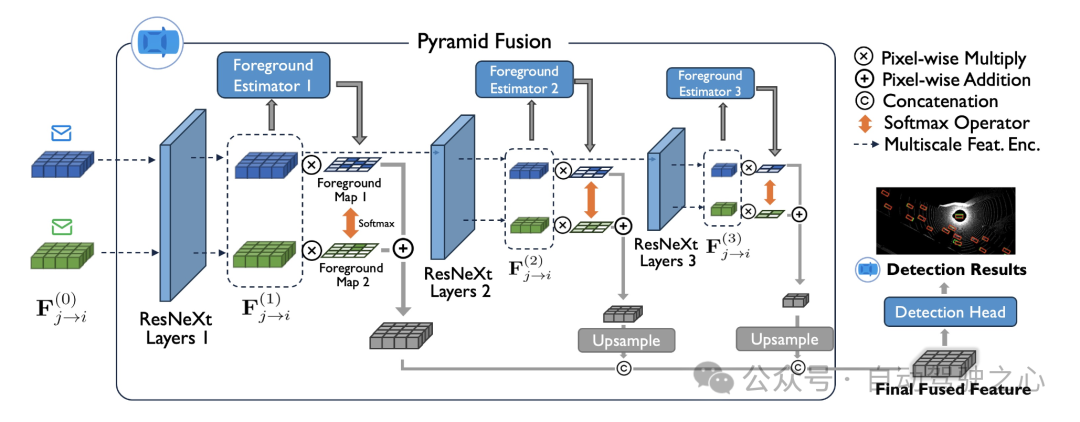

We take the homogeneous agents that exist at the beginning as the collaborative base class and train a feature-fusion-based collaborative sensing network. We propose a novel pyramid fusion network to extract and fuse the features of multiple agents. Specifically, the BEV features produced by the encoder of each homogeneous agent pass through multiple ResNeXt layers at different scales to extract coarse-grained and fine-grained feature information. On the feature map at each scale, we apply a foreground predictor network to estimate, for every BEV feature position, the probability that a foreground object such as a vehicle is present. Across collaborators, the foreground probability maps are normalized into a weight distribution that is used for pixel-wise weighted fusion of the feature maps. After obtaining the fused feature maps at the different scales, we use a series of upsampling networks to bring them to the same feature map size and obtain the final fused feature map.
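
The foreground-weighted fusion step at a single pyramid scale can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name `foreground_weighted_fusion`, the array shapes, and the use of a softmax across agents as the normalization are assumptions, and the foreground scores are supplied directly instead of coming from a trained predictor network.

```python
import numpy as np


def foreground_weighted_fusion(features, foreground_scores):
    """Fuse per-agent BEV features with per-pixel foreground weights.

    features:          array of shape (N, C, H, W), one feature map per agent
    foreground_scores: array of shape (N, H, W), foreground score per pixel
    returns:           fused feature map of shape (C, H, W)
    """
    # Normalize the scores across agents (axis 0) so that the weights at
    # every BEV position sum to 1. Softmax is an assumed choice here.
    shifted = foreground_scores - foreground_scores.max(axis=0, keepdims=True)
    weights = np.exp(shifted)
    weights = weights / weights.sum(axis=0, keepdims=True)
    # Broadcast the weights over the channel axis and sum over agents.
    return (weights[:, None, :, :] * features).sum(axis=0)


# Two hypothetical agents with constant feature maps of 0 and 1.
features = np.stack([np.zeros((3, 4, 4)), np.ones((3, 4, 4))])

# Equal foreground scores: the fused map is the plain average.
equal_scores = np.zeros((2, 4, 4))
fused_equal = foreground_weighted_fusion(features, equal_scores)

# Agent 1 is far more confident: the fused map follows agent 1.
confident_scores = np.stack([np.full((4, 4), -20.0), np.full((4, 4), 20.0)])
fused_confident = foreground_weighted_fusion(features, confident_scores)
```

In the full pyramid, the same operation would be repeated at every scale before the fused maps are upsampled to a common size and combined.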

Figure 4. Pyramid Fusion Network

The fused feature map will pass through a detection head and be converted into the final collaborative detection result. Both the collaborative detection results and the probability map of the foreground are supervised by ground-truth. After training, the parameters of the collaboration network (pyramid fusion network) save the relevant feature information of the collaboration base class and construct a shared feature space for subsequent alignment of new heterogeneous agents.

We consider adding a new heterogeneous agent type. We propose a novel backward alignment method. The core idea is to utilize the pyramid fusion network and detection head of the previous stage as the detector backend of the new agent, and only update the parameters related to the front-end encoder.

It is worth noting that training is carried out on individual agents of the new heterogeneous category and does not involve collaboration between agents. Therefore, the input of the pyramid fusion network is a single feature map, rather than the multi-agent feature maps used in stage one. Because the pretrained pyramid fusion module and detection head serve as the backend and are kept fixed, training adapts the front-end encoder to the backend parameters so that the features encoded by the new agent are consistent with the unified feature space. Since the new agent's features are aligned with those of existing agents, the agents can collaborate at the feature level with high performance.
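
The idea of training only the encoder against a frozen backend can be sketched with a toy linear model. Everything here is an assumption made for illustration: the function name `train_new_agent_encoder`, the linear encoder and backend, the random data, the squared-error loss, and the hyperparameters. The actual method uses a neural encoder with the pretrained pyramid fusion network and detection head as the backend.

```python
import numpy as np


def train_new_agent_encoder(steps=500, lr=0.01, seed=0):
    rng = np.random.default_rng(seed)

    # Frozen backend from stage one (toy stand-in for the pyramid fusion
    # network plus detection head): feature dim 4 -> output dim 2.
    w_backend = rng.normal(size=(4, 2))
    w_backend_initial = w_backend.copy()

    # Trainable encoder of the new agent: sensor dim 6 -> feature dim 4.
    w_encoder = 0.1 * rng.normal(size=(6, 4))

    # Single-agent training data owned by the new agent (synthetic).
    x = rng.normal(size=(64, 6))
    y = rng.normal(size=(64, 2))

    losses = []
    for _ in range(steps):
        features = x @ w_encoder            # encode the new agent's input
        error = features @ w_backend - y    # run the frozen backend
        losses.append(float(np.mean(error ** 2)))
        # Gradient of the mean squared error with respect to the encoder
        # only; the backend receives no update.
        grad_encoder = (2.0 / error.size) * x.T @ error @ w_backend.T
        w_encoder -= lr * grad_encoder

    backend_unchanged = np.array_equal(w_backend, w_backend_initial)
    return losses, backend_unchanged


losses, backend_unchanged = train_new_agent_encoder()
```

Since the backend never changes, the only way to reduce the loss is for the encoder to produce features that the existing backend already understands, which is the sense in which the new agent is aligned to the shared feature space.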

Backward alignment also shows a unique advantage: training is only performed on a new single agent. This greatly reduces the training cost and the data collection cost of spatio-temporal synchronization each time a new agent is added. Furthermore, it prevents the new agent’s model details from being exposed to others and allows the owner of the new agent to train the model using their own sensor data. This will significantly address many concerns that automotive companies may have when deploying collaborative vehicle-to-vehicle sensing technology (V2V).

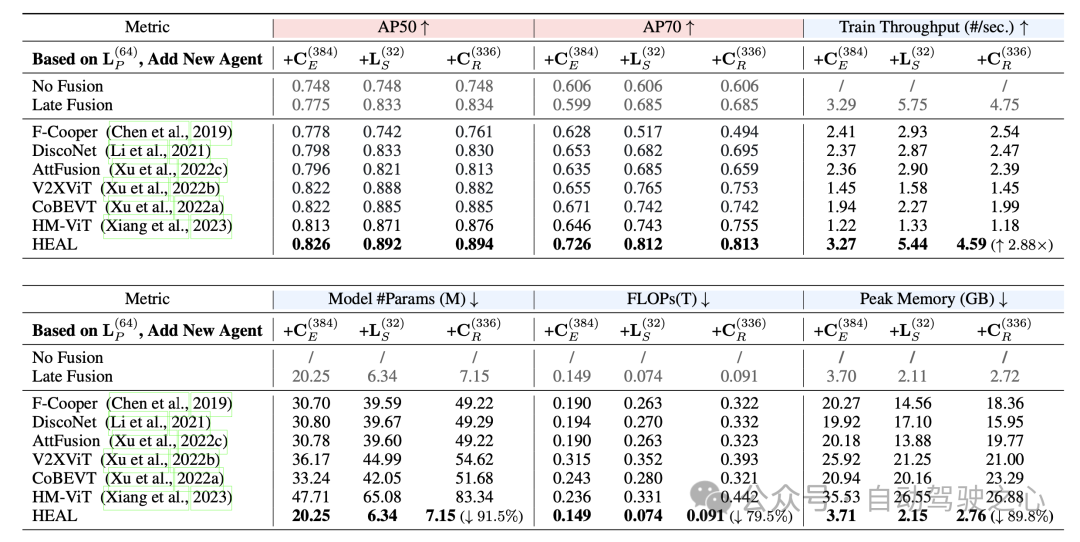

Building on the OPV2V dataset, the paper proposes OPV2V-H, a more heterogeneous dataset that supplements each vehicle with 16-channel and 32-channel LiDAR data as well as data from 4 depth cameras. Experimental results on OPV2V-H and the real-world dataset DAIR-V2X show that HEAL significantly reduces a range of training costs (training parameters, FLOPs, training time, etc.) for heterogeneous agents joining the collaboration, while maintaining very high collaborative detection performance.

Figure 5. HEAL has both high performance and low training cost

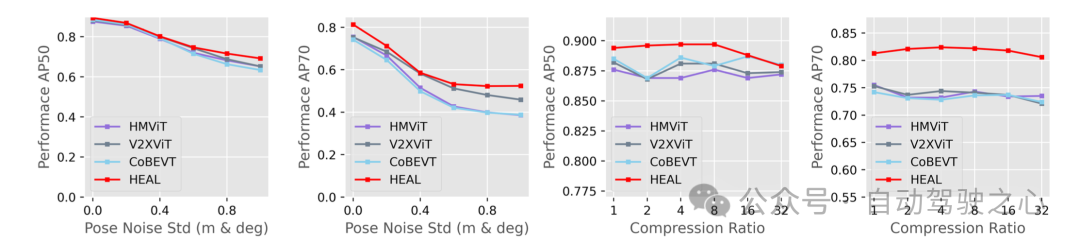

In the presence of positioning noise and feature compression, HEAL still maintains the best detection performance, which indicates that HEAL is currently the most effective collaborative sensing algorithm in settings that are closer to reality.
