You can train an AI image-generation model even if you don't know how to write code!

With this framework, everything from training to inference is handled in one place, and multiple models can be managed at once.

A team at Alibaba has released and open-sourced SCEPTER Studio, a general-purpose image generation workbench.

With it, you can train and fine-tune models directly in a web interface, without writing any code, and manage the related data there too.

The team has also released an online demo with three built-in models, so you can try SCEPTER's inference features in the browser.

So let’s take a look at what SCEPTER can do specifically!

With SCEPTER, you no longer need to write programs: training and fine-tuning come down to selecting a model and adjusting parameters on a web page.

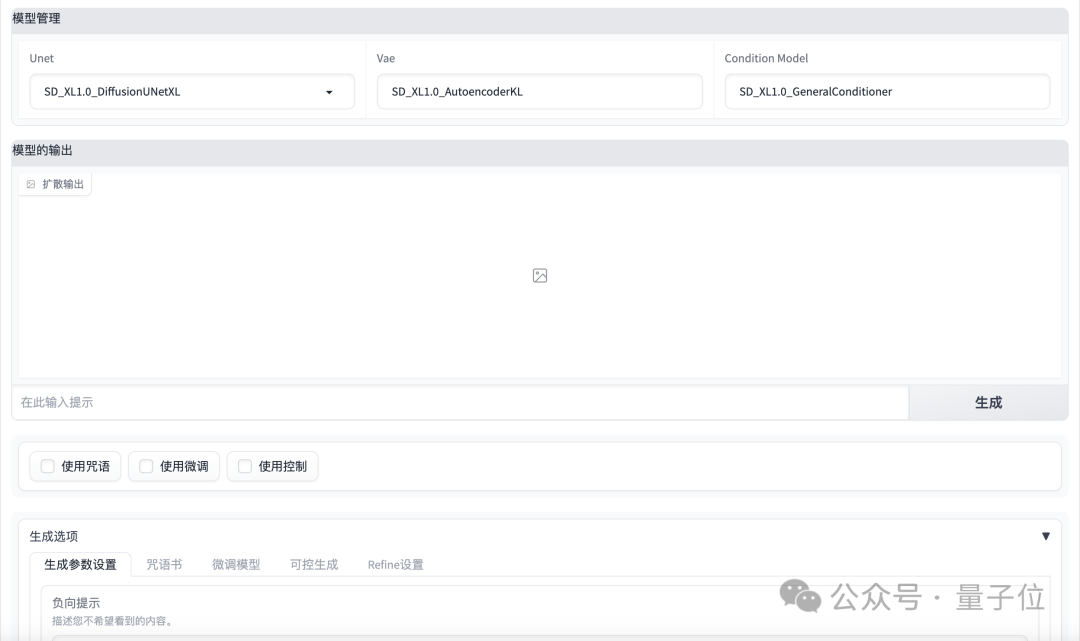

Specifically, on the model side, SCEPTER currently supports SD 1.5, SD 2.1, and SDXL from the Stable Diffusion family.

For fine-tuning, it supports conventional full-parameter fine-tuning, LoRA, and other methods, as well as the team's own SCEdit framework. Support for the Res-Tuning method is planned.
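For readers new to LoRA, the core idea can be sketched in a few lines: the pretrained weight stays frozen, and only a small low-rank update is trained. The sketch below uses NumPy and a plain linear layer; the class name `LoRALinear` and its parameters are illustrative and are not SCEPTER's implementation, which applies the idea inside the diffusion model's layers.

```python
import numpy as np


class LoRALinear:
    """Hypothetical sketch: frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank=4, alpha=4.0, rng=None):
        rng = np.random.default_rng() if rng is None else rng
        d_out, d_in = weight.shape
        self.W = weight                                     # frozen pretrained weight, (d_out, d_in)
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))   # trainable down-projection
        self.B = np.zeros((d_out, rank))                    # trainable up-projection, zero-initialized
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (batch, d_in). Base output plus the scaled low-rank correction.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self):
        # Fold the update into W so inference costs the same as the original layer.
        return self.W + self.scale * self.B @ self.A

    def trainable_params(self):
        return self.A.size + self.B.size


rng = np.random.default_rng(0)
layer = LoRALinear(rng.normal(size=(64, 64)), rank=4, rng=rng)
print(layer.trainable_params(), layer.W.size)  # 512 4096
```

Because `B` starts at zero, the layer initially behaves exactly like the pretrained one, and training only touches `A` and `B` (512 values here versus 4,096 in the full weight).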

SCEdit makes fine-tuning a diffusion model more efficient by editing its skip connections, and the team reports that it uses 30% to 50% less memory than LoRA.
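To illustrate what "editing a skip connection" means, here is a minimal NumPy sketch, assuming a U-Net-style network where each decoder block concatenates its input with a skip feature from the encoder. A small residual tuner modifies the skip feature before that concatenation, and the backbone weights are left untouched. This is a generic bottleneck adapter chosen for illustration; the real SC-Tuner design is defined in the SCEdit paper and the SCEPTER code, and the function names here are hypothetical.

```python
import numpy as np


def skip_tuner_init(channels, hidden, rng):
    """Hypothetical bottleneck tuner: down-project, ReLU, up-project (up starts at zero)."""
    return {
        "down": rng.normal(0.0, 0.02, size=(channels, hidden)),
        "up": np.zeros((hidden, channels)),
    }


def tune_skip(skip, params):
    """Residual edit of a skip feature: skip + T(skip).

    skip: (tokens, channels) feature produced by an encoder block.
    """
    h = np.maximum(skip @ params["down"], 0.0)
    return skip + h @ params["up"]


def decoder_input(decoder_feat, skip, params):
    # The decoder block sees its own input concatenated with the edited skip feature.
    return np.concatenate([decoder_feat, tune_skip(skip, params)], axis=-1)


rng = np.random.default_rng(0)
params = skip_tuner_init(channels=8, hidden=2, rng=rng)
skip = rng.normal(size=(5, 8))
print(decoder_input(rng.normal(size=(5, 8)), skip, params).shape)  # (5, 16)
```

Only the tuner's `down` and `up` matrices would be trained. With `up` initialized to zero, the edited skip feature equals the original one, so training starts from the pretrained model's behavior.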

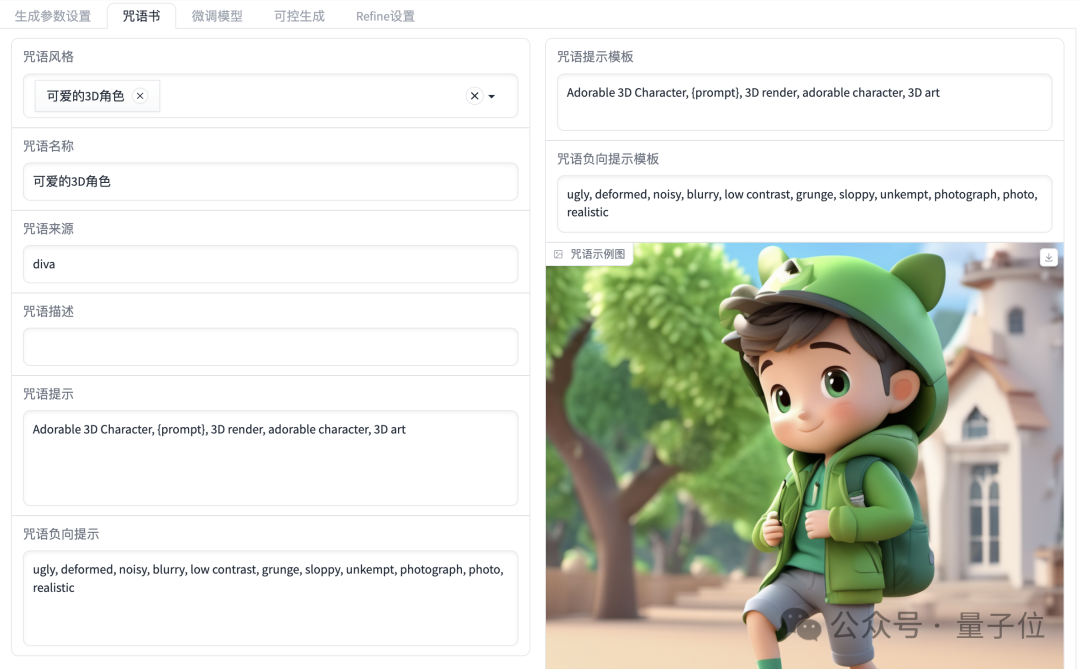

For training data, SCEPTER ships with the team's own datasets covering six styles, including 3D, Japanese manga, oil painting, and sketch, with 30 image-text pairs per style.

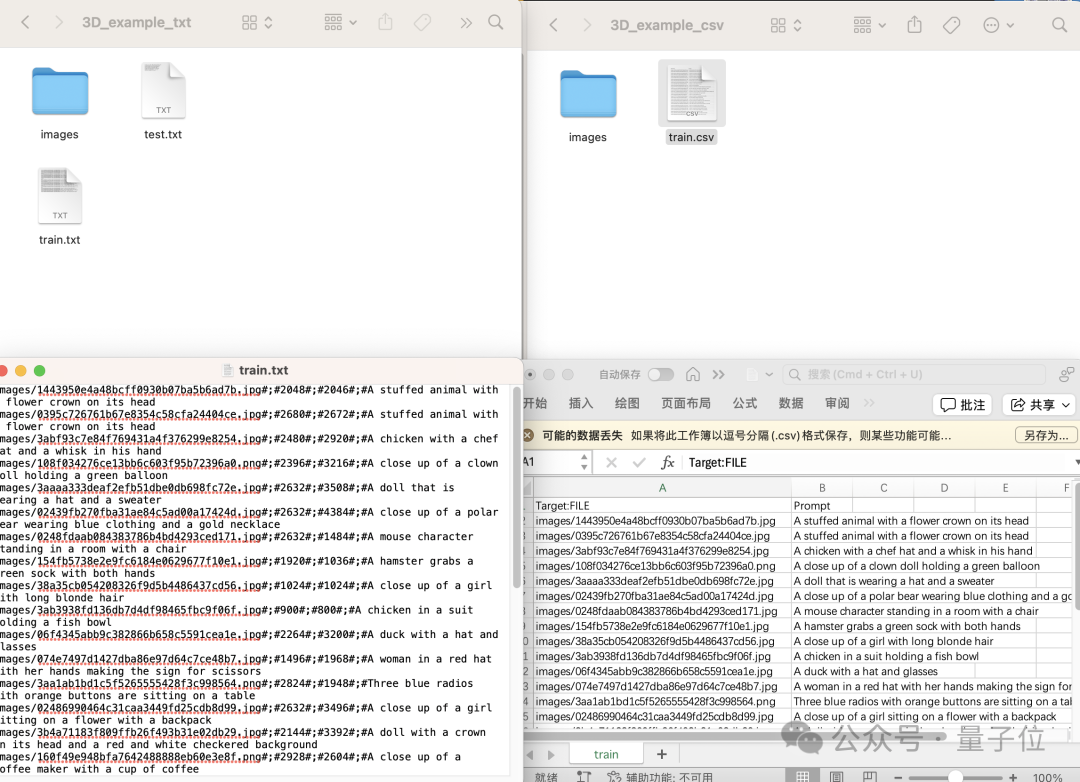

You can also bring your own images: pack them into a compressed archive, map each image (by file name) to its prompt in a CSV or TXT file, and import the archive into SCEPTER.
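Preparing such an archive can be scripted with Python's standard library. The sketch below assumes a folder of images plus a dictionary of captions; the `images/` folder inside the archive, the index file name `train.csv`, and the column headers `file_name` and `prompt` are placeholders, not SCEPTER's documented format, so check the GitHub tutorial for the layout it actually expects.

```python
import csv
import io
import zipfile
from pathlib import Path


def build_dataset_zip(image_dir, prompts, out_zip, index_name="train.csv"):
    """Pack images plus a CSV index (file name -> prompt) into one zip archive.

    `prompts` maps an image file name (e.g. "cat.png") to its caption.
    Folder layout, index name, and column headers are hypothetical placeholders.
    """
    image_dir = Path(image_dir)
    missing = [name for name in prompts if not (image_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"images listed in prompts but not found: {missing}")

    # Build the index in memory so it can be written straight into the archive.
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["file_name", "prompt"])  # hypothetical header
    for name, prompt in sorted(prompts.items()):
        writer.writerow([f"images/{name}", prompt])

    with zipfile.ZipFile(out_zip, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(index_name, buf.getvalue())
        for name in sorted(prompts):
            zf.write(image_dir / name, arcname=f"images/{name}")
    return out_zip


# Example: build_dataset_zip("my_images", {"cat.png": "a cat, oil painting style"}, "my_dataset.zip")
```

The function refuses to build an archive if any listed image is missing, which catches file-name typos before the upload rather than after.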

If you'd rather not write an index file at all, you can upload images directly and annotate their prompts in the SCEPTER interface, which also lets you add and delete images in a dataset.

For inference, SCEPTER supports downstream tasks including text-to-image generation and controllable image synthesis, with image editing planned. Its usage is similar to the existing Stable Diffusion WebUI.

The SCEPTER interface also integrates a "spell book" (a prompt collection) and several ready-made fine-tuned models.

So, how do you get started with SCEPTER?

If you just want to try image generation, the official demos on Hugging Face and ModelScope are enough, and the ModelScope version also has a Chinese interface.

If you want data management, training, and the other features, you need to install and deploy the full version yourself; the tutorial on the GitHub page covers the steps.

Throughout the whole process, only installation and deployment require a few simple commands; everything after that is done directly in the web interface.

If you're interested, give it a try!

Project page: https://github.com/modelscope/scepter
