A high signal-to-noise ratio is crucial for fluorescence imaging to visualize biological phenomena accurately; however, noise remains one of the major obstacles to imaging sensitivity.

A research team at Tsinghua University has developed the spatial redundancy denoising Transformer (SRDTrans), which removes noise from fluorescence images in a self-supervised manner.

The team proposed a new sampling strategy that exploits spatial redundancy to extract adjacent orthogonal training pairs, eliminating the dependence on high imaging speed. In addition, they developed a lightweight spatiotemporal Transformer architecture that captures long-range dependencies and high-resolution features at low computational cost.

SRDTrans preserves high-frequency information without over-smoothing structures or distorting fluorescence traces. Furthermore, because it does not rely on specific imaging procedures or assumptions about the sample, SRDTrans can be readily extended to various imaging modalities and biological applications.

The study was titled "Spatial redundancy transformer for self-supervised fluorescence image denoising" and was published in "Nature Computational Science" on December 11, 2023.

The rapid development of in vivo imaging technology allows researchers to observe biological structures and activities at the micrometer and even nanometer scale. Fluorescence microscopy, a widely used imaging method, helps reveal new physiological and pathological mechanisms thanks to its high spatiotemporal resolution and molecular specificity. Its primary goal is to obtain clean, clear images that contain enough sample information to ensure accurate downstream analysis and support confident conclusions.

However, various biophysical and biochemical factors limit fluorescence imaging in practice. For example, fluorophore brightness, phototoxicity, and photobleaching can all degrade imaging results. When photons are limited, the inherent photon shot noise can significantly reduce the signal-to-noise ratio (SNR) of the image, especially under low illumination and during high-speed observation. These factors compromise the quality and reliability of fluorescence imaging and must be overcome or mitigated in practice.
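Why photon limitation hurts so much can be seen from the statistics of shot noise: photon counts follow a Poisson distribution, whose standard deviation equals the square root of its mean, so the shot-noise-limited SNR grows only as the square root of the detected photon number N. The short simulation below is an illustrative sketch, not part of SRDTrans; the function name and photon counts are hypothetical, and it ignores detector read noise and background.

```python
import numpy as np

def shot_noise_snr(mean_photons: float, n_pixels: int = 100_000, seed: int = 0) -> float:
    """Empirical SNR (mean / std) of Poisson-distributed photon counts (shot noise only)."""
    rng = np.random.default_rng(seed)
    counts = rng.poisson(lam=mean_photons, size=n_pixels)
    return counts.mean() / counts.std()

# Compare the simulated SNR with the theoretical sqrt(N) for a few photon budgets.
for photons in (1, 10, 100, 1000):
    print(f"{photons:5d} photons/pixel -> SNR ~ {shot_noise_snr(photons):.1f} "
          f"(theory sqrt(N) = {np.sqrt(photons):.1f})")
```

Halving the exposure (for example, to image twice as fast) therefore lowers the shot-noise-limited SNR by a factor of about 1.4, which is why high-speed and low-illumination imaging are the hardest cases.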

A variety of methods have been proposed to remove noise from fluorescence images. Traditional denoising algorithms based on numerical filtering and mathematical optimization perform unsatisfactorily and have limited applicability. In recent years, deep learning has achieved remarkable results in image denoising.

By training iteratively on ground-truth (GT) datasets, deep neural networks can learn the mapping between noisy images and their clean counterparts. The effectiveness of this supervised approach depends mainly on the availability of paired GT images.

Obtaining clean, pixel-wise registered images is a major challenge when observing living organisms, because samples often undergo rapid dynamic changes. To address this problem, several self-supervised methods have been proposed that make denoising in fluorescence imaging more broadly applicable and practical.
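Self-supervised denoisers of this kind typically train on pairs of independently noisy views of the same content instead of noisy/clean pairs. A widely cited rationale (the Noise2Noise principle) is that, under a mean-squared-error loss, the best prediction of a noisy target is its expected value, which equals the clean signal when the noise is zero-mean and independent between the two views. The toy example below illustrates this with a single pixel; Gaussian noise and the numbers used are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
clean = 5.0                                                   # hypothetical true pixel intensity
noisy_targets = clean + rng.normal(0.0, 2.0, size=20_000)     # independent, zero-mean noisy observations

# Search for the constant prediction that minimises MSE against the noisy targets.
candidates = np.linspace(0.0, 10.0, 1001)
mse = [np.mean((noisy_targets - c) ** 2) for c in candidates]
best = candidates[int(np.argmin(mse))]
print(f"MSE-optimal prediction: {best:.2f} (clean value {clean})")
```

Even though the clean value never appears as a target, the MSE-optimal prediction lands on it, which is what allows a network to learn denoising from noisy pairs alone.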

Better denoising performance requires the ability to extract global spatial information and long-range temporal correlations simultaneously, which convolutional neural networks (CNNs) lack because of the locality of their convolution kernels. In addition, their inherent spectral bias makes CNNs preferentially fit low-frequency features while neglecting high-frequency ones, inevitably producing over-smoothed denoising results.
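The locality argument can be made concrete by computing the receptive field of stacked convolutions: with kernel size k and stride 1, each layer extends the region an output pixel can "see" by only k - 1 pixels along each axis. The helper below is a generic sketch of the standard receptive-field recurrence, not a model of any network in the paper; the layer counts are arbitrary examples.

```python
def receptive_field(num_layers: int, kernel_size: int = 3, stride: int = 1) -> int:
    """Receptive field (pixels along one axis) of stacked convolutions sharing one stride."""
    rf, jump = 1, 1
    for _ in range(num_layers):
        rf += (kernel_size - 1) * jump   # each layer adds (k - 1) input steps
        jump *= stride                   # spacing between neighbouring outputs in input pixels
    return rf

# With stride 1, the receptive field grows only linearly with depth: 1 + L * (k - 1).
for layers in (1, 5, 10, 50):
    print(f"{layers:3d} layers of 3x3 conv -> receptive field {receptive_field(layers)} px")
```

Downsampling (stride > 1) widens the receptive field faster, but at the cost of spatial resolution, which is one reason global context and high-resolution detail are hard to obtain together in a CNN.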

The research team at Tsinghua University proposed the spatial redundancy denoising Transformer (SRDTrans) to solve these dilemmas.

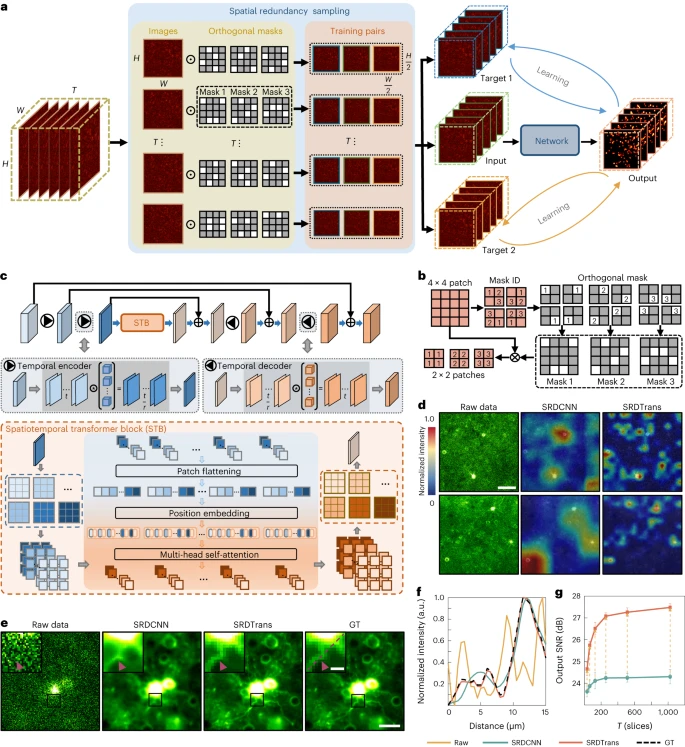

Figure: SRDTrans principle and performance evaluation. (Source: paper)

On the one hand, the researchers proposed a spatial redundancy sampling strategy that extracts three-dimensional (3D) training pairs from raw time-lapse data in two orthogonal directions.

Because this scheme does not rely on the similarity between adjacent frames, SRDTrans remains suitable for very fast activities and extremely low imaging speeds. This makes it complementary to DeepCAD, the team's earlier method, which exploits temporal redundancy.
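The idea of spatial redundancy sampling can be sketched in a few lines: split every frame into 2x2 cells and build sub-stacks from neighbouring pixels, so that each training pair consists of two spatially adjacent, independently noisy views that span the full time axis. This is a simplified illustration under stated assumptions: the function name is hypothetical, and the fixed choice of the top-left pixel as input (with its right and lower neighbours as targets) is ours; the paper's actual pixel selection, randomization, and pairing may differ.

```python
import numpy as np

def spatial_redundancy_pairs(stack: np.ndarray):
    """Split a (T, H, W) time-lapse stack into spatially sub-sampled training pairs.

    Simplified sketch: each frame is divided into 2x2 cells; the top-left pixel of every
    cell forms the input sub-stack, and its right-hand and lower neighbours form the
    targets for the two orthogonal (horizontal and vertical) directions.
    """
    t, h, w = stack.shape
    h2, w2 = h - h % 2, w - w % 2          # crop odd edges so every 2x2 cell is complete
    s = stack[:, :h2, :w2]
    source = s[:, 0::2, 0::2]              # top-left pixel of each cell
    target_horizontal = s[:, 0::2, 1::2]   # right-hand neighbour
    target_vertical = s[:, 1::2, 0::2]     # lower neighbour
    return (source, target_horizontal), (source, target_vertical)

rng = np.random.default_rng(0)
movie = rng.poisson(5.0, size=(100, 64, 64)).astype(np.float32)  # synthetic noisy movie
(x_h, y_h), (x_v, y_v) = spatial_redundancy_pairs(movie)
print(x_h.shape, y_h.shape)  # (100, 32, 32) (100, 32, 32)
```

Input and target are taken from the same frame at every time point, so the pair stays valid no matter how much the sample changes between frames; the trade-off is a halved spatial sampling rate in each sub-stack.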

Since SRDTrans makes no assumptions about contrast mechanisms, noise models, sample dynamics, or imaging speed, it can easily be extended to other biological samples and imaging modalities, such as membrane voltage imaging, single-protein detection, light-sheet microscopy, confocal microscopy, light-field microscopy, and super-resolution microscopy.

On the other hand, the researchers designed a lightweight spatiotemporal Transformer network to fully exploit long-range correlations. An optimized feature-interaction mechanism lets the model obtain high-resolution features with a small number of parameters. Compared with classic CNNs, SRDTrans has stronger global perception and better preserves high-frequency information, revealing fine-grained spatiotemporal patterns that were previously difficult to discern.
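The "global perception" of Transformers comes from self-attention, in which every output token is a weighted combination of all input tokens. The sketch below is generic single-head scaled dot-product attention in NumPy, not the SRDTrans architecture (whose lightweight feature-interaction design is not reproduced here); the token count, dimensions, and random weights are arbitrary assumptions. It checks that perturbing only the most distant token changes the output at the first token, which a small convolution kernel could not do.

```python
import numpy as np

def self_attention(x: np.ndarray, wq: np.ndarray, wk: np.ndarray, wv: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product self-attention over N tokens of dimension d."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)      # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)    # softmax over all tokens
    return weights @ v

rng = np.random.default_rng(0)
n_tokens, d = 256, 16                     # e.g. 256 flattened patches from one frame
x = rng.normal(size=(n_tokens, d))
wq, wk, wv = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))

out = self_attention(x, wq, wk, wv)
x_far = x.copy()
x_far[-1] += 1.0                          # perturb only the most distant token
out_far = self_attention(x_far, wq, wk, wv)
print("first token changed:", not np.allclose(out[0], out_far[0]))
```

Full attention costs O(N²) in the number of tokens, which is why lightweight Transformer designs generally aim to keep this global context while reducing computation.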

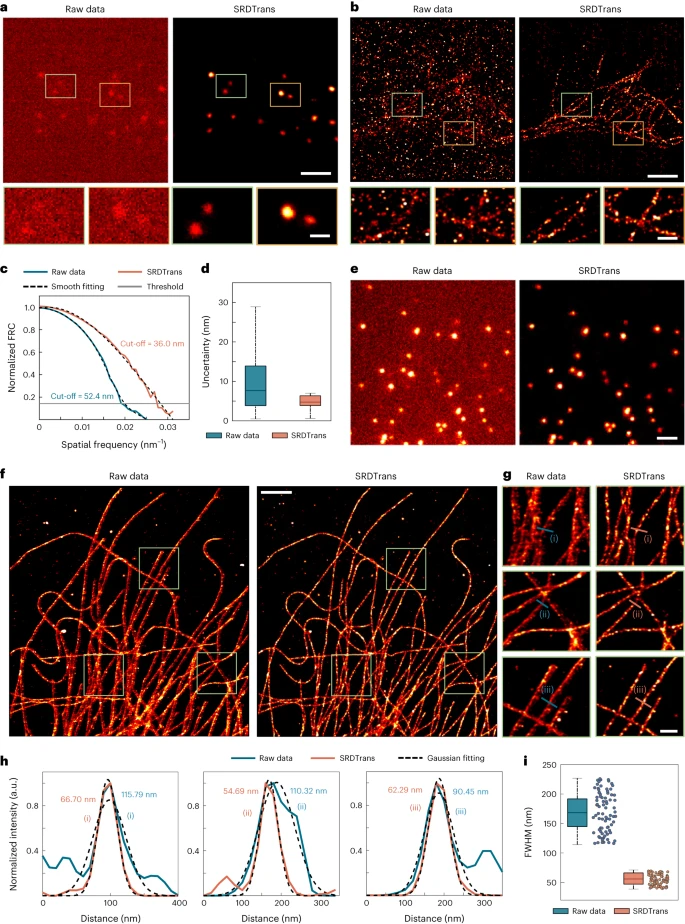

The team demonstrated the superior noise reduction performance of SRDTrans in two representative applications. The first is single-molecule localization microscopy (SMLM), where adjacent frames are random subsets of fluorophores.

Figure: Applying SRDTrans to experimental SMLM data. (Source: Paper)

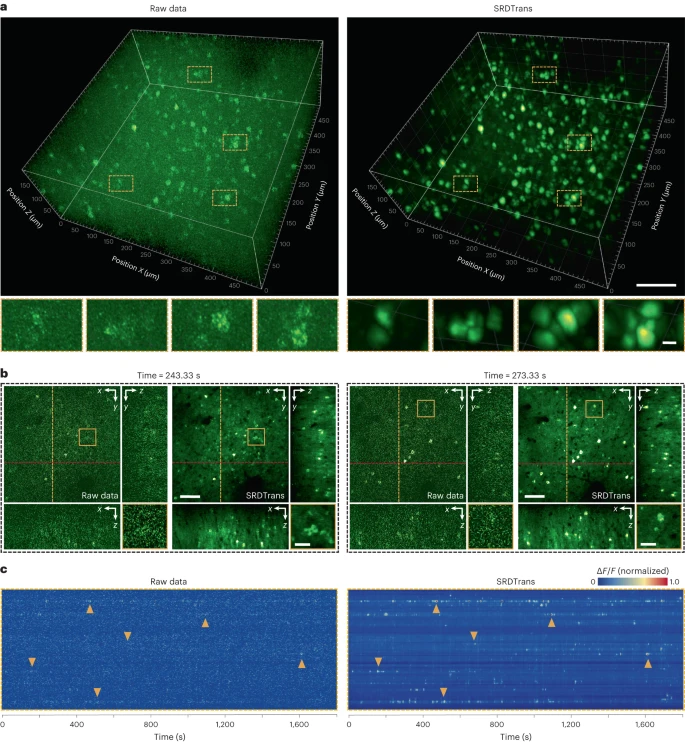

The other is two-photon calcium imaging of large 3D neuronal populations at volumetric velocities as low as 0.3Hz. Extensive qualitative and quantitative results demonstrate that SRDTrans can serve as an essential denoising tool for fluorescence imaging to observe a variety of cellular and subcellular phenomena.

Figure: High-sensitivity calcium imaging of large neural volumes. (Source: paper)

SRDTrans also has limitations. Chief among them is its basic assumption that adjacent pixels have similar structures: if the spatial sampling rate is too low to provide sufficient redundancy, SRDTrans will fail. Another potential risk is limited generalization, since SRDTrans's lightweight network architecture is better suited to specific tasks.

The researchers consider training a dedicated model for each type of data to be the most reliable way to use deep learning for fluorescence image denoising. New models should therefore be trained whenever imaging parameters, modalities, or samples change, to ensure optimal results.

As fluorescent indicators are developed with ever faster kinetics, the imaging speed needed to record biological dynamics at the millisecond scale keeps rising. Achieving sufficient sampling rates becomes increasingly difficult for denoising methods that rely on temporal redundancy. The team aims to fill this gap by exploiting spatial redundancy as an alternative, enabling self-supervised denoising in more imaging applications.

Ideally, spatial redundancy sampling would use a spatial sampling rate twice that of diffraction-limited Nyquist sampling, ensuring that two adjacent pixels carry nearly identical optical signals. In most cases, however, the intrinsic similarity between the two spatially downsampled subsequences is already sufficient to guide network training.
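To put the ideal case in numbers, one can take the Abbe limit as the lateral resolution criterion (other criteria, such as Rayleigh's, give slightly different values, and the paper's exact definition may differ). Nyquist sampling requires at least two pixels per resolvable distance d, and the ideal case described above halves the pixel size once more:

```latex
d = \frac{\lambda}{2\,\mathrm{NA}}, \qquad
\Delta x_{\text{Nyquist}} \le \frac{d}{2} = \frac{\lambda}{4\,\mathrm{NA}}, \qquad
\Delta x_{\text{ideal}} \le \frac{\Delta x_{\text{Nyquist}}}{2} = \frac{\lambda}{8\,\mathrm{NA}}
```

For an illustrative emission wavelength of λ = 520 nm and NA = 1.0, this gives d = 260 nm, a Nyquist pixel size of at most 130 nm, and an ideal pixel size for spatial redundancy sampling of at most 65 nm in the sample plane.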

This does not mean, however, that the proposed spatial redundancy sampling strategy can completely replace temporal redundancy sampling: ablation studies show that, with the same network architecture, temporal redundancy sampling performs better in high-speed imaging. The advantage of SRDTrans over DeepCAD at high imaging speeds actually stems from the Transformer architecture.

Generally speaking, spatial redundancy and temporal redundancy are two complementary sampling strategies for the self-supervised training of denoising networks for fluorescence time-lapse imaging. Which strategy to use depends on which type of redundancy is greater in the data. Notably, in many cases neither form of redundancy is sufficient for current sampling strategies, so developing specific or more general self-supervised denoising methods will be of lasting value for fluorescence imaging.
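One rough way to ask "which redundancy is greater" is to compare how strongly temporally adjacent samples (frame t vs. t+1) and spatially adjacent samples (pixel x vs. x+1) are correlated. The heuristic below is hypothetical and is not proposed in the paper: raw correlations mix signal and noise, and noise that is correlated between neighbouring pixels (as with some cameras) would inflate the spatial score. The two synthetic movies are made up purely to show the two regimes.

```python
import numpy as np

def _corr(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation between two equally shaped arrays (flattened)."""
    return float(np.corrcoef(a.ravel(), b.ravel())[0, 1])

def redundancy_scores(stack: np.ndarray) -> dict:
    """Hypothetical heuristic: correlation of temporally vs spatially adjacent samples in (T, H, W)."""
    temporal = _corr(stack[:-1], stack[1:])                 # frame t vs frame t+1
    horizontal = _corr(stack[:, :, :-1], stack[:, :, 1:])   # pixel x vs x+1
    vertical = _corr(stack[:, :-1, :], stack[:, 1:, :])     # pixel y vs y+1
    return {"temporal": temporal, "spatial": (horizontal + vertical) / 2}

rng = np.random.default_rng(0)
T, H, W = 50, 64, 64
# Slow sample: static, pixel-scale structure plus noise -> temporal redundancy dominates.
static = rng.normal(size=(H, W))
slow = static[None] + 0.3 * rng.normal(size=(T, H, W))
# Fast sample: every frame differs, but structures span 4x4 pixels -> spatial redundancy dominates.
coarse = rng.normal(size=(T, H // 4, W // 4))
fast = np.repeat(np.repeat(coarse, 4, axis=1), 4, axis=2) + 0.3 * rng.normal(size=(T, H, W))

scores_slow, scores_fast = redundancy_scores(slow), redundancy_scores(fast)
print("slow sample:", scores_slow)
print("fast sample:", scores_fast)
```

In the first movie the temporal score is high and the spatial score near zero, favouring temporal sampling (as in DeepCAD); in the second the pattern reverses, favouring spatial sampling (as in SRDTrans).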

Paper link: https://www.nature.com/articles/s43588-023-00568-2
