A few days ago, OpenAI shipped a major update, announcing five new models at once, including two new text embedding models.

Embeddings are sequences of numbers that represent concepts in natural language, code, and other content. They help machine learning models and other algorithms understand how pieces of content relate to one another, which makes tasks such as clustering and retrieval easier.
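To illustrate how embeddings support retrieval, here is a minimal sketch that ranks documents by cosine similarity to a query vector. The three-dimensional vectors are made up for illustration; a real model such as text-embedding-3-small returns far longer vectors, and the `retrieve` helper is hypothetical.

```python
import math

# Toy 3-dimensional "embeddings" (invented values, for illustration only).
DOCS = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "car": [0.1, 0.9, 0.2],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec, docs, k=2):
    """Return the k document keys whose embeddings are most similar to the query."""
    ranked = sorted(docs, key=lambda name: cosine_similarity(query_vec, docs[name]), reverse=True)
    return ranked[:k]


query = [0.88, 0.12, 0.02]  # pretend this is the embedding of "a small cat"
print(retrieve(query, DOCS))  # → ['cat', 'kitten']
```

Semantically close items ("cat", "kitten") end up with nearby vectors, so a simple nearest-neighbour search is enough to find them.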

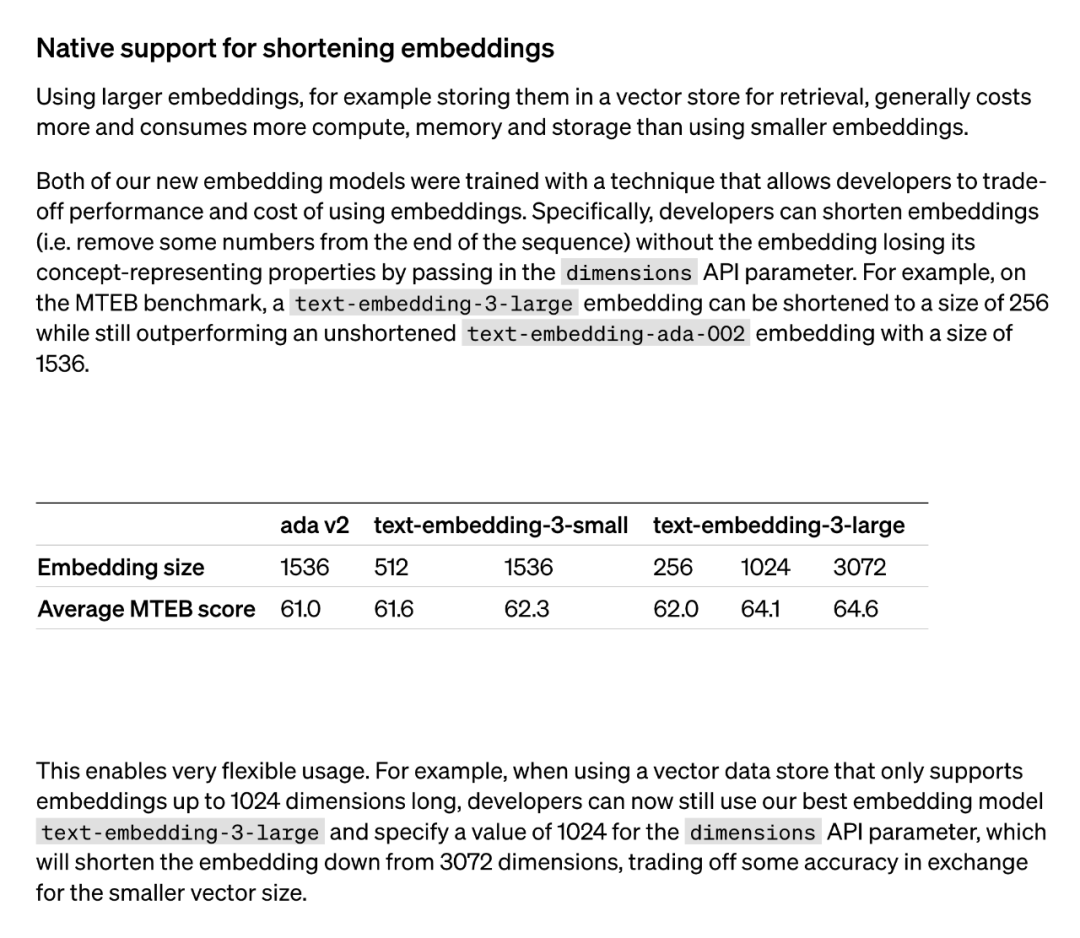

In general, larger embeddings (for example, embeddings kept in a vector store for retrieval) cost more and consume more compute, memory, and storage than smaller ones. OpenAI's two new text embedding models sit at different points on this trade-off. text-embedding-3-small is a smaller, efficient model that suits resource-limited environments and still performs well on text embedding tasks. text-embedding-3-large is larger and more capable: it handles more complex text embedding tasks and produces more accurate, detailed embeddings, but it needs more compute and storage. Developers can therefore choose a model according to their specific needs and resource constraints, balancing cost against performance.
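The storage side of this trade-off is simple arithmetic: raw size grows linearly with the number of dimensions. The sketch below assumes uncompressed 32-bit floats and ignores any index overhead a real vector store adds; the dimensionalities are the ones mentioned in this article.

```python
BYTES_PER_FLOAT32 = 4  # assumption: vectors stored as uncompressed 32-bit floats


def storage_bytes(num_vectors, dims, bytes_per_value=BYTES_PER_FLOAT32):
    """Raw storage needed for num_vectors embeddings of the given dimensionality."""
    return num_vectors * dims * bytes_per_value


# 3072: text-embedding-3-large; 1536: text-embedding-ada-002; 1024 and 256: shortened sizes.
for dims in (3072, 1536, 1024, 256):
    gb = storage_bytes(1_000_000, dims) / 1e9
    print(f"{dims:>5} dims x 1M vectors ~ {gb:.3f} GB")
```

For one million vectors this gives roughly 12.3 GB at 3072 dimensions versus about 1 GB at 256, and brute-force similarity search costs scale the same way.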

Both new embedding models were trained with a technique that lets developers trade off the performance and cost of using embeddings. Specifically, developers can shorten an embedding (that is, drop some numbers from the end of the sequence) without it losing its concept-representing properties, by passing the desired size in the `dimensions` API parameter. For example, on the MTEB benchmark, a text-embedding-3-large embedding shortened to size 256 still outperforms an unshortened text-embedding-ada-002 embedding of size 1536. This lets developers pick an embedding size that meets their performance requirements while keeping costs under control.
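With the official `openai` Python package (v1 or later), the shortening happens server-side via `client.embeddings.create(model="text-embedding-3-large", input=text, dimensions=256)`. The sketch below shows the underlying idea locally on an embedding you already have: keep the first `k` numbers, then rescale the prefix to unit length so cosine and dot-product scores stay comparable. The renormalization step and the `shorten_embedding` helper are our assumptions for illustration, not OpenAI's code.

```python
import math


def shorten_embedding(vec, k):
    """Keep the first k components of an embedding and rescale it to unit L2 norm.

    Sketch only: assumes `vec` is a Matryoshka-style embedding whose leading
    components carry the coarse information, so a prefix is still useful.
    """
    if not 0 < k <= len(vec):
        raise ValueError(f"k must be in 1..{len(vec)}, got {k}")
    prefix = vec[:k]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        return list(prefix)  # all-zero prefix: nothing to normalize
    return [x / norm for x in prefix]


full = [0.6, 0.0, 0.8, 0.0]  # pretend 4-dim unit-length embedding
print(shorten_embedding(full, 2))  # → [1.0, 0.0]
```

Truncation only makes sense for embeddings trained to be truncated; cutting a conventionally trained vector the same way usually degrades it much more.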

This makes the technique very flexible. For example, if a vector data store only supports embeddings of up to 1024 dimensions, a developer can still use the best embedding model, text-embedding-3-large, and shorten its embeddings from 3072 to 1024 dimensions by setting the `dimensions` API parameter to 1024. Some accuracy may be lost, but the resulting vectors are smaller.
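A minimal sketch of that scenario: a hypothetical helper builds the keyword arguments for an embeddings request so the output fits a vector store's dimension limit. The returned dict is meant to be passed as `client.embeddings.create(**kwargs)`; 3072 comes from the paragraph above, and 1536 is OpenAI's documented default size for text-embedding-3-small.

```python
# Default output sizes of the two new models.
NATIVE_DIMS = {
    "text-embedding-3-large": 3072,
    "text-embedding-3-small": 1536,
}


def embedding_request(model, text, store_max_dims):
    """Hypothetical helper: request kwargs whose output fits the store's dimension limit."""
    native = NATIVE_DIMS[model]
    kwargs = {"model": model, "input": text}
    if store_max_dims < native:
        # Ask the API for a shortened embedding instead of truncating locally.
        kwargs["dimensions"] = store_max_dims
    return kwargs


print(embedding_request("text-embedding-3-large", "hello", 1024))
# → {'model': 'text-embedding-3-large', 'input': 'hello', 'dimensions': 1024}
```

Omitting `dimensions` when the store can hold the full vector keeps the model's default, most accurate output.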

The "shortened embedding" method used by OpenAI subsequently attracted widespread attention from researchers.

People soon noticed that this method is the same as the "Matryoshka Representation Learning" (MRL) method proposed in a May 2022 paper.

"Hidden behind OpenAI's new embedding model update is a cool embedding representation technique proposed by @adityakusupati et al."

Aditya Kusupati, one of the authors of MRL, also said: "OpenAI uses MRL by default in the v3 embedding API for retrieval and RAG! Other models and services should catch up soon."

So what exactly is MRL, and how well does it work? The answers are in the 2022 paper below.

The question the researchers pose is: can we design a flexible representation that adapts to multiple downstream tasks with different computational resources?

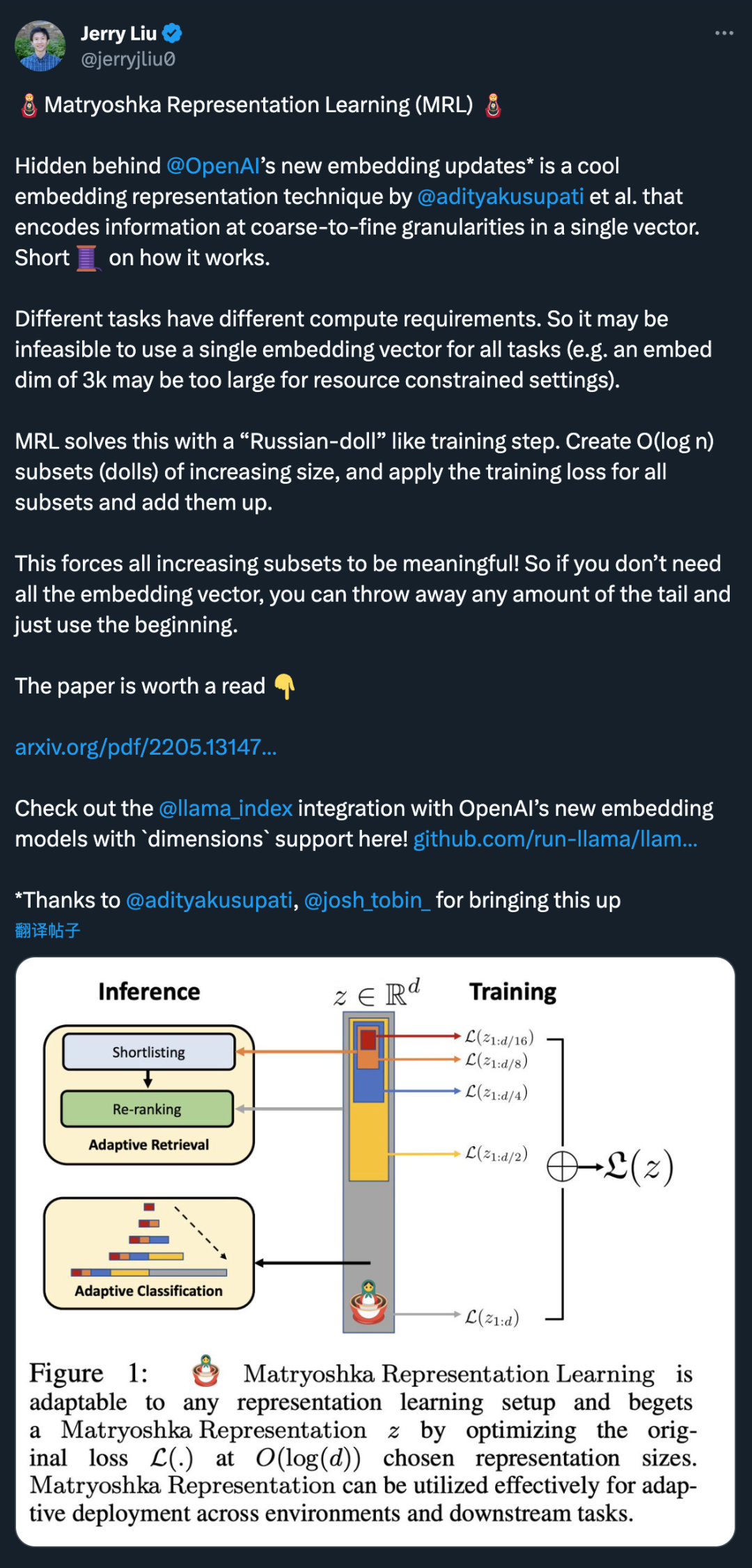

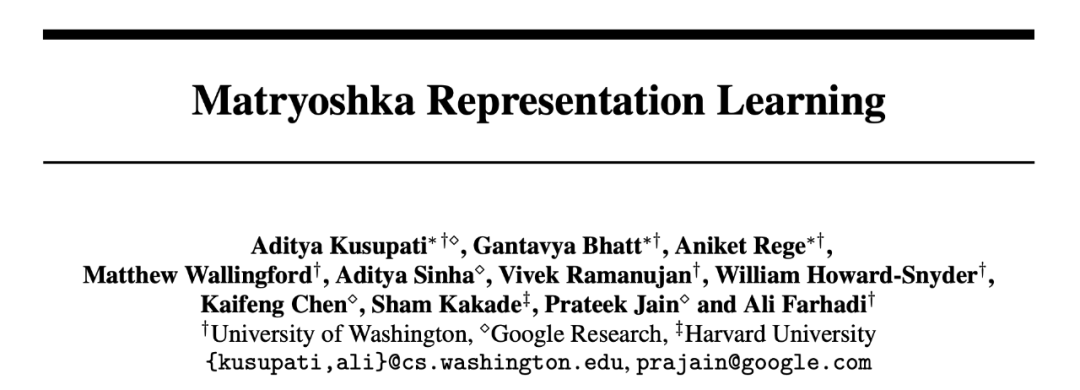

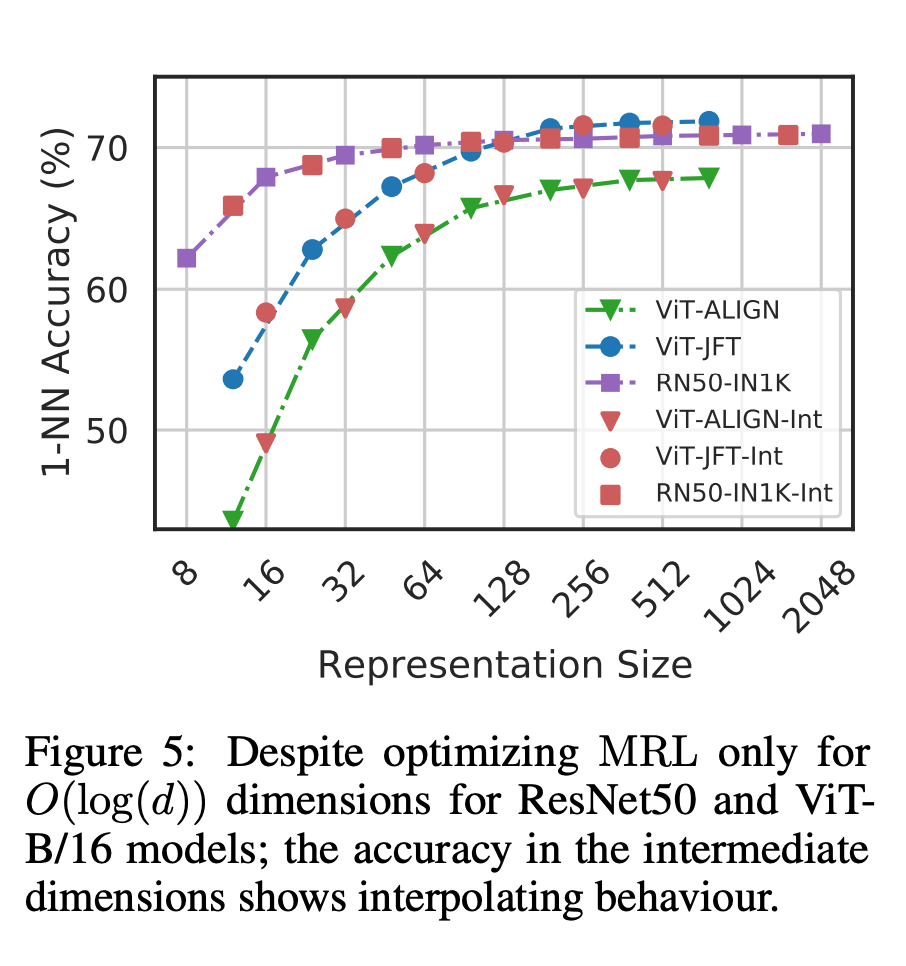

MRL learns representations of different capacities within the same high-dimensional vector by explicitly optimizing $O(\log d)$ lower-dimensional vectors in a nested fashion, hence the name Matryoshka, after the Russian nesting dolls. MRL can be adapted to any existing representation pipeline and easily extended to many standard tasks in computer vision and natural language processing.
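Written out, the training objective (our reading of the MRL paper; the notation here is approximate) attaches a separate linear classifier $W^{(m)}$ to the first $m$ coordinates of the $d$-dimensional representation $F(x;\theta_F)$ for every size $m$ in a nested set $\mathcal{M}$, for example $\{8, 16, \dots, d\}$, which has $O(\log d)$ elements when sizes double. The per-prefix losses are summed with non-negative importance weights $c_m$, where $\mathcal{L}$ is the softmax cross-entropy loss over $N$ labeled examples $(x_i, y_i)$:

$$
\min_{\{W^{(m)}\}_{m \in \mathcal{M}},\; \theta_F} \; \frac{1}{N} \sum_{i=1}^{N} \sum_{m \in \mathcal{M}} c_m \, \mathcal{L}\!\left( W^{(m)} \, F(x_i; \theta_F)_{1:m} \,;\, y_i \right)
$$

Because every prefix $F(x_i;\theta_F)_{1:m}$ is trained to be useful on its own, the full vector contains all of the smaller representations at once.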

Figure 1 shows the core idea of MRL and the adaptive deployment setup of the learned Matryoshka representation:

The first $m$ dimensions ($m \in [d]$) of a Matryoshka representation form an information-rich low-dimensional vector that comes at no additional training cost and is as accurate as an independently trained $m$-dimensional representation. The information content of a Matryoshka representation increases with dimensionality, forming a coarse-to-fine representation without extensive training or additional deployment overhead. MRL gives representation vectors the flexibility and multi-fidelity they need, ensuring a near-optimal trade-off between accuracy and compute. With these advantages, MRL can be deployed adaptively according to accuracy and compute constraints.
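To make the nested training concrete, here is a minimal forward-pass sketch in plain Python. It only computes the loss (no autodiff, so nothing is trained; a real implementation would use a framework such as PyTorch or JAX). It assumes one separate linear classifier per prefix size, prefix sizes that double from 8 up to the full dimensionality, and uniform weights by default; these choices follow our reading of the MRL paper, and all helper names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label):
    """Numerically stable -log softmax(logits)[label]."""
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(v - top) for v in logits))
    return log_sum - logits[label]


def nesting_dims(d, smallest=8):
    """Prefix sizes smallest, 2*smallest, ..., up to d (assumes d = smallest * 2**k)."""
    dims, m = [], smallest
    while m <= d:
        dims.append(m)
        m *= 2
    return dims


def matryoshka_loss(z, label, classifiers, weights=None):
    """Weighted sum of cross-entropy losses, one per nested prefix z[:m].

    z: full d-dim representation (list of floats).
    classifiers: dict m -> weight matrix (one row of length m per class).
    weights: optional dict m -> c_m; defaults to 1.0 for every m.
    """
    total = 0.0
    for m, W in classifiers.items():
        prefix = z[:m]  # coarse view: only the first m coordinates
        logits = [sum(w * x for w, x in zip(row, prefix)) for row in W]
        c_m = 1.0 if weights is None else weights[m]
        total += c_m * softmax_cross_entropy(logits, label)
    return total


z = [0.5] * 16
zero_heads = {m: [[0.0] * m, [0.0] * m] for m in nesting_dims(16)}  # 2 classes
print(nesting_dims(16))                              # → [8, 16]
print(round(matryoshka_loss(z, 0, zero_heads), 4))   # → 1.3863 (= 2 * ln 2)
```

With all-zero classifiers every prefix predicts a uniform distribution over two classes, so each of the two nested losses is $\ln 2$.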

The paper focuses on two key building blocks of real-world ML systems: large-scale classification and retrieval.

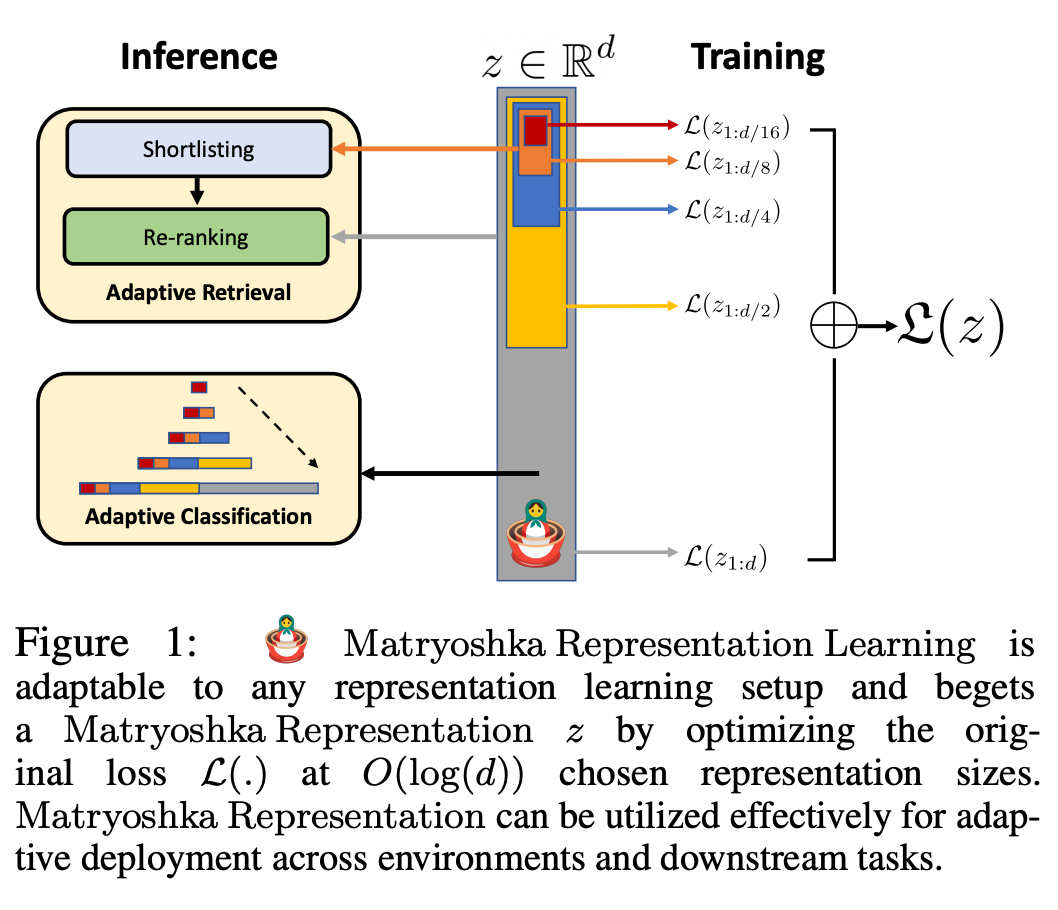

For classification, the researchers used adaptive cascades over the variable-size representations produced by an MRL-trained model, which greatly reduced the average embedding dimensionality needed to reach a given accuracy. For example, on ImageNet-1K, MRL-based adaptive classification reduces representation size by up to 14x at the same accuracy as the baseline.
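A minimal sketch of such a cascade, assuming one classifier per nested size and a per-size confidence threshold that would in practice be tuned on held-out data: start with the smallest prefix and stop as soon as the top softmax probability clears that size's threshold, falling back to the largest size otherwise. The thresholds, toy weights, and `cascade_classify` helper are hypothetical, not the paper's code.

```python
import math


def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cascade_classify(z, heads, thresholds):
    """Classify with the smallest prefix that is confident enough.

    heads: list of (m, W) pairs sorted by increasing m; W has one row per class.
    thresholds: dict m -> minimum top probability needed to stop at size m.
    Returns (predicted_class, dims_used).
    """
    if not heads:
        raise ValueError("need at least one classifier head")
    for i, (m, W) in enumerate(heads):
        prefix = z[:m]
        probs = softmax([sum(w * x for w, x in zip(row, prefix)) for row in W])
        best = max(range(len(probs)), key=probs.__getitem__)
        if i == len(heads) - 1 or probs[best] >= thresholds[m]:
            return best, m


z = [1.0, 0.0, 0.0, 0.0]
heads = [
    (2, [[2.0, 0.0], [0.0, 0.0]]),                      # 2-dim head: p(class 0) ~ 0.88
    (4, [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]),  # 4-dim head
]
print(cascade_classify(z, heads, {2: 0.8, 4: 0.9}))   # → (0, 2)
print(cascade_classify(z, heads, {2: 0.95, 4: 0.9}))  # → (0, 4)
```

Easy inputs exit early with few dimensions, so the average dimensionality drops while hard inputs still get the full representation.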

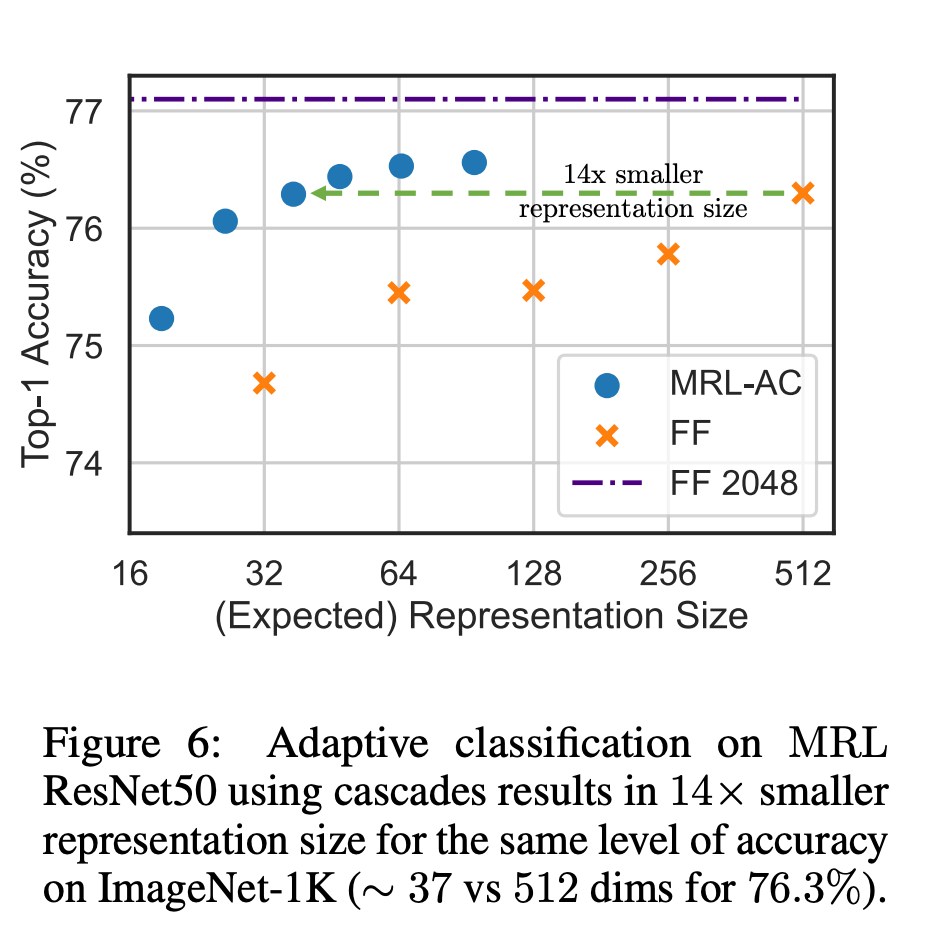

Similarly, the researchers applied MRL to adaptive retrieval systems. Given a query, the first few dimensions of the query embedding are used to shortlist retrieval candidates, and successively more dimensions are then used to re-rank the retrieved set. A simple implementation of this approach achieves a 128x theoretical speedup (in FLOPs) and a 14x wall-clock speedup compared to a single-shot retrieval system that uses a standard embedding vector; importantly, MRL's retrieval accuracy remains comparable to that of single-shot retrieval (Section 4.3.1).
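The shortlist-then-re-rank idea can be sketched as a brute-force "funnel" over Matryoshka embeddings. This is a toy illustration, assuming dot-product scoring and a hypothetical list of `(dims, keep)` stages; a real system would use an approximate nearest-neighbour index for the coarse stage rather than scoring every item.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def funnel_retrieve(query, database, stages, k):
    """Multi-stage retrieval: each stage re-scores the surviving candidates.

    stages: list of (dims, keep) with dims increasing and keep decreasing.
    Each stage scores candidates using only the first `dims` coordinates
    and keeps the best `keep` of them. Returns the indices of the top-k items.
    """
    candidates = list(range(len(database)))
    for dims, keep in stages:
        q = query[:dims]
        candidates.sort(key=lambda i: dot(q, database[i][:dims]), reverse=True)
        candidates = candidates[:keep]
    return candidates[:k]


database = [
    [1.0, 0.0, 0.0, 0.0],
    [0.9, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.8, 0.0, 0.0, 1.0],
]
query = [1.0, 0.0, 1.0, 0.0]
# Coarse pass on 1 dim keeps 3 candidates; fine pass on all 4 dims keeps 2.
print(funnel_retrieve(query, database, [(1, 3), (4, 2)], k=2))  # → [1, 0]
```

The cheap first stage touches only a few coordinates per item; the expensive full-dimensional comparison is paid only for the small shortlist, which is where the speedup comes from.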

Finally, because MRL explicitly learns coarse-to-fine representation vectors, it should intuitively share more semantic information across its different dimensions (Figure 5). This shows up in long-tail continual learning settings, where MRL improves accuracy by up to 2% while remaining as robust as the original embeddings. In addition, thanks to its coarse-to-fine nature, MRL can also be used to analyze how hard individual instances are to classify and to study information bottlenecks.

For more research details, please refer to the original paper.
