Deep learning is a branch of machine learning loosely inspired by the way the brain processes information. It solves problems by building artificial neural network models with many layers, which learn useful representations directly from data instead of relying on hand-crafted features; the training itself can be supervised, unsupervised, or self-supervised. This approach allows machines to automatically extract complex patterns and features. Given large amounts of data, deep learning models can make highly accurate predictions and decisions, which has led to major successes in areas such as computer vision, natural language processing, and speech recognition.

To understand how a neural network works, consider how impulses travel through biological neurons. A neuron receives signals at its dendrites. Each incoming signal is scaled by the strength of its synapse (modeled as multiplication by a weight w), the signals are combined in the cell body, and the result is transmitted along the axon to other nerve cells. In the artificial model, the output that one neuron sends along its axon (an x) becomes an input to the next neuron, which is how information passes from neuron to neuron.
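A single artificial neuron can be sketched in a few lines of Python. This is a minimal illustration that assumes a sigmoid activation and a bias term b (neither is named in the paragraph above); the function names and the sample weights are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x: list[float], w: list[float], b: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through an activation.

    x: input signals (what arrives at the "dendrites")
    w: one weight per input (the "synapse strengths")
    b: bias term (assumed here; it shifts the firing threshold)
    """
    if len(x) != len(w):
        raise ValueError("need exactly one weight per input")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # combined in the "cell body"
    return sigmoid(z)  # output sent along the "axon"


# Example with three inputs and hypothetical weights: z = 0.4 - 0.1 - 0.1 = 0.2
out = neuron([1.0, 0.5, -1.0], [0.4, -0.2, 0.1], b=0.0)
print(round(out, 4))  # → 0.5498
```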

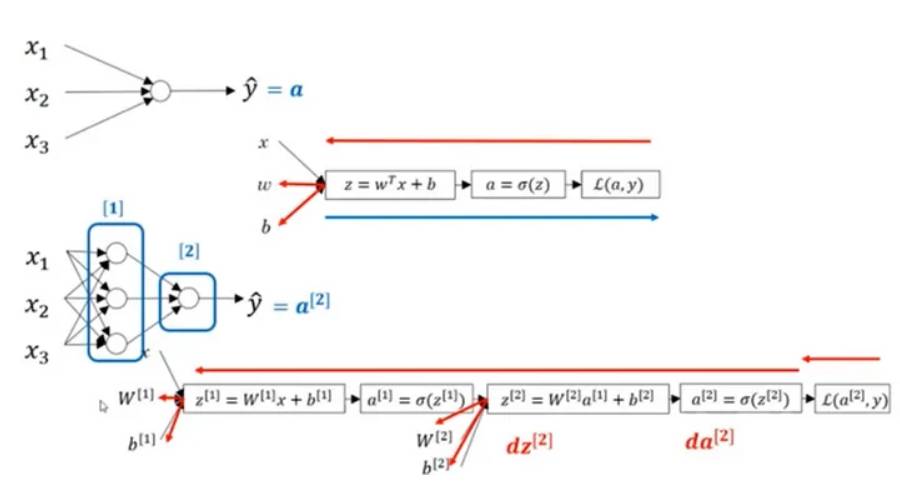

To model and train such a network on a computer, we need to express its operation as an algorithm that takes the inputs and computes the output.

Here we express it through mathematics, as follows:
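For a 2-layer network with a hidden layer of 4 neurons and a single output neuron, one common way to write the forward computation for a single input example x with n_x features is shown below. It assumes sigmoid activations \(\sigma(z) = 1/(1 + e^{-z})\) in both layers; the layer superscripts [1] and [2], the capitalized weight matrices W, and n_x are notation chosen for this sketch rather than taken from the figure.

$$
\begin{aligned}
z^{[1]} &= W^{[1]} x + b^{[1]}, & W^{[1]} &\in \mathbb{R}^{4 \times n_x},\; b^{[1]} \in \mathbb{R}^{4} \\
a^{[1]} &= \sigma\left(z^{[1]}\right) \\
z^{[2]} &= W^{[2]} a^{[1]} + b^{[2]}, & W^{[2]} &\in \mathbb{R}^{1 \times 4},\; b^{[2]} \in \mathbb{R} \\
\hat{y} = a^{[2]} &= \sigma\left(z^{[2]}\right)
\end{aligned}
$$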

The figure above shows a 2-layer neural network with a hidden layer of 4 neurons and an output layer with a single neuron. The input layer only passes the input values along and performs no computation, so it is conventionally not counted; this is why the network is called 2-layer. Each layer has its own parameters w (weights) and b (biases), and their shapes are determined by the number of neurons in that layer and the number of inputs it receives. Specifically, the input to the hidden layer is x, and the input to the output layer is the hidden layer's output a.
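The same forward pass can be sketched in plain Python, which also makes the shapes of w and b explicit: each weight matrix has one row per neuron in the layer and one column per input to that layer. This is a minimal sketch assuming sigmoid activations and small random initial weights; `init_params`, `dense` and `forward` are hypothetical helper names.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(x, W, b, activation):
    """One fully connected layer: activation(W x + b).

    W has one row per neuron and one column per input,
    so len(W) == len(b) and every row has len(x) entries.
    """
    assert len(W) == len(b) and all(len(row) == len(x) for row in W)
    return [activation(sum(w * xi for w, xi in zip(row, x)) + bj)
            for row, bj in zip(W, b)]


def init_params(n_x, n_h=4, n_y=1, seed=0):
    """Small random weights and zero biases (an assumed initialization scheme)."""
    rng = random.Random(seed)
    W1 = [[rng.gauss(0.0, 0.1) for _ in range(n_x)] for _ in range(n_h)]  # 4 x n_x
    b1 = [0.0] * n_h
    W2 = [[rng.gauss(0.0, 0.1) for _ in range(n_h)] for _ in range(n_y)]  # 1 x 4
    b2 = [0.0] * n_y
    return W1, b1, W2, b2


def forward(x, params):
    W1, b1, W2, b2 = params
    a1 = dense(x, W1, b1, sigmoid)   # hidden layer: its input is x
    a2 = dense(a1, W2, b2, sigmoid)  # output layer: its input is a1
    return a1, a2


params = init_params(n_x=3)
a1, y_hat = forward([1.0, 0.5, -1.0], params)
print(len(a1), len(y_hat))  # 4 1
```

Changing the number of input values only changes the number of columns in the first weight matrix; the rest of the network is unaffected.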

Sigmoid is not the only possible activation function: the hyperbolic tangent (tanh), ReLU, Leaky ReLU and other functions can be used in a layer in its place. The requirement is that the function be differentiable (at least almost everywhere), because backpropagation updates the weights using derivatives of the activation.
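To make the role of the derivatives concrete, here is a minimal sketch of backpropagation for a network of the same shape (4 hidden neurons, 1 output neuron), trained with plain stochastic gradient descent on the XOR problem. Several choices are assumptions for illustration only: a sigmoid output with binary cross-entropy loss, a tanh hidden layer that can be swapped for sigmoid or ReLU, a learning rate of 0.5, and 2000 epochs. Each activation is stored together with its derivative, written in terms of the activation's output, since that is the value backpropagation already has from the forward pass.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hidden-layer activations paired with their derivatives, expressed via a = f(z).
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda a: a * (1.0 - a)),
    "tanh": (math.tanh, lambda a: 1.0 - a * a),
    "relu": (lambda z: max(0.0, z), lambda a: 1.0 if a > 0 else 0.0),
}


def init(n_x, n_h, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_x)] for _ in range(n_h)]
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(n_h)]  # single output neuron
    return {"W1": W1, "b1": [0.0] * n_h, "w2": w2, "b2": 0.0}


def forward(p, x, act):
    a1 = [act(sum(w * xi for w, xi in zip(row, x)) + b)
          for row, b in zip(p["W1"], p["b1"])]
    a2 = sigmoid(sum(w * a for w, a in zip(p["w2"], a1)) + p["b2"])
    return a1, a2


def loss(p, data, act):
    """Mean binary cross-entropy over the dataset."""
    eps = 1e-12  # avoids log(0) when the sigmoid saturates
    total = 0.0
    for x, y in data:
        _, a2 = forward(p, x, act)
        total -= y * math.log(a2 + eps) + (1 - y) * math.log(1 - a2 + eps)
    return total / len(data)


def sgd_epoch(p, data, act, dact, lr):
    for x, y in data:
        a1, a2 = forward(p, x, act)
        dz2 = a2 - y  # dL/dz2 for a sigmoid output with cross-entropy loss
        # Chain rule back through w2 (before it is updated) and the hidden activation.
        dz1 = [dz2 * w * dact(a) for w, a in zip(p["w2"], a1)]
        for j, a in enumerate(a1):
            p["w2"][j] -= lr * dz2 * a
            for i, xi in enumerate(x):
                p["W1"][j][i] -= lr * dz1[j] * xi
            p["b1"][j] -= lr * dz1[j]
        p["b2"] -= lr * dz2


XOR = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 0)]
act, dact = ACTIVATIONS["tanh"]  # swap in "sigmoid" or "relu" to compare
params = init(n_x=2, n_h=4)
before = loss(params, XOR, act)
for _ in range(2000):
    sgd_epoch(params, XOR, act, dact, lr=0.5)
after = loss(params, XOR, act)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

The printed losses depend on the random initialization, so no exact values are given here; with some seeds, XOR training can stall in a poor local minimum.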

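The ReLU activation function is widely used in deep learning. For negative inputs its output is constantly 0, so its derivative there is 0 (strictly speaking, ReLU is non-differentiable only at 0 itself). A neuron whose input stays negative therefore receives no gradient and stops learning during training, a problem often called "dying ReLU". The Leaky ReLU activation function addresses this by giving negative inputs a small non-zero slope, so the gradient is never exactly zero and the neuron can keep learning. This makes Leaky ReLU more effective than ReLU in some scenarios.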
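The difference in gradients can be seen directly. In this sketch the Leaky ReLU slope `alpha = 0.01` is an assumed value (a common default, not one given in the text), and `weight_update` is a hypothetical helper that performs one gradient step for a single weight w feeding a neuron with input x.

```python
def relu(z):
    return max(0.0, z)


def relu_grad(z):
    # Zero for every negative input (and, by the convention used here, at z == 0).
    return 1.0 if z > 0 else 0.0


def leaky_relu(z, alpha=0.01):
    return z if z > 0 else alpha * z


def leaky_relu_grad(z, alpha=0.01):
    # A small non-zero slope for negative inputs keeps the gradient flowing.
    return 1.0 if z > 0 else alpha


def weight_update(w, x, upstream_grad, grad_fn, lr=0.1):
    """One gradient-descent step for w, where the neuron computes f(w * x)."""
    z = w * x
    return w - lr * upstream_grad * grad_fn(z) * x


w, x = -1.0, 2.0  # pre-activation z = -2.0, in the negative region
print(weight_update(w, x, 1.0, relu_grad))        # -1.0: the weight does not move
print(weight_update(w, x, 1.0, leaky_relu_grad))  # about -1.002: it still learns
```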
