When will GPT-5 arrive and what capabilities will it have?

A new model from the Allen Institute for AI tells you the answer.

Unified-IO 2, launched by the Allen Institute for Artificial Intelligence, is the first model that can process and generate text, images, audio, video and action sequences.

This advanced AI model is trained using billions of data points. The model size is only 7B, but it exhibits the broadest multi-modal capabilities to date.

Paper address: https://arxiv.org/pdf/2312.17172.pdf

So what is the relationship between Unified-IO 2 and GPT-5?

In June 2022, the Allen Institute for Artificial Intelligence launched the first generation of Unified-IO, a multi-modal model capable of processing images and language simultaneously.

Around the same time, OpenAI was testing GPT-4 internally; it officially released the model in March 2023.

So, Unified-IO can be seen as a preview of future large-scale AI models.

By the same logic, OpenAI may now be testing GPT-5 internally and could release it within a few months.

The capabilities that Unified-IO 2 demonstrates are therefore also what we can look forward to in the new year:

## New AI models like GPT-5 can handle more modalities, perform many tasks natively through extensive learning, and have a fundamental understanding of interacting with objects and robots.

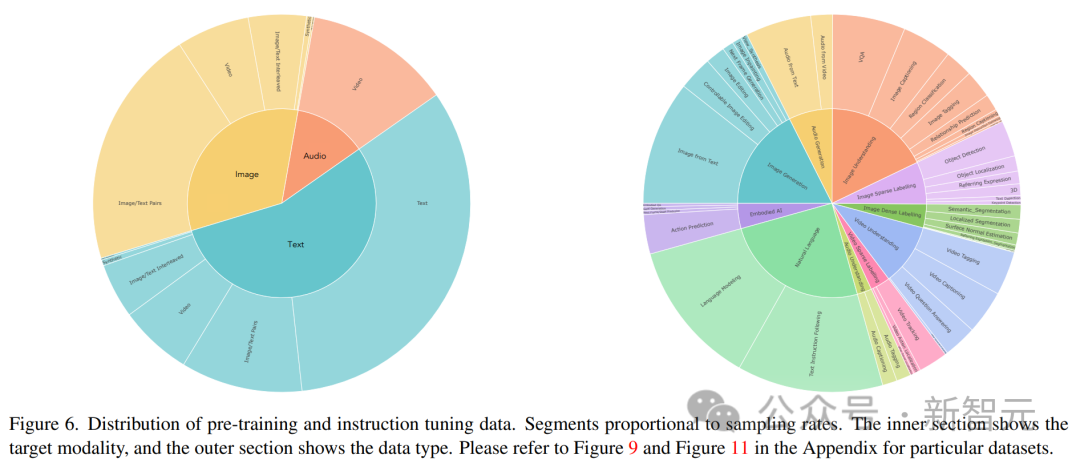

Unified-IO 2’s training data includes 1 billion image-text pairs, 1 trillion text tokens, 180 million video clips, 130 million interleaved image-and-text samples, 3 million 3D assets, and 1 million robot agent motion sequences.

The research team combined more than 120 datasets into a 600 TB package covering 220 vision, language, audio and action tasks.

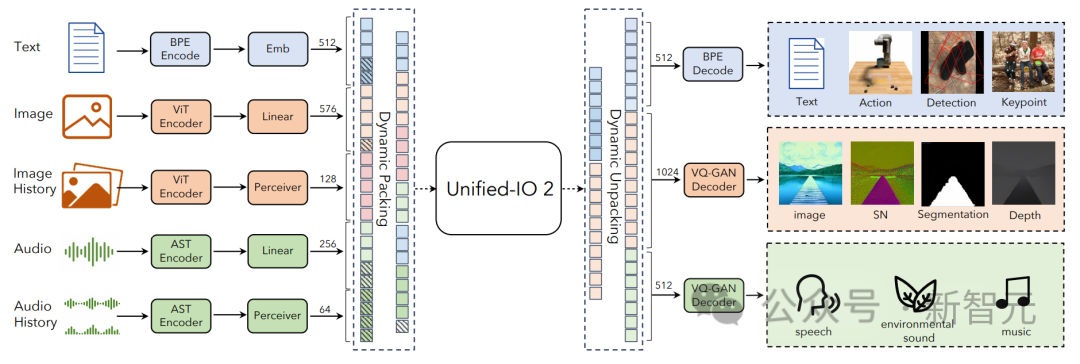

Unified-IO 2 adopts an encoder-decoder architecture with some changes to stabilize training and effectively utilize multi-modal signals.

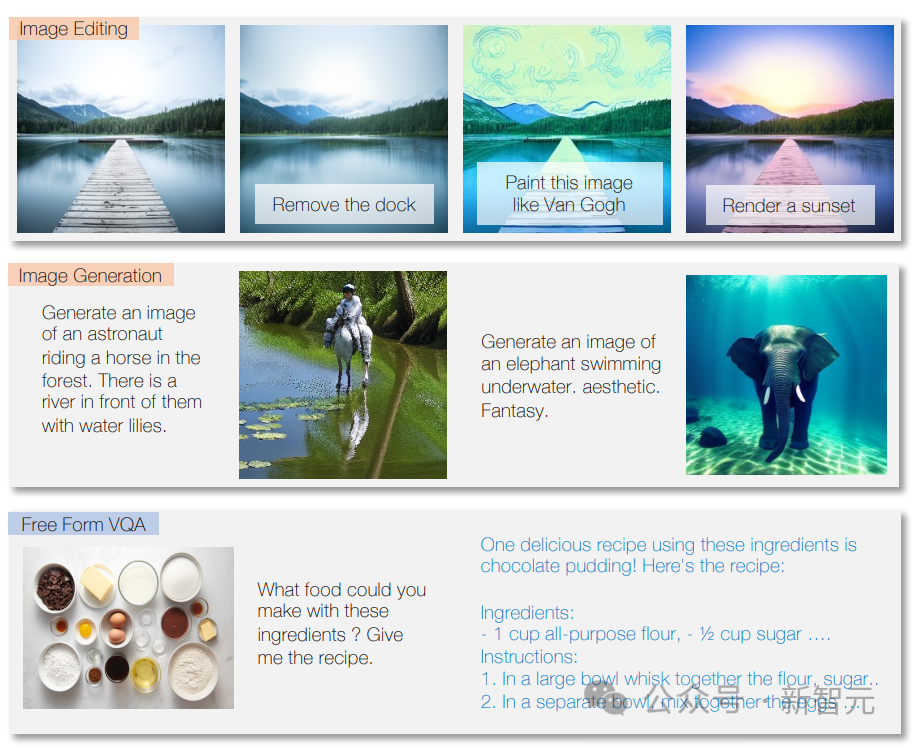

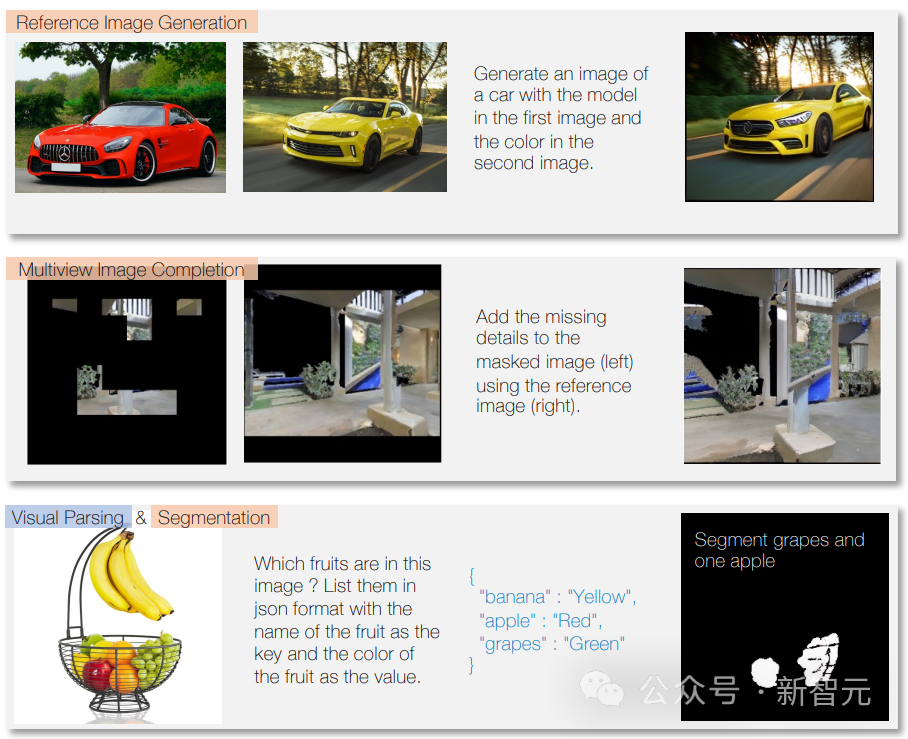

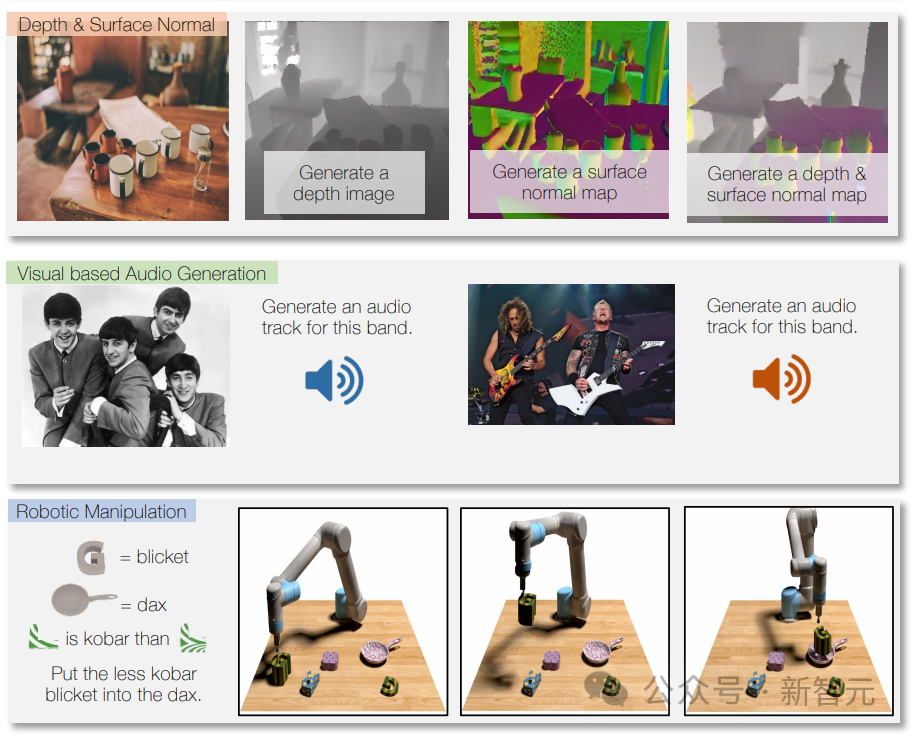

The model can answer questions, compose text according to instructions, and analyze text content.

The model can also identify image content, provide image descriptions, perform image processing tasks, and create new images based on text descriptions.

It can also generate music or sounds based on descriptions or instructions, as well as analyze videos and answer questions about them.

By using robot data for training, Unified-IO 2 can also generate actions for the robot system, such as converting instructions into sequences of actions for the robot.

Thanks to multi-modal training, it can also work across modalities, for example marking in an image the instruments that are heard in an audio track.

Unified-IO 2 performs well on more than 35 benchmarks, including image generation and understanding, natural language understanding, video and audio understanding, and robotic manipulation.

In most tasks, it is as good as or better than dedicated models.

Unified-IO 2 achieved the highest score so far on the GRIT benchmark for image tasks (GRIT is used to test how a model handles image noise and other issues).

The researchers now plan to further extend Unified-IO 2, improve data quality, and convert the encoder-decoder model to the industry-standard decoder-only architecture.

Unified-IO 2 is the first autoregressive multimodal model that can understand and generate images, text, audio and actions.

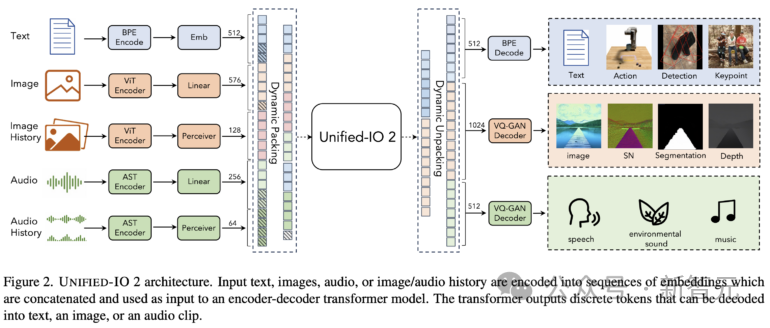

To unify different modalities, the researchers tokenize inputs and outputs (images, text, audio, actions, bounding boxes, etc.) into a shared semantic space and then process them with a single encoder-decoder transformer model.

Because the model is trained on a large amount of data drawn from many different modalities, the researchers adopted a series of techniques to improve the training process.

To effectively facilitate self-supervised learning of signals across multiple modalities, the researchers developed a novel multimodal mixture-of-denoisers objective that incorporates cross-modal denoising and generation.

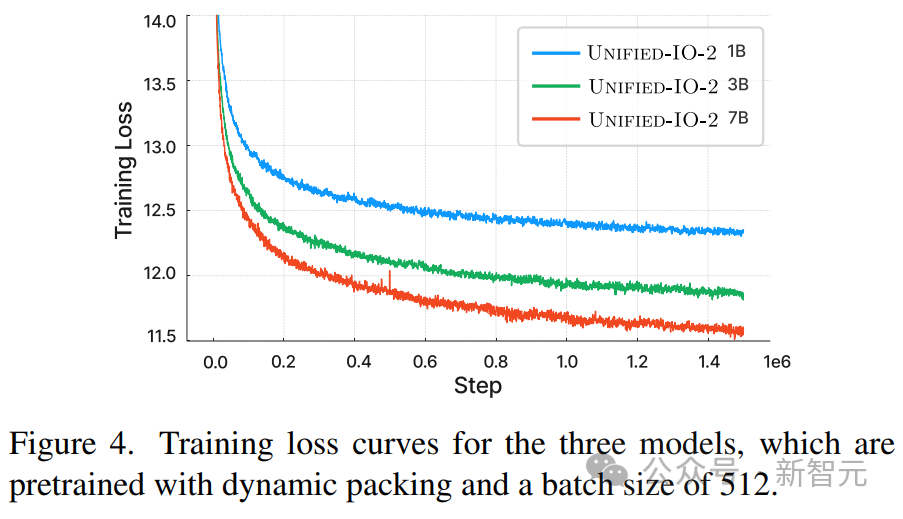

Dynamic packing was also developed to handle highly variable sequence lengths, increasing training throughput by 4x.

To overcome stability and scalability issues in training, the researchers made architectural changes, including 2D rotary embeddings, QK normalization, and a scaled cosine attention mechanism in the perceiver resampler.

For instruction tuning, the researchers made sure every task has a clear prompt, whether the task is an existing one or newly created. Open-ended tasks are also included, and synthetic tasks are created for less common modalities to increase the variety of tasks and instructions.

Multimodal data is encoded into a sequence of tokens in a shared representation space, as follows:

Text inputs and outputs are tokenized using the byte pair encoding from LLaMA. Sparse structures such as bounding boxes, keypoints and camera poses are discretized and then encoded using 1000 special tokens added to the vocabulary.

Points are encoded with two tokens (x, y), boxes are encoded with a sequence of four tokens (top left and bottom right), and 3D cuboids are represented with 12 tokens (encoding the projected center, virtual depth, log-normalized box size, and continuous allocentric rotation).
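As a rough illustration of the discretization described above, here is a minimal sketch of turning coordinates into location tokens. The token name `<loc_i>`, the normalization by image size, and the helper names are assumptions made for this example, not the authors' actual implementation.

```python
NUM_BINS = 1000  # the article states that 1000 special tokens are added


def coord_to_token(value: float, size: float) -> str:
    """Quantize one coordinate in [0, size] into one of NUM_BINS bins."""
    frac = min(max(value / size, 0.0), 1.0)          # normalize, clamp to [0, 1]
    index = min(int(frac * NUM_BINS), NUM_BINS - 1)  # keep 1.0 inside last bin
    return f"<loc_{index}>"                          # hypothetical token name


def encode_point(x, y, width, height):
    """A point becomes two tokens (x, y)."""
    return [coord_to_token(x, width), coord_to_token(y, height)]


def encode_box(x0, y0, x1, y1, width, height):
    """A box becomes four tokens: top-left corner, then bottom-right corner."""
    return encode_point(x0, y0, width, height) + encode_point(x1, y1, width, height)


print(encode_box(32, 64, 128, 256, width=256, height=256))
# ['<loc_125>', '<loc_250>', '<loc_500>', '<loc_999>']
```

Sharing one set of location tokens across points, boxes and other sparse structures keeps the vocabulary small while still letting the decoder emit coordinates as ordinary tokens.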

For embodied tasks, discrete robot actions are generated as textual commands (e.g., "move forward"). Special tokens are used to encode the state of the robot (such as position and rotation).

Images are encoded using a pre-trained Vision Transformer (ViT). The patch features of the second and penultimate layers of the ViT are concatenated to capture both low-level and high-level visual information.

When generating an image, a VQ-GAN converts the image into discrete tokens. Here, a dense pre-trained VQ-GAN model with a patch size of 8 × 8 encodes a 256 × 256 image into 1024 tokens, with a codebook size of 16512.

Per-pixel labels (including depth, surface normals and binary segmentation masks) are then represented as RGB images.

U-IO 2 encodes up to 4.08 seconds of audio into a spectrogram and then uses a pre-trained Audio Spectrogram Transformer (AST) to encode the spectrogram. The input embedding is built by concatenating the second and penultimate layer features of the AST and applying a linear layer, just as with the image ViT.

When generating audio, a ViT-VQGAN converts the audio into discrete tokens. The patch size of the model is 8 × 8, and a 256 × 128 spectrogram is encoded into 512 tokens. The codebook size is 8196.

The model allows up to four additional images and audio clips to be provided as input. These elements are also encoded using the ViT or AST, followed by a perceiver resampler that further compresses the features into a smaller number of tokens (32 for images and 16 for audio).
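The token counts quoted for the image and audio tokenizers follow directly from the patch grid. The short check below reproduces them; the function name is only illustrative.

```python
def num_tokens(height: int, width: int, patch: int) -> int:
    """Number of discrete tokens when an input is split into patch x patch cells."""
    if height % patch or width % patch:
        raise ValueError("input size must be a multiple of the patch size")
    return (height // patch) * (width // patch)


image_tokens = num_tokens(256, 256, patch=8)  # 32 x 32 grid
audio_tokens = num_tokens(256, 128, patch=8)  # 32 x 16 grid
print(image_tokens, audio_tokens)  # 1024 512
```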

This significantly reduces sequence length and allows the model to examine images or audio clips in high detail while using elements from the history as context.

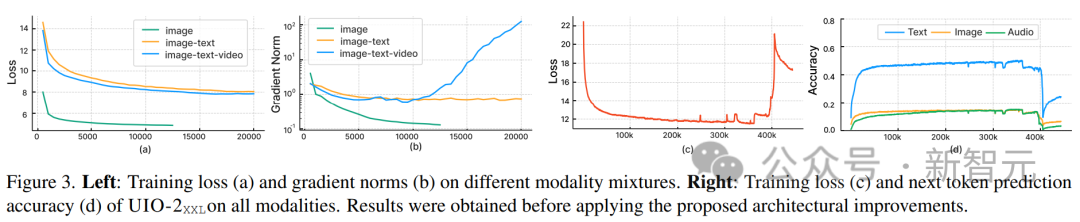

The researchers observed that, as additional modalities are integrated, the standard implementation following U-IO leads to increasingly unstable training.

As shown in (a) and (b) below, training only on image generation (green curve) results in stable loss and gradient norm convergence.

Introducing the combination of image and text tasks (orange curve) slightly increases the gradient norm compared to a single modality, but training remains stable. However, including the video modality (blue curve) results in unbounded growth of the gradient norm.

As shown in (c) and (d) in the figure, when the XXL version of the model is trained on all modalities, the loss explodes after 350k steps, and next-token prediction accuracy drops significantly at 400k steps.

To solve this problem, the researchers made various architectural changes:

Rotary position embeddings (RoPE) are applied at each Transformer layer. For non-text modalities, RoPE is extended to 2D positions. When image and audio modalities are included, LayerNorm is applied to Q and K before the dot-product attention computation.
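To see why normalizing Q and K helps, consider the minimal single-head sketch below. It is written in plain Python for readability, omits the learnable gain and bias that a real LayerNorm has, and uses made-up input values; it is not the model's actual attention code.

```python
import math


def layer_norm(vec, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys, qk_norm=True):
    """Attention weights of one query over several keys."""
    if qk_norm:
        query = layer_norm(query)
        keys = [layer_norm(k) for k in keys]
    scale = 1.0 / math.sqrt(len(query))
    logits = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    return softmax(logits)


# Illustrative large-magnitude activations, as might occur in unstable training.
query = [50.0, -20.0, 10.0, 5.0]
keys = [[40.0, -10.0, 0.0, 5.0], [-30.0, 20.0, 10.0, 0.0]]

without_norm = attention_weights(query, keys, qk_norm=False)
with_norm = attention_weights(query, keys, qk_norm=True)
print(without_norm)  # saturated: almost all weight on one key
print(with_norm)     # bounded logits give a softer distribution
```

Because the normalized vectors have bounded length, the logits stay in a fixed range regardless of how large the raw activations grow, which keeps the softmax from saturating.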

In addition, a perceiver resampler compresses each image frame and audio clip into a fixed number of tokens, and scaled cosine attention is used to apply stricter normalization in the perceiver, which significantly stabilizes training.

To avoid numerical instability, float32 attention logits are also enabled, and the ViT and AST are frozen during pre-training and fine-tuned at the end of instruction tuning.
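Scaled cosine attention replaces the raw dot product with a cosine similarity divided by a temperature. The sketch below shows only the logit computation; the temperature `tau` is learnable in practice but fixed here, and its value of 0.1 is an assumption chosen for illustration.

```python
import math


def cosine_similarity(a, b, eps=1e-8):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / max(norm_a * norm_b, eps)


def scaled_cosine_logits(query, keys, tau=0.1):
    """Attention logits as cos(q, k) / tau, so each logit lies in [-1/tau, 1/tau]."""
    return [cosine_similarity(query, key) / tau for key in keys]


query = [3.0, -1.0, 2.0]
keys = [[1.0, 0.0, 1.0], [-2.0, 4.0, 0.5]]

small = scaled_cosine_logits(query, keys)
large = scaled_cosine_logits([1000.0 * q for q in query], keys)
print(small)
print(large)  # same logits: the magnitude of the query no longer matters
```

The logits depend only on the angle between query and key, so activations that grow during training cannot blow up the attention scores.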

The above figure shows that despite the heterogeneity of input and output modalities, the pre-training loss of the model is stable.

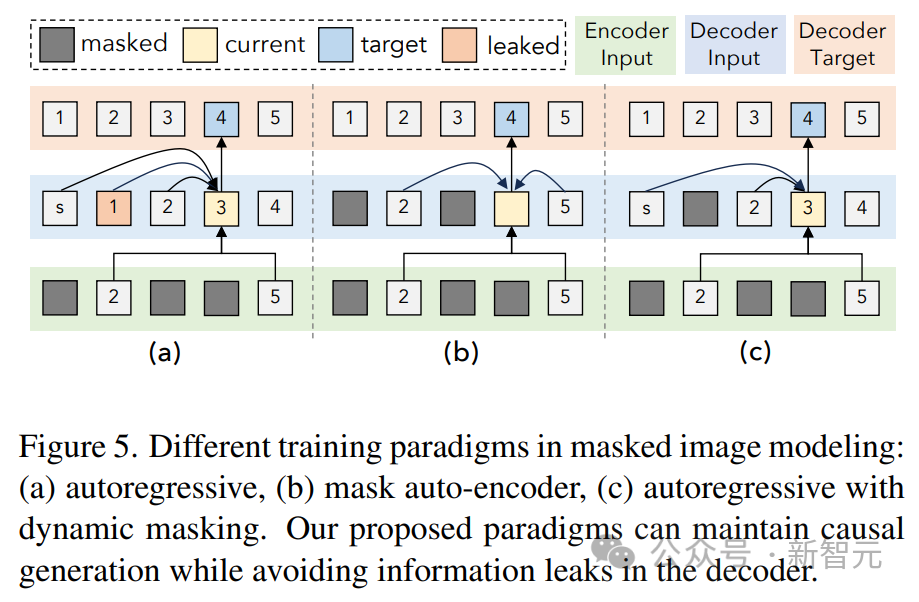

The paper follows the UL2 paradigm. For image and audio targets, two similar paradigms are defined:

[R]: Masked denoising, which randomly masks x% of the input image or audio patch features and lets the model reconstruct them;

[S]: Asks the model to generate the target modality conditioned on the other input modalities.

During training, the input text is prefixed with a modality token ([Text], [Image], or [Audio]) and a paradigm token ([R], [S], or [X]) to indicate the task, and dynamic masking is used for autoregressive training.
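The prefix and the [R]-style masking can be sketched as follows. The prefix string format, the helper names, and the 75% mask ratio used in the example are assumptions; the article gives the ratio only as "x%".

```python
import random

MODALITIES = {"[Text]", "[Image]", "[Audio]"}
PARADIGMS = {"[R]", "[S]", "[X]"}


def build_prefix(modality: str, paradigm: str) -> str:
    """Prefix that tells the model which target modality and objective to use."""
    if modality not in MODALITIES or paradigm not in PARADIGMS:
        raise ValueError("unknown modality or paradigm token")
    return f"{modality} {paradigm}"


def mask_patches(patches, mask_ratio, rng):
    """Hide a random fraction of patch features; return (corrupted, masked indices)."""
    n_mask = round(len(patches) * mask_ratio)
    masked = set(rng.sample(range(len(patches)), n_mask))
    corrupted = [None if i in masked else p for i, p in enumerate(patches)]
    return corrupted, sorted(masked)


rng = random.Random(0)
patches = [f"patch_{i}" for i in range(16)]  # stand-ins for patch features
corrupted, masked = mask_patches(patches, mask_ratio=0.75, rng=rng)

print(build_prefix("[Image]", "[R]"))
print(len(masked), "of", len(patches), "patches masked")
```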

As shown in the figure above, one problem with masked denoising for images and audio is information leakage on the decoder side.

The solution here is to mask the token in the decoder's input except when that token is the one being predicted, which does not interfere with causal prediction while eliminating the data leakage.

Training on a large amount of multi-modal data results in transformer input and output sequences whose lengths are highly variable.

Packing is used to solve this problem: the tokens of multiple examples are packed into a single sequence, and attention is masked to prevent the transformer from cross-attending between examples.

During training, a heuristic algorithm rearranges the data streamed to the model so that long samples are matched with short samples they can be packed with. The paper's dynamic packing increases training throughput by nearly 4 times.
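The attention masking used with packing can be illustrated with a block-diagonal mask. This minimal sketch covers only the "same example" constraint; a decoder would additionally apply a causal mask, and the function name is illustrative.

```python
def packed_attention_mask(lengths):
    """mask[i][j] is True when token i may attend to token j (same example only)."""
    segment_ids = []
    for segment, length in enumerate(lengths):
        segment_ids.extend([segment] * length)
    return [[a == b for b in segment_ids] for a in segment_ids]


# Two examples of length 3 and 2 packed into one sequence of 5 tokens.
mask = packed_attention_mask([3, 2])
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
# 11100
# 11100
# 11100
# 00011
# 00011
```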
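The article does not spell out the heuristic, so the sketch below substitutes a generic first-fit-decreasing bin packing to show the idea of pairing long and short samples. It works on a fixed batch rather than a stream, and the sequence lengths are invented for the example.

```python
def pack_first_fit(lengths, max_len):
    """Greedy packing: longest examples first, each into the first bin with room."""
    bins = []  # each bin holds the indices of the examples packed together
    free = []  # remaining token capacity of each bin
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    for i in order:
        if lengths[i] > max_len:
            raise ValueError(f"example {i} is longer than max_len")
        for b in range(len(bins)):
            if lengths[i] <= free[b]:
                bins[b].append(i)
                free[b] -= lengths[i]
                break
        else:  # no existing bin had room
            bins.append([i])
            free.append(max_len - lengths[i])
    return bins


lengths = [900, 100, 500, 480, 20]
print(pack_first_fit(lengths, max_len=1024))  # [[0, 1, 4], [2, 3]]
```

Here five examples that would each occupy a padded 1024-token sequence fit into two packed sequences, which is where the throughput gain comes from.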

Multi-modal instruction tuning is the key process for equipping the model with different skills and abilities across various modalities, and even for adapting it to new and unique instructions.

The researchers built a multimodal instruction tuning dataset by combining a wide range of supervised datasets and tasks.

The distribution of the instruction tuning data is shown in the figure above. Overall, the instruction tuning mix consists of 60% prompting data, 30% data inherited from pre-training (to avoid catastrophic forgetting), 6% task-augmentation data built from existing data sources, and 4% free-form text (to enable chat-like replies).
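One simple way to realize such a mixture is to sample the data source of each training example according to the stated proportions. The source names and the sampling scheme below are assumptions for illustration; the article only gives the percentages.

```python
import random
from collections import Counter

# Proportions as stated in the article; the keys are hypothetical labels.
MIXTURE = {
    "prompting": 0.60,
    "pretraining_replay": 0.30,
    "task_augmentation": 0.06,
    "free_form_text": 0.04,
}


def sample_sources(n, rng):
    """Draw the data source for each of n training examples."""
    names = list(MIXTURE)
    weights = list(MIXTURE.values())
    return rng.choices(names, weights=weights, k=n)


rng = random.Random(0)
counts = Counter(sample_sources(10_000, rng))
for name in MIXTURE:
    print(f"{name}: {counts[name] / 10_000:.3f}")
```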
