
Comparing different Java crawler frameworks: Which one is suitable for achieving your goals?

Evaluating Java crawler frameworks: Which one can help you achieve your goals?

Introduction: With the rapid growth of the Internet, web crawling has become an important way to collect information. Java developers have many good crawler frameworks to choose from. This article evaluates several commonly used ones (Jsoup, WebMagic and HttpClient) and gives a code example for each to help readers choose the right one.
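All three tools fit into the same basic crawl loop: take a URL from a queue, fetch the page, extract data and links, and queue any links not seen before. The framework-free sketch below shows that loop in plain Java (9 or later, for `List.of`/`Map.of`) as a common reference point for the comparison. It is a simplification under stated assumptions: the `web` map stands in for real HTTP fetching and HTML parsing, and `CrawlLoopSketch` and `crawl` are hypothetical names. In these terms, Jsoup covers the fetch and parse steps, HttpClient covers only the fetch step, and WebMagic runs the whole loop, including the queue and duplicate filtering (its Scheduler component).

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical, framework-free sketch of a breadth-first crawl loop.
 * The "web" is an in-memory map from URL to the links found on that page,
 * so the example runs offline.
 */
public class CrawlLoopSketch {

    /** Crawls breadth-first from seed and returns pages in visit order, at most maxPages of them. */
    public static List<String> crawl(Map<String, List<String>> web, String seed, int maxPages) {
        Deque<String> frontier = new ArrayDeque<>(); // URLs waiting to be fetched
        Set<String> seen = new HashSet<>();          // URLs already queued, to avoid duplicates
        List<String> visited = new ArrayList<>();    // visit order, returned to the caller

        frontier.add(seed);
        seen.add(seed);
        while (!frontier.isEmpty() && visited.size() < maxPages) {
            String url = frontier.poll();
            // Stand-in for "fetch and parse": a real crawler would send an HTTP request here
            List<String> links = web.getOrDefault(url, List.of());
            visited.add(url);
            for (String link : links) {
                if (seen.add(link)) { // add() returns false if the link was already queued
                    frontier.add(link);
                }
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        Map<String, List<String>> web = Map.of(
                "/", List.of("/a", "/b"),
                "/a", List.of("/", "/c"),
                "/b", List.of("/c"),
                "/c", List.of());
        System.out.println(crawl(web, "/", 10)); // prints [/, /a, /b, /c]
    }
}
```

The `seen` set is what keeps the crawler from fetching the same page twice when pages link back to each other, and the `maxPages` cap stops the crawl before it wanders across an entire site.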

1. Jsoup

Jsoup is a Java HTML parser that makes it easy to extract data from web pages. It can parse, traverse and manipulate HTML elements using CSS selectors or a jQuery-like API, and it can also fetch pages itself via `Jsoup.connect()`, so a simple crawler takes only a few lines. Here is an example:

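```java
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class JsoupSpider {
    public static void main(String[] args) throws Exception {
        // Send an HTTP GET request and parse the returned page into a Document
        Document doc = Jsoup.connect("https://example.com")
                .userAgent("Mozilla/5.0") // some sites reject requests without a browser-like User-Agent
                .timeout(10_000)          // timeout in milliseconds
                .get();

        // Use a CSS selector to find every <a> element that has an href attribute
        Elements links = doc.select("a[href]");

        // Iterate over the matches and print each link's text and absolute URL
        for (Element link : links) {
            System.out.println(link.text() + " -> " + link.attr("abs:href"));
        }
    }
}
```

2. WebMagic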

WebMagic is a full-featured Java crawler framework that supports multi-threading, distributed crawling, dynamic proxies and more. Its flexible programming interface lets you customize the crawler to your needs: you supply a PageProcessor that extracts data from each page and a Pipeline that handles the results. Here is a WebMagic example:

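```java
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.ResultItems;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.Task;
import us.codecraft.webmagic.pipeline.Pipeline;
import us.codecraft.webmagic.processor.PageProcessor;

public class WebMagicSpider {
    public static void main(String[] args) {
        // Create the spider and configure its start URL, page processor and output pipeline
        Spider.create(new PageProcessor() {
            // Retry failed downloads up to 3 times and pause 1000 ms between requests
            private final Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

            @Override
            public void process(Page page) {
                // Parse the page and extract the data you need; here, the <title> text
                page.putField("title", page.getHtml().xpath("//title/text()").toString());
            }

            @Override
            public Site getSite() {
                return site;
            }
        })
        .addUrl("https://example.com")
        .addPipeline(new Pipeline() {
            @Override
            public void process(ResultItems resultItems, Task task) {
                // Handle the crawl results (e.g. save them); here they are just printed
                System.out.println("title: " + resultItems.get("title"));
            }
        })
        .run();
    }
}
```

3. HttpClient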

Apache HttpClient is a full-featured HTTP client library for sending HTTP requests and receiving responses. It supports the common request methods, request parameters and several ways of sending request data. It does not parse HTML, so to build a crawler you combine it with an HTML parsing library. Here is an example that fetches a page with HttpClient:

```java
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

public class HttpClientSpider {
    public static void main(String[] args) throws Exception {
        // Create an HTTP client; try-with-resources closes it when done
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // Build an HTTP GET request
            HttpGet httpGet = new HttpGet("https://example.com");

            // Send the request and obtain the response (also closed automatically)
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                System.out.println("Status: " + response.getStatusLine().getStatusCode());

                // Read the response body as a UTF-8 string
                String content = EntityUtils.toString(response.getEntity(), "UTF-8");

                // TODO: parse the content (for example with Jsoup.parse(content)) and extract the data you need
                System.out.println(content.length() + " characters received");
            }
        }
    }
}
```

Summary: This article evaluated several commonly used Java crawling libraries and gave a code example for each. Jsoup suits simple jobs that fetch and parse individual pages; WebMagic is a complete crawler framework with multi-threading and distributed crawling for larger crawls; HttpClient gives fine-grained control over HTTP requests but needs a separate HTML parser. Choose according to your requirements and experience, and combine them where it helps, for example fetching with HttpClient and parsing with Jsoup. Whatever you use, make sure your crawling is legal and compliant: follow the relevant laws and regulations and each website's terms of use to avoid legal risk.
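Part of crawling responsibly is not overloading the target site. WebMagic has a setting for this (`Site.me().setSleepTime(...)` sets a pause between requests), but with Jsoup or HttpClient you have to add it yourself. The sketch below is one hypothetical way to do so: a `PolitenessDelay` class (not part of any library discussed here) that enforces a minimum interval between requests to the same host. The current time is passed in explicitly so the logic can be tested without sleeping, and the 1000 ms interval in `main` is an arbitrary example value; `main` only prints where a real crawler would fetch.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical helper that enforces a minimum delay between requests to the same host.
 * Times are in milliseconds and supplied by the caller.
 */
public class PolitenessDelay {
    private final long minIntervalMillis;
    private final Map<String, Long> nextAllowed = new HashMap<>(); // host -> earliest next request time

    public PolitenessDelay(long minIntervalMillis) {
        this.minIntervalMillis = minIntervalMillis;
    }

    /**
     * Reserves the next request slot for host and returns how many milliseconds
     * the caller should wait before sending (0 means send now).
     * Synchronized so several crawler threads can share one instance.
     */
    public synchronized long reserve(String host, long nowMillis) {
        long allowedAt = nextAllowed.getOrDefault(host, nowMillis);
        long start = Math.max(allowedAt, nowMillis);
        nextAllowed.put(host, start + minIntervalMillis);
        return start - nowMillis;
    }

    public static void main(String[] args) throws InterruptedException {
        PolitenessDelay delay = new PolitenessDelay(1000);
        String[] urls = {"https://example.com/page1", "https://example.com/page2"};
        for (String url : urls) {
            String host = URI.create(url).getHost();
            long wait = delay.reserve(host, System.currentTimeMillis());
            Thread.sleep(wait);
            // A real crawler would fetch the page here, e.g. with Jsoup or HttpClient
            System.out.println("would fetch " + url + " after waiting " + wait + " ms");
        }
    }
}
```

Keying the delay by host rather than globally lets a crawler that visits several sites stay fast overall while still spacing out requests to each individual site.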

The above is the detailed content of "Comparing different Java crawler frameworks: Which one is suitable for achieving your goals?". For more information, please see other related articles on the PHP Chinese website.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Perfect Number in Java

Aug 30, 2024 pm 04:28 PM

Perfect Number in Java

Aug 30, 2024 pm 04:28 PM

Guide to Perfect Number in Java. Here we discuss the Definition, How to check Perfect number in Java?, examples with code implementation.

Weka in Java

Aug 30, 2024 pm 04:28 PM

Weka in Java

Aug 30, 2024 pm 04:28 PM

Guide to Weka in Java. Here we discuss the Introduction, how to use weka java, the type of platform, and advantages with examples.

Smith Number in Java

Aug 30, 2024 pm 04:28 PM

Smith Number in Java

Aug 30, 2024 pm 04:28 PM

Guide to Smith Number in Java. Here we discuss the Definition, How to check smith number in Java? example with code implementation.

Java Spring Interview Questions

Aug 30, 2024 pm 04:29 PM

Java Spring Interview Questions

Aug 30, 2024 pm 04:29 PM

In this article, we have kept the most asked Java Spring Interview Questions with their detailed answers. So that you can crack the interview.

Break or return from Java 8 stream forEach?

Feb 07, 2025 pm 12:09 PM

Break or return from Java 8 stream forEach?

Feb 07, 2025 pm 12:09 PM

Java 8 introduces the Stream API, providing a powerful and expressive way to process data collections. However, a common question when using Stream is: How to break or return from a forEach operation? Traditional loops allow for early interruption or return, but Stream's forEach method does not directly support this method. This article will explain the reasons and explore alternative methods for implementing premature termination in Stream processing systems. Further reading: Java Stream API improvements Understand Stream forEach The forEach method is a terminal operation that performs one operation on each element in the Stream. Its design intention is

TimeStamp to Date in Java

Aug 30, 2024 pm 04:28 PM

TimeStamp to Date in Java

Aug 30, 2024 pm 04:28 PM

Guide to TimeStamp to Date in Java. Here we also discuss the introduction and how to convert timestamp to date in java along with examples.

Java Program to Find the Volume of Capsule

Feb 07, 2025 am 11:37 AM

Java Program to Find the Volume of Capsule

Feb 07, 2025 am 11:37 AM

Capsules are three-dimensional geometric figures, composed of a cylinder and a hemisphere at both ends. The volume of the capsule can be calculated by adding the volume of the cylinder and the volume of the hemisphere at both ends. This tutorial will discuss how to calculate the volume of a given capsule in Java using different methods. Capsule volume formula The formula for capsule volume is as follows: Capsule volume = Cylindrical volume Volume Two hemisphere volume in, r: The radius of the hemisphere. h: The height of the cylinder (excluding the hemisphere). Example 1 enter Radius = 5 units Height = 10 units Output Volume = 1570.8 cubic units explain Calculate volume using formula: Volume = π × r2 × h (4

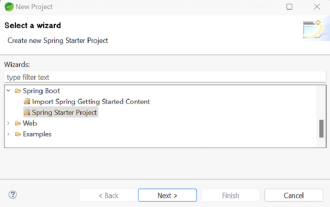

How to Run Your First Spring Boot Application in Spring Tool Suite?

Feb 07, 2025 pm 12:11 PM

How to Run Your First Spring Boot Application in Spring Tool Suite?

Feb 07, 2025 pm 12:11 PM

Spring Boot simplifies the creation of robust, scalable, and production-ready Java applications, revolutionizing Java development. Its "convention over configuration" approach, inherent to the Spring ecosystem, minimizes manual setup, allo