Another powerful AI video generation tool has arrived. Alibaba and ByteDance recently and quietly launched image-to-video tools of their own.

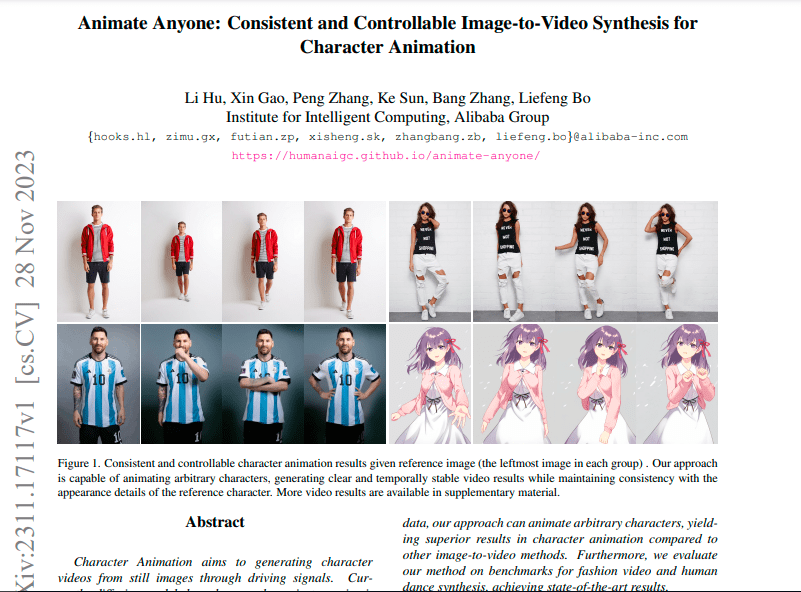

Alibaba launched Animate Anyone, a project developed by its Institute for Intelligent Computing. Given a single static character image (a real person, an anime or cartoon character, etc.) and a sequence of motions or poses (such as dancing or walking), it animates the character while preserving detailed features such as facial expressions and clothing.

With just a photo of Messi, the football star can be made to strike all kinds of poses (see the image below). On the same principle, it is easy to make Messi dance.

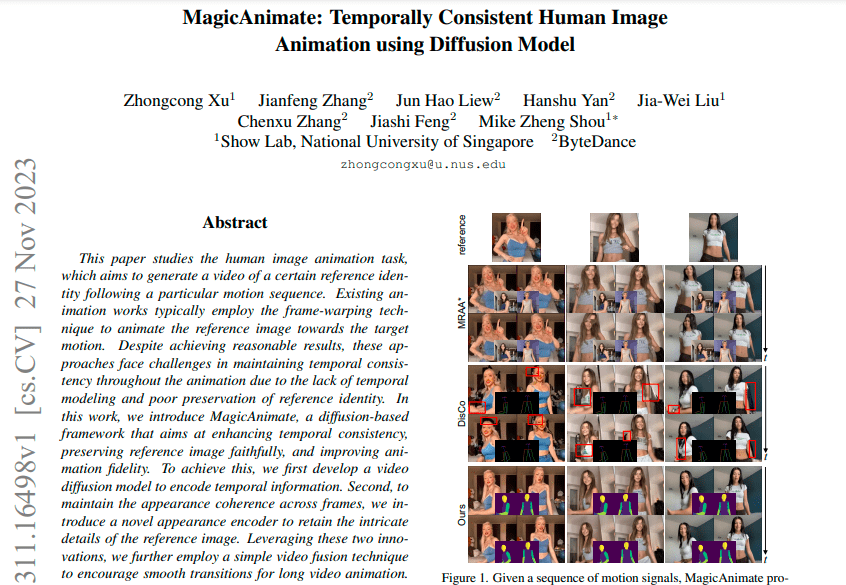

The National University of Singapore and ByteDance jointly launched Magic Animate, which also uses AI to turn static images into dynamic videos. ByteDance said that on the challenging TikTok dancing dataset, Magic Animate improves video fidelity by more than 38% over the strongest baseline.

In image-to-video generation, Alibaba and ByteDance moved in lockstep, releasing papers, publishing code, and opening test demos almost simultaneously. Their two papers were released only one day apart.

ByteDance's paper was released on November 27:

Alibaba's paper was released on November 28:

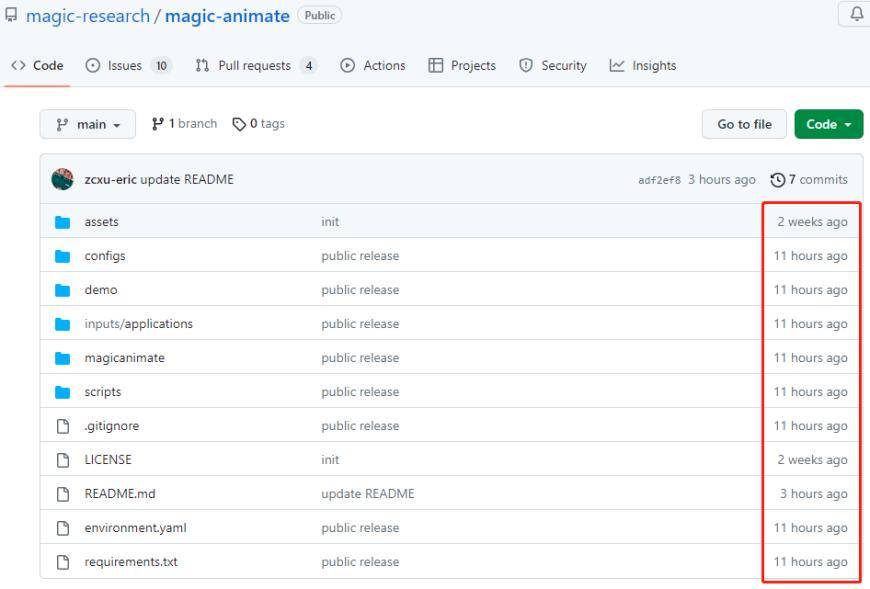

Both companies continue to update their open-source repositories on GitHub.

Magic Animate's open-source project files

Animate Anyone's open-source project files

This once again underscores that video generation is a hotly contested area of AIGC, with tech giants and star startups watching it closely and investing heavily. Runway, Meta, and Stability AI have all launched text-to-video applications, and Adobe recently announced its acquisition of the AI video creation company Rephrase.ai.

Judging from the demo videos released by the two companies, generation quality has improved significantly, with smoother and more realistic results than before. The tools appear to overcome common shortcomings of current image and video generation applications, such as local distortion, blurred details, inconsistency with the prompt, deviation from the original image, dropped frames, and screen jitter.

Both tools use diffusion models to create temporally coherent human image animations, and their training data are broadly similar. Both build on Stable Diffusion, a latent text-to-image diffusion model created by researchers and engineers from CompVis, Stability AI, and LAION and trained on 512x512 images from a subset of the LAION-5B database. When Stable Diffusion was released, LAION-5B was the largest freely accessible multimodal dataset in existence.
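To make "latent diffusion" a little more concrete, here is a minimal Python sketch. It assumes a Stable Diffusion v1-style autoencoder that shrinks each side of a 512x512 RGB image by a factor of 8 into 4 latent channels, and it uses a linear noise schedule (beta rising from 1e-4 to 0.02 over 1,000 steps, as in the original DDPM formulation) purely for illustration; Stable Diffusion itself uses a different schedule. The function names are hypothetical, and this is not code from Animate Anyone or Magic Animate.

```python
import math
import random

# Assumption: Stable Diffusion v1-style autoencoder that downsamples each
# spatial side by 8 and outputs 4 latent channels.
IMAGE_SIDE = 512
RGB_CHANNELS = 3
DOWNSAMPLE = 8
LATENT_CHANNELS = 4


def pixel_values(side: int = IMAGE_SIDE) -> int:
    """Number of values in a side x side RGB image."""
    return side * side * RGB_CHANNELS


def latent_values(side: int = IMAGE_SIDE) -> int:
    """Number of values in the latent tensor the diffusion model denoises."""
    latent_side = side // DOWNSAMPLE
    return latent_side * latent_side * LATENT_CHANNELS


def linear_alpha_bars(num_steps: int = 1000,
                      beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> list[float]:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)
    for a linear beta schedule (illustrative assumption)."""
    alpha_bars = []
    running = 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list[float], t: int, alpha_bars: list[float],
              rng: random.Random) -> list[float]:
    """Closed-form forward diffusion step:
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, eps ~ N(0, 1)."""
    a_bar = alpha_bars[t]
    return [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0)
            for x in x0]


if __name__ == "__main__":
    print("pixel values: ", pixel_values())                     # 786432
    print("latent values:", latent_values())                    # 16384
    print("reduction:    ", pixel_values() // latent_values())  # 48

    schedule = linear_alpha_bars()
    rng = random.Random(0)
    x0 = [0.5] * 8  # a toy stand-in for a flattened latent
    print("slightly noised:", add_noise(x0, 10, schedule, rng)[:3])
    print("almost pure noise:", add_noise(x0, 999, schedule, rng)[:3])
```

Under these assumptions, denoising a 64x64x4 latent instead of a 512x512x3 image means each step handles 48 times fewer values, which is one reason latent diffusion is cheaper than diffusing pixels directly; animating many frames multiplies that cost again.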

As for applications, Alibaba's researchers state in their paper that Animate Anyone, as a foundational method, may be extended to a variety of image-to-video applications in the future. The tool has many potential use cases, such as online retail, entertainment videos, art creation, and virtual characters. ByteDance likewise emphasized that Magic Animate shows strong generalization and can be applied to multiple scenarios.

The "holy grail" of multimodal applications: text-to-video

Text-to-video applications combine text and speech technology with multimodal analysis and processing of video content, associating textual and spoken information with video imagery to offer richer video understanding and interaction. They span a wide range of fields, including intelligent video surveillance, virtual reality, video editing, and content analysis. By analyzing text and speech, such applications can identify and understand the objects, scenes, and actions in a video, giving users smarter video processing and control.

In intelligent video surveillance, text-to-video technology can automatically label and classify surveillance footage, improving monitoring efficiency and accuracy. In virtual reality, it can respond to users' voice commands within the virtual environment for a more immersive experience. In video editing and content analysis, it can help users automatically extract key information from videos and edit them intelligently.

In short, as the "holy grail" of multimodal applications, text-to-video offers a more comprehensive and intelligent approach to understanding and interacting with video content. Its development should bring more innovation and convenience to many fields and drive scientific, technological, and social progress.

Video has advantages over text and images: it conveys information more expressively, offers richer visuals, and is dynamic. It can combine text, images, sound, and visual effects, integrating multiple forms of information in a single medium.

AI video tools are powerful and open up a wider range of applications. From a simple text description or other simple inputs, they can generate complete, high-quality video content, lowering the barrier to video creation. This lets non-professionals present content accurately through video, which is expected to make content production more efficient and bring more creative ideas to a range of industries.

Song Jiaji of Guosheng Securities has previously said that AI text-to-video is the next stop for multimodal applications and the "holy grail" of multimodal AIGC: as AI video completes the last piece of the multimodal puzzle in AI creation, downstream applications will accelerate. Shengang Securities said that video AI is the last link in the multimodal field. Huatai Securities said that the AIGC trend has gradually shifted from text-to-text and text-to-image toward text-to-video, and that the heavy compute and data requirements of text-to-video will sustain strong demand for upstream AI computing power.

However, the gap among large companies, and between large companies and startups, is small; they could even be said to be at the same starting line. Few text-to-video applications are currently in public beta, only a handful such as Runway Gen-2, Zeroscope, and Pika. Even Silicon Valley AI giants such as Meta and Google are making slow progress on text-to-video: their respective models, Make-A-Video and Phenaki, have not yet been opened for public testing.

From a technical perspective, the underlying models and techniques of video generation tools are still maturing. Mainstream text-to-video models are currently based mainly on Transformer and diffusion models. Diffusion-based tools focus mainly on improving video quality, addressing coarse results and missing detail. However, the videos they produce are still under 4 seconds long.

On the other hand, although diffusion models work well, training them requires a great deal of memory and compute, so only large companies and well-funded startups can afford the cost of training.

Source: Science and Technology Innovation Board Daily
