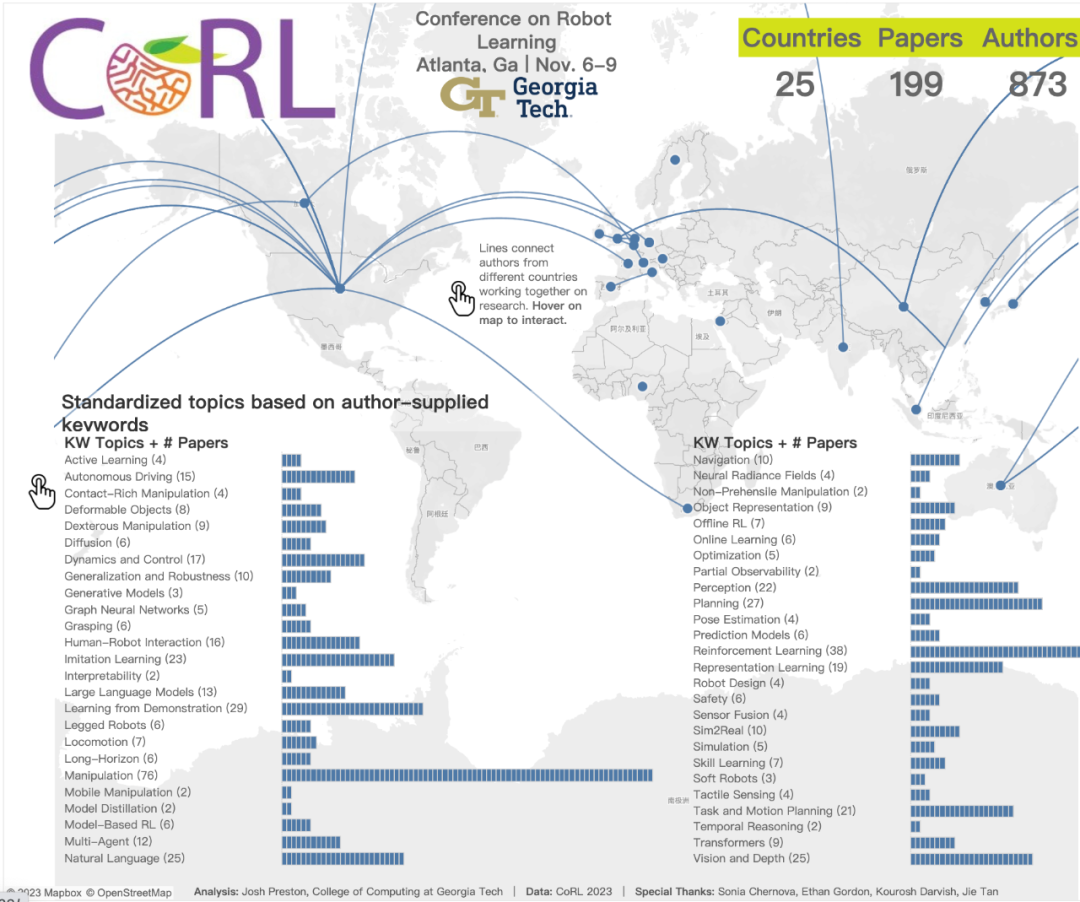

Since it was first held in 2017, CoRL (Conference on Robot Learning) has become one of the world's top academic conferences at the intersection of robotics and machine learning. CoRL is dedicated specifically to robot learning research and covers robotics, machine learning, and control, in both theory and application.

CoRL 2023 will be held in Atlanta, USA, from November 6 to 9. According to official figures, 199 papers from 25 countries were accepted this year. Popular topics include manipulation and reinforcement learning. Although CoRL is smaller than major AI conferences such as AAAI and CVPR, interest in large models, embodied intelligence, and humanoid robots has grown this year, and CoRL will feature a good deal of related research worth following.

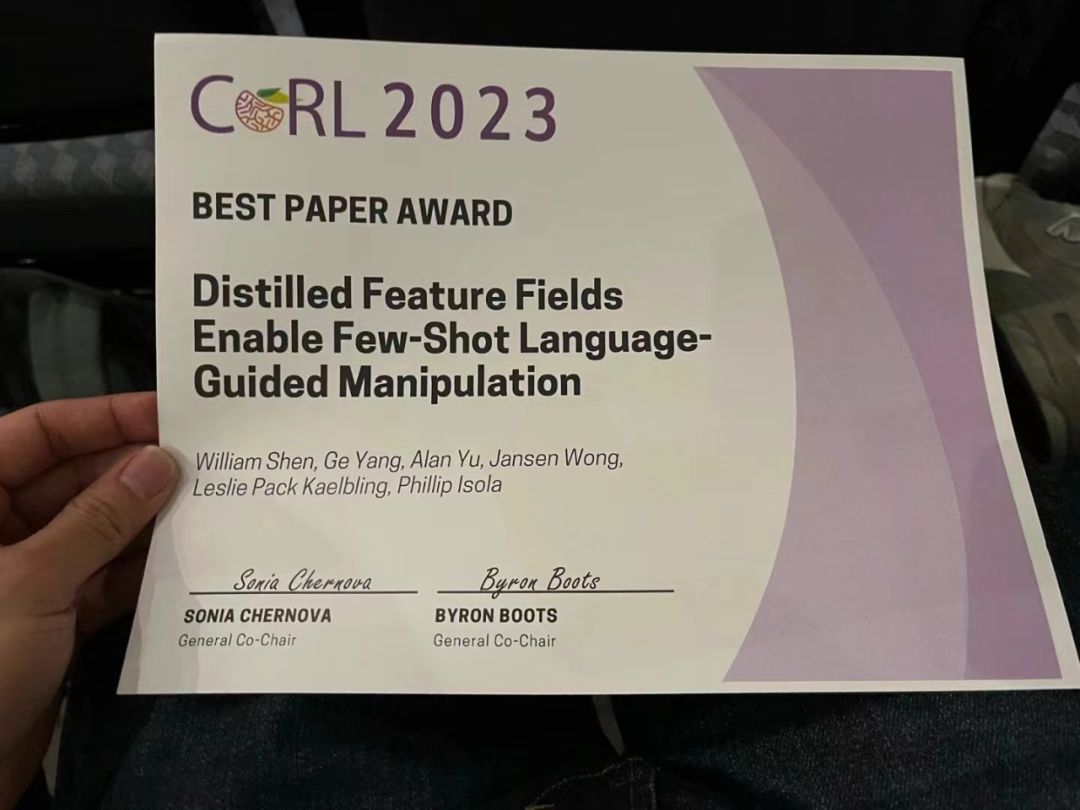

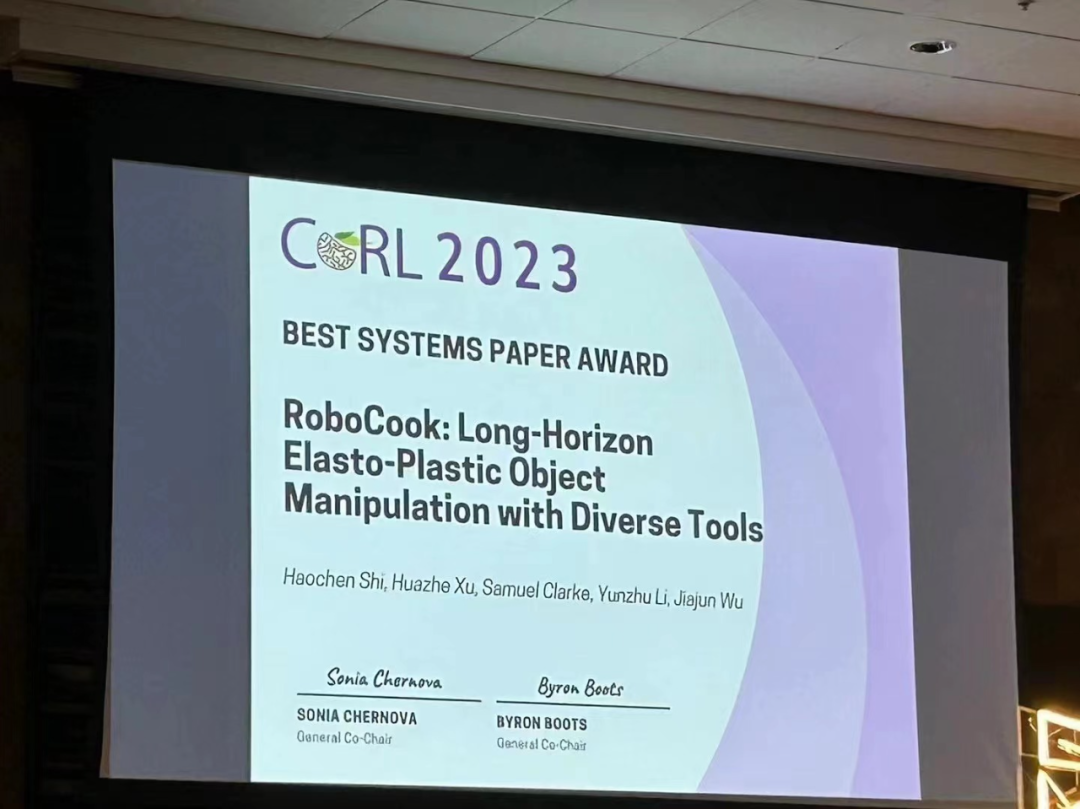

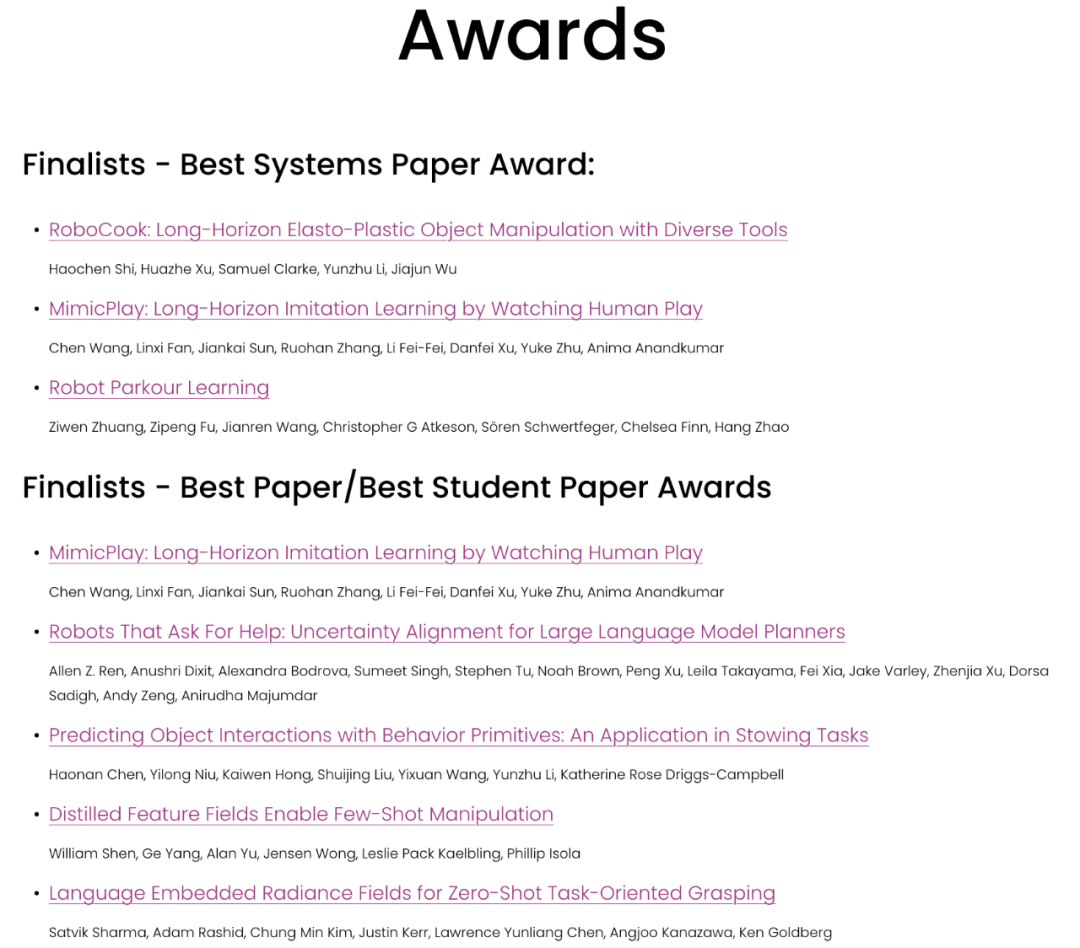

CoRL 2023 has announced its Best Paper Award, Best Student Paper Award, Best Systems Paper Award, and other awards. Below, we introduce the award-winning papers.

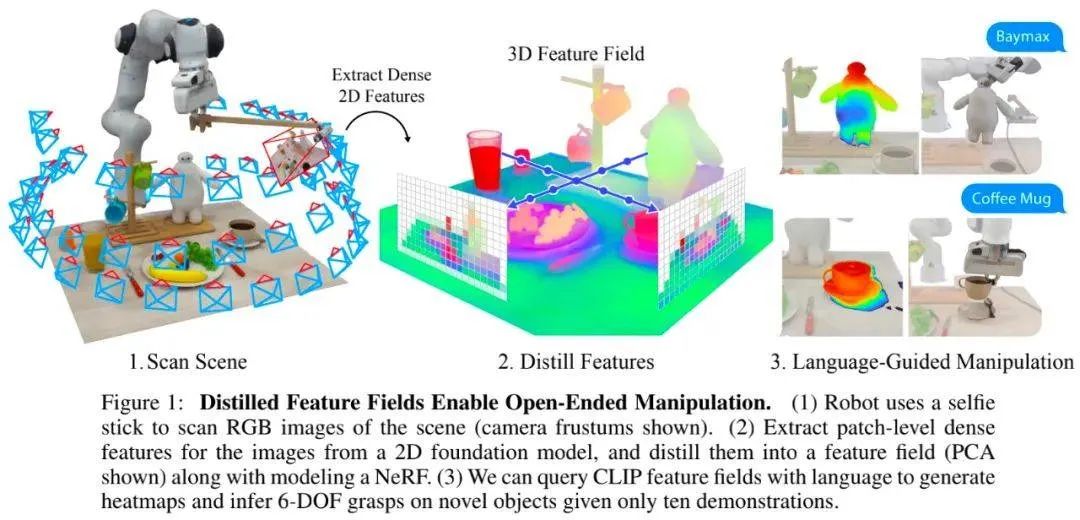

Research overview: Self-supervised and language-supervised image models have absorbed rich world knowledge, which is critical to their ability to generalize. However, image features carry only two-dimensional information, whereas robotics tasks require an understanding of the 3D geometry of real-world objects.

Using distilled feature fields (DFFs), this work combines accurate 3D geometry with the rich semantics of 2D foundation models, allowing robots to draw on the visual and language priors in those models to perform language-guided manipulation.
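The general recipe behind distilled feature fields can be sketched as follows, assuming a NeRF-style volume renderer: each 3D sample point carries a feature vector, features are composited along a camera ray with the same weights used for color, and the field is trained so the rendered feature matches the feature a 2D foundation model (such as CLIP) produces for that pixel. The function names and toy inputs are hypothetical; this illustrates the general technique, not the authors' code.

```python
import math

def render_feature(sigmas, deltas, features):
    """Alpha-composite per-sample feature vectors along one camera ray.

    sigmas:   volume densities at each sample (from the geometry field)
    deltas:   distances between consecutive samples
    features: per-sample feature vectors (from a hypothetical feature field)
    Returns the rendered feature vector for the pixel.
    """
    dim = len(features[0])
    out = [0.0] * dim
    transmittance = 1.0  # fraction of light that reaches the current sample
    for sigma, delta, feat in zip(sigmas, deltas, features):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha           # same weight NeRF uses for color
        for k in range(dim):
            out[k] += weight * feat[k]
        transmittance *= 1.0 - alpha
    return out

def distillation_loss(rendered, teacher):
    """Squared L2 distance between the rendered feature and the 2D model's feature."""
    return sum((r - t) ** 2 for r, t in zip(rendered, teacher))

# Toy ray: empty space, then a dense surface, then more empty space.
rendered = render_feature([0.0, 100.0, 0.0], [0.1, 0.1, 0.1],
                          [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(rendered, distillation_loss(rendered, [0.0, 1.0]))
```

In the toy call, almost all of the weight lands on the dense middle sample, so the rendered feature is close to that sample's feature and the distillation loss against a matching teacher feature is near zero.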

Specifically, the work proposes a few-shot learning method for 6-DOF grasping and placing that leverages strong spatial and semantic priors to generalize to unseen objects. Using features extracted from the vision-language model CLIP, the method accepts open-ended natural-language instructions for manipulating new objects, and the authors demonstrate that it generalizes to unseen expressions and novel objects.
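Once a feature field is available, a language query can in principle be answered by comparing the text embedding of the instruction with the feature stored at each 3D point. The sketch below uses plain cosine similarity, with hand-written 3D toy vectors standing in for real CLIP embeddings; the names are hypothetical, and the paper's actual scoring and grasp-pose search are more involved.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def query_points(point_features, text_embedding, top_k=1):
    """Rank 3D points by how well their distilled feature matches a text embedding.

    Returns the indices of the top_k best-matching points.
    """
    scores = [cosine(f, text_embedding) for f in point_features]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:top_k]

# Toy stand-ins: three points' features and one "instruction" embedding.
point_features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
text_embedding = [0.0, 1.0, 0.1]
print(query_points(point_features, text_embedding, top_k=2))
```

Cosine similarity is used because CLIP-style embeddings are typically compared by direction rather than magnitude; the top-ranked points would then indicate where on the object a grasp should be attempted.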

The co-first authors of this paper are William Shen and Yang Ge, members of the CSAIL Embodied Intelligence group. Yang Ge is a co-organizer of the 2023 CSAIL Embodied Intelligence Symposium.

Heart of the Machine has previously covered this research in detail; see "How powerful are robots supported by large models? MIT CSAIL & IAIFI uses natural language to guide robots to grasp objects".

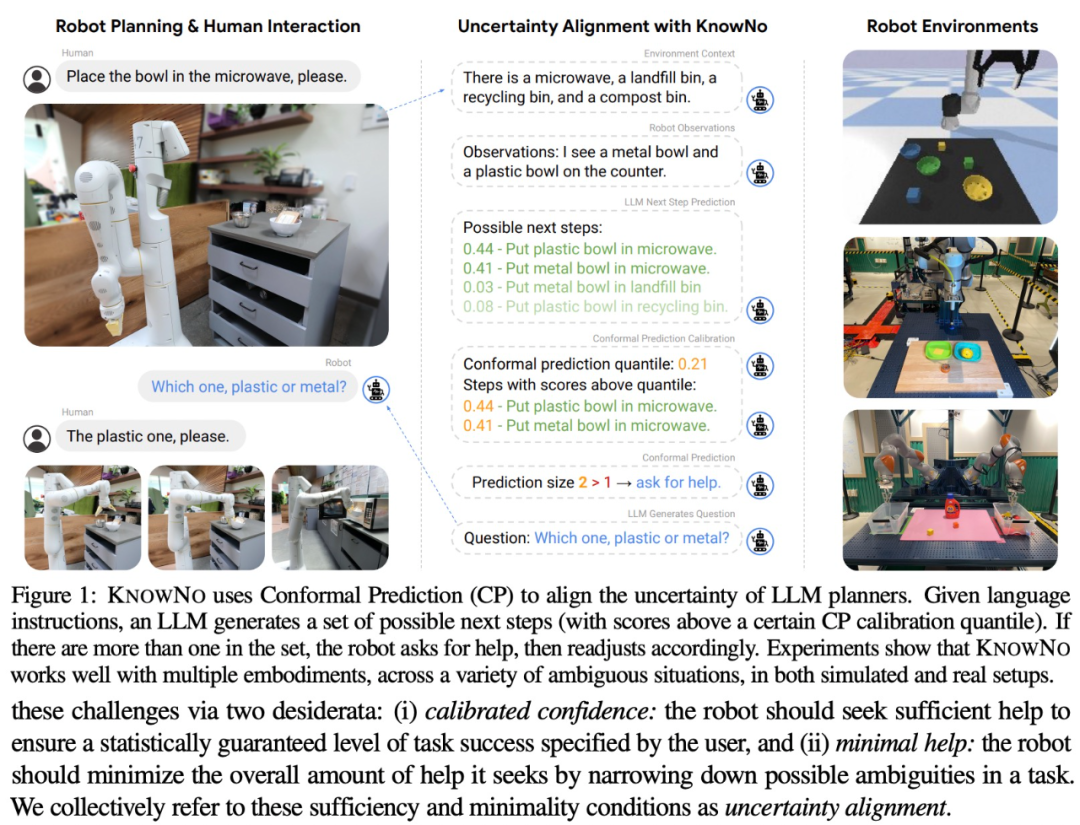

Large language models (LLMs) have broad application prospects, particularly in robotics. However, although LLMs show great potential for step-by-step planning and commonsense reasoning, they are also prone to hallucination.

To address this, the study proposes KnowNo, a new framework for measuring and aligning the uncertainty of LLM-based planners. It lets the LLM recognize what it does not know and ask for help when needed.

KnowNo builds on conformal prediction, which provides statistical guarantees on task completion while minimizing human intervention in multi-step planning tasks.
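To illustrate how conformal prediction can decide when to ask for help, here is a minimal sketch of split conformal prediction for a multiple-choice planner, assuming the LLM assigns a probability to each candidate next action. The function names, option labels, and numbers are hypothetical, and the paper's calibration details may differ. With a calibration set exchangeable with test data, the resulting prediction set contains the correct option with probability at least 1 - epsilon; the planner acts only when exactly one option survives.

```python
import math

def calibrate(true_option_probs, epsilon):
    """Compute the conformal threshold q_hat from a calibration set.

    true_option_probs: the LLM's probability for the correct option on each calibration example
    epsilon: allowed error rate (target coverage is 1 - epsilon)
    """
    n = len(true_option_probs)
    scores = sorted(1.0 - p for p in true_option_probs)  # nonconformity scores
    k = math.ceil((n + 1) * (1 - epsilon))  # rank of the conformal quantile
    if k > n:
        return 1.0  # too few calibration points: keep every option
    return scores[k - 1]

def prediction_set(option_probs, q_hat):
    """Keep every option whose nonconformity score is within the threshold."""
    return [opt for opt, p in option_probs.items() if 1.0 - p <= q_hat]

def plan_step(option_probs, q_hat):
    """Execute if exactly one option remains; otherwise ask a human to choose."""
    options = prediction_set(option_probs, q_hat)
    if len(options) == 1:
        return ("execute", options[0])
    return ("ask_human", options)  # zero or several candidates: uncertain

calib = [0.9, 0.8, 0.95, 0.55, 0.85, 0.4, 0.92, 0.88, 0.75, 0.97]
q_hat = calibrate(calib, epsilon=0.1)
print(plan_step({"blue bowl": 0.85, "green bowl": 0.1, "metal bowl": 0.05}, q_hat))
print(plan_step({"blue bowl": 0.45, "green bowl": 0.45, "metal bowl": 0.1}, q_hat))
```

A clear instruction yields a single surviving option that the robot executes directly, while an ambiguous one leaves two bowls in the set, which is the signal to ask for help.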

The authors evaluated KnowNo on tasks involving various kinds of uncertainty (including spatial and numeric uncertainty) in a range of simulated and real-robot experiments. The results show that KnowNo improves efficiency and autonomy, outperforms the baselines, and is safe and trustworthy. KnowNo can be applied to an LLM directly without fine-tuning, offering an effective, lightweight approach to modeling uncertainty that complements the growing capabilities of foundation models.

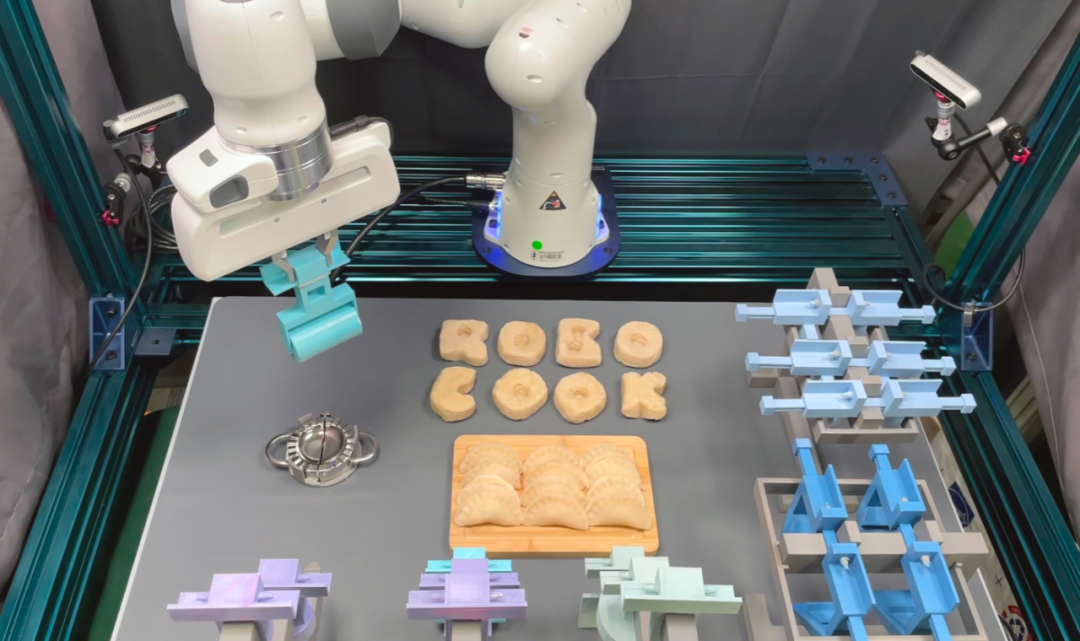

The study shows that with just 20 minutes of real-world interaction data per tool, RoboCook can learn to control a robotic arm to complete complex, long-horizon elastoplastic object manipulation tasks, such as making dumplings and alphabet cookies.

According to the experimental results, RoboCook significantly outperforms existing state-of-the-art methods, remains stable under severe external disturbances, and adapts better to different materials.

It is worth mentioning that the co-first authors of this paper are Haochen Shi, a PhD student at Stanford University, and Huazhe Xu, formerly a postdoctoral researcher at Stanford University and now an assistant professor at the Institute for Interdisciplinary Information Sciences, Tsinghua University. Another author of the paper is Wu Jiajun, an alumnus of Tsinghua's Yao Class and an assistant professor at Stanford University.
