Neural Radiance Fields (NeRF) are a fairly new paradigm in deep learning and computer vision. The technique was introduced in the ECCV 2020 paper "NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis" (which received a Best Paper Honorable Mention), and has since exploded in popularity, with nearly 800 citations to date [1]. The approach marks a sea change in how machine learning processes 3D data.

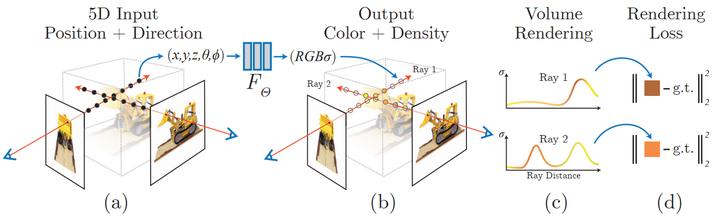

Neural radiance field scene representation and differentiable rendering procedure: images are synthesized by sampling 5D coordinates (position and viewing direction) along camera rays; these locations are fed into an MLP to produce color and volume density; and these values are composited into an image using volume rendering techniques. The rendering function is differentiable, so the scene representation can be optimized by minimizing the residual between the synthesized images and the real observed images.

NeRF is a generative model that, conditioned on a collection of images and accurate camera poses, generates new views of the 3D scene those images share. This process is usually called "novel view synthesis". Beyond that, NeRF explicitly defines the 3D shape and appearance of the scene as a continuous function, from which 3D meshes can be extracted with marching cubes. Although NeRF models learn directly from image data, they use neither convolutional nor transformer layers.

Over the years, there have been many ways to represent 3D data in machine learning applications, from 3D voxels to point clouds to signed distance functions. Their biggest shared disadvantage is that they presuppose a 3D model, obtained either with tools such as photogrammetry or lidar or by hand-crafting it. However, many types of objects, such as highly reflective objects, "mesh-like" objects, or transparent objects, cannot be scanned at scale. 3D reconstruction methods also often suffer from reconstruction errors, which can lead to stair-stepping artifacts or drift that affect model accuracy.

In contrast, NeRF is based on the concept of light fields. A light field is a function that describes how light transport occurs throughout a 3D volume. It describes the light traveling through each coordinate x = (x, y, z) in space and in each direction d, where d is given either as the angles θ and φ or as a unit vector. Together they form a 5D feature space that describes light transport in a 3D scene. Inspired by this representation, NeRF approximates a function that maps from this space to a 4D space consisting of a color c = (R, G, B) and a density σ, which can be thought of as the likelihood that a ray is terminated at this 5D coordinate (e.g. by occlusion). The standard NeRF is therefore a function of the form F: (x, d) -> (c, σ).
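To make the shape of this mapping concrete, here is a minimal plain-Python sketch. The function names, the angle convention (θ as polar angle, φ as azimuth), and the toy field itself are assumptions made purely for illustration; the toy field is a hand-written stand-in and not a trained NeRF.

```python
import math


def direction_from_angles(theta: float, phi: float) -> tuple:
    """Convert a polar angle theta and azimuth phi (radians) to a unit vector.

    The (theta, phi) convention used here is an assumption for illustration.
    """
    return (
        math.sin(theta) * math.cos(phi),
        math.sin(theta) * math.sin(phi),
        math.cos(theta),
    )


def toy_radiance_field(x: tuple, d: tuple) -> tuple:
    """Hypothetical stand-in for F: (x, d) -> (c, sigma).

    Density is constant inside the unit sphere and zero outside;
    color depends only on the viewing direction.
    """
    inside = sum(v * v for v in x) <= 1.0
    sigma = 5.0 if inside else 0.0
    color = tuple(0.5 * (v + 1.0) for v in d)  # map [-1, 1] to [0, 1]
    return color, sigma


d = direction_from_angles(math.pi / 2, 0.0)
color, sigma = toy_radiance_field((0.0, 0.0, 0.0), d)
```

The point of the sketch is only the signature: three position values plus a direction go in, three color values plus one density come out.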

The original NeRF paper parameterized this function using a multilayer perceptron trained on a set of images with known poses. This is one method in a class of techniques called generalized scene reconstruction, which aims to describe 3D scenes directly from a collection of images. This approach has some very nice properties:

Since then, a series of follow-up papers have appeared, covering, for example, few-shot and single-shot learning [2, 3], support for dynamic scenes [4, 5], generalization of light fields to feature fields [6], learning from uncalibrated image collections from the web [7], combination with lidar data [8], large-scale scene representation [9], learning without neural networks [10], and so on.

Overall, given a trained NeRF model and a camera with known pose and image dimensions, we build the scene through the following process:

The radiance field function is just one of several components that, once combined, can create the visual effects seen in the video earlier. (Translator's note: many Chinese sources render "radiance field" as "radiation field"; the translator finds "light emission field" more intuitive.) Overall, this article includes the following parts:

To explain each component as clearly as possible, this article presents its key elements in code that is as concise as possible. It draws on the original implementation by bmild and the PyTorch implementations by yenchenlin and krrish94.

Like the transformer model [11] introduced in 2017, NeRF benefits from a positional encoder applied to its inputs. The encoder uses high-frequency functions to map the continuous inputs into a higher-dimensional space, which helps the model learn high-frequency variation in the data and leads to sharper results. This circumvents the bias of neural networks toward low-frequency functions and allows NeRF to represent finer detail. The authors cite an ICML 2019 paper [12] for this.

If you are familiar with the transformer's positional encoding, the NeRF implementation is pretty standard, with the same alternating sine and cosine expressions. Positional encoder implementation:

```python
from typing import Optional, Tuple

import torch
from torch import nn


class PositionalEncoder(nn.Module):
    """Sine-cosine positional encoder for input points."""

    def __init__(self, d_input: int, n_freqs: int, log_space: bool = False):
        super().__init__()
        self.d_input = d_input
        self.n_freqs = n_freqs  # number of frequency bands (not a sampling rate along the ray)
        self.log_space = log_space
        self.d_output = d_input * (1 + 2 * self.n_freqs)
        self.embed_fns = [lambda x: x]  # identity function: keep the raw input as the first feature

        # Define frequencies in either linear or log scale
        if self.log_space:
            freq_bands = 2.**torch.linspace(0., self.n_freqs - 1, self.n_freqs)
        else:
            freq_bands = torch.linspace(2.**0., 2.**(self.n_freqs - 1), self.n_freqs)

        # Alternate sin and cos
        for freq in freq_bands:
            self.embed_fns.append(lambda x, freq=freq: torch.sin(x * freq))
            self.embed_fns.append(lambda x, freq=freq: torch.cos(x * freq))

    def forward(self, x) -> torch.Tensor:
        """Apply positional encoding to input."""
        return torch.concat([fn(x) for fn in self.embed_fns], dim=-1)
```
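To see what the encoder produces for a single number, the following plain-Python sketch applies the same identity, sine, and cosine terms to one scalar. The function name `encode_scalar` is hypothetical, and the sketch ignores batching and tensors entirely; it is only meant to show the layout and length of the encoded vector.

```python
import math


def encode_scalar(p: float, n_freqs: int, log_space: bool = True) -> list:
    """Encode one scalar as [p, sin(f0*p), cos(f0*p), sin(f1*p), cos(f1*p), ...]."""
    if log_space:
        # Frequencies 2^0, 2^1, ..., 2^(n_freqs - 1)
        freqs = [2.0 ** k for k in range(n_freqs)]
    else:
        # n_freqs evenly spaced frequencies between 2^0 and 2^(n_freqs - 1)
        lo, hi = 1.0, 2.0 ** (n_freqs - 1)
        freqs = [lo + (hi - lo) * k / max(n_freqs - 1, 1) for k in range(n_freqs)]

    encoded = [p]
    for f in freqs:
        encoded.append(math.sin(p * f))
        encoded.append(math.cos(p * f))
    return encoded


features = encode_scalar(0.5, n_freqs=4)
print(len(features))  # 1 + 2 * 4 values for one input coordinate
```

Each input coordinate therefore grows to `1 + 2 * n_freqs` values, which is where the `d_input * (1 + 2 * n_freqs)` output width comes from.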

Thinking: this positional encoding encodes "input points". Are these the sample points along the line of sight, or different viewing angles? And is `self.n_freqs` a sampling frequency along the line of sight? The input points are the sample positions along each ray: if those positions were not encoded, the network could not represent them effectively and their RGBA values could not be trained. `n_freqs` is not a sampling rate; it is the number of sine-cosine frequency bands applied to each input coordinate. The viewing direction gets its own encoder of the same kind.

In the original paper, the radiance field function is represented by the NeRF model. The NeRF model is a typical multilayer perceptron that takes encoded 3D points and viewing directions as input and returns RGBA values as output. Although this article uses a neural network, any function approximator can be used here. For example, Plenoxels, the follow-up paper by Yu et al., uses spherical harmonics to train orders of magnitude faster while achieving competitive results [10].


The NeRF model is 8 layers deep, with a feature dimension of 256 for most layers. A skip (residual) connection is placed at layer 4. After these layers, the RGB and σ values are produced. The RGB branch is processed by a linear layer, concatenated with the viewing direction, passed through another linear layer, and finally recombined with σ at the output. PyTorch module implementation of the NeRF model:

```python
class NeRF(nn.Module):
    """Neural radiance fields module."""

    def __init__(
        self,
        d_input: int = 3,
        n_layers: int = 8,
        d_filter: int = 256,
        skip: Tuple[int] = (4,),  # one-element tuple: skip connection after layer 4
        d_viewdirs: Optional[int] = None,
    ):
        super().__init__()
        self.d_input = d_input  # width of the (encoded) position input; 3 for raw XYZ
        self.skip = skip  # layers after which the input is concatenated back in
        self.act = nn.functional.relu
        self.d_viewdirs = d_viewdirs  # width of the (encoded) viewing direction, or None

        # Create model layers. A layer that follows a skip connection receives
        # d_input extra features, because the original input is concatenated
        # onto the activations before it.
        self.layers = nn.ModuleList(
            [nn.Linear(self.d_input, d_filter)] +
            [nn.Linear(d_filter + self.d_input, d_filter) if i in skip
             else nn.Linear(d_filter, d_filter) for i in range(n_layers - 1)]
        )

        # Bottleneck layers
        if self.d_viewdirs is not None:
            # If using viewdirs, split alpha and RGB
            self.alpha_out = nn.Linear(d_filter, 1)
            self.rgb_filters = nn.Linear(d_filter, d_filter)
            self.branch = nn.Linear(d_filter + self.d_viewdirs, d_filter // 2)
            self.output = nn.Linear(d_filter // 2, 3)  # half-width, view-dependent RGB branch
        else:
            # If no viewdirs, use simpler output
            self.output = nn.Linear(d_filter, 4)  # 4 outputs: RGB plus alpha

    def forward(
        self,
        x: torch.Tensor,
        viewdirs: Optional[torch.Tensor] = None,
    ) -> torch.Tensor:
        """Forward pass with optional view direction."""
        if self.d_viewdirs is None and viewdirs is not None:
            raise ValueError('Cannot input x_direction')

        # Apply forward pass up to bottleneck
        x_input = x  # encoded sample positions, last dimension d_input
        for i, layer in enumerate(self.layers):
            x = self.act(layer(x))
            if i in self.skip:
                x = torch.cat([x, x_input], dim=-1)

        # Apply bottleneck
        if self.d_viewdirs is not None:
            # Split alpha from network output; RGB passes through further layers
            alpha = self.alpha_out(x)

            # Pass through bottleneck to get RGB
            x = self.rgb_filters(x)
            x = torch.concat([x, viewdirs], dim=-1)
            x = self.act(self.branch(x))  # self.branch output width: d_filter // 2
            x = self.output(x)  # self.output output width: 3

            # Concatenate alphas to output
            x = torch.concat([x, alpha], dim=-1)
        else:
            # Simple output
            x = self.output(x)
        return x
```

Thinking: what are the input and output of this NeRF class, and what happens inside it? The `__init__` parameters mainly set the input width, the number of layers, and the layer width of the network. Conceptually the input is 5D (a position and a viewing direction) and the output is RGBA. Is that output a single point or a whole series of points along a line of sight? The class works point by point: each row of `x` is one encoded sample position, paired with the encoded viewing direction of its ray, and the network returns one RGBA value for that sample.

The class itself says nothing about the sampling interval. Choosing the sample points along each line of sight happens outside the class, and the per-sample RGBA values are only combined into the color seen at a pixel later, during volume rendering. So the NeRF class code performs the multi-layer feed-forward pass: an encoded position and viewing direction go in, and a 4D RGBA value comes out.
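The effect of the skip connection on layer sizes can be checked with a small plain-Python sketch that mirrors the list comprehension used to build the layers. The helper name `mlp_layer_shapes` is hypothetical, and the input width of 63 assumes a 3D position encoded with 10 frequency bands, i.e. 3 * (1 + 2 * 10).

```python
def mlp_layer_shapes(d_input: int, n_layers: int = 8, d_filter: int = 256,
                     skip: tuple = (4,)) -> list:
    """Return (in_features, out_features) for each linear layer of the trunk."""
    shapes = [(d_input, d_filter)]
    for i in range(n_layers - 1):
        # The layer built at list position i + 1 follows the concatenation
        # that happens after layer i, so it needs d_input extra inputs.
        in_features = d_filter + d_input if i in skip else d_filter
        shapes.append((in_features, d_filter))
    return shapes


for index, shape in enumerate(mlp_layer_shapes(63)):
    print(index, shape)
```

Under these assumptions only the layer at index 5 is wider on its input side, because it directly follows the concatenation performed after layer 4.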

The RGBA output points are located in 3D space, so to composite them into an image you need to apply the volume integral described in Equations 1-3 of Section 4 of the paper. Essentially, a weighted sum over all samples along the line of sight of each pixel gives the estimated color of that pixel. Each RGB sample is weighted by its alpha (opacity) value: a higher alpha indicates a higher likelihood that the sampled region is opaque, so points further along the ray are more likely to be occluded. The cumulative product ensures that those further points are suppressed.
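The weighted sum described above can be worked through for a single ray in plain Python. This is a minimal sketch: the function name `composite_ray` and the list-based inputs are assumptions for illustration, and it leaves out the noise, background, and depth outputs of a full renderer.

```python
import math


def composite_ray(sigmas: list, colors: list, dists: list) -> tuple:
    """Alpha-composite samples along one ray, ordered from near to far.

    sigmas: density per sample; colors: (r, g, b) per sample;
    dists: length of the interval that each sample covers.
    """
    weights = []
    transmittance = 1.0  # probability that the ray reaches the current sample
    for sigma, dist in zip(sigmas, dists):
        alpha = 1.0 - math.exp(-max(sigma, 0.0) * dist)  # opacity of this interval
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha  # exclusive cumulative product of (1 - alpha)
    rgb = tuple(sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3))
    return rgb, weights


# Empty space first, then an opaque green surface that hides the blue sample behind it.
rgb, weights = composite_ray(
    sigmas=[0.0, 1e9, 1e9],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    dists=[1.0, 1.0, 1.0],
)
```

In this example the first sample is transparent, the second absorbs everything, and the third receives zero weight because the running transmittance has already dropped to zero.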

Volume rendering of the raw NeRF model output:

```python
def raw2outputs(
    raw: torch.Tensor,
    z_vals: torch.Tensor,
    rays_d: torch.Tensor,
    raw_noise_std: float = 0.0,
    white_bkgd: bool = False,
) -> Tuple[torch.Tensor, torch.Tensor, torch.Tensor, torch.Tensor]:
    """Convert the raw NeRF output into RGB and other maps."""

    # Difference between consecutive elements of `z_vals`, i.e. the length of
    # the interval between neighboring samples. [n_rays, n_samples]
    dists = z_vals[..., 1:] - z_vals[..., :-1]
    # The last sample has no successor, so it gets an effectively infinite interval.
    dists = torch.cat([dists, 1e10 * torch.ones_like(dists[..., :1])], dim=-1)

    # Multiply each distance by the norm of its corresponding ray direction
    # to convert to real-world distance (accounts for non-unit directions).
    dists = dists * torch.norm(rays_d[..., None, :], dim=-1)

    # Add noise to the model's density predictions. Used to regularize the
    # network during training (prevents floater artifacts).
    noise = 0.
    if raw_noise_std > 0.:
        noise = torch.randn(raw[..., 3].shape, device=raw.device) * raw_noise_std

    # Predict density of each sample along each ray. Higher values imply
    # higher likelihood of being absorbed at this point. [n_rays, n_samples]
    alpha = 1.0 - torch.exp(-nn.functional.relu(raw[..., 3] + noise) * dists)

    # Compute weight for RGB of each sample along each ray. [n_rays, n_samples]
    # The higher the alpha, the lower subsequent weights are driven.
    weights = alpha * cumprod_exclusive(1. - alpha + 1e-10)

    # Compute weighted RGB map.
    rgb = torch.sigmoid(raw[..., :3])  # [n_rays, n_samples, 3]
    rgb_map = torch.sum(weights[..., None] * rgb, dim=-2)  # [n_rays, 3]

    # Estimated depth map is predicted distance.
    depth_map = torch.sum(weights * z_vals, dim=-1)

    # Disparity map is inverse depth.
    disp_map = 1. / torch.max(1e-10 * torch.ones_like(depth_map),
                              depth_map / torch.sum(weights, -1))

    # Sum of weights along each ray. In [0, 1] up to numerical error.
    acc_map = torch.sum(weights, dim=-1)

    # To composite onto a white background, use the accumulated alpha map.
    if white_bkgd:
        rgb_map = rgb_map + (1. - acc_map[..., None])

    return rgb_map, depth_map, acc_map, weights


def cumprod_exclusive(tensor: torch.Tensor) -> torch.Tensor:
    """(Courtesy of https://github.com/krrish94/nerf-pytorch)"""
    # Compute regular cumprod first.
    cumprod = torch.cumprod(tensor, -1)
    # "Roll" the elements along dimension 'dim' by 1 element.
    cumprod = torch.roll(cumprod, 1, -1)
    # Replace the first element by "1" as this is what tf.cumprod(..., exclusive=True) does.
    cumprod[..., 0] = 1.
    return cumprod
```

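Question: what is the main job of this function, what goes in, and what comes out? `raw2outputs` takes the raw network output for every sample (`raw`, with RGB and density in the last dimension), the sample depths `z_vals`, and the ray directions `rays_d`. It returns the per-ray RGB map, the depth map, the accumulated opacity map, and the per-sample weights.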

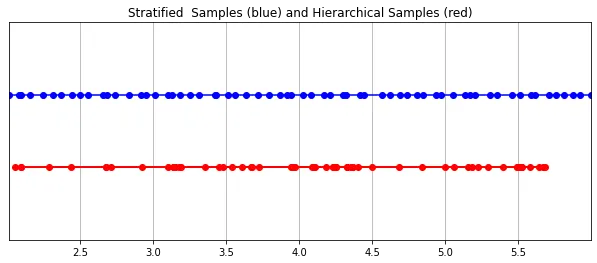

The RGB value finally picked up by the camera is the accumulation of light samples along the ray passing through the pixel. Classical volume rendering accumulates points along the ray and integrates over them, estimating at each point the probability that the ray has traveled that far without hitting any particle. Each pixel therefore needs sample points along the ray that passes through it. To best approximate the integral, the authors' stratified sampling method divides the space evenly into N bins and draws one sample uniformly from each bin. Rather than drawing samples at fixed, equally spaced positions, stratified sampling lets the model sample in continuous space, which conditions the network to learn over continuous space.
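As a minimal illustration of "one uniform draw per bin", the plain-Python sketch below samples depths for a single ray. The function name `stratified_depths` and the `rng` argument are assumptions for illustration; it splits the range between `near` and `far` into equal-width bins, which is a simplification and not necessarily how any given PyTorch implementation places its bin edges.

```python
import random


def stratified_depths(near: float, far: float, n_samples: int, rng=None) -> list:
    """Draw one depth uniformly at random from each of n_samples equal bins."""
    if rng is None:
        rng = random.Random()
    width = (far - near) / n_samples
    # Bin i covers [near + i * width, near + (i + 1) * width).
    return [near + (i + rng.random()) * width for i in range(n_samples)]


depths = stratified_depths(2.0, 6.0, n_samples=8, rng=random.Random(0))
```

Because every bin contributes exactly one sample, the depths stay sorted and cover the whole ray, yet their exact positions change from draw to draw.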


Stratified sampling implemented in PyTorch:

```python
def sample_stratified(
    rays_o: torch.Tensor,
    rays_d: torch.Tensor,
    near: float,
    far: float,
    n_samples: int,
    perturb: Optional[bool] = True,
    inverse_depth: bool = False,
) -> Tuple[torch.Tensor, torch.Tensor]:
    """Sample along ray from regularly-spaced bins."""

    # Grab samples for space integration along ray
    t_vals = torch.linspace(0., 1., n_samples, device=rays_o.device)
    if not inverse_depth:
        # Sample linearly between `near` and `far`
        z_vals = near * (1. - t_vals) + far * (t_vals)
    else:
        # Sample linearly in inverse depth (disparity)
        z_vals = 1. / (1. / near * (1. - t_vals) + 1. / far * (t_vals))

    # Draw uniform samples from bins along ray
    if perturb:
        mids = .5 * (z_vals[1:] + z_vals[:-1])
        upper = torch.concat([mids, z_vals[-1:]], dim=-1)
        lower = torch.concat([z_vals[:1], mids], dim=-1)
        t_rand = torch.rand([n_samples], device=z_vals.device)
        z_vals = lower + (upper - lower) * t_rand
    z_vals = z_vals.expand(list(rays_o.shape[:-1]) + [n_samples])

    # Apply scale from `rays_d` and offset from `rays_o` to samples
    # pts: (width, height, n_samples, 3)
    pts = rays_o[..., None, :] + rays_d[..., None, :] * z_vals[..., :, None]
    return pts, z_vals
```

The radiance field is represented by two multilayer perceptrons: one operates at a coarse level and encodes the broad structural properties of the scene; the other refines the details at a fine level, which makes thin and complex structures such as meshes and branches possible. The samples they receive also differ: the coarse model processes wide, mostly regularly spaced samples along the whole ray, while the fine model hones in on regions with strong priors to obtain the salient information.

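This "honing" is accomplished by the hierarchical volume sampling procedure. 3D space is in fact very sparse and full of occlusions, so most points contribute little to the rendered image. It is therefore more beneficial to oversample the regions that are likely to contribute to the integral. The authors apply the learned, normalized weights to the first set of samples to create a PDF along the ray, then apply inverse transform sampling to that PDF to gather a second set of samples. This set is combined with the first set and fed to the fine network to produce the final output.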
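Inverse transform sampling can be sketched for one ray in plain Python: build a CDF from the weights, draw uniform numbers, and map each number back to a position inside the bin whose CDF interval contains it. The function name `sample_from_weights` and its arguments are hypothetical, and the sketch assumes at least one weight is positive.

```python
import bisect
import random


def sample_from_weights(bin_edges: list, weights: list, n_samples: int, rng=None) -> list:
    """Draw positions from the piecewise-constant PDF defined by weights.

    bin_edges has len(weights) + 1 entries; weights[j] belongs to the bin
    between bin_edges[j] and bin_edges[j + 1].
    """
    if rng is None:
        rng = random.Random()
    total = sum(weights)
    cdf = [0.0]
    for w in weights:
        cdf.append(cdf[-1] + w / total)  # normalized cumulative sum

    samples = []
    for _ in range(n_samples):
        u = rng.random()
        # Index of the bin whose CDF interval contains u, clamped to the last bin.
        j = min(bisect.bisect_right(cdf, u) - 1, len(weights) - 1)
        span = cdf[j + 1] - cdf[j]
        t = (u - cdf[j]) / span if span > 0 else 0.5
        samples.append(bin_edges[j] + t * (bin_edges[j + 1] - bin_edges[j]))
    return samples


# All of the weight sits in the middle bin, so every new sample lands in [1, 2).
new_depths = sample_from_weights([0.0, 1.0, 2.0, 3.0], [0.0, 1.0, 0.0], 100,
                                 rng=random.Random(0))
```

Bins with large weights occupy a large share of the CDF, so they receive proportionally more of the second-pass samples, which is exactly the oversampling described above.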

Hierarchical sampling implemented in PyTorch:

```python
def sample_hierarchical(
    rays_o: torch.Tensor,
    rays_d: torch.Tensor,
    z_vals: torch.Tensor,
    weights: torch.Tensor,
    n_samples: int,
    perturb: bool = False,
) -> Tuple[torch.Tensor, torch.Tensor, torch.Tensor]:
    """Apply hierarchical sampling to the rays."""

    # Draw samples from PDF using z_vals as bins and weights as probabilities.
    z_vals_mid = .5 * (z_vals[..., 1:] + z_vals[..., :-1])
    new_z_samples = sample_pdf(z_vals_mid, weights[..., 1:-1], n_samples, perturb=perturb)
    new_z_samples = new_z_samples.detach()

    # Resample points from ray based on PDF.
    z_vals_combined, _ = torch.sort(torch.cat([z_vals, new_z_samples], dim=-1), dim=-1)
    # [N_rays, N_samples + n_samples, 3]
    pts = rays_o[..., None, :] + rays_d[..., None, :] * z_vals_combined[..., :, None]
    return pts, z_vals_combined, new_z_samples


def sample_pdf(
    bins: torch.Tensor,
    weights: torch.Tensor,
    n_samples: int,
    perturb: bool = False,
) -> torch.Tensor:
    """Apply inverse transform sampling to a weighted set of points."""

    # Normalize weights to get PDF. [n_rays, weights.shape[-1]]
    pdf = (weights + 1e-5) / torch.sum(weights + 1e-5, -1, keepdims=True)

    # Convert PDF to CDF.
    cdf = torch.cumsum(pdf, dim=-1)  # [n_rays, weights.shape[-1]]
    cdf = torch.concat([torch.zeros_like(cdf[..., :1]), cdf], dim=-1)  # [n_rays, weights.shape[-1] + 1]

    # Take sample positions to grab from CDF. Linear when perturb == 0.
    if not perturb:
        u = torch.linspace(0., 1., n_samples, device=cdf.device)
        u = u.expand(list(cdf.shape[:-1]) + [n_samples])  # [n_rays, n_samples]
    else:
        u = torch.rand(list(cdf.shape[:-1]) + [n_samples], device=cdf.device)  # [n_rays, n_samples]

    # Find indices along CDF where values in u would be placed.
    u = u.contiguous()  # Returns contiguous tensor with same values.
    inds = torch.searchsorted(cdf, u, right=True)  # [n_rays, n_samples]

    # Clamp indices that are out of bounds.
    below = torch.clamp(inds - 1, min=0)
    above = torch.clamp(inds, max=cdf.shape[-1] - 1)
    inds_g = torch.stack([below, above], dim=-1)  # [n_rays, n_samples, 2]

    # Sample from cdf and the corresponding bin centers.
    matched_shape = list(inds_g.shape[:-1]) + [cdf.shape[-1]]
    cdf_g = torch.gather(cdf.unsqueeze(-2).expand(matched_shape), dim=-1, index=inds_g)
    bins_g = torch.gather(bins.unsqueeze(-2).expand(matched_shape), dim=-1, index=inds_g)

    # Convert samples to ray length.
    denom = (cdf_g[..., 1] - cdf_g[..., 0])
    # NOTE: the source text is truncated after `denom = torch.where(denom <`.
    # The remaining lines are a reconstruction (an assumption): guard against
    # near-zero CDF intervals, then interpolate linearly within each bin.
    denom = torch.where(denom < 1e-5, torch.ones_like(denom), denom)
    t = (u - cdf_g[..., 0]) / denom
    samples = bins_g[..., 0] + t * (bins_g[..., 1] - bins_g[..., 0])
    return samples  # [n_rays, n_samples]
```

### 4 Training

The architecture recommended in the paper for training NeRF, with 8 layers per network and 256 dimensions per layer, consumes a lot of memory during training. One way to mitigate this is to split the forward pass into smaller chunks and accumulate gradients across those chunks. Note the difference from minibatching: the gradients are accumulated over a single minibatch of sampled rays, which may have been gathered into chunks. Without a GPU comparable to the NVIDIA V100 used in the paper, you will probably have to adjust the chunk size to avoid OOM errors. The Colab notebook uses a much smaller architecture and more modest chunk sizes.

I personally found NeRF somewhat tricky to train because of local minima, even with many of the default values. Some techniques that helped include center cropping during the early training iterations and early restarts. Feel free to experiment with different hyperparameters and techniques to further improve training convergence.

#### Initialization

```python
def init_models():
    """Initialize models, encoders, and optimizer for NeRF training.

    Relies on module-level hyperparameters (d_input, n_freqs, lr, ...) and on
    an EarlyStopping helper that are defined elsewhere and not shown here.
    """
    # Encoders
    encoder = PositionalEncoder(d_input, n_freqs, log_space=log_space)
    encode = lambda x: encoder(x)

    # View direction encoders
    if use_viewdirs:
        encoder_viewdirs = PositionalEncoder(d_input, n_freqs_views, log_space=log_space)
        encode_viewdirs = lambda x: encoder_viewdirs(x)
        d_viewdirs = encoder_viewdirs.d_output
    else:
        encode_viewdirs = None
        d_viewdirs = None

    # Models
    model = NeRF(encoder.d_output, n_layers=n_layers, d_filter=d_filter, skip=skip,
                 d_viewdirs=d_viewdirs)
    model.to(device)
    model_params = list(model.parameters())
    if use_fine_model:
        fine_model = NeRF(encoder.d_output, n_layers=n_layers, d_filter=d_filter, skip=skip,
                          d_viewdirs=d_viewdirs)
        fine_model.to(device)
        model_params = model_params + list(fine_model.parameters())
    else:
        fine_model = None

    # Optimizer
    optimizer = torch.optim.Adam(model_params, lr=lr)

    # Early stopping
    warmup_stopper = EarlyStopping(patience=50)

    return model, fine_model, encode, encode_viewdirs, optimizer, warmup_stopper
```
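The idea of splitting the forward pass into chunks, mentioned in the training notes, can be sketched in plain Python. The names `get_chunks` and `render_in_chunks` and the list-based inputs are assumptions for illustration; the sketch shows only the splitting and re-joining, not the gradient bookkeeping a tensor framework would handle.

```python
def get_chunks(items: list, chunksize: int) -> list:
    """Split a sequence into consecutive chunks of at most chunksize items."""
    return [items[i:i + chunksize] for i in range(0, len(items), chunksize)]


def render_in_chunks(rays: list, render_fn, chunksize: int = 4) -> list:
    """Apply render_fn chunk by chunk and join the results in order.

    Peak memory is bounded by one chunk, while the joined result is the
    same as rendering the whole batch at once.
    """
    outputs = []
    for chunk in get_chunks(rays, chunksize):
        outputs.extend(render_fn(chunk))
    return outputs


# A stand-in "renderer" that just doubles each ray index.
pixels = render_in_chunks(list(range(10)), lambda chunk: [2 * r for r in chunk])
```

A smaller `chunksize` lowers peak memory at the cost of more calls, which is the trade-off to tune when a GPU runs out of memory.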

```python
import numpy as np
from tqdm import trange


def train():
    """Launch training session for NeRF."""
    # Shuffle rays across all images.
    if not one_image_per_step:
        height, width = images.shape[1:3]
        all_rays = torch.stack(
            [torch.stack(get_rays(height, width, focal, p), 0) for p in poses[:n_training]], 0)
        rays_rgb = torch.cat([all_rays, images[:, None]], 1)
        rays_rgb = torch.permute(rays_rgb, [0, 2, 3, 1, 4])
        rays_rgb = rays_rgb.reshape([-1, 3, 3])
        rays_rgb = rays_rgb.type(torch.float32)
        rays_rgb = rays_rgb[torch.randperm(rays_rgb.shape[0])]
        i_batch = 0

    train_psnrs = []
    val_psnrs = []
    iternums = []
    for i in trange(n_iters):
        model.train()

        if one_image_per_step:
            # Randomly pick an image as the target.
            target_img_idx = np.random.randint(images.shape[0])
            target_img = images[target_img_idx].to(device)
            # NOTE: the source text is truncated here. It breaks off at
            # `if center_crop and i` and resumes at `= rays_rgb.shape[0]:`, so
            # the center-crop step, the ray generation for the chosen image
            # (rays_o, rays_d), and the start of the shuffled-rays branch are
            # missing from this listing.
        else:
            # The `else` and the condition below are reconstructed from context
            # (an assumption): reshuffle once every ray has been used.
            if i_batch >= rays_rgb.shape[0]:
                rays_rgb = rays_rgb[torch.randperm(rays_rgb.shape[0])]
                i_batch = 0
        target_img = target_img.reshape([-1, 3])

        # Run one iteration of TinyNeRF and get the rendered RGB image.
        outputs = nerf_forward(rays_o, rays_d, near, far, encode, model,
                               kwargs_sample_stratified=kwargs_sample_stratified,
                               n_samples_hierarchical=n_samples_hierarchical,
                               kwargs_sample_hierarchical=kwargs_sample_hierarchical,
                               fine_model=fine_model,
                               viewdirs_encoding_fn=encode_viewdirs,
                               chunksize=chunksize)

        # Backprop!
        rgb_predicted = outputs['rgb_map']
        loss = torch.nn.functional.mse_loss(rgb_predicted, target_img)
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()
        psnr = -10. * torch.log10(loss)
        train_psnrs.append(psnr.item())

        # Evaluate testimg at given display rate.
        if i % display_rate == 0:
            model.eval()
            height, width = testimg.shape[:2]
            rays_o, rays_d = get_rays(height, width, focal, testpose)
            rays_o = rays_o.reshape([-1, 3])
            rays_d = rays_d.reshape([-1, 3])
            outputs = nerf_forward(rays_o, rays_d, near, far, encode, model,
                                   kwargs_sample_stratified=kwargs_sample_stratified,
                                   n_samples_hierarchical=n_samples_hierarchical,
                                   kwargs_sample_hierarchical=kwargs_sample_hierarchical,
                                   fine_model=fine_model,
                                   viewdirs_encoding_fn=encode_viewdirs,
                                   chunksize=chunksize)

            rgb_predicted = outputs['rgb_map']
            loss = torch.nn.functional.mse_loss(rgb_predicted, testimg.reshape(-1, 3))
            val_psnr = -10. * torch.log10(loss)
            val_psnrs.append(val_psnr.item())
            iternums.append(i)

        # Check PSNR for issues and stop if any are found.
        if i == warmup_iters - 1:
            # NOTE: the source text is truncated after `if val_psnr <`. The
            # threshold and the return values are reconstructed from the driver
            # loop under "Training" (an assumption); any further checks that
            # used `warmup_stopper` are missing from this listing.
            if val_psnr < warmup_min_fitness:
                return False, train_psnrs, val_psnrs

    return True, train_psnrs, val_psnrs
```

#### Training

```python
# Run training session(s)
for _ in range(n_restarts):
    model, fine_model, encode, encode_viewdirs, optimizer, warmup_stopper = init_models()
    success, train_psnrs, val_psnrs = train()
    if success and val_psnrs[-1] >= warmup_min_fitness:
        print('Training successful!')
        break

print('Done!')
```

Radiance fields mark a dramatic change in the way 3D data is processed. NeRF models, and differentiable rendering more broadly, are rapidly bridging the gap between image creation and volumetric scene creation. While our components may look very complex, the countless other methods inspired by vanilla NeRF show that the basic concept (continuous function approximator + differentiable renderer) is a solid foundation for building a wide variety of solutions that apply to an almost unlimited range of situations.

Original article: NeRF From Nothing: A Tutorial with PyTorch | Towards Data Science

Original link: https://mp.weixin.qq.com/s/zxJAIpAmLgsIuTsPqQqOVg
