
Reviewer | Chonglou

Generative artificial intelligence has attracted considerable interest in recent months for its ability to create unique text, sounds, and images. However, the potential of generative AI is not limited to creating new data.

The techniques underlying generative AI (such as transformers and diffusion models) can power many other applications, including information search and discovery. In particular, generative AI could revolutionize image search, allowing people to browse visual information in ways that were previously impossible.

Here is what you need to know about how generative AI is redefining the image search experience.

Traditional image search relies on text descriptions, tags, and other metadata attached to images, which limits users' search options to information that has been explicitly attached to each image. People who upload images must think carefully about the queries others are likely to enter so that their images can be discovered. Users searching for images, in turn, must try to guess what kind of description the uploader might have added.
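To make this limitation concrete, here is a minimal sketch of tag-based keyword search. The catalog, file names, and tags are hypothetical, and real search engines add stemming, ranking, and synonym handling, but the core constraint is the same: a query can only match words that someone attached to the image.

```python
# Hypothetical catalog: each image is described only by uploader-supplied tags.
CATALOG: dict[str, set[str]] = {
    "forest_001.jpg": {"forest", "trees", "nature"},
    "forest_002.jpg": {"pine", "mist", "morning"},
    "beach_001.jpg": {"beach", "sunset"},
    "untagged_017.jpg": set(),  # uploaded with no metadata at all
}


def keyword_search(query: str, catalog: dict[str, set[str]]) -> list[str]:
    """Return images whose tags share at least one exact word with the query."""
    terms = set(query.lower().split())
    hits = [name for name, tags in catalog.items() if terms & tags]
    return sorted(hits)


if __name__ == "__main__":
    print(keyword_search("misty pine forest", CATALOG))
```

Here the query word "misty" never matches the tag "mist", and `untagged_017.jpg` can never be returned, no matter what it actually shows.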

As the saying goes, "a picture is worth a thousand words." However, there is a limit to how much can be written in an image description. An image can also be described in many ways, depending on how a person views it. People sometimes search for images based on the objects they contain, and sometimes based on features such as style, lighting, or location. Unfortunately, images are rarely accompanied by such rich information. Many images are uploaded with little or no information attached, which makes them hard to find through search.

This is where AI image search comes in. There are many approaches to AI image search, and different companies have their own proprietary technologies. However, some techniques are common to all of them.

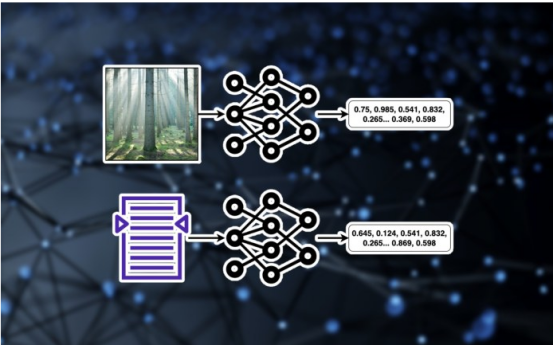

Embeddings are at the core of AI image search and many other deep learning systems. An embedding is a numerical representation of data such as an image or a piece of text. For example, a 512×512 image contains roughly 260,000 pixels (or features). By training on millions of images, an embedding model learns to represent visual data with far fewer dimensions. Image embeddings are useful in many areas, including image compression, generating new images, and comparing the visual properties of different images. The same mechanism applies to other modalities such as text: a text embedding is a low-dimensional representation of the content of a text excerpt. Text embeddings have many applications, including similarity search and retrieval augmentation for large language models (LLMs).
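As a rough illustration, the sketch below computes the raw feature count quoted above and compares a few embeddings with cosine similarity, a common (but not the only) way to measure how close two embeddings are. The 4-dimensional vectors are invented for the example; real embedding models produce vectors with hundreds of dimensions that are learned from data rather than written by hand.

```python
import math

# Raw input size from the text: one value per pixel of a 512x512 image.
RAW_FEATURES = 512 * 512  # 262,144, i.e. "approximately 260,000"


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 4-dimensional embeddings, invented for illustration only.
forest_photo = [0.9, 0.1, 0.3, 0.0]
forest_painting = [0.8, 0.2, 0.4, 0.1]
city_photo = [0.1, 0.9, 0.0, 0.4]

if __name__ == "__main__":
    print(RAW_FEATURES)
    print(round(cosine_similarity(forest_photo, forest_painting), 3))
    print(round(cosine_similarity(forest_photo, city_photo), 3))
```

With these made-up vectors, the two forest embeddings score about 0.98 while the forest/city pair scores about 0.19, which is the kind of gap a search system relies on.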

How Artificial Intelligence Image Search Works

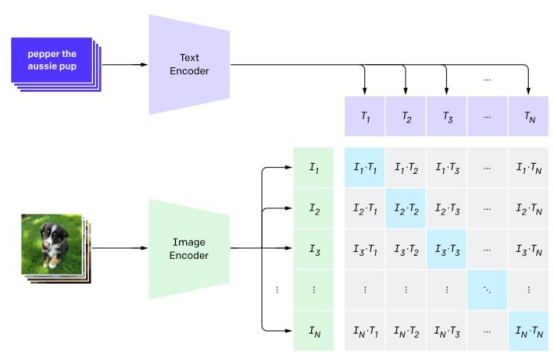

Contrastive Language-Image Pre-training (CLIP) learns joint embeddings of text and images
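CLIP learns this joint space by pulling the embeddings of matching images and captions together and pushing mismatched pairs apart. The sketch below is a minimal, dependency-free version of that kind of symmetric contrastive loss over a small batch, assuming the embeddings have already been produced by some image and text encoders (not shown). The fixed `temperature` of 0.07 is an assumption for the example; CLIP starts from that value but learns the temperature during training, and real training runs on GPUs with very large batches.

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy_diagonal(logits: list[list[float]]) -> float:
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)  # subtract the max for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_style_loss(
    image_embs: list[list[float]],
    text_embs: list[list[float]],
    temperature: float = 0.07,  # fixed here; CLIP learns this value
) -> float:
    """Symmetric contrastive loss; row i of each list is a matching pair."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    image_to_text = cross_entropy_diagonal(logits)  # each image picks its caption
    text_to_image = cross_entropy_diagonal([list(col) for col in zip(*logits)])
    return (image_to_text + text_to_image) / 2


if __name__ == "__main__":
    imgs = [[1.0, 0.0], [0.0, 1.0]]
    print(clip_style_loss(imgs, [[1.0, 0.0], [0.0, 1.0]]))  # aligned pairs: low loss
    print(clip_style_loss(imgs, [[0.0, 1.0], [1.0, 0.0]]))  # swapped pairs: high loss
```

Minimizing this loss over many batches is what places an image and its description near each other in the shared embedding space.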

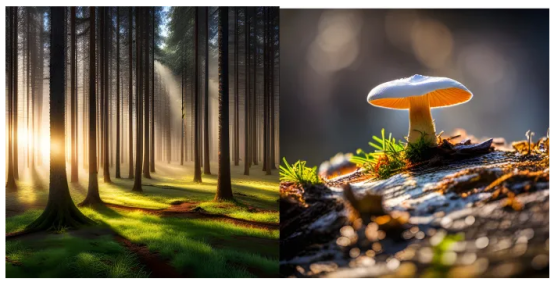

Now we have a tool for converting text into visual embeddings. When we feed a text description to this joint model, it produces a text embedding that lives in the same space as the image embeddings. We can then compare that embedding with the embeddings of the images in the database and retrieve the most relevant ones. This is the basic principle of AI image search. Its advantage is that images can be found from a description of their visual features even when that description was never registered in their metadata. You can use rich search terms that were not possible before, such as "Lush green forest shrouded in morning mist, bright sunshine filtering through the tall pine forest, and some mushrooms growing on the grass."
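The retrieval step can be sketched as a nearest-neighbor search over precomputed image embeddings. In this minimal sketch, the index contents and the query vector are hypothetical stand-ins for what a joint model such as CLIP would produce; production systems typically use an approximate nearest-neighbor index instead of scoring every image.

```python
import math

# Hypothetical precomputed image embeddings; in a real system these would come
# from the image encoder of a joint text-image model such as CLIP.
IMAGE_INDEX: dict[str, list[float]] = {
    "misty_pines.jpg": [0.9, 0.8, 0.1],
    "mushrooms_on_grass.jpg": [0.7, 0.9, 0.2],
    "desert_dunes.jpg": [0.1, 0.0, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search_images(query_embedding: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Rank every indexed image by similarity to the query and return the top k."""
    scored = [(cosine(query_embedding, emb), name) for name, emb in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


if __name__ == "__main__":
    # Hypothetical stand-in for the text encoder's output for the forest query.
    query = [0.8, 0.85, 0.1]
    print(search_images(query, IMAGE_INDEX))
```

Neither file name nor any tag needs to contain the word "forest"; the ranking depends only on how close the vectors are.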


In the example above, the AI search returned a set of images whose visual characteristics matched the query. Many of their text descriptions do not contain the query's keywords, but their embeddings are similar to the query's embedding. Without AI image search, finding the right image would be much more difficult.

Sometimes the image a user is looking for does not exist, and even AI search cannot find it. In this case, generative AI can help users get the result they want in one of two ways.

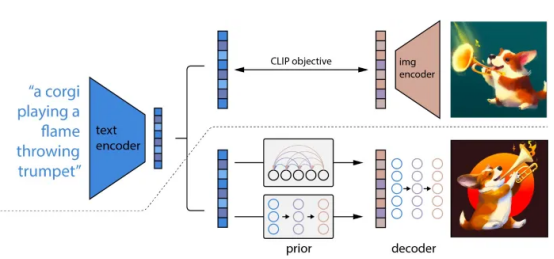

First, we can create a new image from scratch based on the user's query. This approach uses a text-to-image generative model (such as Stable Diffusion or DALL-E) to create an embedding of the user's query and then uses that embedding to generate the image. Generative models combine joint embedding models such as Contrastive Language-Image Pre-training (CLIP) with other architectures such as transformers or diffusion models to turn the numerical values of the embedding into stunning images.

DALL-E uses Contrastive Language-Image Pre-training (CLIP) and diffusion to generate images from text
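Diffusion models, one of the architectures mentioned above, are trained by gradually adding Gaussian noise to images and learning to reverse that process. The sketch below shows only the forward (noising) step in the style of the DDPM formulation, using that paper's linear noise schedule as an assumption; the learned denoiser that runs the process in reverse, conditioned on the text embedding, is omitted. The "image" is a hypothetical flat list of pixel values scaled to [-1, 1].

```python
import math
import random


def linear_betas(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Linear noise schedule; these defaults follow the DDPM paper (assumed here)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def noise_image(x0: list[float], t: int, alpha_bars: list[float], rng: random.Random) -> list[float]:
    """Sample x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) in one step."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_betas(1000))
    image = [0.5, -0.2, 0.9, -1.0]  # hypothetical 4-pixel "image"
    print(noise_image(image, 10, alpha_bars, rng))   # still close to the original
    print(noise_image(image, 999, alpha_bars, rng))  # almost pure noise
```

Generation runs in the opposite direction: starting from pure noise, a trained network removes a little noise at each step, and the text embedding steers what image emerges.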


The second method is to start from an existing image and edit it with a generative model to suit the user's preferences. For example, suppose the retrieved images show the pine forest, but the mushrooms on the grass are missing. The user can pick a suitable image as a starting point and use a generative model to add mushrooms to it.
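One common way to implement this kind of edit is inpainting: the user marks the region to change, a generative model fills it in, and the result keeps the original pixels everywhere else. The sketch below shows only that final compositing step, assuming the generated pixels already exist; the generator itself is not shown, and the flat-list image representation is a simplification for illustration.

```python
def composite(original: list[float], generated: list[float], mask: list[float]) -> list[float]:
    """Blend a generated fill into an image using an edit mask.

    mask == 0 keeps the original pixel, mask == 1 takes the generated pixel,
    and values in between blend the two (useful for soft edges).
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated, and mask must have the same length")
    return [m * g + (1.0 - m) * o for o, g, m in zip(original, generated, mask)]


if __name__ == "__main__":
    original = [0.2, 0.2, 0.2, 0.2]   # hypothetical grass pixels
    generated = [0.9, 0.9, 0.9, 0.9]  # hypothetical model output ("mushrooms")
    mask = [0.0, 1.0, 1.0, 0.5]       # edit the middle, soften the last pixel
    print(composite(original, generated, mask))
```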

Generative AI creates a whole new paradigm that blurs the line between discovery and creativity. Within a single interface, users can find images, edit them, or create entirely new ones.
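A single interface that both finds and creates images can be sketched as a retrieval step with a generative fallback. In this minimal sketch, the similarity threshold of 0.8 and the `generate` callback are hypothetical; a real system would tune the threshold and plug in an actual text-to-image model.

```python
import math
from typing import Callable


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def find_or_create(
    query: list[float],
    index: dict[str, list[float]],
    generate: Callable[[list[float]], str],
    threshold: float = 0.8,  # hypothetical cut-off; would need tuning in practice
) -> tuple[str, str]:
    """Return ("found", name) for a close enough match, else ("generated", result)."""
    best_name, best_score = None, -1.0
    for name, embedding in index.items():
        score = cosine(query, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_name is not None and best_score >= threshold:
        return ("found", best_name)
    # Nothing in the collection is close enough: fall back to generation.
    return ("generated", generate(query))


if __name__ == "__main__":
    index = {"misty_pines.jpg": [1.0, 0.0]}  # hypothetical one-image collection

    def fake_generate(query: list[float]) -> str:
        """Stand-in for a real text-to-image model."""
        return "new_image.png"

    print(find_or_create([1.0, 0.1], index, fake_generate))
    print(find_or_create([0.0, 1.0], index, fake_generate))
```

An editing path could slot in between these two branches, for example by passing a close-but-imperfect match to an inpainting model instead of generating from scratch.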

Original title: How generative AI is redefining image search, by Ben Dickson
