On September 25, 2023, Alibaba Cloud open-sourced Qwen-14B, the 14-billion-parameter Tongyi Qianwen model, together with its conversational model Qwen-14B-Chat; both are free for commercial use. Qwen-14B performed well in multiple authoritative evaluations, surpassing models of the same size and coming close to Llama2-70B on some metrics. Alibaba Cloud had previously open-sourced the 7-billion-parameter model Qwen-7B, which was downloaded more than 1 million times in just over a month, making it a popular project in the open source community.

Qwen-14B is a high-performance open source model that supports multiple languages. It uses more high-quality data than comparable models: the overall training data exceeds 3 trillion tokens, giving the model stronger reasoning, cognition, planning and memory capabilities. Qwen-14B supports a maximum context window length of 8k.

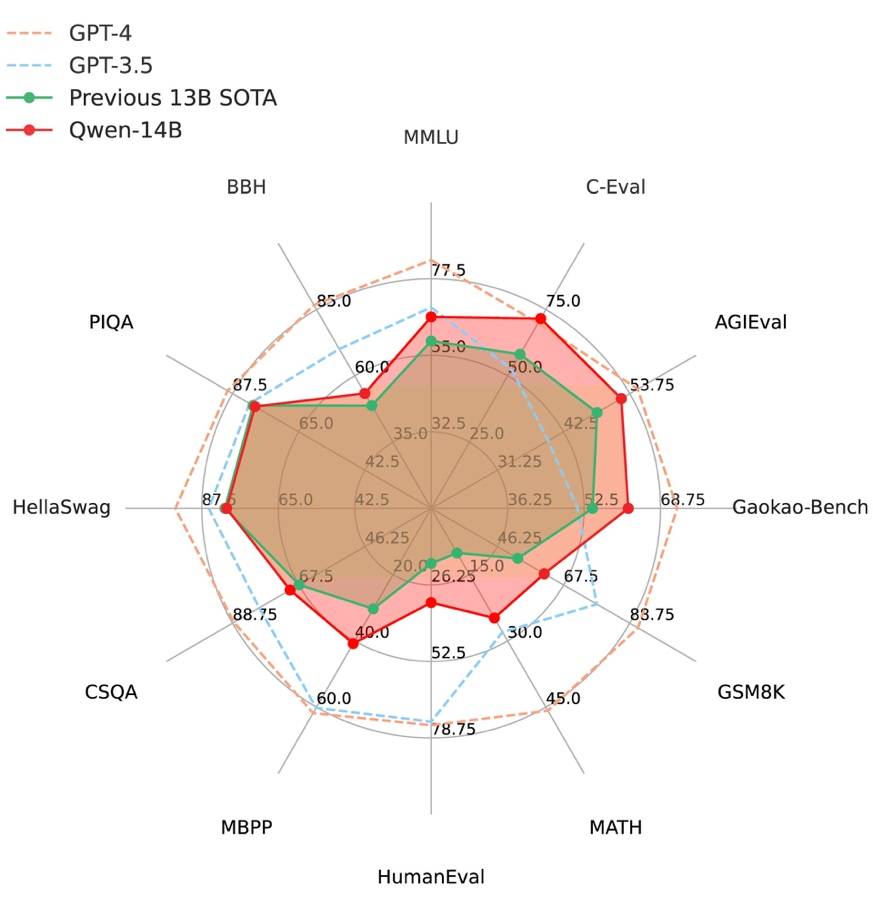

Figure 1: Qwen-14B comprehensively surpasses SOTA large models of the same scale across twelve authoritative evaluations

Qwen-14B-Chat is a dialogue model obtained by fine-grained supervised fine-tuning (SFT) of the base model. Building on the base model's strong performance, Qwen-14B-Chat generates content that is markedly more accurate and better aligned with human preferences, and its creative writing is noticeably more imaginative and varied.

Qwen has strong tool-calling capabilities, which help developers build Qwen-based agents faster. Developers can use simple instructions to teach Qwen to use complex tools, such as using the Code Interpreter tool to execute Python code for complex mathematical calculations, data analysis, and chart drawing. Developers can also build "advanced digital assistants" on Qwen, with capabilities such as multi-document Q&A and long-text writing.
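To make the tool-calling idea more concrete, here is a minimal sketch of how an agent might route a model's tool request to a code-interpreter tool. It is not Qwen's actual interface: the JSON request format (with `tool` and `code` fields) and the names `run_python`, `TOOLS` and `dispatch` are all hypothetical, and the model output is a hard-coded stand-in.

```python
import contextlib
import io
import json


def run_python(code: str) -> str:
    """Toy code-interpreter tool: run Python source and return what it printed.

    Illustration only: exec() is unsafe for untrusted input, and a real
    deployment would need sandboxing.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # fresh globals so separate runs do not share state
    return buffer.getvalue().strip()


# Hypothetical tool registry: tool name -> callable that takes the code string.
TOOLS = {"code_interpreter": run_python}


def dispatch(model_output: str) -> str:
    """Parse a JSON tool request (assumed format) and run the named tool."""
    request = json.loads(model_output)
    tool = TOOLS.get(request["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {request['tool']}")
    return tool(request["code"])


# Stand-in for what a model might emit when asked to compute 2 to the 10th power.
fake_model_output = json.dumps(
    {"tool": "code_interpreter", "code": "print(2 ** 10)"}
)
result = dispatch(fake_model_output)
print(result)
```

In a real agent, the tool's result would be fed back to the model as a further message so that it can compose the final answer; that loop is omitted here.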

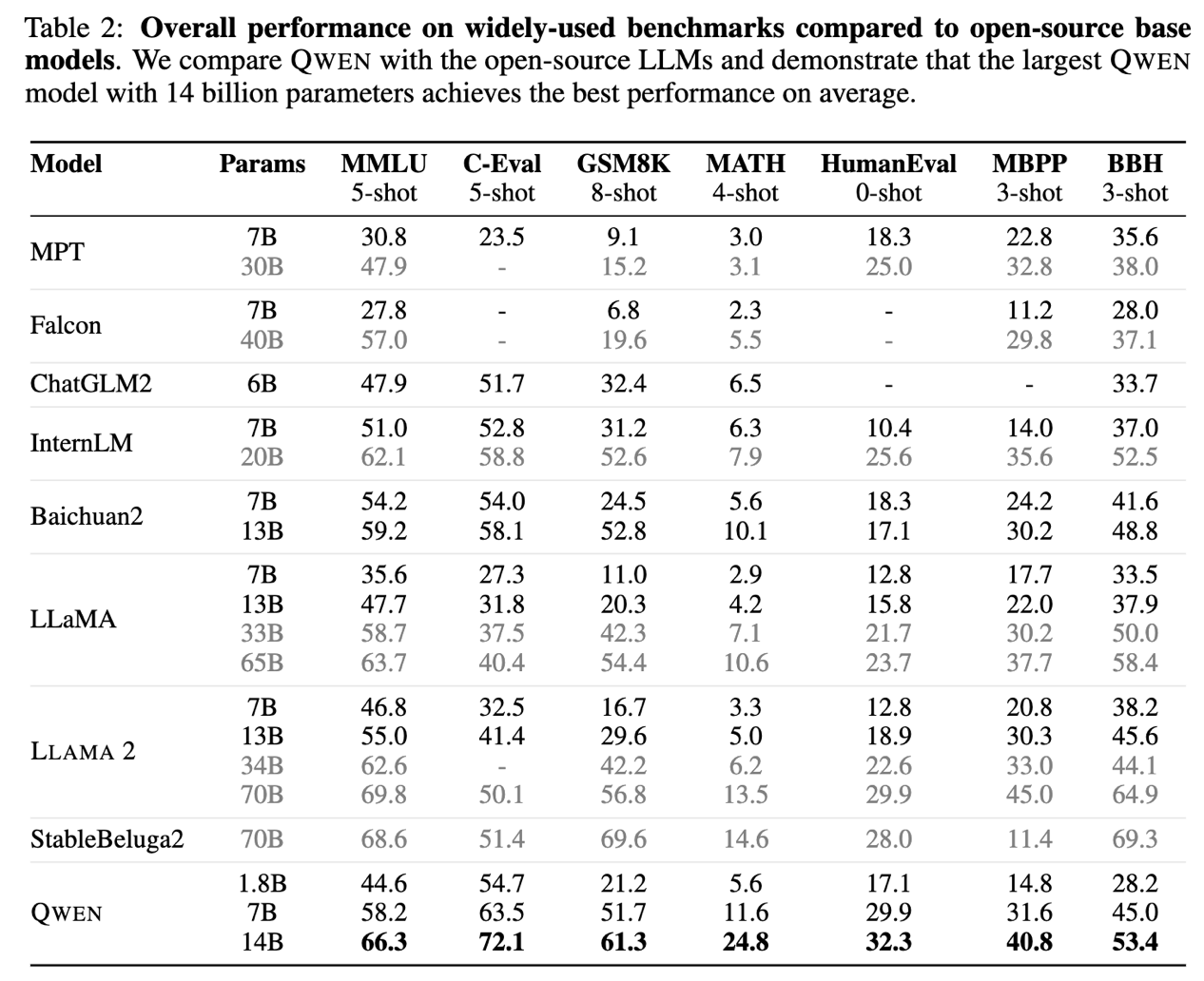

Large language models with parameter counts within the tens of billions are currently the mainstream choice for developers building and iterating on applications. Qwen-14B further raises the performance ceiling for small models: it achieved the best results in 12 authoritative evaluations, including MMLU, C-Eval, GSM8K, MATH, and GaoKao-Bench, surpassing SOTA (state-of-the-art) large models of the same scale in every evaluation and comprehensively outperforming Llama-2-13B. Qwen-7B has also been upgraded, with core metrics improving by up to 22.5%.

Figure 2: Qwen-14B shows stronger performance than models of the same size

Users can download the models directly from the ModelScope (Moda) community, or access and call Qwen-14B and Qwen-14B-Chat through the Alibaba Cloud Lingji platform. Alibaba Cloud provides users with complete services, including model training, inference, deployment and fine-tuning.

In August, Alibaba Cloud open-sourced the 7-billion-parameter Tongyi Qianwen base model Qwen-7B, which went on to top the trending lists of HuggingFace and GitHub. In just over a month, cumulative downloads exceeded 1 million. More than 50 models based on Qwen have appeared in the open source community, and many well-known community tools and frameworks have integrated Qwen.

Tongyi Qianwen is the most deeply and widely used large model in China. Many domestic applications are already connected to Tongyi Qianwen, and their combined monthly active users exceed 100 million. Many small and medium-sized enterprises, scientific research institutions and individual developers are using Tongyi Qianwen to develop their own large models or application products. Examples include Alibaba's Taobao, DingTalk and Future Wizards, as well as external scientific research institutions and startups.

Zhejiang University and Higher Education Press developed the Zhihai-Sanle education vertical model based on Qwen-7B. It is already in use at 12 universities across the country and offers functions such as intelligent question answering, test question generation, learning navigation, and teaching evaluation. The model is available on the Alibaba Cloud Lingji platform and can be called with just one line of code. Zhejiang Youlu Robot Technology Co., Ltd. has integrated Qwen-7B into its road cleaning robot, allowing the robot to interact with users in real time and understand their needs. The robot can analyze and break down users' high-level instructions, perform logical analysis and task planning, and then complete the cleaning tasks.

Alibaba Cloud CTO Zhou Jingren said that Alibaba Cloud will continue to support and promote open source and is committed to building China's large model ecosystem. Alibaba Cloud firmly believes in the power of open source and took the lead in open-sourcing its own large model technology, hoping to let more small and medium-sized enterprises and individual developers access and apply large model technology more quickly.

Alibaba Cloud also leads the development of ModelScope (the Moda community), China's largest open source AI model community, uniting the industry to jointly promote the adoption of large model technology. In the past two months, model downloads in the Moda community have soared from 45 million to 85 million, an increase of nearly 90%.

Attachment:

Moda Community Model address:

Moda community model demo:

Alibaba Cloud Lingji Platform Address:

//m.sbmmt.com/link/da796dcc49ab9fc5ac26db17e02a9e33

GitHub: HuggingFace:
