Alibaba Cloud Linux 3 has recently received a set of optimizations and upgrades that make AI development more efficient. This article is a preview of the series "Introduction to AI Capabilities of Alibaba Cloud Linux 3" and uses a GPU instance to demonstrate how Alibaba Cloud Linux 3 supports the AI ecosystem. Two further series will follow: one introducing the cloud marketplace images based on Alinux, which give users an out-of-the-box AI base software environment, and one introducing the differentiated AI capabilities on AMD platforms. Stay tuned. For more information about Alibaba Cloud Linux 3, visit the official website: https://www.aliyun.com/product/ecs/alinux

When developing artificial intelligence (AI) applications on Linux operating systems, developers may encounter some challenges, which include but are not limited to:

1. GPU driver: To use an NVIDIA GPU for training or inference on Linux, the correct NVIDIA GPU driver must be installed and configured. This can take extra work, because different operating systems and GPU models may require different drivers.

2. AI framework compilation: AI frameworks often have to be compiled, so programming with one on Linux means installing and correctly configuring a suitable compiler and the other build dependencies.

3. Software compatibility: Linux supports a wide range of software and tools, but compatibility issues can arise between versions and distributions, so some programs may not run properly, or may be unavailable, on a given system. Developers therefore need to understand the software compatibility of their working environment and make the necessary configuration changes.

4. Performance issues: The AI software stack is extremely complex and usually needs targeted optimization for specific CPU and GPU models to reach its best performance. Co-optimizing software and hardware is a demanding task that requires deep expertise.

Alibaba Cloud Linux 3 (hereinafter "Alinux 3") is Alibaba Cloud's third-generation cloud server operating system, a commercial distribution developed on the basis of Anolis OS. It gives developers a capable AI development platform: through the OpenAnolis ("Dragon Lizard") community software repository (epao), Alinux 3 achieves full support for the mainstream NVIDIA GPU and CUDA ecosystem, making AI development more convenient and efficient. Alinux 3 also supports the mainstream AI frameworks TensorFlow and PyTorch, along with AI optimizations for CPU platforms such as Intel and AMD. It will also introduce native support for large-model SDKs such as ModelScope and Hugging Face, giving developers a rich set of resources and tools. Together, these make Alinux 3 a complete AI development platform that addresses AI developers' pain points, so they no longer have to wrestle with environment setup.

To address the challenges listed above, Alinux 3 provides the following optimizations and upgrades:

1. By introducing the OpenAnolis ("Dragon Lizard") community software repository (epao), Alinux 3 lets developers install the mainstream NVIDIA GPU drivers and CUDA acceleration libraries with one command, sparing them the work of matching driver versions and the time spent on manual installation.

2. The epao repository also provides packaged versions of the mainstream AI frameworks TensorFlow and PyTorch. Framework dependencies are resolved automatically during installation, so developers do not need to compile anything themselves and can develop directly in the system Python environment.

3. All components are tested for compatibility before the AI capabilities of Alinux 3 are released to developers. Developers can install the corresponding AI capabilities with one command, which avoids the changes to system dependencies that manual environment configuration can require and improves stability in use.

4. Alinux 3 includes AI-specific optimizations for CPUs on different platforms, such as Intel and AMD, to make fuller use of the hardware's performance.

5. To keep pace with the rapid iteration of the AIGC industry, Alinux 3 will also introduce native support for large-model SDKs such as ModelScope and Hugging Face, giving developers a rich set of resources and tools.

With the support of multi-dimensional optimization, Alinux 3 has become a complete AI development platform, solving the pain points of AI developers and making the AI development experience easier and more efficient.

The following uses Alibaba Cloud GPU instances as an example to demonstrate Alinux 3’s support for the AI ecosystem:

1. Purchase a GPU instance

2. Select the Alinux 3 image

3. Install the epao repository configuration

dnf install -y anolis-epao-release

4. Install the NVIDIA GPU driver

Before installing the NVIDIA driver, make sure that kernel-devel for the running kernel is installed; the driver installation needs it to succeed.

dnf install -y kernel-devel-$(uname -r)
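To confirm that the headers really match the running kernel before moving on to the driver, a check along the following lines may help. This is only a sketch: the function names `kernel_devel_pkg` and `check_kernel_devel` are illustrative and not part of Alinux 3, and the check assumes an RPM-based system.

```shell
#!/bin/sh
# Name of the kernel-devel package that matches the running kernel.
kernel_devel_pkg() {
    printf 'kernel-devel-%s\n' "$(uname -r)"
}

# Report whether that package is installed (assumes an RPM-based system).
check_kernel_devel() {
    pkg=$(kernel_devel_pkg)
    if ! command -v rpm >/dev/null 2>&1; then
        echo "rpm not found; cannot check $pkg"
        return 2
    fi
    if rpm -q "$pkg" >/dev/null 2>&1; then
        echo "$pkg is installed"
    else
        echo "$pkg is missing"
        return 1
    fi
}

check_kernel_devel || true
```

If the package is reported missing, rerunning the dnf command above is the likely fix.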

Install the NVIDIA driver:

dnf install -y nvidia-driver nvidia-driver-cuda

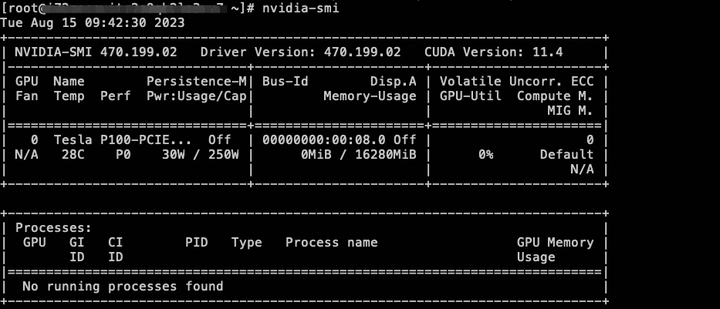

After the installation is complete, you can view the GPU device status through the nvidia-smi command.
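For a more compact status than the default nvidia-smi table, the query options can be used. The sketch below assumes the driver packages above put `nvidia-smi` on the PATH; the wrapper name `gpu_summary` is illustrative only.

```shell
#!/bin/sh
# Print one CSV line per GPU, or explain why that is not possible.
gpu_summary() {
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        echo "nvidia-smi not found; is the driver installed?"
        return 1
    fi
    # Fields: model name, driver version, total memory.
    nvidia-smi --query-gpu=name,driver_version,memory.total --format=csv,noheader
}

gpu_summary || true
```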

5. Install the CUDA libraries

dnf install -y cuda

6. Install the AI frameworks TensorFlow and PyTorch

The repository currently provides the CPU versions of TensorFlow and PyTorch; GPU versions of the AI frameworks will be supported in the future.

dnf install tensorflow -y

dnf install pytorch -y

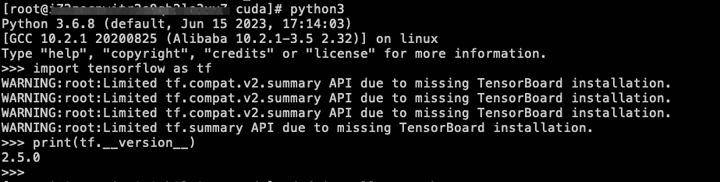

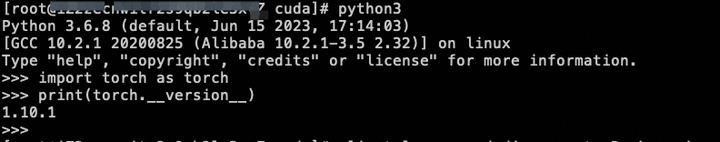

After the installation is complete, you can check whether it succeeded with a simple command.
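One way to run such a check is to import each framework and print its version. This is a sketch rather than the article's own command: it assumes the system interpreter is available as `python3`, and the helper name `check_module` is illustrative.

```shell
#!/bin/sh
# Print a Python module's version, or report that it cannot be imported.
check_module() {
    python3 -c "import importlib, sys
m = importlib.import_module(sys.argv[1])
print(sys.argv[1], getattr(m, '__version__', 'unknown'))" "$1" 2>/dev/null \
        || { echo "$1 not importable"; return 1; }
}

# The pytorch package is imported under the module name torch.
check_module tensorflow || true
check_module torch || true
```

A version line for each framework indicates that the package and its dependencies were installed into the system Python environment.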

7. Deploy a model

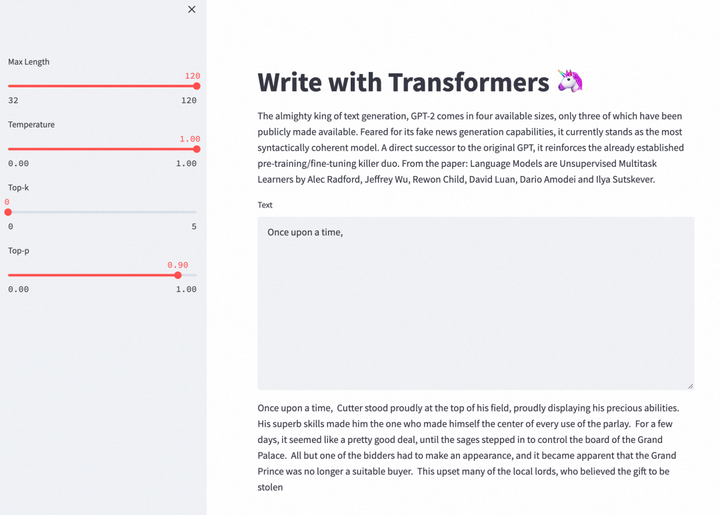

With Alinux 3's ecosystem support for AI, you can deploy the GPT-2 Large model and let it continue writing this article.

Install Git, Git LFS, and wget, which are used later to download the model.

dnf install -y git git-lfs wget

Upgrade pip before setting up the Python environment.

python -m pip install --upgrade pip

Enable Git LFS support.

git lfs install

Download the write-with-transformer project source code and the pre-trained model. write-with-transformer is a web writing app that uses the GPT-2 Large model to continue text you have written.

git clone https://huggingface.co/spaces/merve/write-with-transformer

GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/gpt2-large

wget https://huggingface.co/gpt2-large/resolve/main/pytorch_model.bin -O gpt2-large/pytorch_model.bin
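Because the repository is cloned with GIT_LFS_SKIP_SMUDGE=1, large files arrive as small Git LFS pointer stubs until the real file is fetched separately. The sketch below checks whether the weights file is still such a stub; it relies on LFS pointer files starting with a `version https://git-lfs.github.com/spec/v1` line, and the helper name `is_lfs_pointer` is illustrative.

```shell
#!/bin/sh
# Succeeds if the file is a Git LFS pointer stub rather than real content.
is_lfs_pointer() {
    [ -f "$1" ] && head -c 200 "$1" \
        | grep -q '^version https://git-lfs.github.com/spec/v1'
}

# Path used by the wget command above, relative to the current directory.
weights=gpt2-large/pytorch_model.bin
if [ ! -f "$weights" ]; then
    echo "$weights not found"
elif is_lfs_pointer "$weights"; then
    echo "$weights is still an LFS pointer; download the real file"
else
    echo "$weights looks like real model data"
fi
```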

Install the dependencies required by write-with-transformer.

cd ~/write-with-transformer

pip install --ignore-installed pyyaml==5.1

pip install -r requirements.txt

After the environment is set up, you can run the web app and try writing with the help of GPT-2. GPT-2 currently supports text generation in English only.

cd ~/write-with-transformer

sed -i 's?"gpt2-large"?"../gpt2-large"?g' app.py

sed -i '34s/10/32/;34s/30/120/' app.py
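To see what these two sed expressions do without touching app.py, they can be tried on sample text first. The two sample lines below are hypothetical stand-ins, not the real contents of app.py, and the second expression is addressed to line 1 of the sample instead of line 34.

```shell
#!/bin/sh
# Hypothetical stand-ins for lines in app.py; the real file may differ.
model_line='generator = load_model("gpt2-large")'
slider_line='length = st.slider("Length", 10, 30)'

# Same expression as above, without -i, reading from stdin.
# The ? characters are just the s-command delimiter.
rewrite_model_path() {
    sed 's?"gpt2-large"?"../gpt2-large"?g'
}

# Same idea as '34s/10/32/;34s/30/120/', addressed to line 1 of the input.
# Each substitution changes only the first match on that line.
rewrite_limits() {
    sed '1s/10/32/;1s/30/120/'
}

printf '%s\n' "$model_line" | rewrite_model_path
printf '%s\n' "$slider_line" | rewrite_limits
```

On these samples the first command rewrites the quoted model name to the relative path `"../gpt2-large"`, and the second turns `10, 30` into `32, 120`. Because the real command targets line 34 by number, it only has the intended effect if that line of app.py still holds those two values.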

streamlit run app.py --server.port 7860

The command output then includes External URL: http://
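Since streamlit keeps running in the foreground, a quick reachability check can be made from a second terminal on the instance. This is a sketch under assumptions: it probes `localhost` on port 7860 (the port comes from the command above, the host is assumed), it requires `curl`, and the helper name `wait_for_app` is illustrative.

```shell
#!/bin/sh
# Poll a URL until it answers or the attempts run out.
wait_for_app() {
    url=$1
    tries=${2:-10}
    while [ "$tries" -gt 0 ]; do
        if curl -fsS --max-time 2 -o /dev/null "$url" 2>/dev/null; then
            echo "app is answering at $url"
            return 0
        fi
        tries=$((tries - 1))
        if [ "$tries" -gt 0 ]; then
            sleep 1
        fi
    done
    echo "no response from $url"
    return 1
}

wait_for_app http://localhost:7860 3 || true
```

Reaching the app from outside the instance additionally depends on the instance's network and security group settings, which this check does not cover.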


Original link: https://click.aliyun.com/m/1000379727/

This article is original content of Alibaba Cloud and may not be reproduced without permission.
