On July 25, Alibaba Cloud became the first to launch a full suite of training and deployment solutions for Llama2. Because Llama2 is open source and free for commercial use, it has drawn wide industry attention to large open-source models. Alibaba Cloud invites developers to build their own customized large models on its platform.

The Llama2 family of large language models was recently released as open source, in 7-billion, 13-billion, and 70-billion-parameter versions. Llama2 is free for research and for commercial use by services with fewer than 700 million monthly active users, giving enterprises and developers a new tool for large-model research. However, retraining and deploying Llama2 still carries a high barrier to entry, especially for the larger, more capable versions.

To make this easier for developers, Alibaba Cloud's machine learning platform PAI is the first in China to deeply adapt the Llama2 series. It has released best-practice solutions for lightweight fine-tuning, full-parameter fine-tuning, inference serving, and other scenarios, helping developers quickly retrain Llama2 and build their own customized large models on top of it.

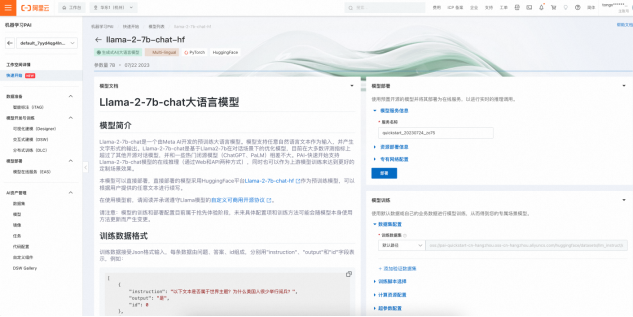

ModelScope, the AI model community led by Alibaba Cloud, immediately added the Llama2 series models. On the Llama2 model page in ModelScope, developers can click "Notebook Rapid Development" to launch Alibaba Cloud's machine learning platform PAI with one click and develop and deploy the model in the cloud. Llama2 models downloaded from other platforms can also be developed with Alibaba Cloud PAI.

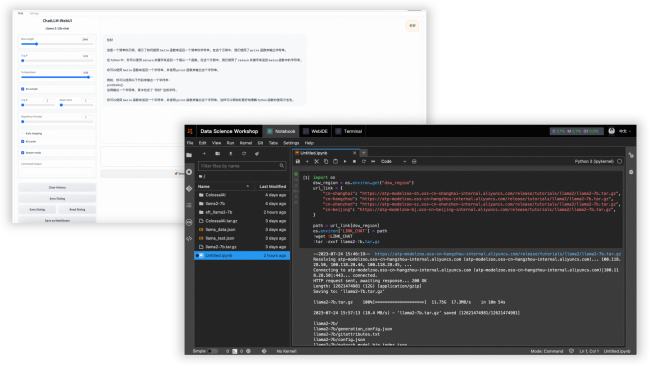

To meet the needs of specific scenarios, developers usually fine-tune a model so that it acquires specialized capabilities and domain knowledge. To this end, PAI supports both lightweight LoRA fine-tuning and deeper full-parameter fine-tuning in the cloud. Once fine-tuned, Llama2 can be deployed through a Web UI and an API, so that users can interact with the model through web pages or embedded applications.
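To illustrate why LoRA counts as "lightweight," here is a minimal Python sketch of the parameter arithmetic. LoRA freezes a pretrained weight matrix W of shape d × k and trains only two low-rank factors, B (d × r) and A (r × k), so each adapted matrix adds r(d + k) trainable parameters instead of d·k. The 4096 × 4096 shape matches the hidden size of Llama2-7B's attention projections; the rank r = 8 and the function names are assumptions chosen for illustration, not PAI defaults.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for LoRA: B is d x r, A is r x k; W stays frozen."""
    return d * r + r * k


# Assumed example: one 4096 x 4096 attention projection (Llama2-7B hidden size)
# with a hypothetical LoRA rank of 8.
d, k, r = 4096, 4096, 8
full = full_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# prints "full: 16,777,216  lora: 65,536  ratio: 0.39%"
```

Under these assumptions each adapted matrix trains well under 1% of its original parameters, which is what keeps gradient and optimizer memory small compared with full-parameter fine-tuning.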

On Alibaba Cloud, the distributed computing capabilities of the PAI Lingjun intelligent computing service make in-depth development of even the largest Llama2 model straightforward. The preconfigured environment removes operations and maintenance overhead, and developers also gain access to abundant AI computing resources with high elasticity. By contrast, a single local GPU can only handle lightweight LoRA fine-tuning and inference for the 7-billion-parameter Llama2, and struggles to support the larger versions or deeper training.
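The single-GPU limit follows from simple memory arithmetic. The sketch below uses common rule-of-thumb assumptions, not figures from PAI: 2 bytes per parameter for fp16/bf16 weights at inference, and roughly 16 bytes per parameter for full fine-tuning with mixed-precision Adam (fp16 weights and gradients, plus fp32 master weights and two fp32 optimizer moments). Activations, KV cache, and framework overhead are ignored, so real requirements are higher.

```python
# Rule-of-thumb bytes per parameter (assumptions, not measured values).
BYTES_INFERENCE_FP16 = 2  # fp16/bf16 weights only
BYTES_FULL_FINETUNE_ADAM = 2 + 2 + 4 + 4 + 4  # weights, grads, fp32 master, Adam m, Adam v

LLAMA2_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def gb(n_bytes: float) -> float:
    """Convert bytes to decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_bytes / 1e9


def estimate(params: float) -> tuple[float, float]:
    """Return (inference GB, full fine-tuning GB), excluding activations."""
    return gb(params * BYTES_INFERENCE_FP16), gb(params * BYTES_FULL_FINETUNE_ADAM)


for name, n in LLAMA2_SIZES.items():
    infer, full = estimate(n)
    print(f"Llama2-{name}: ~{infer:.0f} GB to serve, ~{full:.0f} GB to fully fine-tune")
```

With an assumed 24 GB consumer GPU, only the 7B weights (about 14 GB) fit for inference. LoRA keeps those frozen weights at about 2 bytes per parameter and adds optimizer state only for the small adapters, which is why it remains feasible on one card while full fine-tuning (about 112 GB even for 7B) and the 13B and 70B versions call for multi-GPU clusters.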

As one of the world's top three cloud vendors and the largest in Asia, Alibaba Cloud is a key driver of and service provider for China's AI wave. It has built a complete, integrated IaaS + PaaS + MaaS AI service stack. At the infrastructure layer, Alibaba Cloud has China's largest reserve of intelligent computing power: the Lingjun intelligent computing cluster scales to as many as 100,000 GPUs and can support simultaneous online training of multiple models with trillions of parameters. At the AI platform layer, Alibaba Cloud's machine learning platform PAI provides engineering capabilities for the entire AI development process; the PAI Lingjun intelligent computing service supports the training and application of very large models such as Tongyi Qianwen, improving large-model training performance by nearly 10x and inference efficiency by 37%. At the model service layer, Alibaba Cloud has built the most active AI model community in China and supports enterprises in retraining models based on Tongyi Qianwen or third-party large models.

In early July this year, Alibaba Cloud announced that promoting the prosperity of China's large-model ecosystem would be its primary goal, and that it would provide comprehensive services to large-model startups, including model training, inference, deployment, fine-tuning, evaluation, and productization, along with ample funding and support for commercial exploration.
